This is the official implementation of the paper *Frozen CLIP Models are Efficient Video Learners*.

    @article{lin2022frozen,
      title={Frozen CLIP Models are Efficient Video Learners},
      author={Lin, Ziyi and Geng, Shijie and Zhang, Renrui and Gao, Peng and de Melo, Gerard and Wang, Xiaogang and Dai, Jifeng and Qiao, Yu and Li, Hongsheng},
      journal={arXiv preprint arXiv:2208.03550},
      year={2022}
    }

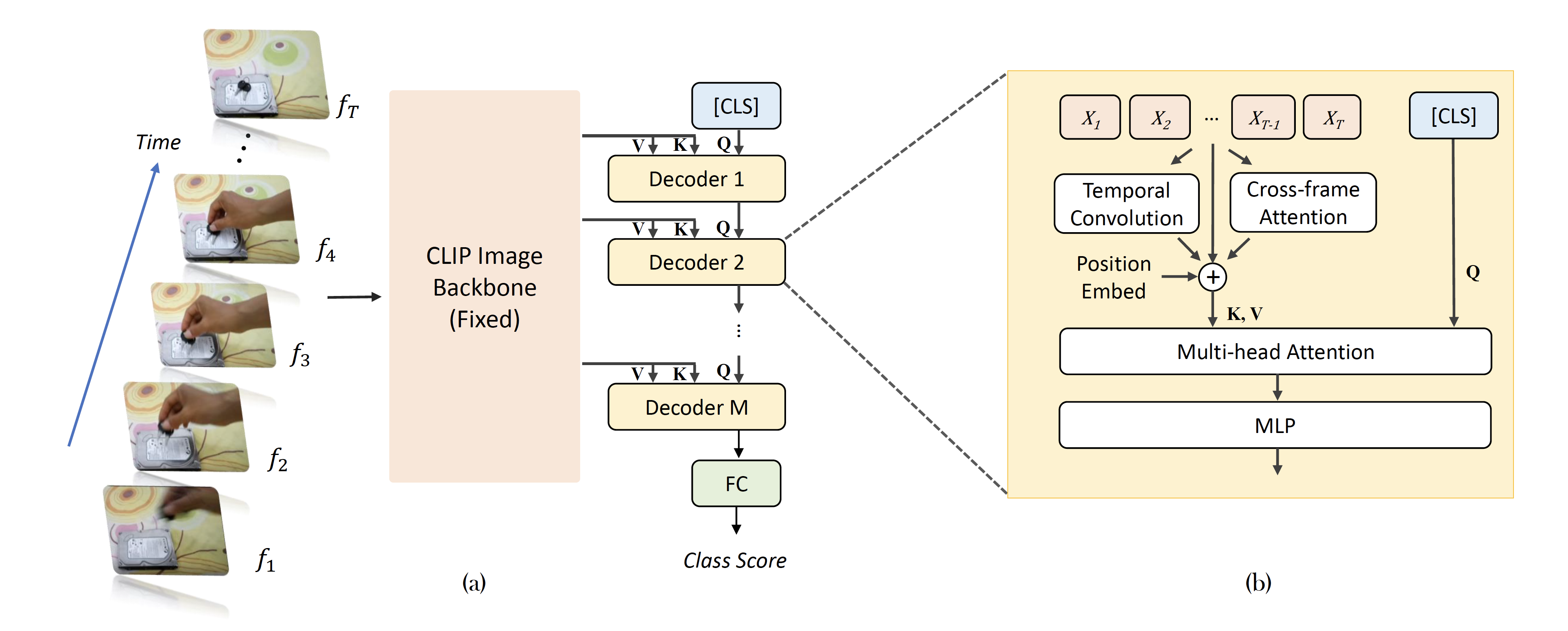

The overall architecture of the EVL framework consists of a trainable Transformer decoder, trainable local temporal modules, and a pretrained, frozen image backbone (CLIP, for instance).

Using a frozen backbone significantly reduces training time: we trained a ViT-B/16 model with 8 frames for 50 epochs in 60 GPU-hours on NVIDIA V100 GPUs.
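
To put the 60 GPU-hour figure in perspective, the arithmetic below derives the per-epoch cost and the ideal wall-clock time for a few GPU counts. The GPU counts are illustrative, and the wall-clock estimate assumes perfect linear scaling, which real multi-GPU runs rarely achieve.

```python
# Cost figures derived only from the numbers quoted above:
# 60 V100 GPU-hours for 50 epochs (ViT-B/16, 8 frames).
TOTAL_GPU_HOURS = 60.0
EPOCHS = 50


def gpu_hours_per_epoch(total_gpu_hours, epochs):
    """Average GPU-hours spent per training epoch."""
    return total_gpu_hours / epochs


def wall_clock_hours(total_gpu_hours, num_gpus):
    """Ideal wall-clock hours if the work splits perfectly across num_gpus.

    Assumes perfect linear scaling (an idealization).
    """
    return total_gpu_hours / num_gpus


per_epoch = gpu_hours_per_epoch(TOTAL_GPU_HOURS, EPOCHS)
print(f"{per_epoch:.1f} GPU-hours per epoch ({per_epoch * 60:.0f} GPU-minutes)")
for gpus in (1, 4, 8):  # illustrative GPU counts
    print(f"{gpus} GPU(s): ~{wall_clock_hours(TOTAL_GPU_HOURS, gpus):.1f} h wall clock")
```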

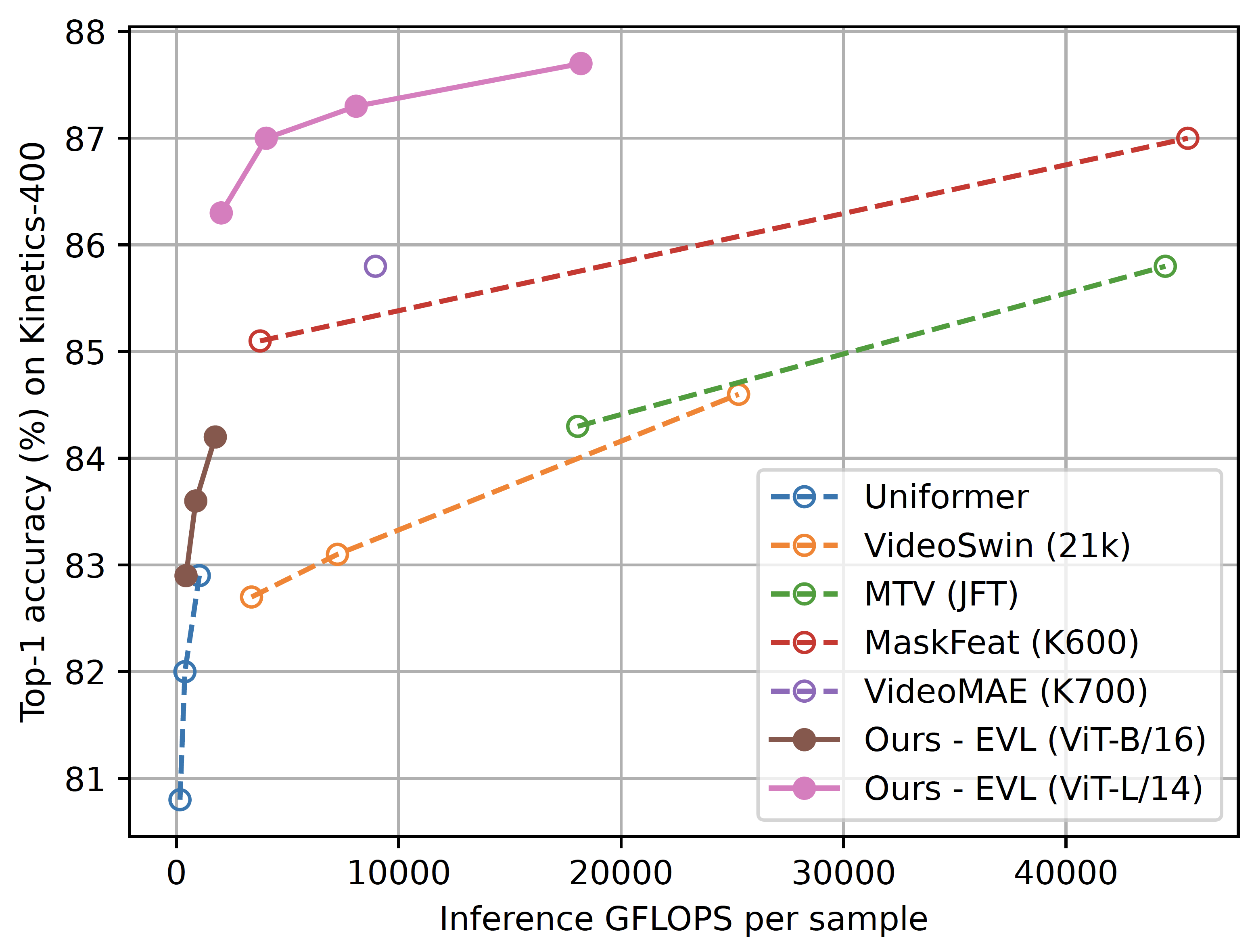

Despite their low training computation and memory consumption, EVL models achieve high performance on Kinetics-400. A comparison with state-of-the-art methods is shown in the figure above.

We tested the released code with the following conda environment:

    conda create -n pt1.9.0cu11.1_official -c pytorch -c conda-forge pytorch=1.9.0=py3.9_cuda11.1_cudnn8.0.5_0 cudatoolkit torchvision av
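
After activating the environment, a small stdlib-only check like the one below can confirm which of the packages are importable and which versions are installed. The mapping from import names to distribution names (`torch`, `torchvision`, `av`) is an assumption about how the conda packages register their metadata; the CUDA line runs only if PyTorch is importable.

```python
"""Report importability and installed versions of the packages used above."""
from importlib import metadata
from importlib.util import find_spec

# import name -> distribution name used for version lookup (assumed)
PACKAGES = {"torch": "torch", "torchvision": "torchvision", "av": "av"}


def check_environment(packages=PACKAGES):
    """Map each import name to its version, "unknown", or None if missing."""
    report = {}
    for import_name, dist_name in packages.items():
        if find_spec(import_name) is None:
            report[import_name] = None  # not importable at all
            continue
        try:
            report[import_name] = metadata.version(dist_name)
        except metadata.PackageNotFoundError:
            report[import_name] = "unknown"  # importable, but no metadata found
    return report


if __name__ == "__main__":
    for name, version in check_environment().items():
        print(f"{name:12s} {version or 'MISSING'}")
    if find_spec("torch") is not None:
        import torch

        print("CUDA build:", torch.version.cuda, "| GPU available:", torch.cuda.is_available())
```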

We expect the `--train_list_path` and `--val_list_path` command-line arguments to point to data list files in the following format:

    <path_1> <label_1>
    <path_2> <label_2>
    ...
    <path_n> <label_n>

where `<path_i>` points to a video file and `<label_i>` is an integer between 0 and `num_classes - 1`.

The number of classes must also be passed via the `--num_classes` command-line argument.
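
Because the list format is plain text, it can be generated with a short script. The sketch below assumes a hypothetical class-per-folder layout (`<root>/<class_name>/<video file>`), a hypothetical set of video extensions, and a hypothetical helper name `build_data_list`; the class-to-index mapping you pass must match the one used by the checkpoint. Since entries are whitespace-separated, this sketch conservatively rejects paths containing whitespace.

```python
from pathlib import Path

# Assumption: which file extensions count as videos.
VIDEO_EXTS = {".mp4", ".avi", ".mkv", ".webm"}


def build_data_list(root, class_to_idx, out_file, num_classes, relative=True):
    """Write one "<path> <label>" line per video under <root>/<class_name>/.

    Returns the number of entries written.
    """
    root = Path(root)
    lines = []
    # Iterate classes in label order so the output is deterministic.
    for class_name, label in sorted(class_to_idx.items(), key=lambda kv: kv[1]):
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} for {class_name!r} is outside [0, {num_classes - 1}]")
        class_dir = root / class_name
        if not class_dir.is_dir():
            continue  # no videos for this class in this split
        for video in sorted(class_dir.iterdir()):
            if not video.is_file() or video.suffix.lower() not in VIDEO_EXTS:
                continue
            # Relative entries are meant to be combined with a data root.
            path = video.relative_to(root) if relative else video.resolve()
            entry = path.as_posix()
            if any(ch.isspace() for ch in entry):
                raise ValueError(f"whitespace in path would break the list format: {entry}")
            lines.append(f"{entry} {label}")
    Path(out_file).write_text("".join(f"{line}\n" for line in lines))
    return len(lines)
```

For example, `build_data_list("/data/k400/train", class_to_idx, "train.txt", num_classes=400)` would write relative entries suitable for use together with a data root.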

Additionally, `<path_i>` may be a relative path when `--data_root` is specified; in that case it is resolved relative to the path passed as `--data_root`.
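
A list file in this format can be checked before training with a reader like the one below. It is a sketch, not the repository's loader: the function name `load_data_list` is hypothetical, blank lines are tolerated, and paths are joined with `os.path.join`, which leaves absolute entries unchanged (whether the repository treats absolute entries the same way is not stated).

```python
import os


def load_data_list(list_path, num_classes, data_root=None):
    """Parse "<path> <label>" lines into (path, label) tuples."""
    samples = []
    with open(list_path) as f:
        for line_no, raw in enumerate(f, start=1):
            line = raw.strip()
            if not line:
                continue  # tolerate blank lines
            fields = line.split()
            if len(fields) != 2:
                raise ValueError(f"{list_path}:{line_no}: expected '<path> <label>', got {line!r}")
            path, label = fields[0], int(fields[1])
            if not 0 <= label < num_classes:
                raise ValueError(f"{list_path}:{line_no}: label {label} not in [0, {num_classes - 1}]")
            if data_root is not None:
                # An absolute `path` discards data_root here.
                path = os.path.join(data_root, path)
            samples.append((path, label))
    return samples
```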

The class mapping used by the open-source weights is provided in Kinetics-400 class mappings.

CLIP weights need to be downloaded from the official CLIP repo and passed to the `--backbone_path` command-line argument.
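
The official CLIP package appears to verify each downloaded checkpoint against a SHA-256 digest taken from the second-to-last path segment of its download URL. Assuming that convention, a manually downloaded file can be checked the same way before passing it to `--backbone_path`. The helper names below are hypothetical, and the URL is whatever link you downloaded from.

```python
import hashlib
from urllib.parse import urlparse


def sha256_of_file(path, chunk_size=1 << 20):
    """Hex SHA-256 of a file, read in chunks to keep memory use flat."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def expected_sha256_from_url(url):
    # Assumption: the digest is the second-to-last path segment of the URL.
    return urlparse(url).path.rstrip("/").split("/")[-2]


def verify_checkpoint(path, url):
    """True if the local file's digest matches the one embedded in `url`."""
    return sha256_of_file(path) == expected_sha256_from_url(url)
```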

Training and evaluation scripts are provided in the `scripts` folder. They should be ready to run once the environment is set up and `--backbone_path`, `--train_list_path` and `--val_list_path` are replaced with your own paths.

For other command-line arguments, see the help message for usage.

This is a re-implementation for open-source use. We are still re-running some models; their scripts, weights and logs will be released later. In the following table we report the re-run accuracy, which may differ slightly from the original paper (typically by ±0.1%).

| Backbone | Decoder Layers | #Frames × Stride | Top-1 (%) | Top-5 (%) | Script | Model | Log |
|---|---|---|---|---|---|---|---|
| ViT-B/16 | 4 | 8 × 16 | 82.8 | 95.8 | script | Google Drive | Google Drive |
| ViT-B/16 | 4 | 16 × 16 | 83.7 | 96.2 | script | Google Drive | Google Drive |
| ViT-B/16 | 4 | 32 × 8 | 84.3 | 96.6 | script | Google Drive | Google Drive |
| ViT-L/14 | 4 | 8 × 16 | 86.3 | 97.2 | script | Google Drive | Google Drive |
| ViT-L/14 | 4 | 16 × 16 | 86.9 | 97.4 | script | Google Drive | Google Drive |
| ViT-L/14 | 4 | 32 × 8 | 87.7 | 97.6 | script | Google Drive | Google Drive |
| ViT-L/14 (336px) | 4 | 32 × 8 | 87.7 | 97.8 | | | |
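
The "#frames × stride" column reads naturally as sampling that many frames spaced `stride` frames apart, so a clip spans roughly `frames × stride` raw frames. The sketch below illustrates that reading with a simple strided sampler; the random window start, clamping for short videos, and the 30 fps figure used for the time estimate are all assumptions of this sketch, not details confirmed by the repository.

```python
import random


def window_frames(num_frames, stride):
    """Raw frames spanned by a clip, e.g. 8 x 16 -> 128 (assumed convention)."""
    return num_frames * stride


def strided_frame_indices(num_video_frames, num_frames, stride, start=None, rng=None):
    """Pick `num_frames` frame indices spaced `stride` frames apart.

    If `start` is None, the window start is drawn uniformly at random (a
    typical training-time temporal crop). Indices past the end of a short
    video are clamped, i.e. the last frame is repeated.
    """
    if start is None:
        rng = rng or random.Random()
        max_start = max(num_video_frames - window_frames(num_frames, stride), 0)
        start = rng.randint(0, max_start)
    return [min(start + i * stride, num_video_frames - 1) for i in range(num_frames)]


# Temporal coverage of the table's configurations, assuming 30 fps video.
for frames, stride in [(8, 16), (16, 16), (32, 8)]:
    span = window_frames(frames, stride)
    print(f"{frames} x {stride}: {span} raw frames (~{span / 30:.1f} s at 30 fps)")
```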

Because training is fast, video frames are consumed at a very high rate.

For easier installation, the current version uses PyTorch's built-in data loaders.

These are not very efficient and can become a bottleneck when ViT-B is used as the backbone.
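
A rough way to see why decoding can dominate is to compute how many frames the loader must deliver per second. The sketch below does that arithmetic. Its second figure rests on an assumption: compressed video is generally decoded sequentially from a keyframe, so producing one strided clip may require decoding most of its `num_frames × stride` window. The 100 clips/s input is illustrative, not a measurement.

```python
def required_frame_rates(clips_per_second, num_frames, stride):
    """Frames per second the data loader must sustain to keep training fed.

    Returns (sampled, decoded_estimate):
      sampled: frames actually fed to the model;
      decoded_estimate: frames decoded if every frame in each clip's window
        (num_frames * stride) has to be decoded (an assumption; seeking can
        skip some of this work, and keyframe placement can add to it).
    """
    sampled = clips_per_second * num_frames
    decoded_estimate = clips_per_second * num_frames * stride
    return sampled, decoded_estimate


# Illustrative, not measured: 100 clips/s with 8 x 16 sampling.
sampled, decoded = required_frame_rates(100, 8, 16)
print(f"sampled: {sampled} frames/s, decoded (rough estimate): {decoded} frames/s")
```

A lighter backbone such as ViT-B processes more clips per second, which raises both figures proportionally.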

We provide a `--dummy_dataset` option that bypasses actual video decoding, for measuring training speed.

The choice of data loader should not affect model accuracy.
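
The idea behind a dummy dataset can be sketched without any video library: return a preallocated clip instead of decoding, then compare throughput against a real dataset to estimate loader overhead. Everything below is hypothetical (class name, clip shape, uint8 byte buffer, `train_step` callback) and only illustrates the concept; it is not how `--dummy_dataset` is implemented.

```python
import time


class DummyVideoDataset:
    """Stand-in dataset that returns a preallocated clip instead of decoding video.

    Assumed clip layout: frames x height x width x channels, uint8 bytes.
    """

    def __init__(self, length, num_classes, num_frames=8, size=224, channels=3):
        self.length = length
        self.num_classes = num_classes
        # One shared zero-filled buffer: no decoding, no per-sample allocation.
        self.clip = bytes(num_frames * size * size * channels)

    def __len__(self):
        return self.length

    def __getitem__(self, index):
        if not 0 <= index < self.length:
            raise IndexError(index)
        return self.clip, index % self.num_classes


def measure_throughput(dataset, train_step, num_samples):
    """Samples per second when `train_step` is fed from `dataset`."""
    start = time.perf_counter()
    for i in range(num_samples):
        clip, label = dataset[i]
        train_step(clip, label)
    elapsed = time.perf_counter() - start
    return num_samples / elapsed if elapsed > 0 else float("inf")
```

If throughput with the dummy dataset is much higher than with the real one, data loading rather than the model is the bottleneck.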

Our internal data loader is written purely in C++ and causes little training bottleneck on a machine with 2× Xeon Gold 6148 CPUs and 4× V100 GPUs.

The data loader code is modified from PySlowFast. Thanks for their awesome work!