OpenRobotLab

Shanghai AI Laboratory

🤖 Demo

- 🏠 About

- 🔥 News

- 📚 Getting Started

- 📦 Model and Benchmark

- 📝 TODO List

- 🔗 Citation

- 📄 License

- 👏 Acknowledgements

Recent works have been exploring the scaling laws in the field of Embodied AI. Given the prohibitive costs of collecting real-world data, we believe the Simulation-to-Real (Sim2Real) paradigm is a more feasible path for scaling the learning of embodied models.

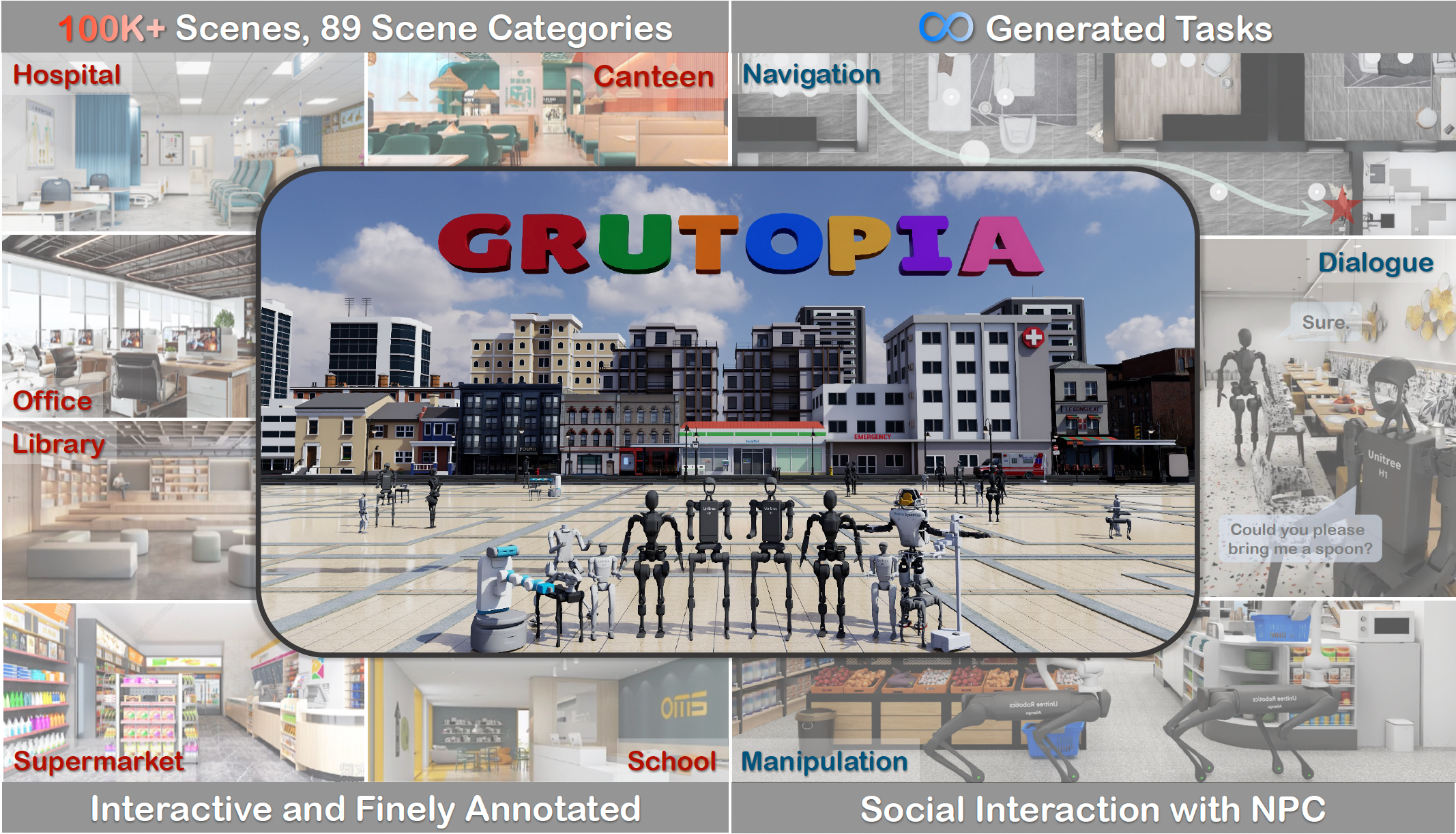

We introduce project GRUtopia (aka. 桃源 in Chinese), the first simulated interactive 3D society designed for various robots. It features several advancements:

- 🏙️ GRScenes, the scene dataset, includes 100k interactive, finely annotated scenes, which can be freely combined into city-scale environments. In contrast to previous works that mainly focus on home environments, GRScenes covers 89 diverse scene categories, bridging the gap of service-oriented environments where general robots would initially be deployed.

- 🧑‍🤝‍🧑 GRResidents, a Large Language Model (LLM) driven Non-Player Character (NPC) system that is responsible for social interaction, task generation, and task assignment, thus simulating social scenarios for embodied AI applications.

- 🤖 GRBench, the benchmark, focuses on legged robots as primary agents and poses moderately challenging tasks involving Object Loco-Navigation, Social Loco-Navigation, and Loco-Manipulation.

We hope that this work can alleviate the scarcity of high-quality data in this field and provide a more comprehensive assessment of embodied AI research.

- [2024-07] We release the paper and demos of GRUtopia.

We test our code under the following environment:

- Ubuntu 20.04, 22.04

- NVIDIA Omniverse Isaac Sim 2023.1.1

  - Ubuntu 20.04/22.04 Operating System

  - NVIDIA GPU (RTX 2070 or higher)

  - NVIDIA GPU Driver (recommended version 525.85)

  - Docker (Optional)

  - NVIDIA Container Toolkit (Optional)

- Conda

  - Python 3.10.13 (3.10.* is ok, will be installed automatically)
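The prerequisites above can be sanity-checked before installing. The following is a minimal sketch, not part of GRUtopia: the helper names (`parse_version`, `python_ok`, `driver_ok`, `query_driver_version`) are hypothetical, and treating the recommended driver version 525.85 as a lower bound is an assumption, since the list only calls it "recommended". It relies on the standard `nvidia-smi` query interface and does not check the GPU model or the Isaac Sim installation.

```python
import shutil
import subprocess
import sys

# Values taken from the prerequisites list; using the recommended
# driver version as a minimum is an assumption of this sketch.
REQUIRED_PYTHON = (3, 10)
RECOMMENDED_DRIVER = "525.85"


def parse_version(text):
    """Turn a dotted version string such as '525.85.12' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split(".") if part.isdigit())


def python_ok(version_info=sys.version_info):
    """Return True when the interpreter is a 3.10.* release."""
    return tuple(version_info[:2]) == REQUIRED_PYTHON


def driver_ok(driver_version, minimum=RECOMMENDED_DRIVER):
    """Return True when the driver version is at least `minimum`."""
    return parse_version(driver_version) >= parse_version(minimum)


def query_driver_version():
    """Ask nvidia-smi for the driver version; return None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    lines = result.stdout.strip().splitlines()
    if result.returncode != 0 or not lines:
        return None
    # With several GPUs, nvidia-smi prints one line per device; use the first.
    return lines[0].strip()


if __name__ == "__main__":
    print("Python 3.10.*:", python_ok())
    driver = query_driver_version()
    if driver is None:
        print("NVIDIA driver: not detected (nvidia-smi missing or failed)")
    else:
        print(f"NVIDIA driver {driver} >= {RECOMMENDED_DRIVER}:", driver_ok(driver))
```

Run the script inside the Conda environment you intend to use, so that the Python check reflects the interpreter GRUtopia will actually run under.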

We provide the installation guide here. You can install locally or use Docker, and verify the installation easily.

Following the installation guide, you can verify the installation by running:

    python ./GRUtopia/demo/h1_locomotion.py  # start simulation

You should see a humanoid robot (Unitree H1) walking along a pre-defined trajectory in Isaac Sim.

Referring to the guide, you can run the following to wander around a demo house:

    # python ./GRUtopia/demo/h1_city.py will run a humanoid in the city block.
    # Its movement is much smaller given the large space of the block.
    # Therefore, we recommend trying h1_house.py first.
    python ./GRUtopia/demo/h1_house.py  # start simulation

You can control a humanoid robot to walk around in a demo house and look around from different views by changing the camera view in Isaac Sim (at the top of the UI).

BTW, you can also simply load the demo city USD file into Isaac Sim to freely sightsee the city block with keyboard and mouse operations supported by Omniverse.

Please refer to the guide to try the WebUI and play with NPCs. Note that there are some additional requirements, such as installing with Docker and providing LLM API keys.

We provide detailed docs and simple tutorials for the basic usage of different modules supported in GRUtopia. You are welcome to try them and post your suggestions!

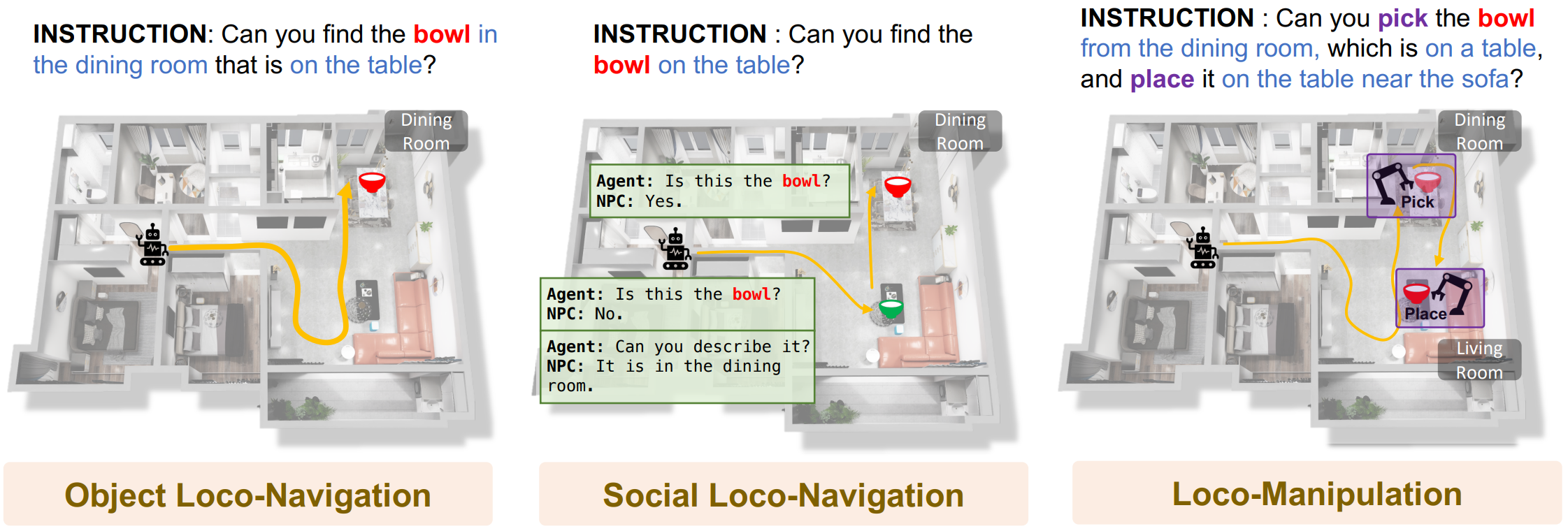

An embodied agent is expected to actively perceive its environment, engage in dialogue to clarify ambiguous human instructions, and interact with its surroundings to complete tasks. Here, we preliminarily establish three benchmarks for evaluating the capabilities of embodied agents from different aspects: Object Loco-Navigation, Social Loco-Navigation, and Loco-Manipulation. The target object in the instruction is subject to some constraints generated by the world knowledge manager. Navigation paths, dialogues, and actions are depicted in the figure.

For now, please see the paper for more details of our models and benchmarks. We are actively re-organizing the code and will release it soon. Please stay tuned.

- Release the paper with demos.

- Release the platform with basic functions and demo scenes.

- Release 100 curated scenes.

- Release the baseline models and benchmark codes.

- Polish APIs and related codes.

- Full release and further updates.

If you find our work helpful, please cite:

    @inproceedings{grutopia,
        title={GRUtopia: Dream General Robots in a City at Scale},
        author={Wang, Hanqing and Chen, Jiahe and Huang, Wensi and Ben, Qingwei and Wang, Tai and Mi, Boyu and Huang, Tao and Zhao, Siheng and Chen, Yilun and Yang, Sizhe and Cao, Peizhou and Yu, Wenye and Ye, Zichao and Li, Jialun and Long, Junfeng and Wang, ZiRui and Wang, Huiling and Zhao, Ying and Tu, Zhongying and Qiao, Yu and Lin, Dahua and Pang, Jiangmiao},
        year={2024},
        booktitle={arXiv},
    }

GRUtopia's simulation platform is MIT licensed. The open-sourced GRScenes are under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

- OmniGibson: We refer to OmniGibson for designs of oracle actions.

- RSL_RL: We use the `rsl_rl` library to train the control policies for legged robots.

- ReferIt3D: We refer to Sr3D's approach to extract spatial relationships.

- Isaac Lab: We use some utilities from Orbit (Isaac Lab) for driving articulated joints in Isaac Sim.