Setting up Example Voting App in Codefresh

PART 1: Configuring a Codefresh Repository and Deploying to Kubernetes Using a Helm Chart

What are we doing?

We are deploying the Docker example voting app as a Helm Release using Codefresh.

The app is built from three microservices in this repository: result, vote and worker.

Now supports custom release names!

We will show you how to:

- Add a repository to Codefresh.

- Create a matrix pipeline to build the three microservices in parallel.

- Deploy the Docker example voting app to your Kubernetes cluster using Codefresh + Helm.

- Use a Helm chart that combines local charts for the three microservices with community charts for Redis and Postgres.

Now onto the How-to!

Creating Codefresh pipelines

Prerequisites:

A Kubernetes cluster. Some popular Kubernetes options:

- Amazon kops Tutorial

- Amazon EKS (Preview)

- Azure Container Service (Moving to AKS)

- Azure Kubernetes Service (Preview) Webinar

- Google Kubernetes Engine Tutorial

- IBM Cloud Container Service

- Stackpoint Cloud

Fork this GitHub Repository

Either fork or copy this repository's content to your GitHub account, or to a Git repository in another version control system.

Attach Kubernetes Cluster to Codefresh

You'll need to add your Kubernetes cluster to Codefresh (see the Codefresh documentation on adding a cluster).

Set Up a Docker Registry Pull Secret in Kubernetes

If you'd like to add your own Docker registry:

Add Docker Registry to Codefresh

If you want to use the Codefresh Integrated Docker Registry:

The example commands below use cfcr (the Codefresh Integrated Registry). Update them if you decide to use your own Docker registry.
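To make the pull-secret commands below less opaque, here is a minimal Python sketch of the data they produce: the `.dockerconfigjson` payload stored in a `kubernetes.io/dockerconfigjson` secret, and the `imagePullSecrets` patch body applied to the service account. The function names and placeholder credentials are hypothetical; the sketch only builds the objects and never contacts a cluster.

```python
import base64
import json


def docker_config_json(server, username, password, email=None):
    """Build the .dockerconfigjson document a docker-registry secret stores."""
    # "auth" is base64("username:password"), as Docker clients expect.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


def pull_secret_manifest(name, namespace, server, username, password, email=None):
    """Secret manifest comparable to what `kubectl create secret docker-registry` creates."""
    config = docker_config_json(server, username, password, email)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        # Secret data values are base64-encoded.
        "data": {".dockerconfigjson": base64.b64encode(config.encode()).decode()},
    }


def image_pull_secrets_patch(secret_name):
    """Patch body that points a service account at the pull secret."""
    return json.dumps({"imagePullSecrets": [{"name": secret_name}]})


if __name__ == "__main__":
    # Placeholder values only; substitute your namespace, user and CFCR token.
    manifest = pull_secret_manifest(
        "cfcr", "voting", "r.cfcr.io", "my-user", "my-token", "me@example.com"
    )
    print(json.dumps(manifest, indent=2))
    print(image_pull_secrets_patch("cfcr"))
```

The patch string printed last is the same JSON passed to `kubectl patch ... -p` in the commands below.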

Configure Kubernetes namespace with Pull Secret

Command to create Pull Secret

kubectl create secret docker-registry -n <kubernetes_namespace> cfcr --docker-server=r.cfcr.io --docker-username=<codefresh_username> --docker-password=<cfcr_token> --docker-email=<codefresh_email>

Command to patch Service Account with Pull Secret

kubectl patch serviceaccount <service_account_name> -p "{\"imagePullSecrets\": [{\"name\": \"cfcr\"}]}" -n <kubernetes_namespace>

Create Codefresh CLI Key

- Create an API key in Codefresh.

- Click the GENERATE button.

- Copy the key to a safe location for later use in the CODEFRESH_CLI_KEY variable.

Update YAML files

If you decided not to use cfcr as your Docker registry, you can skip this step.

Edit the Codefresh YAML files in the ./result, ./vote and ./worker directories, setting the registry field to the friendly name of your Docker registry.
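If you prefer not to edit the three files by hand, a small script can rewrite the `registry:` lines. This is a hypothetical sketch, not part of the repository: it assumes the registry name appears as a simple `registry: <name>` line, and uses a regular expression rather than a YAML parser, so review the diff before committing.

```python
import re
from pathlib import Path

# Matches a whole "registry: <name>" line and captures its indentation and key.
# [ \t] is used instead of \s so the pattern never spans line breaks.
REGISTRY_LINE = re.compile(r"^([ \t]*registry:[ \t]*)\S+[ \t]*$", re.MULTILINE)

# Pipeline files as named in the pipeline setup steps of this guide.
PIPELINE_FILES = [
    "result/codefresh-result-pipeline.yml",
    "vote/codefresh-vote-pipeline.yml",
    "worker/codefresh-worker-pipeline.yml",
]


def set_registry(yaml_text, registry_name):
    """Return yaml_text with every `registry:` value replaced by registry_name."""
    # A function replacement avoids backslash escapes in registry_name.
    return REGISTRY_LINE.sub(lambda m: m.group(1) + registry_name, yaml_text)


def update_repo(root, registry_name):
    """Rewrite the registry field in each pipeline file under root, if present."""
    for rel in PIPELINE_FILES:
        path = Path(root) / rel
        if path.exists():
            path.write_text(set_registry(path.read_text(), registry_name))


if __name__ == "__main__":
    update_repo(".", "my-registry")  # hypothetical friendly registry name
```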

Create Codefresh pipelines

In Codefresh, add a new repository and select example-voting-app.

- Click the Add New Repository button on the Repositories screen.

- Select the example-voting-app repository you forked or cloned to your VCS/organization (the master branch is fine), then click the NEXT button.

- Click SELECT for the CODEFRESH.YML option.

- Set PATH TO CODEFRESH.YML to ./codefresh-matrix-pipeline.yml, then click the NEXT button.

- Review the Codefresh YAML, then click the CREATE button.

- Click the CREATE_PIPELINE button.

You'll arrive at the example-voting-app pipeline.

Now we need to configure some environment variables for the repository.

- Click the General tab on the example-voting-app repository page.

- Add the variables below, encrypting the sensitive ones. Click the ADD VARIABLE link after each variable.

Variables to add:

- CODEFRESH_ACCOUNT: your Codefresh account name (shown in the lower left of the Codefresh UI). Required if you chose to use CFCR.

- CODEFRESH_CLI_KEY: your Codefresh CLI key. Encrypt this value.

- KUBE_CONTEXT: the friendly name of the Kubernetes cluster to use for the release.

- KUBE_NAMESPACE: the Kubernetes namespace to use for the release.

- KUBE_PULL_SECRET: the name of the Kubernetes pull secret.
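Before running the matrix pipeline, it can help to confirm these variables are actually set. The following is a hypothetical preflight check (not part of the repository) that reports missing or empty values, treating CODEFRESH_ACCOUNT as required only when CFCR is used, as described above.

```python
import os

# Variable names from the list above.
ALWAYS_REQUIRED = ["CODEFRESH_CLI_KEY", "KUBE_CONTEXT", "KUBE_NAMESPACE", "KUBE_PULL_SECRET"]
CFCR_ONLY = ["CODEFRESH_ACCOUNT"]


def missing_variables(env, uses_cfcr=True):
    """Return the required variable names that are unset or empty in env."""
    required = ALWAYS_REQUIRED + (CFCR_ONLY if uses_cfcr else [])
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_variables(os.environ)
    if missing:
        raise SystemExit("Missing variables: " + ", ".join(missing))
    print("All required variables are set.")
```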

The matrix pipeline is already configured and named after your Git repository, example-voting-app.

We need to set up the following three pipelines.

From the Pipelines page of the example-voting-app repository:

- Click ADD PIPELINE (do this 3 times).

- Rename example-voting-app1 to example-voting-app-result; set WORKFLOW to YAML; set Use YAML from Repository; set PATH_TO_YAML to ./result/codefresh-result-pipeline.yml; click the SAVE button at the bottom of the page.

- Rename example-voting-app2 to example-voting-app-vote; set WORKFLOW to YAML; set Use YAML from Repository; set PATH_TO_YAML to ./vote/codefresh-vote-pipeline.yml; click the SAVE button at the bottom of the page.

- Rename example-voting-app3 to example-voting-app-worker; set WORKFLOW to YAML; set Use YAML from Repository; set PATH_TO_YAML to ./worker/codefresh-worker-pipeline.yml; click the SAVE button at the bottom of the page.

Now we need to get your Codefresh pipeline IDs to set up parallel builds.

Two options:

- CLI

codefresh get pipelines

- UI

- Open the repository.

- Click on each pipeline.

- Expand General Settings.

- Temporarily toggle on Webhook.

- Capture the ID from the curl command shown.

Record your Pipeline IDs

- Open your example-voting-app Codefresh pipeline.

- Add the environment variable PARALLEL_PIPELINES_IDS, set to the space-delimited Codefresh pipeline IDs.

- Click the ADD VARIABLE link below the variable listing.

- Click the SAVE button at the bottom of the page.
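PARALLEL_PIPELINES_IDS is just a space-delimited string, so a typo is easy to miss. How the matrix pipeline itself consumes the value is not shown in this guide; the following is a hypothetical Python check you could run locally to catch duplicates or the wrong number of IDs before saving.

```python
def parse_pipeline_ids(value, expected=3):
    """Split a space-delimited PARALLEL_PIPELINES_IDS value into a list of IDs."""
    ids = value.split()  # any run of whitespace separates IDs
    if len(set(ids)) != len(ids):
        raise ValueError("duplicate pipeline ID in PARALLEL_PIPELINES_IDS")
    if expected is not None and len(ids) != expected:
        raise ValueError(f"expected {expected} pipeline IDs, got {len(ids)}")
    return ids


if __name__ == "__main__":
    # Placeholder IDs; use the ones recorded for the result, vote and worker pipelines.
    print(parse_pipeline_ids("id-result id-vote id-worker"))
```

The default of three matches the result, vote and worker pipelines created above; pass `expected=None` to skip the count check.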

Now you can run your example-voting-app pipeline to produce a Helm release.

When the build is finished, you will see a new Helm release for example-voting-app.

Hopefully this has given you an overall picture of setting up Codefresh to run a Kubernetes deployment using Helm.

Now you can play with the release or do something similar with your own application.

Notes

We'll be adding a blog post and additional follow-ups to this example, e.g. unit tests, integration tests, security tests and functional testing.

If you'd like to schedule a demo of Codefresh and get help adding your own CI/CD steps to your Codefresh pipelines, click here.

PART 2: Adding Selenium Deployment Verification Tests (DVTs)

What are we doing?

We're iterating on PART 1 by:

- Getting the endpoint IPs for the vote and result services

- Creating a testing image with Python, pytest and Selenium

- Adding a composition build step that uses the public Selenium images and the testing Docker image

- Uploading the pytest HTML reports to S3

- Annotating the testing Docker image with the Selenium report URLs

When using continuous delivery, we need to make sure the deployed application is accessible and usable.

In tests/selenium/test_app.py I've created a few simple tests.

We check the vote service's webpage for the page title "Cats vs Dogs!" and the two expected buttons, Cats and Dogs, and finally call click() on the Cats button.

Next we check the result service's webpage for the title "Cats vs Dogs -- Result" and the result element, and confirm that the element's text is not "no votes". That value would indicate either that our click() was never registered or that the result service cannot connect to the Postgres database.

Using these simple tests, we can confirm the deployment was successful.
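The pass/fail logic of these checks can be separated from the browser driving. The sketch below is not the contents of tests/selenium/test_app.py; it is a hypothetical restatement of the described checks as pure functions over values that a Selenium test would read (for example `driver.title` and an element's `.text`), which makes the conditions easy to see and to test without a browser. The case-insensitive "no votes" comparison is an assumption.

```python
# Expected values taken from the checks described above.
VOTE_TITLE = "Cats vs Dogs!"
RESULT_TITLE = "Cats vs Dogs -- Result"
NO_VOTES_TEXT = "no votes"


def check_vote_page(title, button_labels):
    """Return a list of problems found on the vote page (empty means pass)."""
    problems = []
    if title != VOTE_TITLE:
        problems.append(f"unexpected vote title: {title!r}")
    for label in ("Cats", "Dogs"):
        if label not in button_labels:
            problems.append(f"missing {label} button")
    return problems


def check_result_page(title, result_text):
    """Return a list of problems found on the result page (empty means pass)."""
    problems = []
    if title != RESULT_TITLE:
        problems.append(f"unexpected result title: {title!r}")
    if result_text is None:
        problems.append("result element not found")
    elif result_text.strip().lower() == NO_VOTES_TEXT:  # case-insensitive (assumption)
        # Either the click() was not registered or result cannot reach Postgres.
        problems.append("no votes recorded")
    return problems
```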

I've made some updates to support multiple simultaneous releases.

HELM_RELEASE_NAME (string) was added as a reusable variable for getting the services' IPs. Its value is prepended to the service names, e.g. a release named my-deployment gives my-deployment-vote.

I will use this in the future to create releases based on pull requests.
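To illustrate how the release prefix is used, here is a minimal sketch that derives the prefixed service name and builds a `kubectl` command to read that service's external IP. It assumes the services are of type LoadBalancer and report an `ip` in their status; the function names are hypothetical, and this is not necessarily how the matrix pipeline performs the lookup.

```python
import subprocess


def service_name(release_name, service):
    """Prefix a service with the Helm release name, e.g. my-deployment-vote."""
    return f"{release_name}-{service}"


def ip_lookup_command(release_name, service, namespace):
    """Build a kubectl command that prints a LoadBalancer service's external IP."""
    return [
        "kubectl", "get", "service", service_name(release_name, service),
        "-n", namespace,
        "-o", "jsonpath={.status.loadBalancer.ingress[0].ip}",
    ]


if __name__ == "__main__":
    # Requires kubectl configured for the cluster; values are placeholders.
    cmd = ip_lookup_command("my-deployment", "vote", "default")
    print(subprocess.run(cmd, capture_output=True, text=True, check=True).stdout)
```

On providers whose load balancers publish a hostname rather than an IP (AWS ELB, for example), the JSONPath would need `hostname` instead of `ip`.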

I have updated/added the following files:

- ./codefresh-matrix-pipeline.yml: updated with steps to support Selenium DVTs.

- ./Dockerfile: added to build the Docker image for testing (Python, pytest, Selenium).

- ./tests: added, containing the Python Selenium tests file.

New variables to add to the Codefresh pipeline's environment variables:

- BROWSERS: space-delimited list of browsers to test on. This setup supports Chrome and Firefox, e.g. chrome firefox

- SERVICES: space-delimited list of services, used to look up each service's IP, e.g. vote result
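A minimal sketch of how these two space-delimited variables could be parsed and validated, producing one hypothetical test run per browser that checks every listed service. The names `plan_runs` and `SUPPORTED_BROWSERS` are illustrative; only Chrome and Firefox are accepted, matching what this setup supports.

```python
SUPPORTED_BROWSERS = ("chrome", "firefox")


def parse_space_list(value):
    """Split a space-delimited variable such as BROWSERS or SERVICES."""
    return value.split()


def browsers_to_test(value):
    """Return the requested browsers, rejecting any this setup does not support."""
    browsers = parse_space_list(value.lower())
    unsupported = [b for b in browsers if b not in SUPPORTED_BROWSERS]
    if unsupported:
        raise ValueError("unsupported browser(s): " + ", ".join(unsupported))
    return browsers


def plan_runs(browsers_value, services_value):
    """One hypothetical run per browser, each checking every listed service."""
    services = parse_space_list(services_value)
    return [{"browser": b, "services": services} for b in browsers_to_test(browsers_value)]


if __name__ == "__main__":
    print(plan_runs("chrome firefox", "vote result"))
```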

Archiving the reports to S3 is completely optional. I've used S3 just to demonstrate how you can archive the Deployment Verification Test (pytest HTML) reports and link them to your testing image.

Required Variables:

New variables to add to the Codefresh repository's (General) environment variables:

- S3_BUCKETNAME: the AWS S3 bucket name to store the reports in. For my example I chose to use the Codefresh repository name, example-voting-app.

New shared configuration to add in Codefresh's Account Settings -> Shared Configurations:

Why did I choose to store the variables in a Shared Secret (Shared Configuration) context?

The AWS CLI credentials are normally required by more than one Codefresh pipeline, and when you've generated them from a service account for use in Codefresh, they can be shared across the account.

I called my shared secret AWS_CLI.

- AWS_DEFAULT_REGION: AWS region of the S3 bucket

- AWS_ACCESS_KEY_ID: AWS access key with write permissions to the S3 bucket

- AWS_SECRET_ACCESS_KEY: AWS secret access key for the access key

Now, back in your Codefresh matrix pipeline, click IMPORT FROM SHARED CONFIGURATION and select your shared secret.

When you add the YAML for the ArchiveSeleniumDVTs build step, a new folder named after the Codefresh build ID (CF_BUILD_ID) is created, the Firefox and Chrome reports are uploaded to it, and finally your Docker testing image is annotated with the HTTP URLs of the reports. By default the command allows public access using --acl public-read. If you want the reports to be accessible only by authorized users, remove that option.
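The exact object names used by the upload step are not spelled out here. As a sketch, assuming a hypothetical layout of `<CF_BUILD_ID>/<browser>.html` and S3's virtual-hosted-style URLs, the report links used for the image annotations could be derived as follows. The key layout and the annotation names (`chrome_report`, `firefox_report`) are illustrative only, and such a URL is readable without credentials only while the objects keep the `public-read` ACL.

```python
from urllib.parse import quote


def report_key(build_id, browser):
    """Hypothetical object key: one folder per build, one HTML report per browser."""
    return f"{build_id}/{browser}.html"


def report_url(bucket, region, key):
    """Virtual-hosted-style S3 URL for an object (slashes in key are kept)."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


def report_annotations(bucket, region, build_id, browsers):
    """Map hypothetical annotation names to report URLs for each browser."""
    return {
        f"{b}_report": report_url(bucket, region, report_key(build_id, b))
        for b in browsers
    }


if __name__ == "__main__":
    # Placeholder values; in the pipeline these would come from S3_BUCKETNAME,
    # AWS_DEFAULT_REGION, CF_BUILD_ID and BROWSERS.
    print(report_annotations("example-voting-app", "us-east-1", "build-123", ["chrome", "firefox"]))
```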

Additional Resources:

- http://pytest-selenium.readthedocs.io/en/latest/index.html

- https://github.com/SeleniumHQ/selenium/tree/master/py/test/selenium/webdriver/common

- https://github.com/SeleniumHQ/docker-selenium

DOCKER'S ORIGINAL CONTENT BELOW. PLEASE NOTE: DOCKER SWARM DOES NOT WORK AT THIS TIME.

Example Voting App

Getting started

Download Docker. If you are on Mac or Windows, Docker Compose will be installed automatically. On Linux, make sure you have the latest version of Compose. If you're using Docker for Windows on Windows 10 Pro or later, you must also switch to Linux containers.

Run in this directory:

docker-compose up

The app will be running at http://localhost:5000, and the results will be at http://localhost:5001.
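Once `docker-compose up` has started the stack, a quick smoke check is to request both pages and look for HTTP 200. This is a minimal sketch using only the Python standard library; the `is_up` name is hypothetical, and the `fetch` parameter exists only so the check can be exercised without a running stack.

```python
from urllib.request import urlopen

# Ports from the docker-compose setup described above.
ENDPOINTS = {"vote": "http://localhost:5000", "result": "http://localhost:5001"}


def is_up(url, fetch=urlopen, timeout=5):
    """True if url answers with HTTP 200; any connection or HTTP error means down."""
    try:
        with fetch(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are both OSError subclasses
        return False


if __name__ == "__main__":
    for name, url in ENDPOINTS.items():
        print(f"{name}: {'up' if is_up(url) else 'down'} ({url})")
```

For the Kubernetes deployment described further down, the same function can be pointed at a cluster node's address on ports 31000 and 31001 instead.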

Alternatively, if you want to run it on a Docker Swarm, first make sure you have a swarm. If you don't, run:

docker swarm init

Once you have your swarm, in this directory run:

docker stack deploy --compose-file docker-stack.yml vote

Run the app in Kubernetes

The folder k8s-specifications contains the YAML specifications of the Voting App's services.

Run the following command to create the deployments and services objects:

$ kubectl create -f k8s-specifications/

deployment "db" created

service "db" created

deployment "redis" created

service "redis" created

deployment "result" created

service "result" created

deployment "vote" created

service "vote" created

deployment "worker" created

The vote interface is then available on port 31000 on each host of the cluster, the result one is available on port 31001.

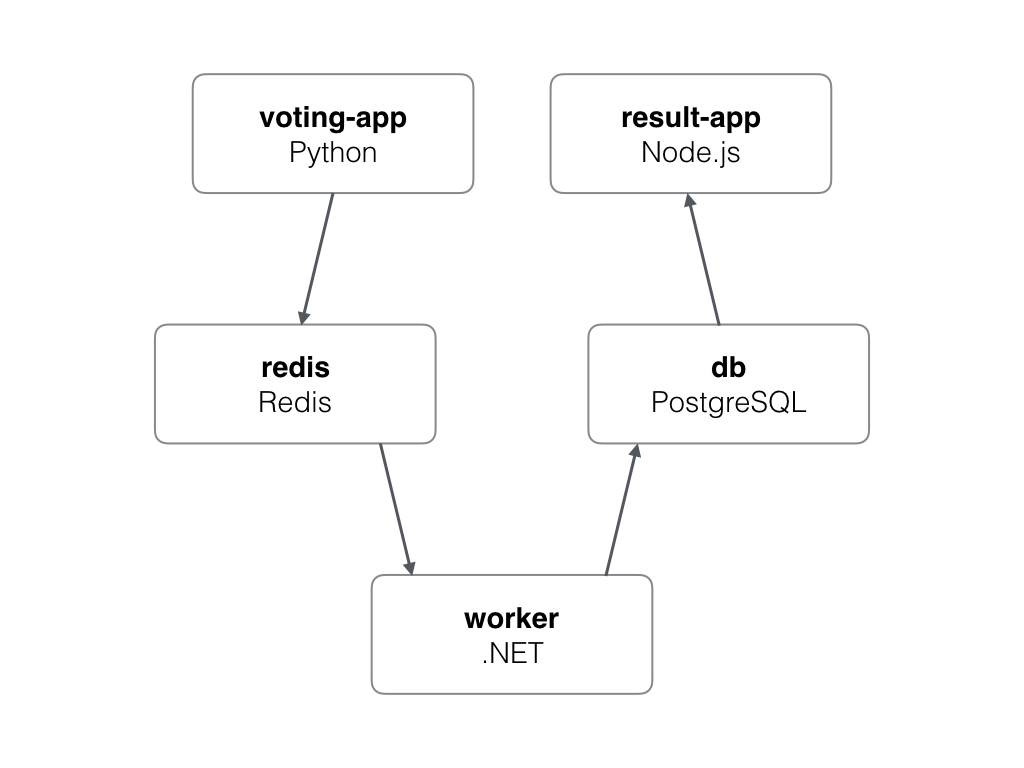

Architecture

- A Python webapp which lets you vote between two options

- A Redis queue which collects new votes

- A .NET worker which consumes votes and stores them in…

- A Postgres database backed by a Docker volume

- A Node.js webapp which shows the results of the voting in real time

Note

The voting application only accepts one vote per client. It does not register votes if a vote has already been submitted from a client.
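The data flow in the architecture above can be illustrated with a toy in-memory model: a `deque` stands in for the Redis queue and a dictionary keyed by voter ID stands in for the Postgres table. This is not how the real services are implemented; the class and method names are hypothetical, and, following the note above, the model ignores a repeat vote from a client that has already voted.

```python
from collections import Counter, deque


class VotingModel:
    """Toy model: vote app -> Redis queue -> worker -> Postgres -> result app."""

    def __init__(self):
        self.queue = deque()  # stands in for the Redis queue
        self.votes = {}       # stands in for the Postgres table, keyed by voter ID

    def cast(self, voter_id, choice):
        """Vote webapp: push the vote onto the queue."""
        self.queue.append((voter_id, choice))

    def run_worker(self):
        """Worker: drain the queue and store at most one vote per voter."""
        while self.queue:
            voter_id, choice = self.queue.popleft()
            # Per the note above, a client that already voted is not registered again.
            self.votes.setdefault(voter_id, choice)

    def results(self):
        """Result webapp: tally the stored votes."""
        return Counter(self.votes.values())


if __name__ == "__main__":
    model = VotingModel()
    model.cast("client-a", "cats")
    model.cast("client-b", "dogs")
    model.cast("client-a", "dogs")  # repeat vote from client-a is ignored
    model.run_worker()
    print(dict(model.results()))
```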