Language and vision tools: This repo contains the tools needed to learn about language and vision from four datasets.

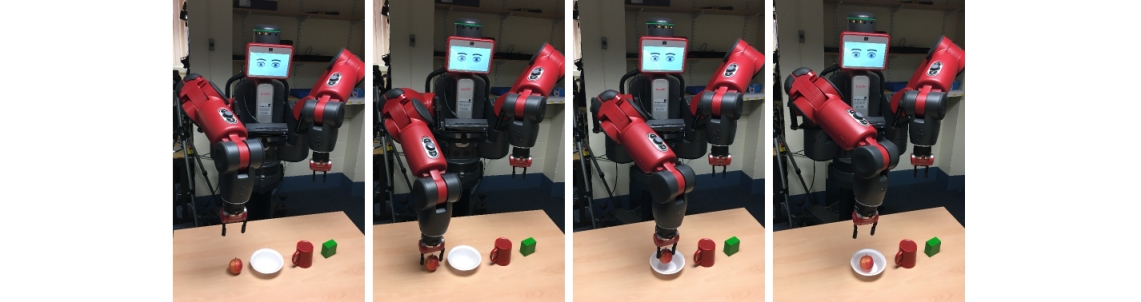

In this dataset, a Baxter robot (from Rethink Robotics) was used to collect 204 videos of the robot manipulating different objects, along with natural language descriptions.

- Dataset available at http://doi.org/10.5518/110

- Paper available at http://www.aaai.org/Conferences/AAAI/2017/PreliminaryPapers/22-Alomari-14913.pdf

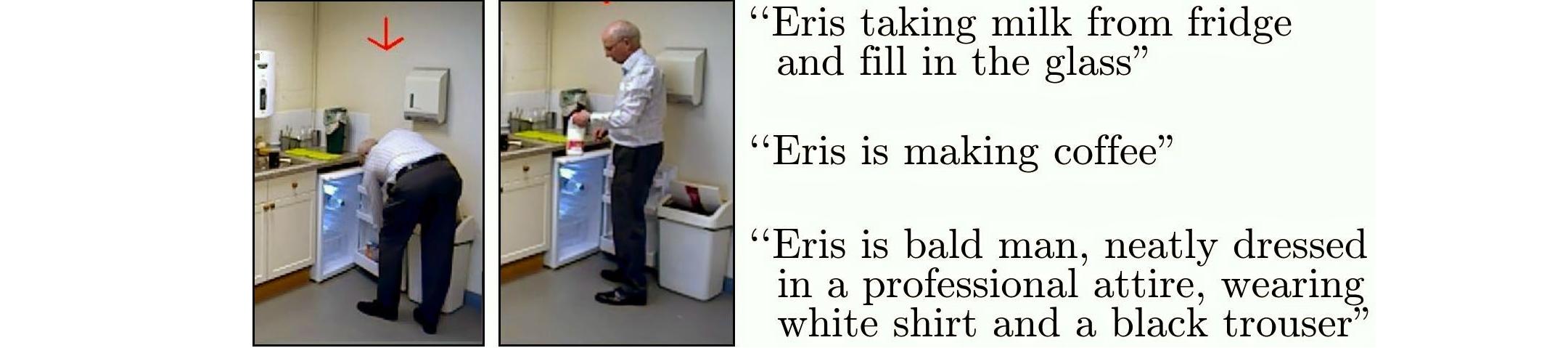

In this dataset, a SCITOS A5 robot (from MetraLabs) was used to collect 493 videos of people performing different activities in an office environment, along with natural language descriptions.

- Dataset available at https://doi.org/10.5518/86

- Paper available at http://eprints.whiterose.ac.uk/103049/

In this dataset, a custom-made robot was used to manipulate different objects (a total of 204 videos were collected), along with natural language descriptions.

- Paper available at http://www.cs.utexas.edu/~jsinapov/papers/jsinapov_ijcai2016.pdf

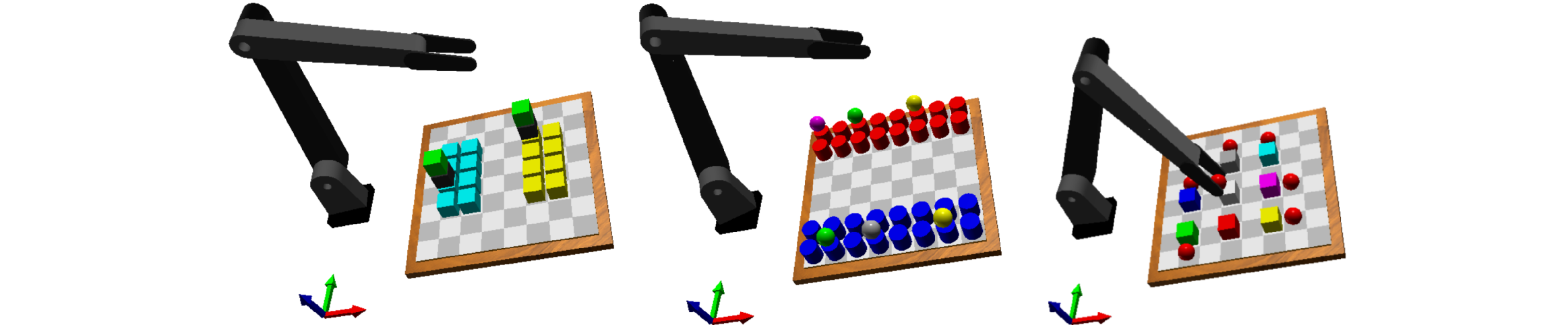

In this dataset, a Python simulation platform was used to generate 1000 scenes mimicking the Dukes linguistic dataset.

- Simulation available at https://github.com/OMARI1988/robot_simulation

- Dataset available at http://doi.org/10.5518/32

- Paper available at http://eprints.whiterose.ac.uk/95572/