Download `mnist.pkl.gz` from link.

Load the dataset using `load_data.py`.
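`load_data.py` is not reproduced here. As a minimal sketch, assuming the widely distributed `mnist.pkl.gz` layout (a gzipped pickle of `(train_set, valid_set, test_set)`, each an `(images, labels)` pair with images stored as flat rows of 784 floats), loading could look like this. `load_mnist_pkl` and `as_images` are hypothetical names.

```python
import gzip
import pickle

import numpy as np


def load_mnist_pkl(path="mnist.pkl.gz"):
    """Return (train_set, valid_set, test_set), each an (images, labels) pair."""
    with gzip.open(path, "rb") as f:
        # The file was pickled under Python 2; latin1 lets Python 3 decode the arrays.
        train_set, valid_set, test_set = pickle.load(f, encoding="latin1")
    return train_set, valid_set, test_set


def as_images(flat_images):
    """Reshape flat 784-pixel rows into (N, 28, 28, 1) arrays for a conv net."""
    return np.asarray(flat_images, dtype=np.float32).reshape(-1, 28, 28, 1)
```

The reshape step matters because a convolutional model such as LeNet-5 expects 2-D images with a channel axis rather than flat vectors.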

See `tile_view_util.py` and `visualization.ipynb` for dataset visualization.
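A tile view arranges many digits into one mosaic so they can be inspected at a glance. The helper below is a hypothetical sketch of that idea, not the contents of `tile_view_util.py`; it assumes images are already shaped `(N, H, W)`.

```python
import numpy as np


def tile_images(images, n_rows, n_cols, pad=1):
    """Arrange (N, H, W) images row-major into one 2-D mosaic with `pad` blank pixels between tiles."""
    n, h, w = images.shape
    canvas = np.zeros((n_rows * (h + pad) - pad, n_cols * (w + pad) - pad), dtype=images.dtype)
    for i in range(min(n, n_rows * n_cols)):
        r, c = divmod(i, n_cols)          # tile position in the grid
        top, left = r * (h + pad), c * (w + pad)
        canvas[top:top + h, left:left + w] = images[i]
    return canvas
```

For flat MNIST rows, call it as `tile_images(x[:100].reshape(-1, 28, 28), 10, 10)` and display the result with any image viewer.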

See also link1 and link2 for dimension-reduced visualizations of the dataset.
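The method behind the linked visualizations is not stated here (it could be PCA, t-SNE, or something else). As one simple, swapped-in example, a 2-D PCA projection can be sketched with NumPy alone; `pca_2d` is a hypothetical name.

```python
import numpy as np


def pca_2d(x):
    """Project (N, D) data onto its top-2 principal components."""
    x = np.asarray(x, dtype=np.float64)
    centered = x - x.mean(axis=0)
    cov = centered.T @ centered / (len(x) - 1)   # D x D covariance (784 x 784 for MNIST)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    top2 = eigvecs[:, ::-1][:, :2]               # directions of largest variance first
    return centered @ top2
```

Working with the 784 × 784 covariance keeps memory modest even for all 50,000 training rows; the two output columns can then be scatter-plotted and coloured by label.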

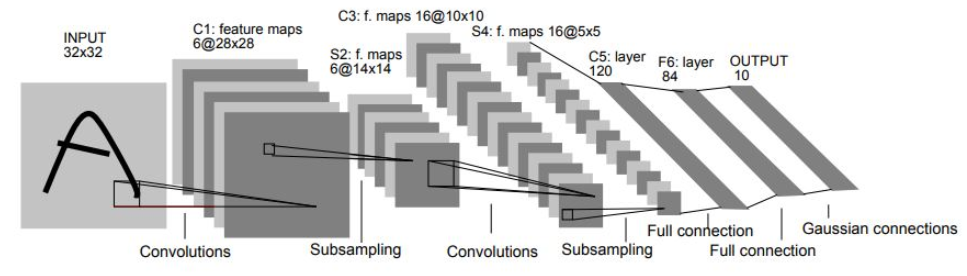

LeNet-5 (LeCun et al., 1998) architecture

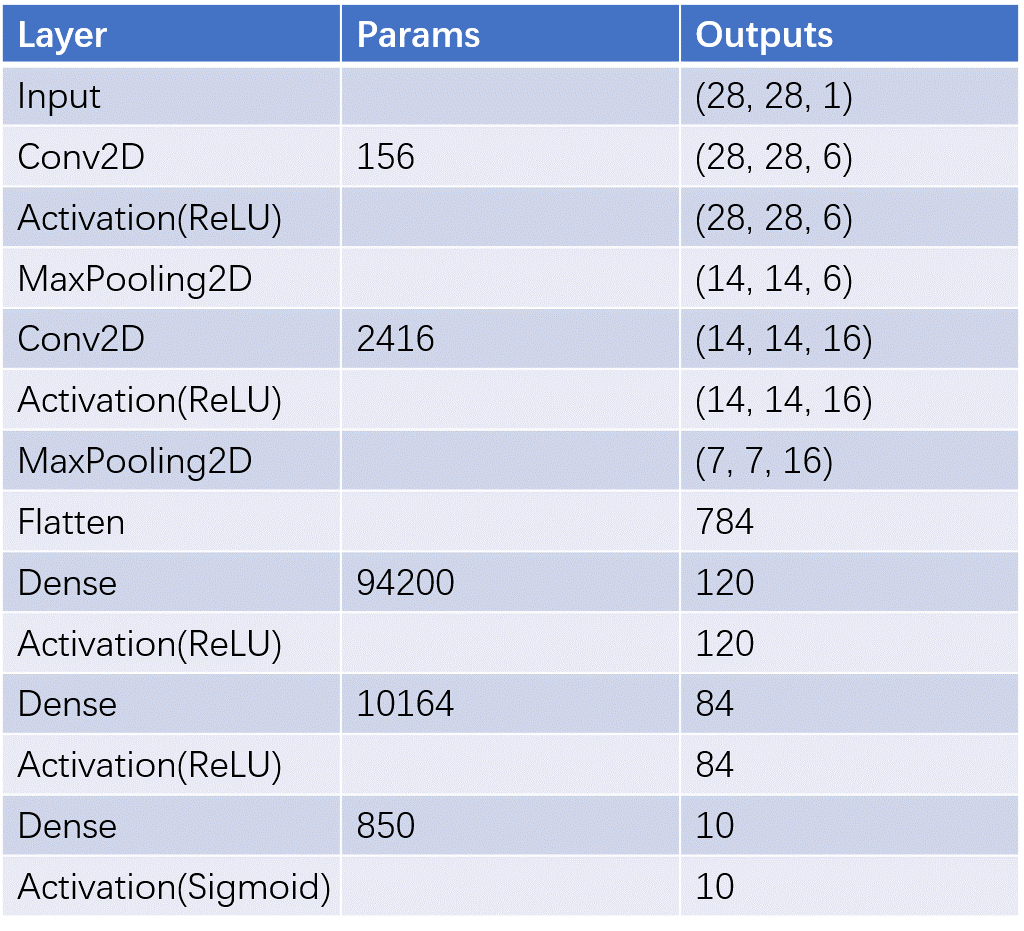
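The layer sizes follow from the usual output-size formula $\lfloor (n + 2p - k)/s \rfloor + 1$. The sketch below tabulates shapes and parameter counts assuming the classic LeNet-5 layout: 28×28 inputs zero-padded by 2 (equivalent to the paper's 32×32 input), C3 connected to all 6 input maps (the 1998 paper used a sparse connection table), and pooling with no trainable parameters. The exact model in the figure above may differ; `lenet5_summary` is a hypothetical helper.

```python
def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a conv/pool layer: (n + 2p - k) // s + 1."""
    return (size + 2 * pad - kernel) // stride + 1


def lenet5_summary(input_size=28, pad=2, n_classes=10):
    """Return (layer, output_shape, n_params) rows for a classic LeNet-5 layout."""
    rows = []
    h = conv_out(input_size, 5, pad=pad)            # C1: 5x5 conv, 6 maps
    rows.append(("C1 conv5x5", (h, h, 6), (5 * 5 * 1 + 1) * 6))
    h = conv_out(h, 2, stride=2)                    # S2: 2x2 pooling
    rows.append(("S2 pool2x2", (h, h, 6), 0))
    h = conv_out(h, 5)                              # C3: 5x5 conv, 16 maps
    rows.append(("C3 conv5x5", (h, h, 16), (5 * 5 * 6 + 1) * 16))
    h = conv_out(h, 2, stride=2)                    # S4: 2x2 pooling
    rows.append(("S4 pool2x2", (h, h, 16), 0))
    flat = h * h * 16                               # flatten before the dense layers
    rows.append(("F5 dense", (120,), (flat + 1) * 120))
    rows.append(("F6 dense", (84,), (120 + 1) * 84))
    rows.append(("output", (n_classes,), (84 + 1) * n_classes))
    return rows


for name, shape, n_params in lenet5_summary():
    print(f"{name:12s} {str(shape):14s} {n_params}")
```

Under these assumptions the flattened feature vector has 5 × 5 × 16 = 400 entries and the network has 61,706 trainable parameters, most of them in the first dense layer.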

- kernel initializer: Xavier normal (Glorot et al., 2010)

- optimizer: SGD

- learning rate: $\alpha = 1$

- batch size: 128

- epoch: 20
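Plain minibatch SGD applies $\theta \leftarrow \theta - \alpha \nabla_\theta L$ once per batch. A minimal NumPy sketch of the batching and update, with hypothetical helper names, is below. Assuming the 50,000-image training split of `mnist.pkl.gz`, a batch size of 128 gives $\lceil 50000/128 \rceil = 391$ updates per epoch, or 7,820 updates over 20 epochs.

```python
import numpy as np


def iterate_minibatches(x, y, batch_size=128, rng=None):
    """Yield shuffled (x_batch, y_batch) pairs that cover one epoch exactly once."""
    rng = np.random.default_rng() if rng is None else rng
    idx = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        sel = idx[start:start + batch_size]   # last batch may be smaller
        yield x[sel], y[sel]


def sgd_step(params, grads, lr=1.0):
    """Vanilla SGD, theta <- theta - lr * grad, updating each array in place."""
    for p, g in zip(params, grads):
        p -= lr * g
```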

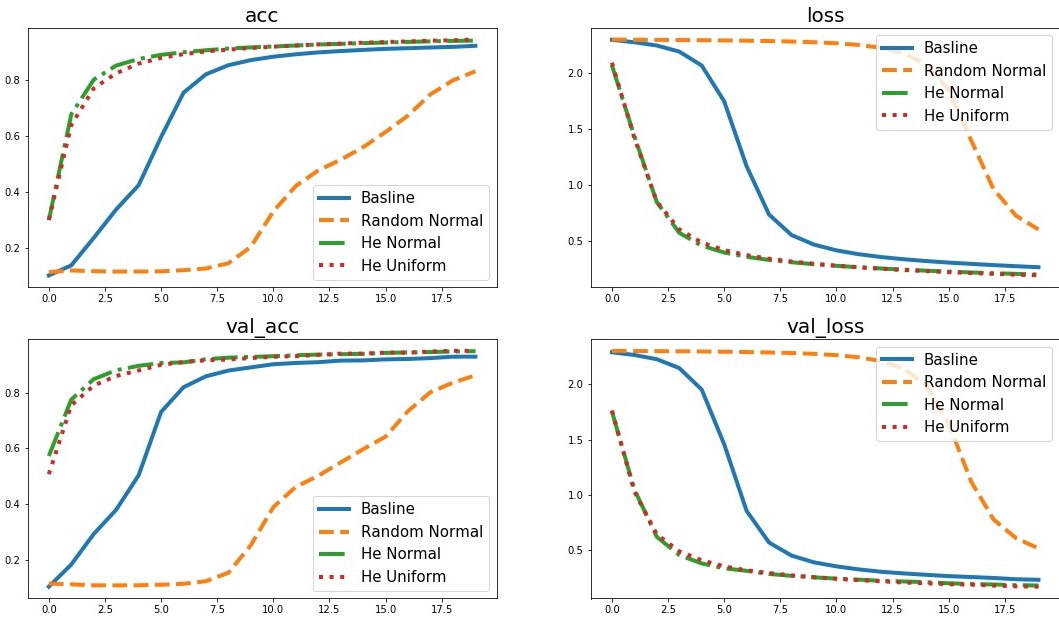

He initialization (He et al., 2015) was derived for ReLU activations, so I tried both `he_uniform` and `he_normal`. I also experimented with `random_normal` as a control.

He Normal and He Uniform reach similar accuracy, though He Normal slightly outperforms He Uniform.
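One reason the two He variants behave alike: both target the same weight variance $2/\text{fan\_in}$ and differ only in the shape of the distribution (uniform on $[-\sqrt{6/\text{fan\_in}}, \sqrt{6/\text{fan\_in}}]$ has variance $6/(3\,\text{fan\_in}) = 2/\text{fan\_in}$). The NumPy sketch below uses plain, untruncated normals for simplicity, whereas Keras' `he_normal` and `glorot_normal` draw from a truncated normal; the `std=0.05` for `random_normal` is an illustrative value.

```python
import numpy as np


def he_normal(fan_in, fan_out, rng):
    """N(0, 2/fan_in): He et al. (2015), suited to ReLU layers."""
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))


def he_uniform(fan_in, fan_out, rng):
    """U(-limit, limit) with limit = sqrt(6/fan_in): same variance as he_normal."""
    limit = np.sqrt(6.0 / fan_in)
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))


def glorot_normal(fan_in, fan_out, rng):
    """N(0, 2/(fan_in + fan_out)): Xavier/Glorot normal."""
    return rng.normal(0.0, np.sqrt(2.0 / (fan_in + fan_out)), size=(fan_in, fan_out))


def random_normal(fan_in, fan_out, rng, std=0.05):
    """Fixed-variance control that ignores layer width."""
    return rng.normal(0.0, std, size=(fan_in, fan_out))
```

For a conv layer, `fan_in` is kernel height × kernel width × input channels (e.g. 5 × 5 × 6 = 150 for a 5×5 conv over 6 maps).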

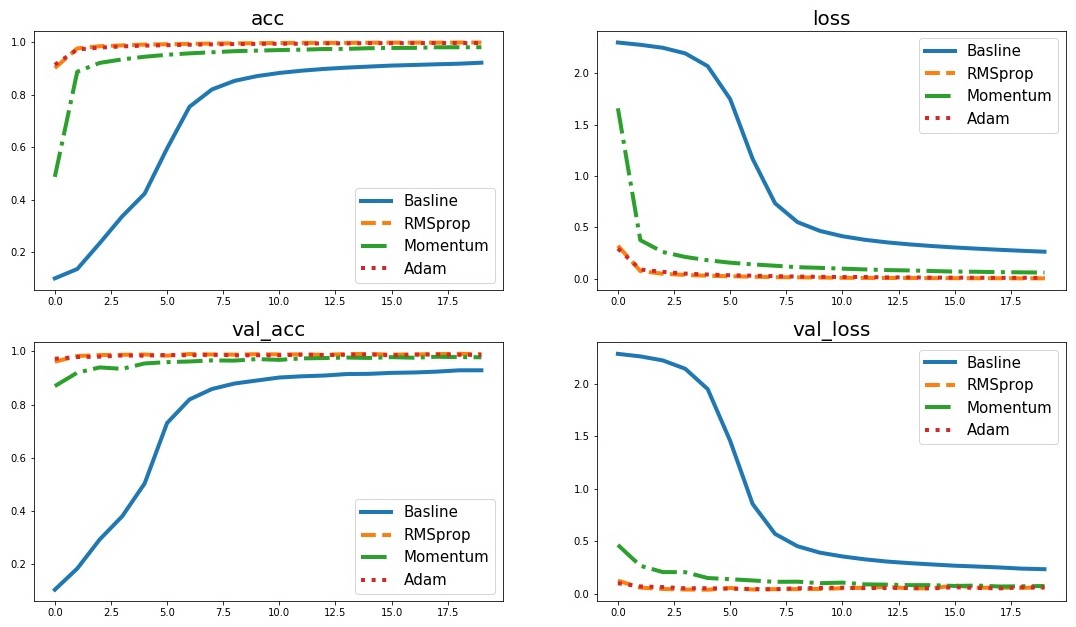

I've tried Momentum (

RMSprop slightly outperforms Adam.
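For reference, the update rules behind momentum, RMSprop, and Adam can be sketched in a few lines of NumPy. The class names are hypothetical and the default hyperparameters are common textbook values chosen for illustration, not the settings used in these experiments.

```python
import numpy as np


class Momentum:
    """Heavy-ball momentum: v <- beta*v - lr*g; theta <- theta + v."""
    def __init__(self, lr=0.01, beta=0.9):
        self.lr, self.beta, self.v = lr, beta, None

    def step(self, theta, grad):
        if self.v is None:
            self.v = np.zeros_like(theta)
        self.v = self.beta * self.v - self.lr * grad
        return theta + self.v


class RMSprop:
    """Divide each step by a running RMS of recent gradients."""
    def __init__(self, lr=0.001, rho=0.9, eps=1e-7):
        self.lr, self.rho, self.eps, self.s = lr, rho, eps, None

    def step(self, theta, grad):
        if self.s is None:
            self.s = np.zeros_like(theta)
        self.s = self.rho * self.s + (1 - self.rho) * grad ** 2
        return theta - self.lr * grad / (np.sqrt(self.s) + self.eps)


class Adam:
    """Momentum on the gradient plus RMSprop-style scaling, with bias correction."""
    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, theta, grad):
        if self.m is None:
            self.m, self.v = np.zeros_like(theta), np.zeros_like(theta)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        m_hat = self.m / (1 - self.beta1 ** self.t)   # undo the bias toward zero
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return theta - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


def minimize_quadratic(opt, start=5.0, steps=1000):
    """Run `opt` on f(x) = x**2 (gradient 2x) and return the final x."""
    theta = np.array([start])
    for _ in range(steps):
        theta = opt.step(theta, 2 * theta)
    return theta
```

RMSprop is essentially Adam without the first-moment (momentum) term and without bias correction, which is one reason the two often perform similarly.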

- optimizer: RMSprop

- initializer: He Normal

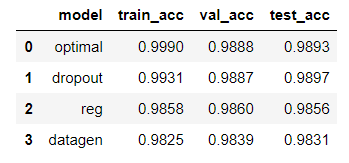

Test-set accuracy: 98.93%

Applied dropout to the last two fully connected layers of the optimal model, with `keep_prob = 0.7`.
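`keep_prob = 0.7` means each unit survives with probability 0.7 during training. A minimal NumPy sketch of inverted dropout is below (`dropout` is a hypothetical helper). Keras' `Dropout` layer is parameterized by the drop rate instead, so the equivalent setting there would be `Dropout(0.3)`.

```python
import numpy as np


def dropout(activations, keep_prob=0.7, training=True, rng=None):
    """Inverted dropout: zero each unit with prob 1 - keep_prob, scale survivors by 1/keep_prob."""
    if not training:
        return activations                      # identity at inference time
    rng = np.random.default_rng() if rng is None else rng
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob       # keeps the expected activation unchanged
```

Scaling by `1 / keep_prob` during training is what lets the layer be a no-op at test time.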

In addition, applied L2 regularization to each of the last two fully connected layers of the optimal model.
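L2 regularization adds $\lambda \sum w^2$ over the regularized weights to the loss, which contributes $2\lambda w$ to each weight's gradient and pulls large weights toward zero. The coefficient is not stated here, so `lam` below is a hypothetical value; Keras' `regularizers.l2(l)` adds the same `l * sum(square(w))` term.

```python
import numpy as np


def l2_penalty(weight_matrices, lam=1e-4):
    """lam * sum of squared weights over the given layers (e.g. the last two FC layers)."""
    return lam * sum(float(np.sum(w ** 2)) for w in weight_matrices)


def l2_grad(w, lam=1e-4):
    """Gradient of lam * sum(w**2) w.r.t. w; added to the data-loss gradient."""
    return 2.0 * lam * w
```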

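Data augmentation (`datagen`):

```python
# TF 2.x import path; ImageDataGenerator is deprecated in newer releases
from tensorflow.keras.preprocessing.image import ImageDataGenerator

datagen = ImageDataGenerator(
    rotation_range=20,       # random rotations up to ±20 degrees
    width_shift_range=0.2,   # horizontal shifts up to 20% of the width
    height_shift_range=0.2,  # vertical shifts up to 20% of the height
)
```

Plot data: `plot_data.ipynb`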
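To make the shift settings concrete: with `width_shift_range=0.2` on a 28-pixel-wide digit, horizontal shifts reach about 5.6 pixels. The sketch below covers only the shift part, with hypothetical helpers `shift_image` and `random_shift`. It simplifies to integer pixel shifts and fills vacated pixels with zeros, whereas `ImageDataGenerator` samples continuous shifts and defaults to `fill_mode="nearest"`; rotation is omitted.

```python
import numpy as np


def shift_image(image, dx, dy):
    """Shift a 2-D image by dx columns (right if positive) and dy rows (down if positive), zero-filling."""
    h, w = image.shape
    out = np.zeros_like(image)
    src_y = slice(max(0, -dy), min(h, h - dy))
    dst_y = slice(max(0, dy), min(h, h + dy))
    src_x = slice(max(0, -dx), min(w, w - dx))
    dst_x = slice(max(0, dx), min(w, w + dx))
    out[dst_y, dst_x] = image[src_y, src_x]
    return out


def random_shift(image, width_shift_range=0.2, height_shift_range=0.2, rng=None):
    """Apply a uniformly random integer shift bounded by the given fractions of width/height."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape
    max_dx = int(round(width_shift_range * w))
    max_dy = int(round(height_shift_range * h))
    dx = int(rng.integers(-max_dx, max_dx + 1))   # upper bound is exclusive
    dy = int(rng.integers(-max_dy, max_dy + 1))
    return shift_image(image, dx, dy)
```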