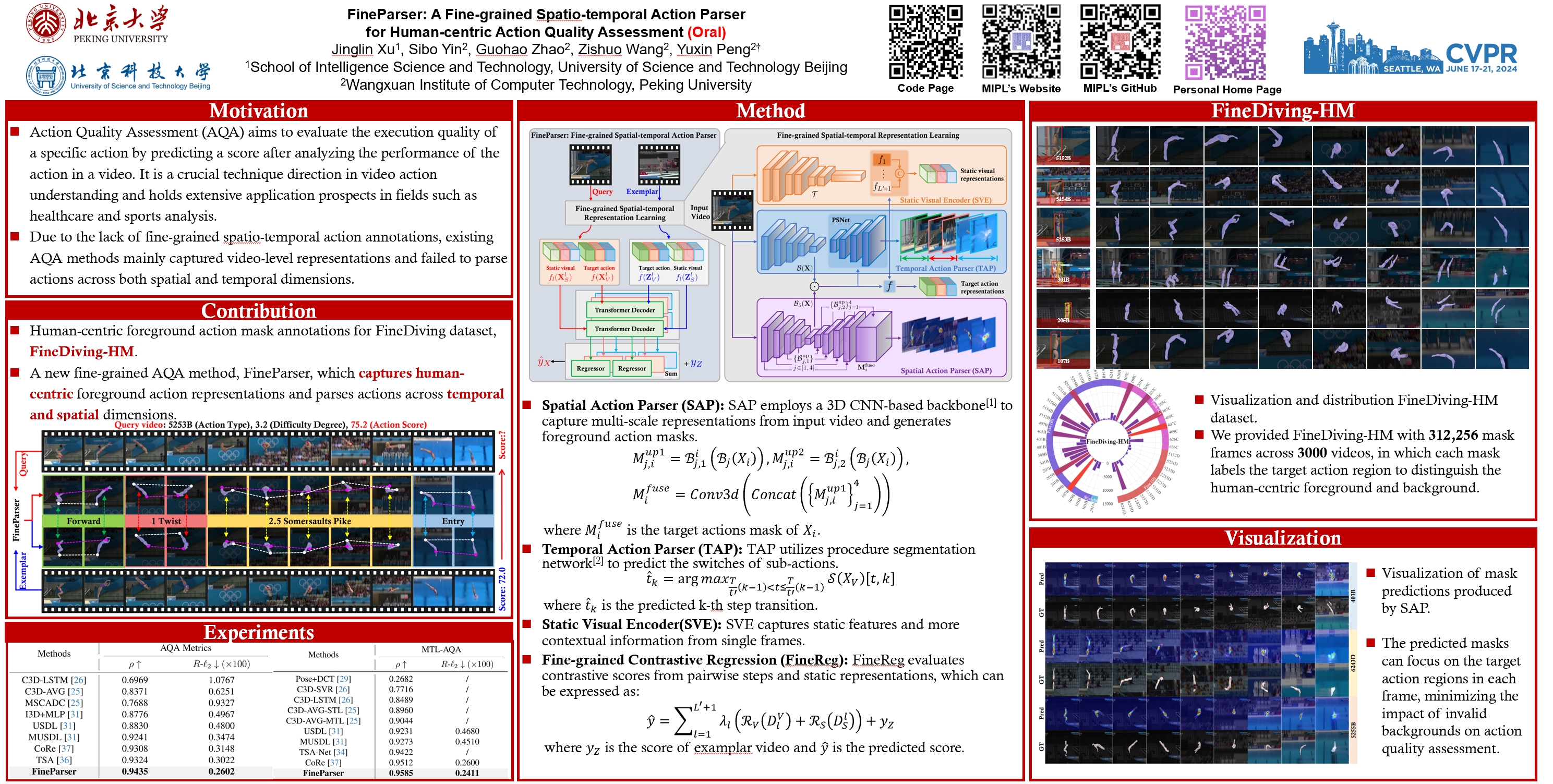

FineParser: A Fine-grained Spatio-temporal Action Parser for Human-centric Action Quality Assessment

Created by Jinglin Xu, Sibo Yin, Guohao Zhao, Zishuo Wang, Yuxin Peng

This repository contains the PyTorch implementation for FineParser (CVPR 2024, Oral).

Make sure the following dependencies are installed (Python):

- pytorch >= 0.4.0

- matplotlib=3.1.0

- einops

- timm

- torch_videovision

pip install git+https://github.com/hassony2/torch_videovision
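A quick way to confirm the environment is complete before training is to probe for each package. The sketch below is not part of this repository: `missing_modules` is a hypothetical helper, and the import names are assumptions (in particular, torch_videovision is assumed to be importable as `torchvideotransforms`).

```python
import importlib.util

# Import names assumed for the dependencies listed above.
# Assumption: torch_videovision installs a package named "torchvideotransforms".
REQUIRED = ["torch", "matplotlib", "einops", "timm", "torchvideotransforms"]


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All dependencies found.")
```

The check only verifies that each package can be located; it does not compare installed versions against the ones listed above.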

To download the FineDiving dataset and annotations, please follow FineDiving.

To download the FineDiving dataset, please sign the Release Agreement and send it to Jinglin Xu (xujinglinlove@gmail.com). By sending the application, you agree and acknowledge that you have read and understood the notice. We will reply with the file and the corresponding guidelines right after we receive your request!

The FineDiving-HM dataset is organized in the same way as FineDiving. Please place the downloaded FineDiving-HM in the data directory.

    $DATASET_ROOT
    ├── FineDiving
    |   ├── FINADivingWorldCup2021_Men3m_final_r1
    |   |   ├── 0
    |   |   |   ├── 00489.jpg
    |   |   |   ...
    |   |   |   └── 00592.jpg
    |   |   ...
    |   |   └── 11
    |   |       ├── 14425.jpg
    |   |       ...
    |   |       └── 14542.jpg
    |   ...
    |   └── FullMenSynchronised10mPlatform_Tokyo2020Replays_2
    |       ├── 0
    |       ...
    |       └── 16
    └── FineDiving_HM
        ├── FINADivingWorldCup2021_Men3m_final_r1
        |   ├── 0
        |   |   ├── 00489.jpg
        |   |   ...
        |   |   └── 00592.jpg
        |   ...
        |   └── 11
        |       ├── 14425.jpg
        |       ...
        |       └── 14542.jpg
        ...
        └── FullMenSynchronised10mPlatform_Tokyo2020Replays_2
            ├── 0
            ...
            └── 16
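To sanity-check a download against the layout above, the frames of a clip can be enumerated directly from disk. This is a minimal sketch, not code from the repository: `list_clip_frames` and `list_clips` are hypothetical helpers, and they assume only the `<root>/<video>/<clip>/<frame>.jpg` nesting shown in the tree.

```python
from pathlib import Path


def _clip_key(path):
    """Sort numeric clip folders numerically (0, 2, 11), others by name."""
    name = path.name
    return (0, int(name), "") if name.isdigit() else (1, 0, name)


def list_clip_frames(dataset_root, video_name, clip_id):
    """Return the sorted .jpg frame paths of one clip.

    Assumes the layout <dataset_root>/<video_name>/<clip_id>/<frame>.jpg.
    Frame names are zero-padded, so a plain sort keeps them in order.
    """
    clip_dir = Path(dataset_root) / video_name / str(clip_id)
    return sorted(clip_dir.glob("*.jpg"))


def list_clips(dataset_root):
    """Return (video_name, clip_id) pairs found under dataset_root."""
    root = Path(dataset_root)
    pairs = []
    for video_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        clip_dirs = [p for p in video_dir.iterdir() if p.is_dir()]
        for clip_dir in sorted(clip_dirs, key=_clip_key):
            pairs.append((video_dir.name, clip_dir.name))
    return pairs
```

For example, `list_clip_frames(root, "FINADivingWorldCup2021_Men3m_final_r1", 0)` should return the frames from 00489.jpg through 00592.jpg when `root` points at the FineDiving folder.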

    $ANNOTATIONS_ROOT
    ├── FineDiving_coarse_annotation.pkl
    ├── FineDiving_fine-grained_annotation.pkl
    ├── Sub_action_Types_Table.pkl
    ├── fine-grained_annotation_aqa.pkl
    ├── train_split.pkl
    └── test_split.pkl
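The annotation files are Python pickles, so they can be inspected with the standard library before training. The sketch below makes no assumption about what each file contains; it only reports the type and size of the loaded object. `load_pickle`, `summarize`, and `summarize_annotations` are hypothetical helpers, not part of the repository. Since unpickling can execute arbitrary code, load only files obtained from the dataset authors.

```python
import pickle
from pathlib import Path

# File names taken from the annotation listing above.
ANNOTATION_FILES = [
    "FineDiving_coarse_annotation.pkl",
    "FineDiving_fine-grained_annotation.pkl",
    "Sub_action_Types_Table.pkl",
    "fine-grained_annotation_aqa.pkl",
    "train_split.pkl",
    "test_split.pkl",
]


def load_pickle(path):
    """Load one annotation file with the standard pickle module."""
    with open(path, "rb") as f:
        return pickle.load(f)


def summarize(obj):
    """Describe a loaded object without assuming its schema."""
    if isinstance(obj, dict):
        return f"dict with {len(obj)} entries"
    if isinstance(obj, (list, tuple)):
        return f"{type(obj).__name__} with {len(obj)} items"
    return type(obj).__name__


def summarize_annotations(annotations_root):
    """Map each annotation file that exists to a short summary string."""
    root = Path(annotations_root)
    return {
        name: summarize(load_pickle(root / name))
        for name in ANNOTATION_FILES
        if (root / name).is_file()
    }
```

Calling `summarize_annotations` on the annotations folder gives a quick view of which files are present and how large each one is.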

The model was trained on 4 NVIDIA RTX 4090 GPUs.

To download the pretrained I3D weights, please follow kinetics_i3d_pytorch, and put model_rgb.pth in the models folder.
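A small pre-flight check can catch a misplaced weights file before a training run starts. This is a sketch under one assumption: that the models folder sits at the repository root, next to launch.py. `check_i3d_weights` is a hypothetical helper and is not provided by the repository.

```python
from pathlib import Path


def check_i3d_weights(repo_root="."):
    """Return the path to models/model_rgb.pth, or raise if it is missing.

    Assumption: the "models" folder is located directly under repo_root.
    """
    weights = Path(repo_root) / "models" / "model_rgb.pth"
    if not weights.is_file():
        raise FileNotFoundError(f"Expected pretrained I3D weights at {weights}")
    return weights
```

Running `check_i3d_weights()` from the repository root either returns the path or fails with a message naming the expected location.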

To train the model, please run:

python launch.py

To test the trained model, please set test: True in config and run:

python launch.py

    @InProceedings{Xu_2024_CVPR_fineparser,
        author = {Xu, Jinglin and Yin, Sibo and Zhao, Guohao and Wang, Zishuo and Peng, Yuxin},
        title = {FineParser: A Fine-grained Spatio-temporal Action Parser for Human-centric Action Quality Assessment},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        year = {2024},
        pages = {14628-14637}
    }