SafePO-Baselines

Update

- We added a parameter that controls whether the discounted total cost is used to update the Lagrange multiplier. Your existing commands need no other changes; just append

--use_discount_cost_update_lag True

to the command you run in the terminal.
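To illustrate what this flag toggles, here is a minimal sketch. It assumes the multiplier is updated by one projected gradient-ascent step on the mean episode cost relative to a cost limit. The function names, `cost_limit`, and the learning rate are hypothetical, and SafePO's own update may differ (for example, in the optimizer it uses for the multiplier).

```python
from typing import List


def episode_cost(costs: List[float], gamma: float, use_discount: bool) -> float:
    """Total cost of one episode, optionally discounted: sum_t gamma^t * c_t."""
    if not use_discount:
        return sum(costs)
    return sum((gamma ** t) * c for t, c in enumerate(costs))


def update_lagrange_multiplier(
    multiplier: float,
    episodes: List[List[float]],
    cost_limit: float,
    lr: float,
    gamma: float = 0.99,
    use_discount: bool = False,
) -> float:
    """Hypothetical update: max(0, multiplier + lr * (mean episode cost - cost_limit))."""
    mean_cost = sum(episode_cost(ep, gamma, use_discount) for ep in episodes) / len(episodes)
    # Projection onto [0, inf): a Lagrange multiplier for an inequality constraint stays non-negative.
    return max(0.0, multiplier + lr * (mean_cost - cost_limit))


episodes = [[1.0, 1.0, 1.0], [0.0, 2.0, 0.0]]
print(update_lagrange_multiplier(0.0, episodes, cost_limit=2.0, lr=0.1))  # 0.05
print(update_lagrange_multiplier(0.0, episodes, cost_limit=2.0, lr=0.1, gamma=0.5, use_discount=True))  # 0.0
```

In this example, the same cost data pushes the multiplier up when raw totals are used and leaves it at zero when the totals are discounted. A discounted total is never larger than the raw total for non-negative costs, so a cost limit tuned for one setting may need re-tuning for the other.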

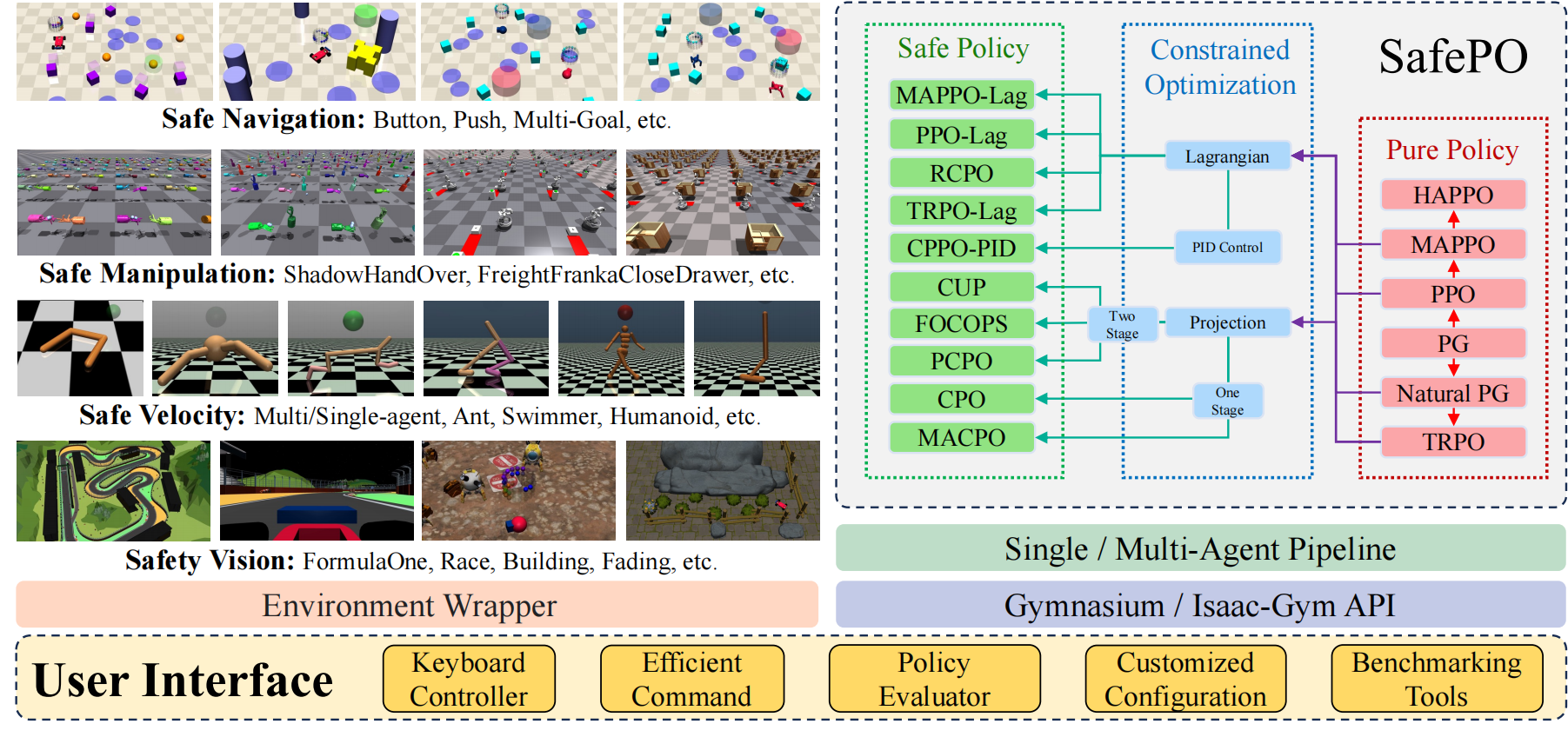

Safe Policy Optimization (SafePO) is a comprehensive algorithm benchmark for Safe Reinforcement Learning (Safe RL). It provides the RL research community with a unified platform for running and evaluating algorithms in various safe reinforcement learning environments. To help the community study this problem, SafePO is developed with the following key features:

- Comprehensive Safe RL benchmark: We offer high-quality implementations of both single-agent safe reinforcement learning algorithms (CPO, PCPO, FOCOPS, P3O, PPO-Lag, TRPO-Lag, PDO, CPPO-PID, RCPO, IPO, and SAC-Lag) and multi-agent safe reinforcement learning algorithms (HAPPO, MAPPO-Lag, IPPO, MACPO, and MAPPO).

- Richer interfaces: In SafePO, you can modify the algorithm parameters to fit your requirements. We provide a customizable YAML file for each algorithm, and you can also override individual parameters with argparse flags on the command line.

- Fairer and more effective: In the past, when different algorithms were compared, the number of environment interactions and the way the buffer was handled could differ between them. To solve this problem, we abstract the most basic Policy Gradient class and derive all other algorithms from it, which ensures a fairer and more reasonable performance comparison. To improve efficiency, we also support multi-core CPU parallelization, which greatly accelerates algorithm development and verification.

- More information: We provide rich data visualization. Reinforcement learning algorithms typically involve a huge number of parameters. To better understand how each parameter changes during training, we visualize them with log files, TensorBoard, and wandb. We believe this will help developers tune each algorithm more efficiently.
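The shared-base-class design described above can be sketched as follows. This is a hypothetical illustration of the inheritance pattern only: the class names `PolicyGradient` and `PPOLag`, the `compute_advantages` hook, and the (A_r - λA_c)/(1 + λ) penalized advantage (one common PPO-Lag variant) are assumptions, not SafePO's actual code, and the sketch omits PPO's clipped objective entirely.

```python
from typing import List, Sequence


class PolicyGradient:
    """Hypothetical base class holding logic shared by every algorithm."""

    def __init__(self, steps_per_epoch: int = 32000) -> None:
        # Subclasses inherit the same interaction budget, keeping comparisons fair.
        self.steps_per_epoch = steps_per_epoch

    def compute_advantages(self, reward_adv: Sequence[float], cost_adv: Sequence[float]) -> List[float]:
        # Plain policy gradient optimizes reward only and ignores costs.
        return list(reward_adv)

    def update(self, reward_adv: Sequence[float], cost_adv: Sequence[float]) -> List[float]:
        # Shared update pipeline; a full implementation would build the policy
        # loss from these advantages. Only the hook above varies per algorithm.
        return self.compute_advantages(reward_adv, cost_adv)


class PPOLag(PolicyGradient):
    """Hypothetical Lagrangian subclass: overrides only the advantage hook."""

    def __init__(self, lagrange_multiplier: float = 0.0, **kwargs) -> None:
        super().__init__(**kwargs)
        self.lagrange_multiplier = lagrange_multiplier

    def compute_advantages(self, reward_adv, cost_adv):
        # Penalized advantage (A_r - lambda * A_c) / (1 + lambda).
        lam = self.lagrange_multiplier
        return [(r - lam * c) / (1.0 + lam) for r, c in zip(reward_adv, cost_adv)]


agent = PPOLag(lagrange_multiplier=1.0, steps_per_epoch=1000)
print(agent.steps_per_epoch)  # 1000
print(agent.update([1.0, 2.0], [0.5, 0.5]))  # [0.25, 0.75]
```

Because every subclass goes through the same `update` path, differences in results come from the algorithm-specific hook rather than from differences in sampling or buffer handling.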

Overview of Algorithms

Here we provide a table of Safe RL algorithms that the benchmark includes.

This work is currently under review. We have already implemented and tested five more algorithms: PDO, RCPO, CPPO-PID, IPO, and SAC-Lag. We will add them to the repository as soon as possible.

| Algorithm | Proceedings & Cites | Official Code Repo | Official Code Last Update | Official GitHub Stars |
|---|---|---|---|---|
| PPO-Lag | | TensorFlow 1 | | |
| TRPO-Lag | | TensorFlow 1 | | |
| FOCOPS | NeurIPS 2020 (Cite: 27) | PyTorch | | |
| CPO | ICML 2017 (Cite: 663) | | | |
| PCPO | ICLR 2020 (Cite: 67) | Theano | | |
| P3O | IJCAI 2022 (Cite: 0) | | | |
| IPO | AAAI 2020 (Cite: 47) | | | |
| PDO | | | | |
| RCPO | ICLR 2019 (Cite: 238) | | | |
| CPPO-PID | NeurIPS 2020 (Cite: 71) | PyTorch | | |
| MACPO | Preprint (Cite: 4) | PyTorch | | |
| MAPPO-Lag | Preprint (Cite: 4) | PyTorch | | |
| HATRPO | ICLR 2022 (Cite: 10) | PyTorch | | |
| HAPPO (purely reward optimisation) | ICLR 2022 (Cite: 10) | PyTorch | | |
| MAPPO (purely reward optimisation) | Preprint (Cite: 98) | PyTorch | | |
| IPPO (purely reward optimisation) | Preprint (Cite: 28) | | | |

Supported Environments

For detailed instructions, please refer to Environments.md.

Pre-requisites

To use SafePO-Baselines, you first need to install the environments. Please refer to MuJoCo, Safety-Gym, and Bullet-Safety-Gym for installation details. Details regarding the installation of IsaacGym can be found here. We currently support the Preview Release 3 version of IsaacGym.

Conda-Environment

conda create -n safe python=3.8

conda activate safe

# PyTorch builds depend on your CUDA version, so we recommend installing PyTorch manually.

pip install torch==1.8.0+cu111 torchvision==0.9.0+cu111 torchaudio==0.8.0 -f https://download.pytorch.org/whl/torch_stable.html

pip install -e .

conda install mpi4py

conda install scipy

For detailed instructions, please refer to Installation.md.

Getting Started

Single-Agent

train.py is the entry file. Running train.py with arguments specifying the algorithm and environment starts training. For example, to run PPO-Lag in Safexp-PointGoal1-v0 with 4 CPU cores and seed 0, you can use the following command:

python train.py --env-id Safexp-PointGoal1-v0 --algo ppo-lag --cores 4 --seed 0
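SafePO describes two ways to set parameters: per-algorithm YAML files and command-line flags. The sketch below shows one way such layering can work with Python's standard argparse. The `YAML_DEFAULTS` dictionary stands in for a parsed YAML file; its keys and values, the `--cores` and `--seed` defaults, and the `str2bool` helper are assumptions, not SafePO's actual train.py.

```python
import argparse
from typing import Any, Dict, List, Optional

# Assumed stand-in for one algorithm's YAML file after parsing; the real
# SafePO YAML keys and values may differ.
YAML_DEFAULTS: Dict[str, Any] = {
    "gamma": 0.99,
    "pi_lr": 3e-4,
    "steps_per_epoch": 32000,
    "use_discount_cost_update_lag": False,
}


def str2bool(value: str) -> bool:
    """Parse 'True'/'False' strings; argparse's type=bool would treat 'False' as True."""
    if value.lower() in ("true", "1", "yes"):
        return True
    if value.lower() in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def parse_config(argv: Optional[List[str]] = None) -> Dict[str, Any]:
    parser = argparse.ArgumentParser(description="Hypothetical train.py-style parser")
    parser.add_argument("--env-id", type=str, required=True)  # stored as env_id
    parser.add_argument("--algo", type=str, required=True)
    parser.add_argument("--cores", type=int, default=1)  # assumed default
    parser.add_argument("--seed", type=int, default=0)  # assumed default
    # Tunable hyperparameters default to None so "not given" is detectable.
    parser.add_argument("--gamma", type=float, default=None)
    parser.add_argument("--pi_lr", type=float, default=None)
    parser.add_argument("--steps_per_epoch", type=int, default=None)
    parser.add_argument("--use_discount_cost_update_lag", type=str2bool, default=None)
    args = parser.parse_args(argv)

    # Start from the YAML defaults, then let any flag given on the command line win.
    config = dict(YAML_DEFAULTS)
    config.update({k: v for k, v in vars(args).items() if v is not None})
    return config


cfg = parse_config(["--env-id", "Safexp-PointGoal1-v0", "--algo", "ppo-lag",
                    "--cores", "4", "--seed", "0",
                    "--use_discount_cost_update_lag", "True"])
print(cfg["env_id"], cfg["cores"], cfg["gamma"], cfg["use_discount_cost_update_lag"])
```

Defaulting the tunable flags to None lets the sketch tell "flag not passed" apart from "flag passed with the default value". The `str2bool` helper is needed because argparse's `type=bool` converts any non-empty string, including "False", to True.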

Here we provide the list of common arguments:

| Argument | Type: Default | Description |
|---|---|---|
| --algo | str: required | name of the algorithm to run |
| --cores | int | number of physical CPU cores to use |
| --seed | int | random seed |
| --check_freq | int: 25 | frequency of checking parameter synchronization |
| --entropy_coef | float: 0.01 | entropy coefficient |
| --gamma | float: 0.99 | discount factor |
| --lam | float: 0.95 | GAE lambda for rewards |
| --lam_c | float: 0.95 | GAE lambda for costs |
| --max_ep_len | int: 1000 | maximum episode length; 1000 unless the environment defines its own default |
| --max_grad_norm | float: 0.5 | maximum gradient norm used for gradient clipping |
| --num_mini_batches | int: 16 | number of mini-batches used for value network training |
| --optimizer | Adam | policy optimizer; SGD or any other class in torch.optim can be used instead |
| --pi_lr | float: 3e-4 | policy learning rate |
| --steps_per_epoch | int: 32000 | number of environment interaction steps per epoch |
| --target_kl | float: 0.01 | target KL divergence (trust-region size) |
| --train_pi_iterations | int: 80 | number of policy update iterations |
| --train_v_iterations | int: 40 | number of update iterations for the value and cost value networks |
| --use_cost_value_function | bool: False | whether to use a cost value function |
| --use_entropy | bool: False | whether to use an entropy bonus |
| --use_reward_penalty | bool: False | whether to use a reward penalty |
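The separate `--lam` and `--lam_c` arguments suggest that Generalized Advantage Estimation (GAE) is computed once for rewards and once for costs. Below is a minimal sketch of standard GAE under that assumption. The function name `gae` and its signature are illustrative, and the sketch assumes a segment with no episode boundary inside it, so it omits done-masking.

```python
from typing import List, Sequence


def gae(rewards: Sequence[float], values: Sequence[float], last_value: float,
        gamma: float = 0.99, lam: float = 0.95) -> List[float]:
    """Generalized Advantage Estimation over one trajectory segment.

    delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
    A_t     = delta_t + gamma * lam * A_{t+1}
    """
    advantages = [0.0] * len(rewards)
    next_value = last_value  # bootstrap value after the final step
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages


print(gae([1.0, 1.0], [0.0, 0.0], 0.0, gamma=1.0, lam=1.0))  # [2.0, 1.0]
print(gae([1.0, 1.0], [0.0, 0.0], 0.0, gamma=1.0, lam=0.0))  # [1.0, 1.0]
```

For costs, the same function would be called with per-step costs, the cost value network's estimates, and `lam=lam_c`. With `lam=1` the estimate approaches the full Monte Carlo return minus the baseline, and with `lam=0` it reduces to the one-step TD error. This is the bias-variance trade-off the two lambdas control.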

Multi-Agent

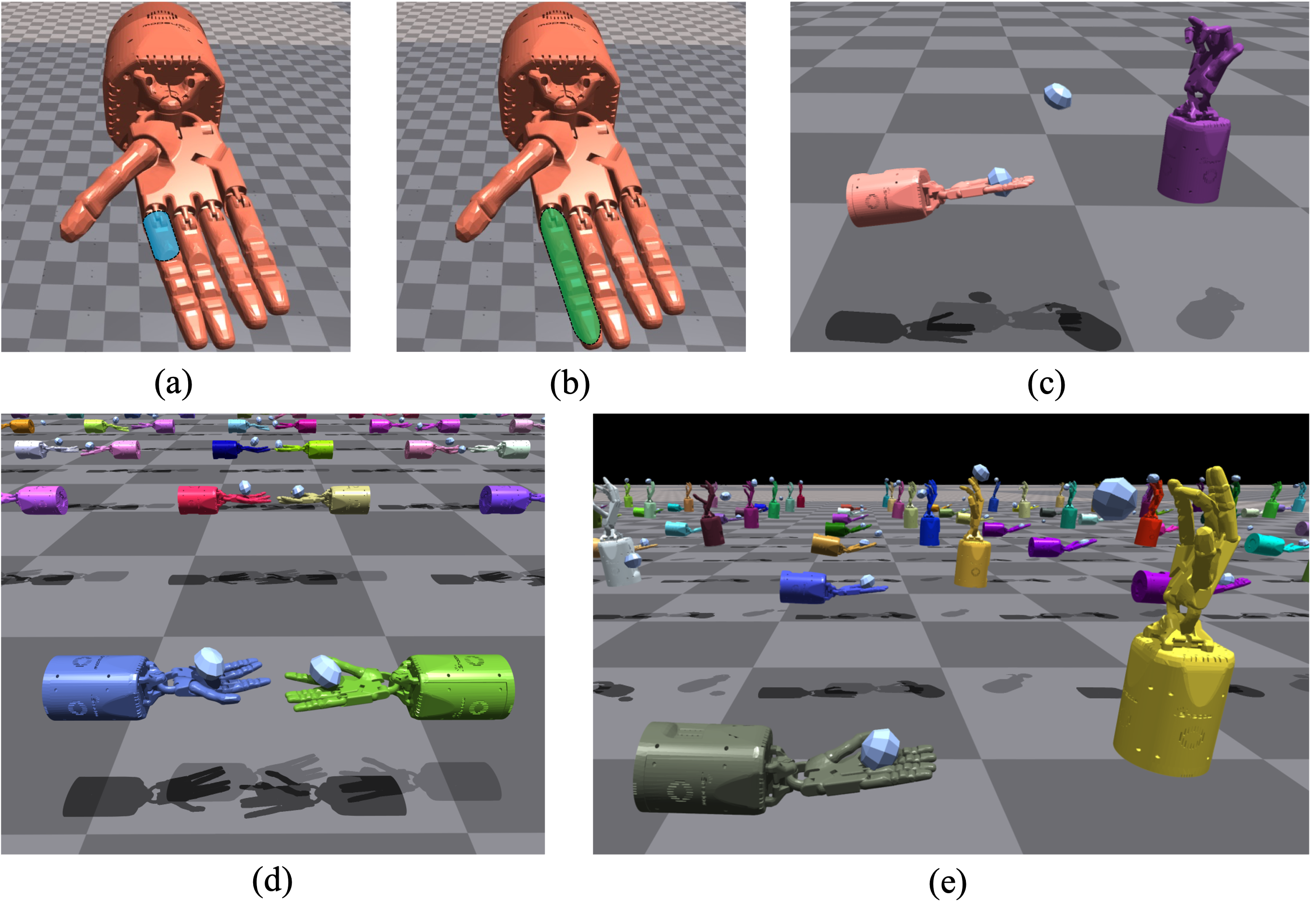

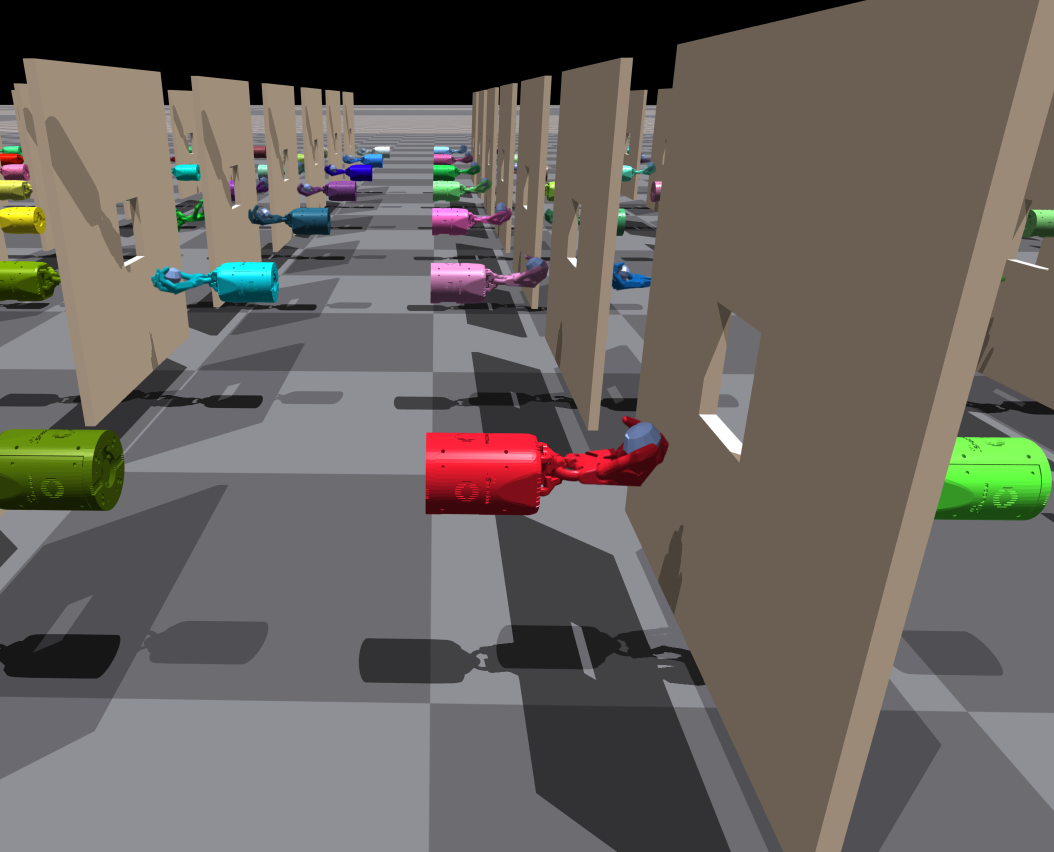

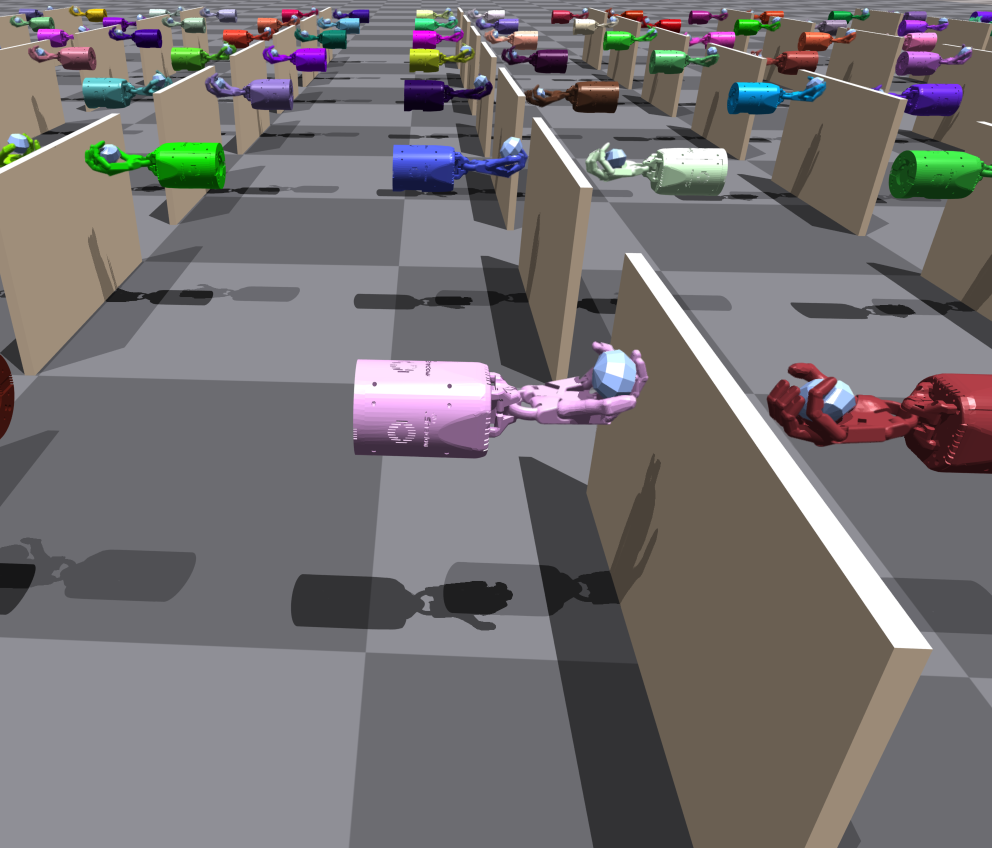

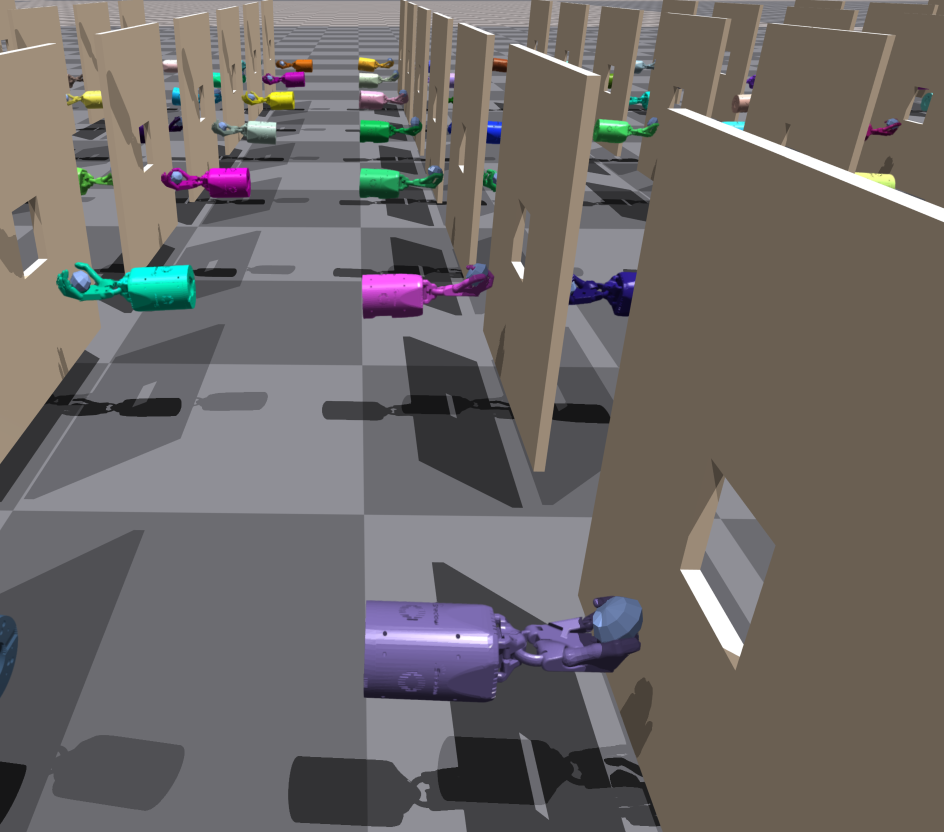

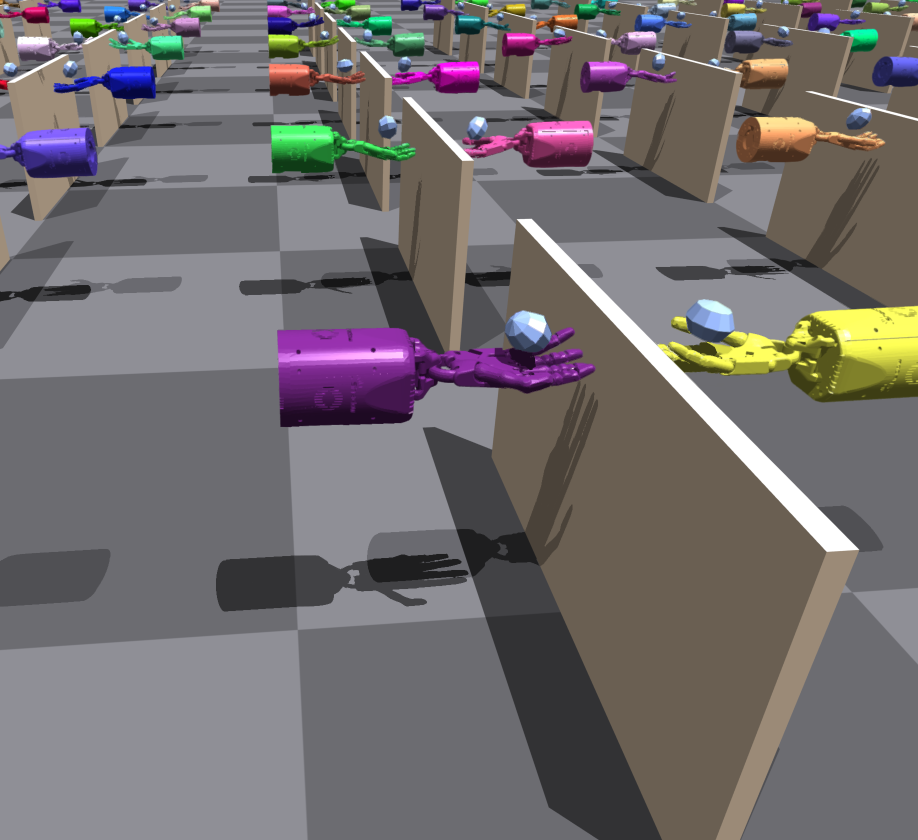

We also provide a safe MARL algorithm benchmark for safe MARL research on the challenging tasks of Safety DexterousHands. HAPPO, IPPO, MACPO, MAPPO-Lag and MAPPO have already been implemented.

safepo/envs/safe_dexteroushands/train_marl.py is the entry file. Running train_marl.py with arguments specifying the algorithm and task starts training. For example, you can use the following command:

# algo: macpo, mappolag, mappo, ippo, happo

python train_marl.py --task=ShadowHandOver --algo=macpo

Selected Tasks

We add several constraints to the base environments, extending the setting to both single-agent and multi-agent tasks.

What's More

Our team has also designed a number of more interesting safety tasks for two-handed dexterous manipulation, and we will soon release the code for use by more Safe RL researchers.

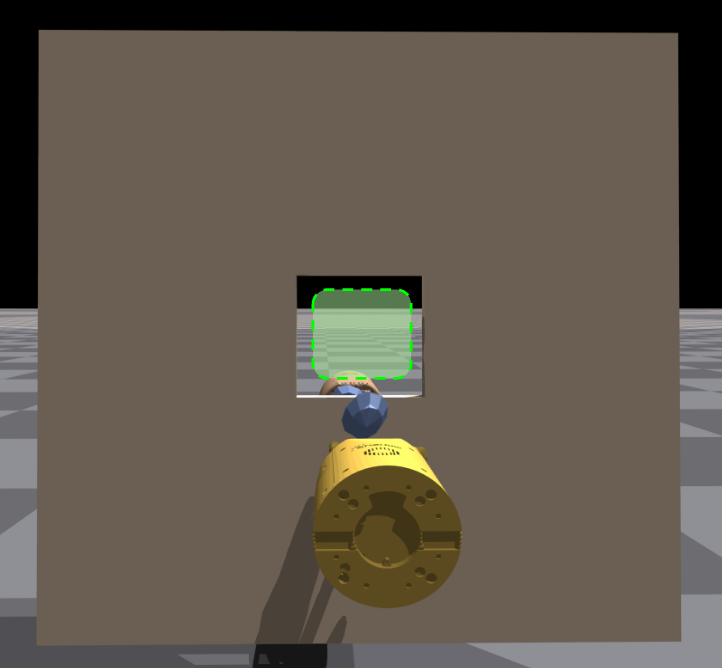

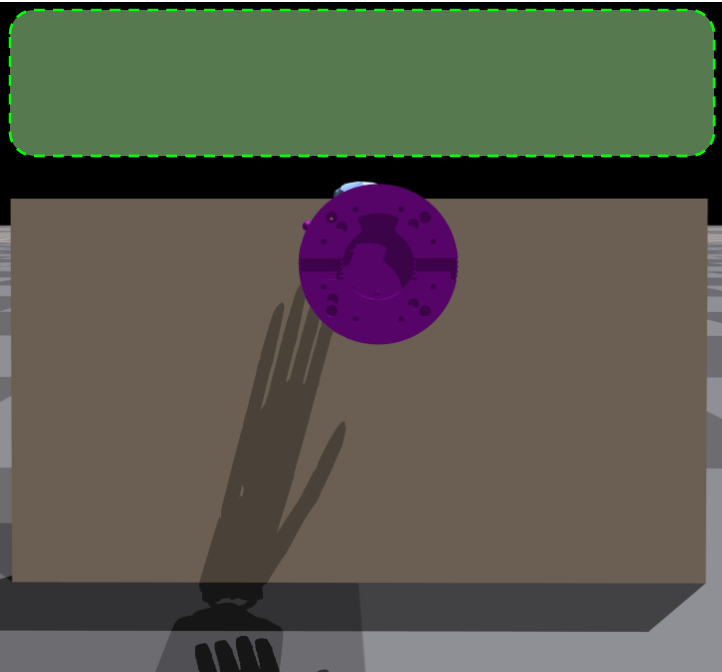

| Base Environments | Description | Demo |
|---|---|---|
| ShadowHandOverWall | None |  |
| ShadowHandOverWallDown | None |  |
| ShadowHandCatchOver2UnderarmWall | None |  |
| ShadowHandCatchOver2UnderarmWallDown | None |  |

The safe regions are:

| Wall | Wall Down |
|---|---|
|  |  |

Machine Configuration

We tested all algorithms and experiments on a machine with CPU: AMD Ryzen Threadripper PRO 3975WX 32-Cores and GPU: NVIDIA GeForce RTX 3090 (Driver Version: 495.44).

Maintenance

This repo is under long-term maintenance by the PKU-MARL team. We will keep adding new algorithms and supporting new environments as they come out. Please watch us and stay tuned!

Ethical and Responsible Use

SafePO aims to benefit safe RL community research, and is released under the Apache-2.0 license. Illegal usage or any violation of the license is not allowed.

PKU-MARL Team

The Baseline is a project contributed by the MARL team at Peking University. Please contact yaodong.yang@pku.edu.cn if you are interested in collaborating. We also thank the contributors of the following open source repositories: Spinning Up, Bullet-Safety-Gym, SvenG, Safety-Gym.