JChunk is a simple library that provides different text splitting strategies. The project began thanks to Greg Kamradt's post on text splitting ideas.

For now, Pablo Sanchidrian is the only person developing this project (in free time), so it might take a while to reach a first stable version.

Feel free to contribute!!

- Fixed Character Chunker (DONE)

- Recursive Character Text Chunker (DONE)

- Document Specific Chunker (NOT STARTED)

- Semantic Chunker (DONE)

- Agentic Chunker (NOT STARTED)

To build and run the unit tests

./mvnw clean package

To reformat using the java-format plugin

./mvnw spring-javaformat:apply

To update the year on license headers using the license-maven-plugin

./mvnw license:update-file-header -Plicense

To check javadocs using javadoc:javadoc

./mvnw javadoc:javadoc -Pjavadoc

Character splitting is a basic text processing technique where text is divided into fixed-size chunks of characters. Its simplicity and rigidity make it unsuitable for most advanced text processing tasks, but it is an excellent starting point for understanding the fundamentals of text splitting. The notes below cover its key concepts (chunk size, chunk overlap, and separators), followed by its advantages and disadvantages.

The chunk size is the number of characters each chunk will contain. For example, if you set a chunk size of 50, each chunk will consist of 50 characters, except possibly the last one, which holds whatever characters remain.

Example:

- Input Text: "This is an example of character splitting."

- Chunk Size: 10

- Output Chunks:

["This is an", " example o", "f characte", "r splittin", "g."]
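The chunk size rule can be sketched in a few lines of Java. This is only an illustration, not JChunk's actual API: the class name `FixedSizeSplitSketch` and the method `split` are hypothetical names chosen for this example.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration class, not part of JChunk.
final class FixedSizeSplitSketch {

    // Cuts the text into consecutive pieces of at most chunkSize characters.
    static List<String> split(String text, int chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<String> chunks = new ArrayList<>();
        for (int start = 0; start < text.length(); start += chunkSize) {
            // The last chunk may be shorter than chunkSize.
            int end = Math.min(start + chunkSize, text.length());
            chunks.add(text.substring(start, end));
        }
        return chunks;
    }

    public static void main(String[] args) {
        System.out.println(split("This is an example of character splitting.", 10));
    }
}
```

Because the input has 42 characters, a chunk size of 10 yields four full chunks and a final chunk with the two leftover characters.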

Chunk overlap refers to the number of characters that will overlap between consecutive chunks. This helps in maintaining context across chunks by ensuring that a portion of the text at the end of one chunk is repeated at the beginning of the next chunk.

Example:

- Input Text: "This is an example of character splitting."

- Chunk Size: 10

- Chunk Overlap: 4

- Output Chunks:

["This is an", "s an examp", "xample of ", " of charac", "aracter sp", "r splittin", "tting."]
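One way to picture overlap is as a sliding window that advances by `chunkSize - chunkOverlap` characters each time. The sketch below assumes that interpretation; `OverlapSplitSketch` and `split` are hypothetical names for this example, and JChunk's own implementation may treat edge cases differently.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration class, not part of JChunk.
final class OverlapSplitSketch {

    // Slides a window of chunkSize characters forward by (chunkSize - chunkOverlap).
    static List<String> split(String text, int chunkSize, int chunkOverlap) {
        if (chunkSize <= 0 || chunkOverlap < 0 || chunkOverlap >= chunkSize) {
            throw new IllegalArgumentException("require 0 <= chunkOverlap < chunkSize");
        }
        int step = chunkSize - chunkOverlap;
        List<String> chunks = new ArrayList<>();
        for (int start = 0; start < text.length(); start += step) {
            int end = Math.min(start + chunkSize, text.length());
            chunks.add(text.substring(start, end));
            if (end == text.length()) {
                break; // the window already reached the end of the text
            }
        }
        return chunks;
    }

    public static void main(String[] args) {
        System.out.println(split("This is an example of character splitting.", 10, 4));
    }
}
```

With a chunk size of 10 and an overlap of 4, each window starts 6 characters after the previous one, so the last 4 characters of a chunk reappear at the start of the next.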

Separators are specific character sequences used to split the text. For instance, you might want to split your text at every comma or period.

Example:

- Input Text: "This is an example. Let's split on periods. Okay?"

- Separator: ". "

- Output Chunks: ["This is an example", "Let's split on periods", "Okay?"]
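Splitting on a separator can be sketched with the standard `String.split` method. The names `SeparatorSplitSketch` and `split` are hypothetical, and the choice to drop the separator and skip empty pieces is an assumption made to match the example above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical illustration class, not part of JChunk.
final class SeparatorSplitSketch {

    // Splits on a literal separator; the separator itself is not kept in the chunks.
    static List<String> split(String text, String separator) {
        if (separator.isEmpty()) {
            throw new IllegalArgumentException("separator must not be empty");
        }
        List<String> chunks = new ArrayList<>();
        // Pattern.quote makes characters such as '.' match literally instead of as regex.
        for (String piece : text.split(Pattern.quote(separator))) {
            if (!piece.isEmpty()) {
                chunks.add(piece);
            }
        }
        return chunks;
    }

    public static void main(String[] args) {
        System.out.println(split("This is an example. Let's split on periods. Okay?", ". "));
    }
}
```

Quoting the separator matters here: an unquoted "." would be read as a regular expression that matches any character.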

Pros

- Easy & Simple: Character splitting is straightforward to implement and understand.

- Basic Segmentation: It provides a basic way to segment text into smaller pieces.

Cons

- Rigid: Does not consider the structure or context of the text.

- Duplicate Data: Chunk overlap creates duplicate data, which might not be efficient.

When performing retrieval-augmented generation (RAG), fixed chunk sizes can sometimes be inadequate, either missing crucial information or adding extraneous content. To address this, we can use embeddings to represent the semantic meaning of text, allowing us to chunk content based on semantic relationships.

- Sentence Splitting:

Split the entire text into sentences using delimiters like '.', '?', and '!' (alternative strategies can also be used).

- Mapping Sentences:

Transform the list of sentences into the following structure:

[

{

"sentence": "this is the sentence.",

"index": 0

},

{

"sentence": "this is the next sentence.",

"index": 1

},

{

"sentence": "this is the last sentence.",

"index": 2

}

]

- Combining Sentences:

Combine each sentence with its preceding and succeeding sentences (the number of neighbors on each side is given by a bufferSize variable) to reduce noise and better capture relationships. Store the result under a new key, combined.

Example for buffer size 1:

[

{

"sentence": "this is the sentence.",

"combined": "this is the sentence. this is the next sentence.",

"index": 0

},

{

"sentence": "this is the next sentence.",

"combined": "this is the sentence. this is the next sentence. this is the last sentence.",

"index": 1

},

{

"sentence": "this is the last sentence.",

"combined": "this is the next sentence. this is the last sentence.",

"index": 2

}

]

- Generating Embeddings:

Compute the embedding of each combined text.

[

{

"sentence": "this is the sentence.",

"combined": "this is the sentence. this is the next sentence.",

"embedding": [0.002, 0.003, 0.004, ...], // embedding of the combined key text

"index": 0

},

// ...

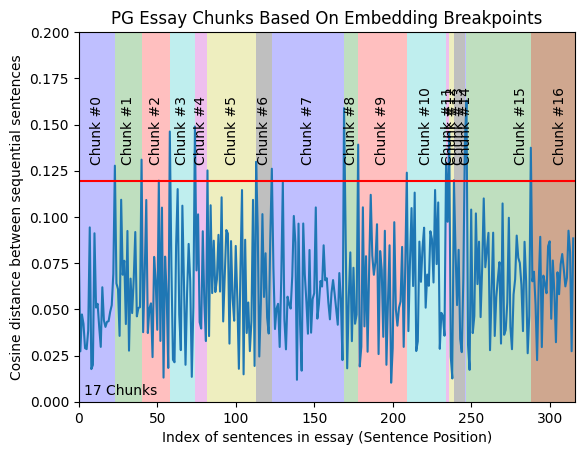

]- Calculating Distances:

Compute the cosine distance between the embeddings of each pair of consecutive sentences.

- Identifying Breakpoints:

Analyze the distances to identify sections where distances are smaller (indicating related content) and areas with larger distances (indicating less related content).

- Determining Split Points:

Use the 95th percentile of the distances as the threshold for determining breakpoints (any other percentile or thresholding technique can be used instead).

- Splitting Chunks:

Split the text into chunks at the identified breakpoints.

- Done!
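The steps above can be sketched end to end in plain Java. Everything here is illustrative rather than JChunk's actual API: the class `SemanticSplitSketch`, its method names, and the `embedder` parameter (a stand-in for whatever embedding model is used) are assumptions, and the percentile uses linear interpolation between ranks, which is one of several possible conventions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical illustration class, not part of JChunk.
final class SemanticSplitSketch {

    // Sentence splitting: break after '.', '?' or '!' followed by whitespace.
    static List<String> splitSentences(String text) {
        List<String> sentences = new ArrayList<>();
        for (String s : text.trim().split("(?<=[.?!])\\s+")) {
            if (!s.isEmpty()) sentences.add(s);
        }
        return sentences;
    }

    // Combining: join each sentence with up to bufferSize neighbors on each side.
    static List<String> combine(List<String> sentences, int bufferSize) {
        List<String> combined = new ArrayList<>();
        for (int i = 0; i < sentences.size(); i++) {
            int from = Math.max(0, i - bufferSize);
            int to = Math.min(sentences.size(), i + bufferSize + 1);
            combined.add(String.join(" ", sentences.subList(from, to)));
        }
        return combined;
    }

    // Cosine distance = 1 - cosine similarity (assumes non-zero vectors of equal length).
    static double cosineDistance(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return 1.0 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Percentile with linear interpolation between the two closest ranks.
    static double percentile(double[] values, double pct) {
        double[] sorted = values.clone();
        Arrays.sort(sorted);
        double pos = pct / 100.0 * (sorted.length - 1);
        int lower = (int) Math.floor(pos);
        int upper = (int) Math.ceil(pos);
        return sorted[lower] + (pos - lower) * (sorted[upper] - sorted[lower]);
    }

    // Full pipeline; embedder is an assumed hook for the embedding model.
    static List<String> chunk(String text, int bufferSize, double pct,
                              Function<String, double[]> embedder) {
        List<String> sentences = splitSentences(text);
        if (sentences.size() < 2) return sentences;

        List<double[]> embeddings = new ArrayList<>();
        for (String c : combine(sentences, bufferSize)) embeddings.add(embedder.apply(c));

        // distances[i] compares sentence i with sentence i + 1.
        double[] distances = new double[sentences.size() - 1];
        for (int i = 0; i < distances.length; i++) {
            distances[i] = cosineDistance(embeddings.get(i), embeddings.get(i + 1));
        }
        double threshold = percentile(distances, pct);

        // A distance above the threshold closes the current chunk after sentence i.
        List<String> chunks = new ArrayList<>();
        int start = 0;
        for (int i = 0; i < distances.length; i++) {
            if (distances[i] > threshold) {
                chunks.add(String.join(" ", sentences.subList(start, i + 1)));
                start = i + 1;
            }
        }
        chunks.add(String.join(" ", sentences.subList(start, sentences.size())));
        return chunks;
    }
}
```

A caller would pass its own embedding function, for example `SemanticSplitSketch.chunk(text, 1, 95, myModel::embed)`, where `myModel::embed` is a placeholder for a real embedding call. Lowering the percentile produces more, smaller chunks; raising it produces fewer, larger ones.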

Please read CONTRIBUTING.md for details on our code of conduct, and the process for submitting pull requests to us.

Frontend to test the different chunkers and see the results in a more visual way.