Neural Face Editor - create and edit anime images with the power of GANs and SoTA methods!

Team:

- Mikhail Rudakov, B19-DS, m.rudakov@innopolis.university

- Anna Startseva, B19-DS, a.startseva@innopolis.university

- Andrey Palaev, B19-DS, a.palaev@innopolis.university

Neural Face Editor is an application that uses GAN latent space manipulation to change the facial attributes of anime faces. The application is available as a Telegram bot at @neural_face_editor_bot. To achieve this, StyleGAN [1] is trained on the animefacesdataset [2] to generate anime faces. Then, a separate SVM is trained for each attribute. Finally, ideas from [3] are used to perform unconditional and conditional attribute manipulation. To let users edit their own pictures, hybrid GAN inversion [4] is implemented. To assess GAN image generation, the Fréchet Inception Distance (FID) [5] is calculated between the sets of real and generated images. The output quality of the generated images was assessed manually. More details are provided in the project report.
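As a rough illustration of the FID computation mentioned above, the sketch below evaluates the Fréchet distance between two sets of feature vectors, each summarized by its mean and covariance. It assumes the features have already been extracted (FID conventionally uses Inception network activations, which is outside this sketch); the function names `feature_stats` and `frechet_distance`, the 8-dimensional features, and the random data are illustrative only and are not taken from the project code.

```python
import numpy as np

def feature_stats(features):
    """Mean vector and covariance matrix of an (n_samples, n_features) array."""
    return features.mean(axis=0), np.cov(features, rowvar=False)

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between two Gaussians N(mu1, sigma1) and N(mu2, sigma2).

    d^2 = |mu1 - mu2|^2 + Tr(sigma1) + Tr(sigma2) - 2 * Tr((sigma1 sigma2)^(1/2))
    The trace of the matrix square root equals the sum of the square roots of
    the eigenvalues of sigma1 @ sigma2, which are real and non-negative for
    covariance matrices (tiny negative or imaginary parts are numerical noise).
    """
    diff = mu1 - mu2
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_sqrt)

# Synthetic stand-ins for features of real and generated images (assumption).
rng = np.random.default_rng(0)
real_features = rng.standard_normal((2000, 8))
fake_features = real_features + 0.5  # same covariance, shifted mean

fid_same = frechet_distance(*feature_stats(real_features), *feature_stats(real_features))
fid_shifted = frechet_distance(*feature_stats(real_features), *feature_stats(fake_features))
```

Lower values mean the two feature distributions are closer; identical statistics give a distance of zero.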

Anime_Face_Manipulations.mp4

GAN_Inversion.mp4

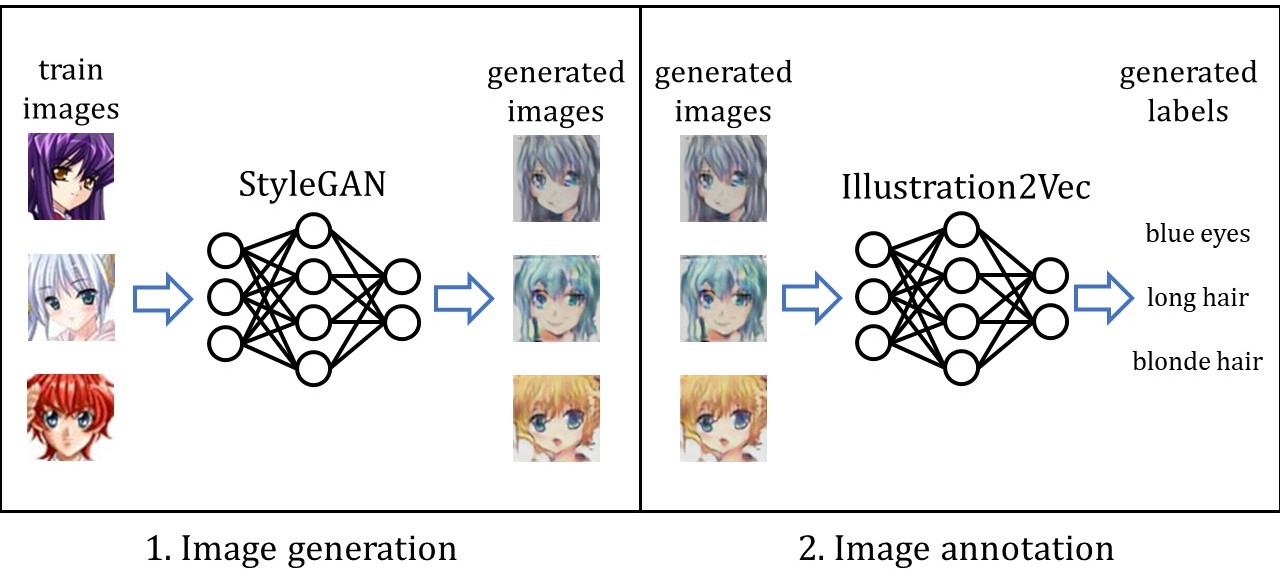

Mainly adopted from Shen et al. [3], our project architecture consists of several models.

Stage 1 is image generation. StyleGAN is used to produce images of anime faces.

Stage 2 is annotation of the generated images. We use illustration2Vec, proposed by Saito and Matsui [6], to label the images with the attribute tags we need.

Stage 3 is image classification. For each attribute we want to control, we train an SVM that separates the latent codes according to that attribute. The normal of the resulting hyperplane gives the direction in the latent space along which the attribute changes.
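A minimal sketch of Stage 3, assuming latent codes and binary attribute labels are already available as NumPy arrays. It uses scikit-learn's `LinearSVC`; the helper name `fit_attribute_direction`, the 16-dimensional latent space, and the synthetic labels are assumptions made for illustration and do not reflect the project's actual training code.

```python
import numpy as np
from sklearn.svm import LinearSVC

def fit_attribute_direction(latents, labels):
    """Fit a linear SVM on latent codes and return the unit normal of its hyperplane."""
    clf = LinearSVC(C=1.0, max_iter=10000)
    clf.fit(latents, labels)
    normal = clf.coef_[0]                     # shape: (latent_dim,)
    return normal / np.linalg.norm(normal)    # unit-length attribute direction

# Synthetic stand-in data (assumption): the attribute depends only on the
# first latent coordinate, so the recovered direction should align with it.
rng = np.random.default_rng(0)
latent_dim = 16
true_direction = np.zeros(latent_dim)
true_direction[0] = 1.0

latents = rng.standard_normal((500, latent_dim))
labels = (latents @ true_direction > 0).astype(int)  # 1 = attribute present

direction = fit_attribute_direction(latents, labels)
cosine = float(direction @ true_direction)  # close to 1 when the fit is good
```

In the real pipeline, `labels` would come from the Stage 2 tags and `latents` from the codes used to generate the tagged images.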

Sample images generated by the GAN:

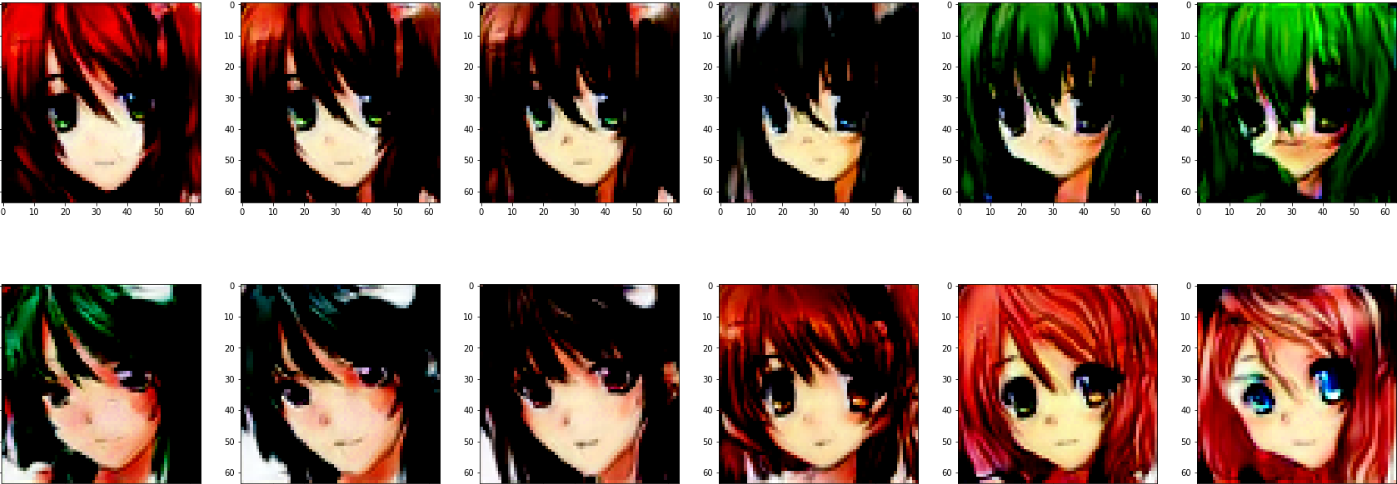

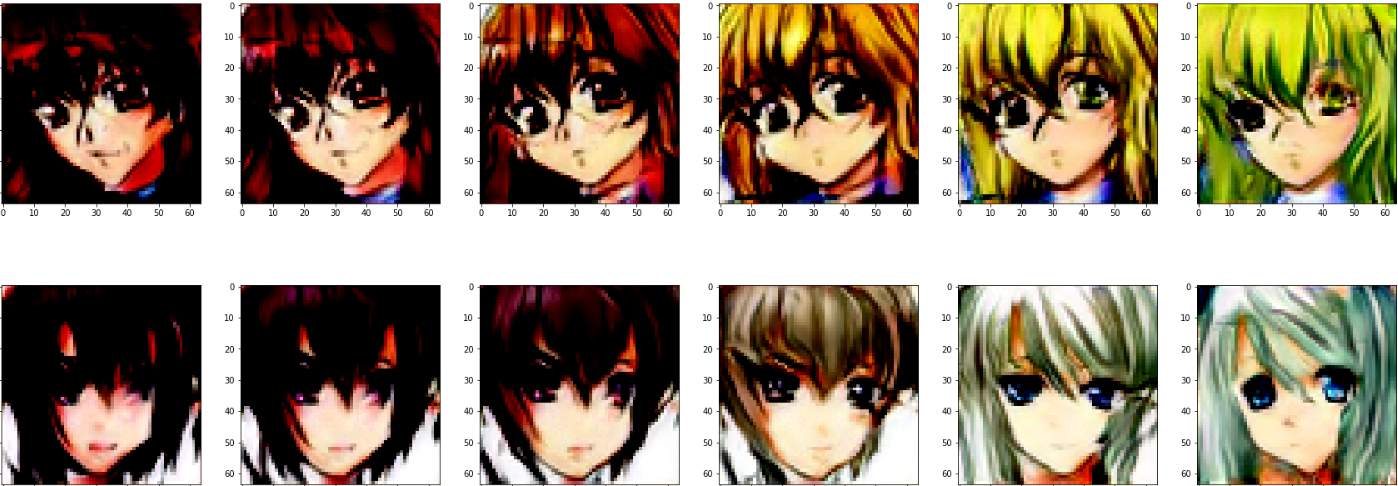

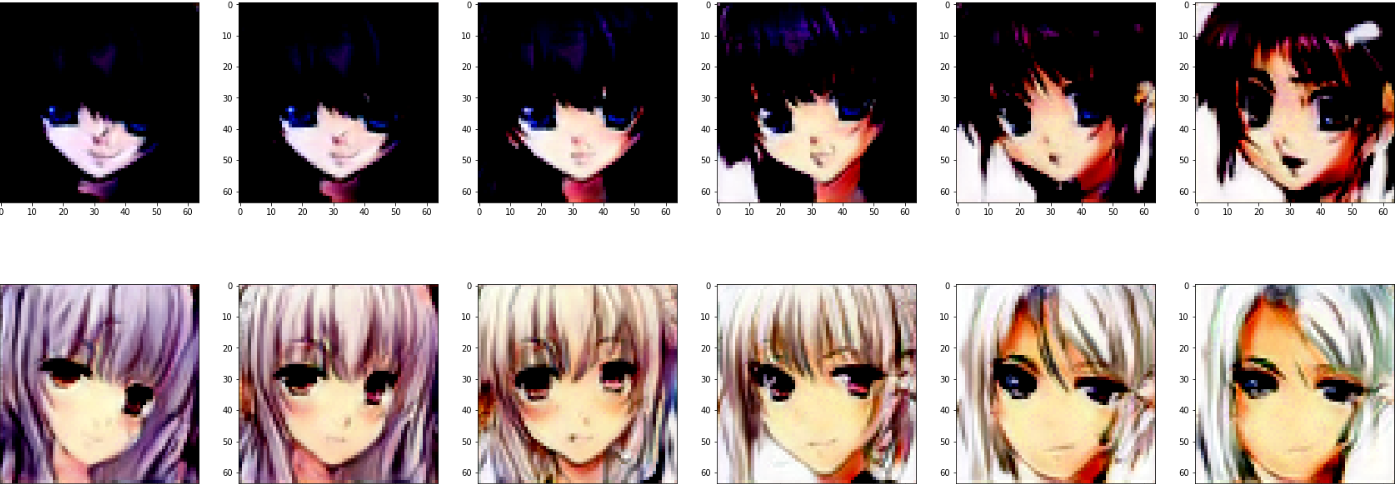

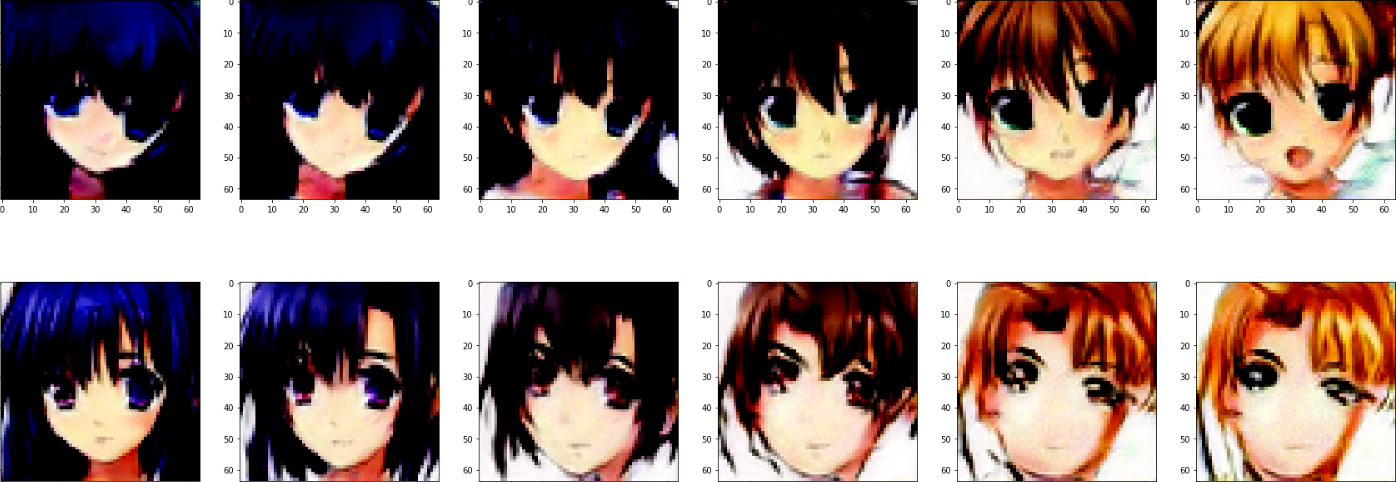

After obtaining the separating hyperplanes, we implemented the attribute manipulation method from [3]. Specifically, given the unit normal vector n of a separating hyperplane and a latent vector z, we generate several images from the edited codes `z + a * n`, with step sizes `a = np.linspace(-3, 3, 6)`. Examples of image manipulation:

- Making hair more (or less) green while keeping the hair length

- Making hair more (or less) red without controlling the hair length

- Changing the hair length while preserving the hair colour

- Changing the hair length without controlling the hair colour
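The two kinds of edits listed above can be sketched as follows, using the approach described in [3]: unconditional manipulation moves a latent code along the attribute normal, while conditional manipulation first projects out the component along the normal of the attribute to be preserved. The function names, the 512-dimensional latent size, and the random vectors standing in for trained hair colour and hair length normals are assumptions for illustration.

```python
import numpy as np

def manipulate(z, n, alphas):
    """Return one edited latent code z + a * n for every step a in alphas."""
    n = n / np.linalg.norm(n)
    return z[None, :] + alphas[:, None] * n[None, :]

def condition_direction(n_primary, n_fixed):
    """Remove from n_primary its component along n_fixed, so that moving along
    the result does not change the signed distance to the n_fixed hyperplane."""
    n_primary = n_primary / np.linalg.norm(n_primary)
    n_fixed = n_fixed / np.linalg.norm(n_fixed)
    projected = n_primary - (n_primary @ n_fixed) * n_fixed
    return projected / np.linalg.norm(projected)

# Random stand-ins for a latent code and two SVM normals (assumption).
rng = np.random.default_rng(0)
z = rng.standard_normal(512)
n_colour = rng.standard_normal(512)   # e.g. "green hair" direction
n_length = rng.standard_normal(512)   # e.g. "long hair" direction

alphas = np.linspace(-3, 3, 6)

# Change hair colour without controlling hair length.
unconditional = manipulate(z, n_colour, alphas)

# Change hair colour while keeping hair length fixed.
n_colour_cond = condition_direction(n_colour, n_length)
conditional = manipulate(z, n_colour_cond, alphas)
```

Each row of `unconditional` and `conditional` is a latent code that would be passed to the generator to render one image in the edit sequence.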

Some results to assess the quality of GAN inversion:

[1] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” in CVPR, 2019.

[2] Anonymous, D. community, and G. Branwen, “Danbooru2021: A large-scale crowdsourced and tagged anime illustration dataset,” January 2022. [Online]. Available: https://www.gwern.net/Danbooru2021

[3] Y. Shen, J. Gu, X. Tang, and B. Zhou, “Interpreting the latent space of GANs for semantic face editing,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 9240–9249.

[4] J. Zhu, Y. Shen, D. Zhao, and B. Zhou, “In-domain GAN inversion for real image editing,” in ECCV, 2020.

[5] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs trained by a two time-scale update rule converge to a local Nash equilibrium,” Advances in Neural Information Processing Systems, vol. 30, 2017.

[6] M. Saito and Y. Matsui, “Illustration2Vec: A semantic vector representation of illustrations,” in SIGGRAPH Asia 2015 Technical Briefs, 2015, pp. 1–4.