If you are interested in a step-by-step explanation and design review, check out the post for this repo at project-for-beginners-batch-edition.

A real data engineering project usually involves multiple components. Setting up a data engineering project while conforming to best practices can be extremely time-consuming. If you are:

- A data analyst, student, scientist, or engineer looking to gain data engineering experience but unable to find a good starter project.

- Wanting to work on a data engineering project that simulates a real-life project.

- Looking for an end-to-end data engineering project.

- Looking for a good project to get data engineering experience for job interviews.

Then this tutorial is for you. In this tutorial, you will

- Set up Apache Airflow, AWS EMR, AWS Redshift, Redshift Spectrum, and AWS S3.

- Learn data pipeline best practices.

- Learn how to spot failure points in a data pipeline and build systems resistant to failures.

- Learn how to design and build a data pipeline from business requirements.

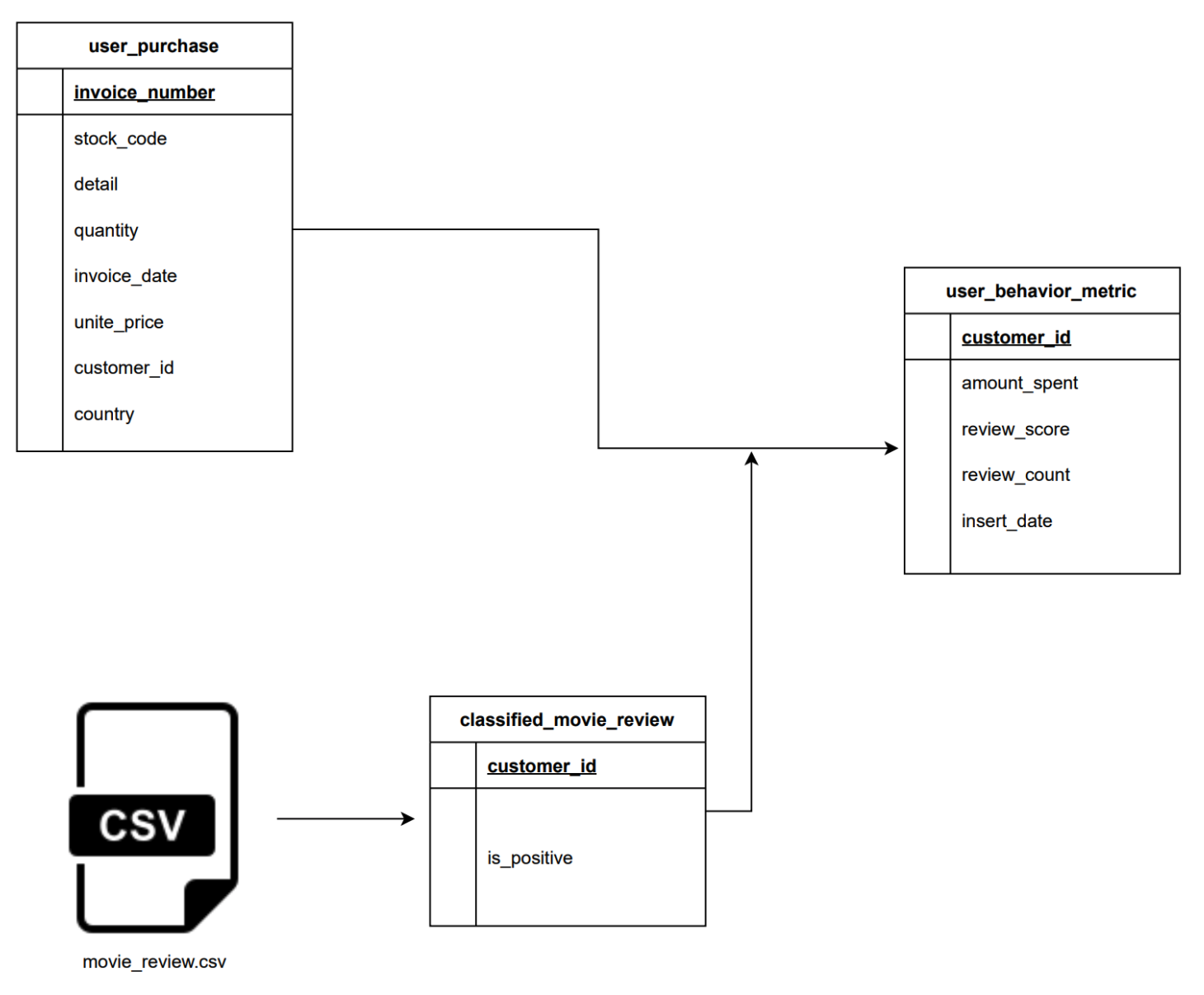

Let's assume that you work for a user behavior analytics company that collects user data and creates user profiles. You are tasked with building a data pipeline to populate the user_behavior_metric table. The user_behavior_metric table is an OLAP table meant to be used by analysts, dashboard software, etc. It is built from:

- user_purchase: an OLTP table with user purchase information.

- movie_review.csv: data sent every day by an external data vendor.

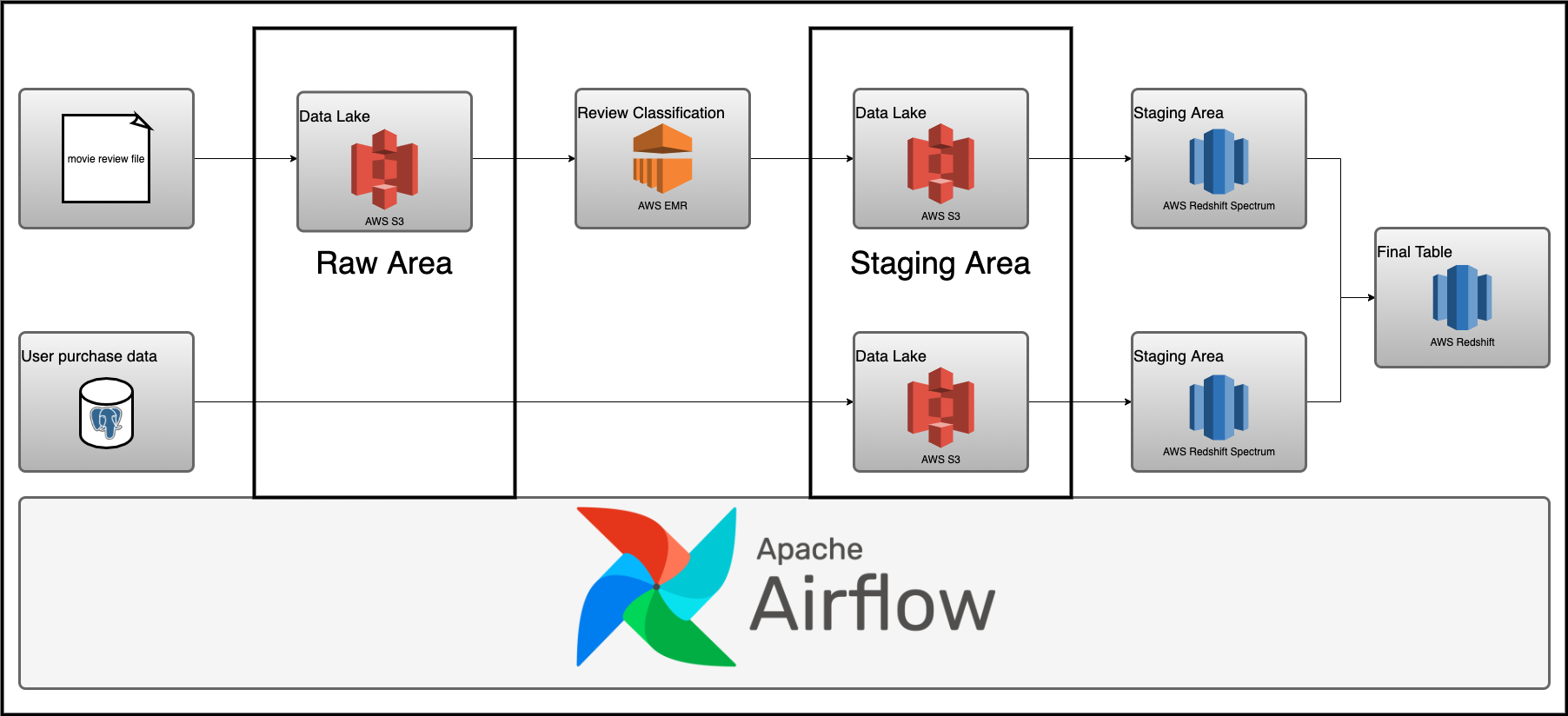

We will use Airflow to orchestrate the following tasks:

- Classifying movie reviews with Apache Spark.

- Loading the classified movie reviews into the data warehouse.

- Extracting user purchase data from an OLTP database and loading it into the data warehouse.

- Joining the classified movie review data and user purchase data to get user behavior metric data.

To run this project, you will need:

- Docker with at least 4 GB of RAM and Docker Compose v1.27.0 or later

- psql

- AWS account

- AWS CLI installed and configured
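Before running the setup script, a small check like the one below can confirm these prerequisites. This is a minimal sketch in POSIX sh, not part of the repo: the helper names (version_ge, compose_version, check_prereqs) are hypothetical, it accepts either the Compose v2 plugin (`docker compose`) or the v1 `docker-compose` binary, it approximates "4 GB" as 4,000,000,000 bytes of the MemTotal value reported by `docker info`, and it uses `aws sts get-caller-identity` only to confirm that the AWS CLI can find working credentials.

```sh
#!/bin/sh
# Sketch (not part of the repo): check the prerequisites listed above.
# Assumptions: Compose is either the v2 plugin ("docker compose") or the
# v1 binary ("docker-compose"); "4 GB" is approximated as 4,000,000,000 bytes.

# version_ge HAVE WANT: succeed if dotted version HAVE >= WANT
# (compares up to three numeric parts; missing parts count as 0).
version_ge() {
  _have=$1 _want=$2
  for _i in 1 2 3; do
    _h=${_have%%.*} _w=${_want%%.*}
    [ -n "$_h" ] || _h=0
    [ -n "$_w" ] || _w=0
    [ "$_h" -gt "$_w" ] && return 0
    [ "$_h" -lt "$_w" ] && return 1
    # Drop the part just compared; an empty string means no parts are left.
    case $_have in *.*) _have=${_have#*.} ;; *) _have= ;; esac
    case $_want in *.*) _want=${_want#*.} ;; *) _want= ;; esac
  done
  return 0
}

# compose_version: print the installed Compose version with any leading "v"
# and any "-suffix"/"+suffix" removed; fail if Compose is not found.
compose_version() {
  if docker compose version --short >/dev/null 2>&1; then
    _v=$(docker compose version --short)
  elif command -v docker-compose >/dev/null 2>&1; then
    _v=$(docker-compose version --short)
  else
    return 1
  fi
  _v=${_v#v}
  printf '%s\n' "${_v%%[-+]*}"
}

# check_prereqs: print one line per check; fail if anything required is missing.
check_prereqs() {
  _ok=0
  for _cmd in docker psql aws; do
    if command -v "$_cmd" >/dev/null 2>&1; then
      echo "ok       $_cmd"
    else
      echo "MISSING  $_cmd"; _ok=1
    fi
  done

  if _cv=$(compose_version); then
    if version_ge "$_cv" 1.27.0; then
      echo "ok       Docker Compose $_cv"
    else
      echo "MISSING  Docker Compose >= 1.27.0 (found $_cv)"; _ok=1
    fi
  else
    echo "MISSING  Docker Compose"; _ok=1
  fi

  # MemTotal is in bytes; 0 is used when Docker is absent or not running.
  _mem=$(docker info --format '{{.MemTotal}}' 2>/dev/null) || _mem=0
  if [ "${_mem:-0}" -lt 4000000000 ]; then
    echo "WARNING  Docker reports less than about 4 GB of RAM (or is not running)"
  fi

  # Succeeds only when the AWS CLI can find valid credentials.
  if aws sts get-caller-identity >/dev/null 2>&1; then
    echo "ok       AWS CLI credentials"
  else
    echo "MISSING  working AWS CLI credentials (run: aws configure)"; _ok=1
  fi
  return "$_ok"
}

if ! check_prereqs; then
  echo "Fix the MISSING items above before running setup_infra.sh." >&2
fi
```

The RAM check only warns rather than failing, because the exact MemTotal value Docker reports for a given memory setting can vary slightly by platform.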

To set up the infrastructure and base tables, run the setup_infra.sh script as shown below.

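```sh
git clone https://github.com/josephmachado/beginner_de_project.git
cd beginner_de_project
./setup_infra.sh {your-bucket-name}
```

This sets up the AWS infrastructure (S3, EMR, Redshift, and Redshift Spectrum) and the local Docker containers that run Airflow.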
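Assuming setup_infra.sh uses the name you pass it to create an S3 bucket, it can help to check that name first. The sketch below is a hypothetical helper (valid_bucket_name, not part of the repo) that covers only the basic S3 general purpose bucket naming rules: 3 to 63 characters; lowercase letters, digits, dots, and hyphens only; a letter or digit at each end; no adjacent dots; and not formatted like an IPv4 address. It cannot tell you whether the name is already taken, because bucket names are globally unique across AWS.

```sh
#!/bin/sh
# Sketch (hypothetical helper, not part of the repo): check the basic S3
# bucket naming rules. S3 has a few more rules (reserved prefixes/suffixes)
# that are not checked here.

# valid_bucket_name NAME: succeed if NAME passes the basic checks below.
valid_bucket_name() {
  _name=$1
  # Length must be 3-63 characters.
  [ "${#_name}" -ge 3 ] && [ "${#_name}" -le 63 ] || return 1
  case $_name in
    *[![:lower:][:digit:].-]*) return 1 ;;  # only lowercase letters, digits, dots, hyphens
    [![:lower:][:digit:]]* | *[![:lower:][:digit:]]) return 1 ;;  # letter or digit at both ends
    *..*) return 1 ;;  # no two adjacent periods
  esac
  # Must not be formatted like an IPv4 address (e.g. 192.168.5.4).
  if printf '%s\n' "$_name" | grep -Eq '^[0-9]+(\.[0-9]+){3}$'; then
    return 1
  fi
  return 0
}

# Example usage (run from the repo root):
#   bucket=my-de-project-bucket-1234
#   valid_bucket_name "$bucket" && ./setup_infra.sh "$bucket"
```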

Log in to http://localhost:8080 to see the Airflow UI. The username and password are both airflow.
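The Airflow containers can take a while to start, so the UI may not respond immediately after setup. The sketch below polls a URL until it answers. wait_for_url is a hypothetical helper (not part of the repo), and the /health path assumes an Airflow 2.x webserver; if your version has no such endpoint, poll http://localhost:8080/ instead. Because `curl -f` treats only HTTP 4xx/5xx responses as failures, a redirect to the login page still counts as "up".

```sh
#!/bin/sh
# Sketch (hypothetical helper, not part of the repo): poll a URL until it answers.
# Assumption: an Airflow 2.x webserver serves /health on port 8080.

# wait_for_url URL [TIMEOUT_SECONDS]: succeed once URL returns a status below 400.
# The timeout is approximate: only the sleeps between attempts are counted.
wait_for_url() {
  _url=$1 _timeout=${2:-120} _waited=0
  while [ "$_waited" -lt "$_timeout" ]; do
    # -f: treat HTTP >= 400 as failure; -s: silence progress output;
    # --max-time: give up on a single attempt after 5 seconds.
    if curl -fs --max-time 5 -o /dev/null "$_url"; then
      echo "Up: $_url"
      return 0
    fi
    sleep 3
    _waited=$((_waited + 3))
  done
  echo "Timed out after ${_timeout}s waiting for $_url" >&2
  return 1
}

# Example usage:
#   wait_for_url http://localhost:8080/health 300 && echo "Log in with airflow / airflow"
```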

When you are done, do not forget to turn off your instances. To stop all your AWS services and local Docker containers, run the following in your terminal:

```sh
./spindown_infra.sh {your-bucket-name}
```
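To double-check that nothing is still running after spindown_infra.sh finishes, you can list active EMR clusters and any remaining Redshift clusters with the AWS CLI. This is a sketch, not part of the repo: check_leftovers is a hypothetical helper, it assumes your CLI's default region is the one the project used, and a cluster that is still terminating or being deleted will keep showing up until AWS finishes removing it. It returns 0 when nothing is found, 1 when clusters remain, and 2 if an AWS CLI call fails.

```sh
#!/bin/sh
# Sketch (hypothetical helper, not part of the repo): report EMR and Redshift
# clusters that still exist. Assumes the AWS CLI's default region is the one
# the project used.

# check_leftovers: 0 = nothing found, 1 = clusters remain, 2 = CLI call failed.
check_leftovers() {
  _found=0
  # --active lists clusters that are starting, bootstrapping, running,
  # waiting, or terminating.
  _emr=$(aws emr list-clusters --active --query 'Clusters[].Id' --output text) || return 2
  # describe-clusters lists every Redshift cluster, including ones being deleted.
  _rs=$(aws redshift describe-clusters --query 'Clusters[].ClusterIdentifier' --output text) || return 2

  # Strip whitespace so an empty text result counts as "nothing found".
  if [ -n "$(printf '%s' "$_emr" | tr -d '[:space:]')" ]; then
    echo "Active EMR clusters: $_emr"
    _found=1
  fi
  if [ -n "$(printf '%s' "$_rs" | tr -d '[:space:]')" ]; then
    echo "Redshift clusters: $_rs"
    _found=1
  fi
  [ "$_found" -eq 0 ] && echo "No active EMR or Redshift clusters found."
  return "$_found"
}

# Example usage:
#   ./spindown_infra.sh {your-bucket-name} && check_leftovers
```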

Contributions are welcome. If you would like to contribute, you can open a GitHub issue or submit a PR.