NEW: For users interested in our chromatin information pipeline (f-VICE scores), it is now available in the pre 1.1 branch of this repository! Please let us know if you encounter any bugs via the Issues tab or emailing dricardo@wustl.edu.

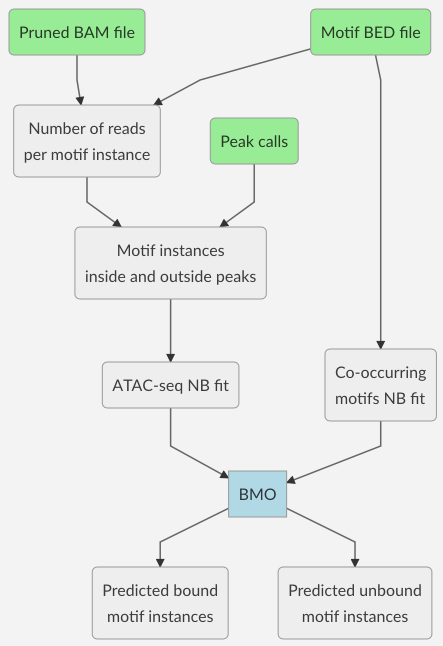

BMO (pronounced beemo) is an algorithm to predict TF binding from ATAC-seq data without using footprints. BMO uses negative binomial models of ATAC-seq fragments and number of co-occurring motifs to determine the likelihood of a given motif instance being bound. More details about the model can be found in our Nature Communications manuscript:

Disclaimer: BMO has only been tested with paired-end ATAC-seq data.

Platforms tested: Linux (Debian 9), macOS (10.13)

Installation time: less than 5 minutes.

From your machine, run the command below wherever you want BMO's main directory to be (e.g. ~/software).

git clone https://github.com/ParkerLab/BMO.gitBMO was designed to run using Snakemake, an extremely powerful tool for running reproducible pipelines. The command below will create a base conda environment with snakemake and mamba to handle dependencies. All the other dependencies will be handled internally by snakemake. Requirements: Having a working Anaconda or Miniconda installation in your system.

conda create -c conda-forge -c bioconda -n bmo_base snakemake mamba

conda activate bmo_base- ATAC-seq experiment processed 1 and indexed BAM file

- ATAC-seq peak calls 2

- Motif BED6 3 files. One file per PWM scan 4

1 We recommend high quality (MAPQ ≥ 30), autosomal, duplicate removed (we use Picard), properly paired, and uniquely mapped reads only (SAM flags -f 3 -F 4 -F 8 -F 256 -F 1024 -F 2048). If you don't have an ATAC-seq pipeline set up yet, you should consider using the Parker Lab ATAC-seq Snakemake pipeline.

2 We call them with MACS2, using flags --broad --nomodel --shift -100 --extsize 200 --keep-dup all and then intersect out blacklisted regions.

3 Mandatory. Pipeline will crash otherwise.

4 We use FIMO.

Our Snakemake pipeline makes use of a configuration file, which includes information of paths and input files. The template is located in config/config.yaml. Let's go through each of the fields:

bmo_dir: this is the folder where you cloned this repository.motif_dir: path to your motif BED6 files.motif_ext: extension associated with the motif files. Do not add a dot in the beginning (e.g. usebed.gzinstead of.bed.gz).motif_file: plain text file containing the list of motif names (no paths or extensions), one per line.results: directory where the output will be saved.ionice: a string to append before each command call to set disk/CPU priority (e.g.ionice -c2 -n7). For Mac users:ioniceis not supported in macOS, so the default value should be set to blank or"".use_shm: True or False. For some of the I/O heavy steps, BMO can use a shared memory partition (i.e. RAM that behave as disk space, like/dev/shm). This can increase BMO's concurrency when submitting jobs on a single machine with >10Gb of RAM to spare. Can also be used to send jobs to a SSD partition, instead. Only use this option if you know what you are doing.shm_dir: path for shared memory operations ifuse_shm: True.samples: information about each ATAC-seq experiment to be processed. Thebamfileandpeakfilefields under each sample handle are mandatory and point to the experiment's BAM and peak calls, respectively. Attention: make sure that the sample handles (main identation of each sample) are unique.

IMPORTANT: Because the config is a yaml file, make sure to maintain all the indentations as they appear or unexpected behaviors may occur.

To execute the pipeline, you need to copy both the Snakefile and input/config.yaml to wherever you want to run the analysis in your machine/cluster and then update the config file as described in the previous section. After that, just run the code below and Snakemake will take care of the rest. Simple as that.

snakemake [-j {threads}] [--resources io_limit={concurrency}] --configfile config.yaml

- Flags in [brackets] are optional.

{threads}is an integer value for the total number of cores Snakemake is allowed to use. if running on a HPC cluster, then set to 999 or some arbitrarily high value.{concurrency}is also an integer, and determines the number of maximum I/O intensive jobs to run simultaneously (we recommend starting with 1-3 and keeping an eye on the%iowaitcolumn ofsarto see how much your machine can handle). Alternatively, the high I/O jobs can be pushed to RAM or to an SSD partition by changinguse_shm: Truein the config file (see details in the previous section). If using the latter option, then also addshm_limit={shm_concurrency}to the--resourcescall above, where{shm_concurrency}is an integer for the maximum number of concurring jobs when using the RAM/SSD partition. If eitherio_limitorshm_limitare not set, then all jobs will be submitted with no regards to maximum concurrency (which should not be an issue if running in a cluster).

Edit the variables in the # Config section of src/bmo_manual.sh similarly to the config.yaml file and run the commands below. Make sure you have all the dependencies listed in envs/bmo.yml installed in your system (note: the required R packages have the prefix r- in the yml file).

bash scripts/bmo_manual.sh > pipeline.shThis will create the work directory structure and a file with the list of commands named pipeline.sh. If you wish to use a job scheduler, then change the # drmr: lines to whatever resource call parameters your scheduler uses. The example here uses drmr:

drmr pipeline.shThe expected run time will vary depending on the size of your input files. As an example, a relatively large motif file (~400K instances) running on a very large BAM file (~80M reads) takes less than 5 minutes and 500Mb of RAM using 4 cores. The same motif file takes less than 2 minutes and the same amount of RAM to run on a smaller BAM file (~35M reads) using the same number of cores.

We included some example data at examples. It consists of a heavily downsampled chromosome 1 of one of the Buenrostro et al 2013[1] original GM12878 ATAC-seq samples and chromosomes 1 of our CTCF_known2 and ELF1_known1 motif scans. The example code will generate the output files at work/bmo/example_buenrostro_rep1/bound.

[1] doi: 10.1038/nmeth.2688

The example also includes a config file, which we will use to instruct Snakemake. To run the example, execute the code below.

# conda activate bmo_base

snakemake -j 4 --configfile examples/example_config.yaml --use-condaThe # Config preamble of src/bmo_manual.sh is already set to run the example data.

# Generate and execute pipeline file

bash src/bmo_manual.sh > pipeline.sh

bash pipeline.shOnce the pipeline finishes running, you can find results in the work/bmo/{sample}/bound folder. The BED6 files there will correspond to the predicted bound motif instances for each motif.

- Chromosome name

- Start position

- End position

- Feature name

- BMO score (-log10 adjusted p-value)

- Strand

If you want to use different filtering thresholds than our defaults, then look for the files at work/bmo/{sample}/all. The output is as described below:

- Chromosome name

- Start position

- End position

- Feature name

- Feature score (e.g. FIMO score)

- Strand

- Number of ATAC-seq fragments in feature vicinity

- Number of co-occurring motifs in feature vicinity

- ATAC-seq fragments negative bionomial p-value

- Co-occurring negative bionomial motifs p-value

- Combined p-value

- Adjusted combined p-value (using Benjamini-Yekutieli)

- BMO score (-log10 adjusted p-value)

- Predicted bound 0/1 (1 is yes)