PyPI package for converting cost values (less is better) to fitness values (more is better) and vice versa

```shell
pip install cost2fitness
```

This package contains several methods for transforming NumPy arrays based on scales, averages and so on. Its primary use, however, is converting cost values (less is better) to fitness values (more is better) and vice versa. This can be very helpful when you are using:

- evolutionary algorithms that depend on numeric differences between scores, where a good representation of the sample scores is important for better selection;

- sampling methods whose probabilities depend on the sample scores.
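For example, roulette-wheel selection needs non-negative scores where larger means better. The minimal NumPy sketch below assumes costs are converted by subtracting them from their maximum (the arithmetic `AntiMax` appears to perform in the pipeline example below) and then normalized to probabilities. `costs_to_fitness` and `selection_probabilities` are hypothetical helpers, not part of `cost2fitness`.

```python
import numpy as np

def costs_to_fitness(costs: np.ndarray) -> np.ndarray:
    """Hypothetical helper: map costs (less is better) to fitness (more is better) via max - cost."""
    return costs.max() - costs

def selection_probabilities(fitness: np.ndarray) -> np.ndarray:
    """Hypothetical helper: normalize non-negative fitness values so they sum to 1."""
    total = fitness.sum()
    if total == 0:
        # all samples are equally good: fall back to a uniform distribution
        return np.full(fitness.shape, 1.0 / fitness.size)
    return fitness / total

costs = np.array([10, 8, 7, 5, 8, 9])
fitness = costs_to_fitness(costs)           # [0, 2, 3, 5, 2, 1]
probs = selection_probabilities(fitness)    # the lowest cost gets the largest probability

rng = np.random.default_rng(0)
parents = rng.choice(costs.size, size=4, p=probs)  # indices of the selected samples
```

With this particular conversion the worst sample gets probability 0 and can never be selected. Choosing a different transformation changes this behavior, which is why the representation of the scores matters.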

There are several simple transformers. Each transformer is a subclass of the `BaseTransformer` class, with a `name` field and a `transform(array)` method that transforms the input array into a new representation.

Checklist:

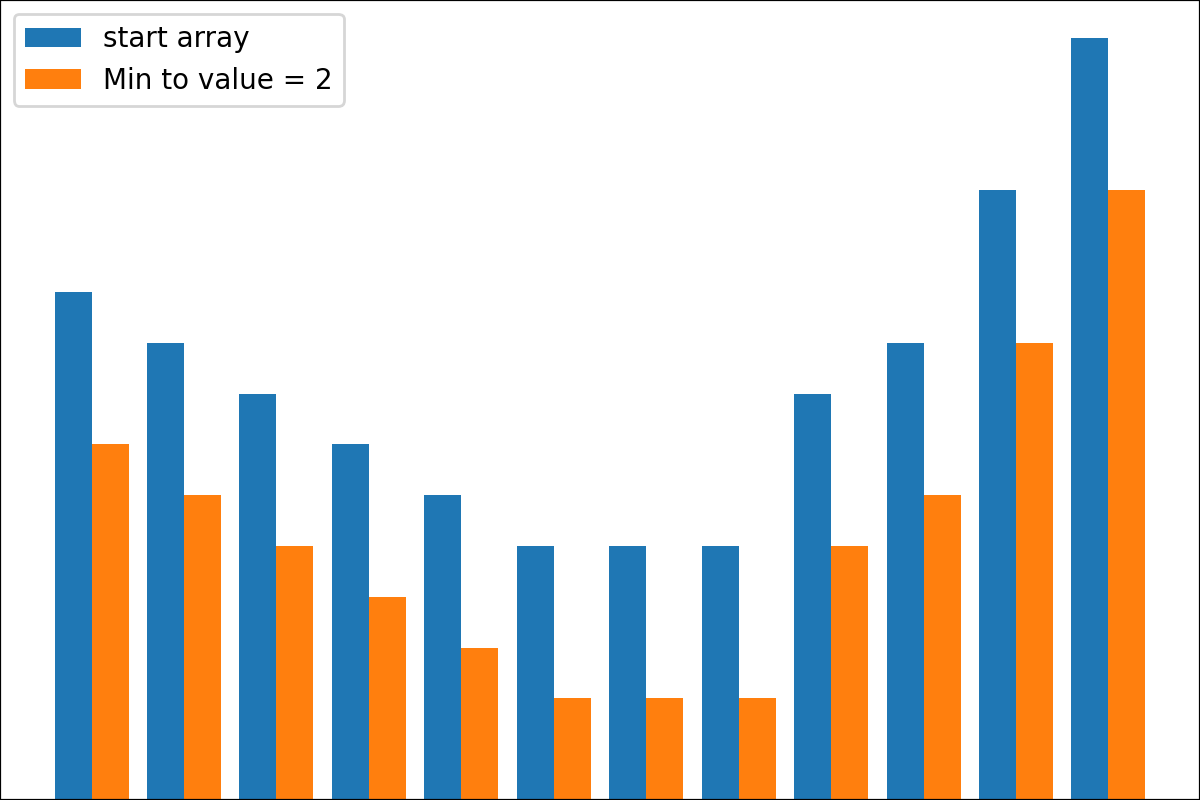

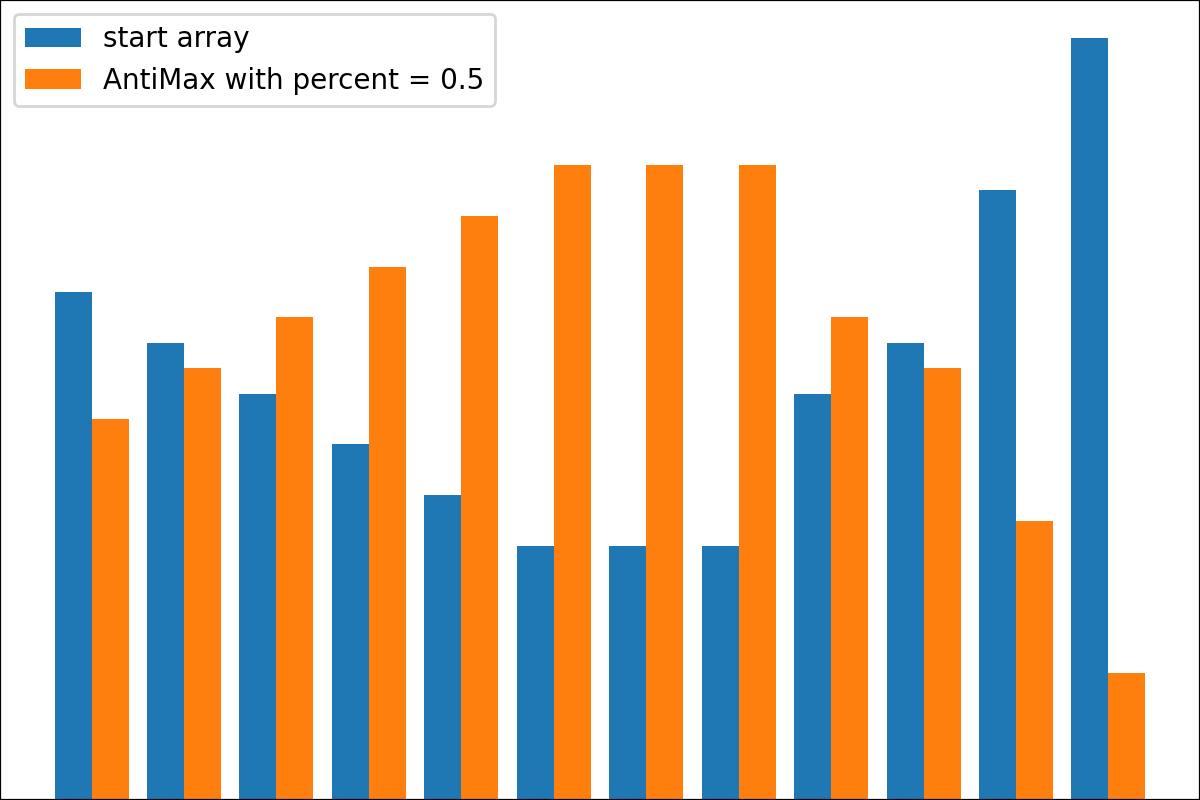

- `ReverseByAverage`
- `AntiMax`
- `AntiMaxPercent(percent)`
- `Min2Zero`
- `Min2Value(value)`
- `ProbabilityView` (converts data to probabilities)
- `SimplestReverse`
- `AlwaysOnes` (returns an array of ones)
- `NewAvgByMult(new_average)`
- `NewAvgByShift(new_average)`
- `Divider(divider_number_or_array)` (divides the array by a number or an array; useful for fixed start normalization)
- `Argmax` (returns the position of the maximum element in the array)
- `Prob2Class(threshold = 0.5)` (converts probabilities to classes 0/1)
- `ToNumber` (converts an array to one number by taking its first element)
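The arithmetic behind several of these transformers can be sketched as plain NumPy functions. `min2zero`, `reverse_by_average` and `anti_max` reproduce the outputs shown in the usage examples of this README; the remaining formulas are assumptions inferred only from the names and short descriptions above, so check the package source before relying on them. All function names here are hypothetical.

```python
import numpy as np

# Consistent with the outputs in this README's usage examples
def min2zero(x):
    return x - x.min()                     # shift so the minimum becomes 0

def reverse_by_average(x):
    return 2 * x.mean() - x                # mirror values around their average

def anti_max(x):
    return x.max() - x                     # subtract each value from the maximum

# ASSUMED from names/descriptions only (not verified against the package)
def min2value(x, value):
    return x - x.min() + value             # shift so the minimum becomes `value`

def probability_view(x):
    return x / x.sum()                     # non-negative scores -> probabilities

def new_avg_by_mult(x, new_average):
    return x * (new_average / x.mean())    # rescale so the average becomes new_average

def new_avg_by_shift(x, new_average):
    return x + (new_average - x.mean())    # shift so the average becomes new_average

def prob2class(p, threshold=0.5):
    return (p >= threshold).astype(int)    # probabilities -> classes 0/1 (>= is an assumption)
```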

You can create your own transformer using the simple logic from the file.
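A minimal sketch of the transformer interface described above (a `name` field plus a `transform(array)` method). `BaseTransformerSketch` is a hypothetical stand-in so the snippet runs without the package installed; with `cost2fitness` you would subclass its `BaseTransformer` instead, whose constructor and import path may differ. `Min2ZeroSketch` and `LogScale` are likewise hypothetical.

```python
import numpy as np

class BaseTransformerSketch:
    """Hypothetical stand-in for BaseTransformer: a name field plus transform()."""
    def __init__(self):
        self.name = type(self).__name__

    def transform(self, array: np.ndarray) -> np.ndarray:
        raise NotImplementedError

class Min2ZeroSketch(BaseTransformerSketch):
    """Re-implements Min2Zero's documented behavior: shift so the minimum becomes 0."""
    def transform(self, array):
        return array - array.min()

class LogScale(BaseTransformerSketch):
    """Hypothetical custom transformer: compress large scores with log1p (expects values >= 0)."""
    def transform(self, array):
        return np.log1p(array)

arr_of_scores = np.array([10, 8, 7, 5, 8, 9, 20, 12, 6, 18])
shifted = Min2ZeroSketch().transform(arr_of_scores)
compressed = LogScale().transform(shifted)
```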

import numpy as np

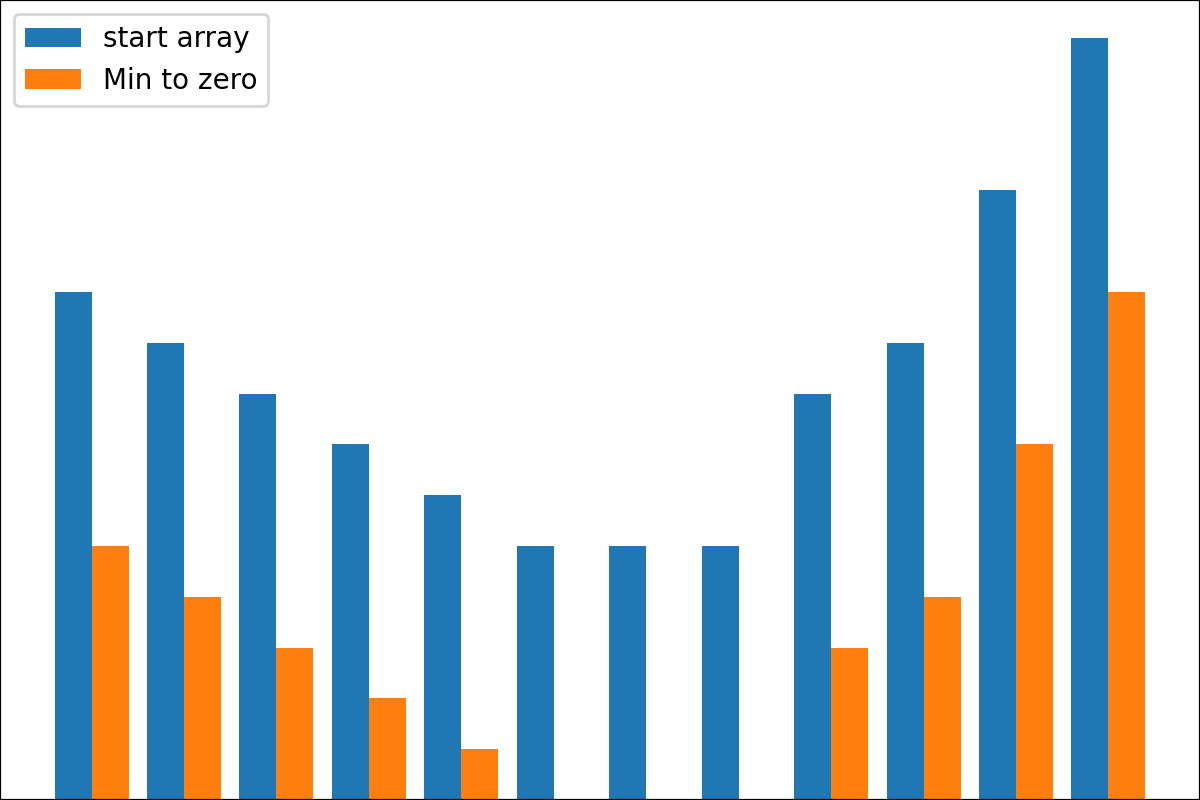

from cost2fitness import Min2Zero

tf = Min2Zero()

arr_of_scores = np.array([10, 8, 7, 5, 8, 9, 20, 12, 6, 18])

tf.transform(arr_of_scores)

# array([ 5, 3, 2, 0, 3, 4, 15, 7, 1, 13])U also can combine these transformers using Pl pipeline. For example:

import numpy as np

from cost2fitness import ReverseByAverage, AntiMax, Min2Zero, Pl

pipe = Pl([

Min2Zero(),

ReverseByAverage(),

AntiMax()

])

arr_of_scores = np.array([10, 8, 7, 5, 8, 9])

# return each result of pipeline transformation (with input)

pipe.transform(arr_of_scores, return_all_steps= True)

#array([[10. , 8. , 7. , 5. , 8. ,

# 9. ],

# [ 5. , 3. , 2. , 0. , 3. ,

# 4. ],

# [ 0.66666667, 2.66666667, 3.66666667, 5.66666667, 2.66666667,

# 1.66666667],

# [ 5. , 3. , 2. , 0. , 3. ,

# 4. ]])

# return only result of transformation

pipe.transform(arr_of_scores, return_all_steps= False)

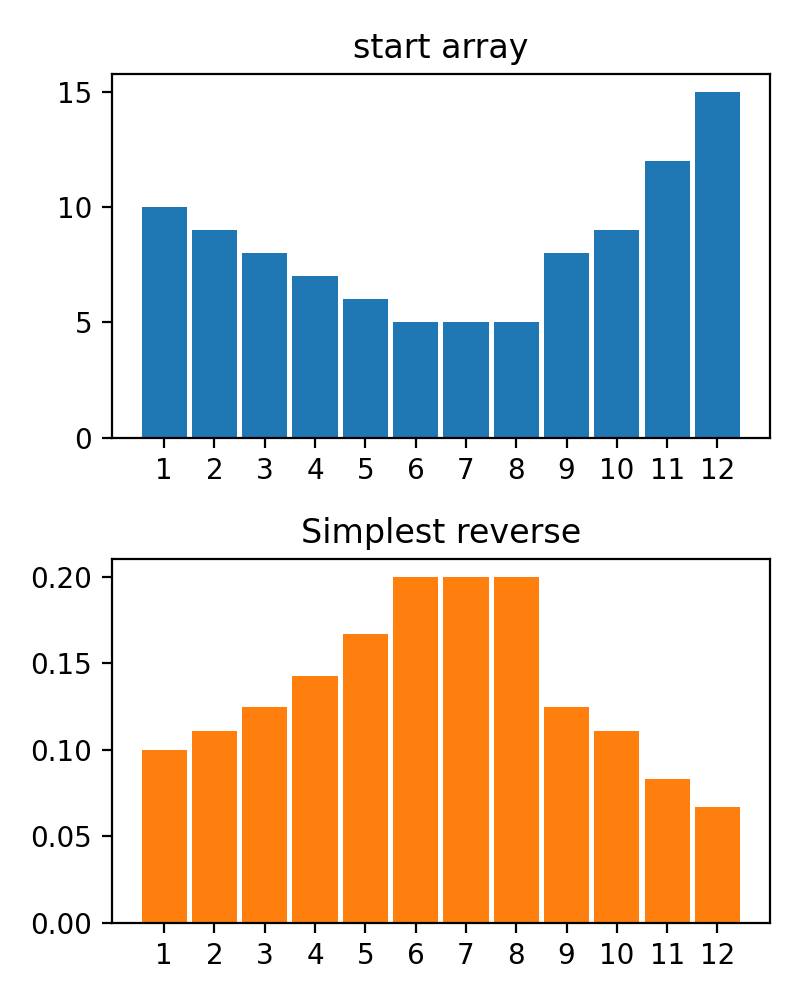

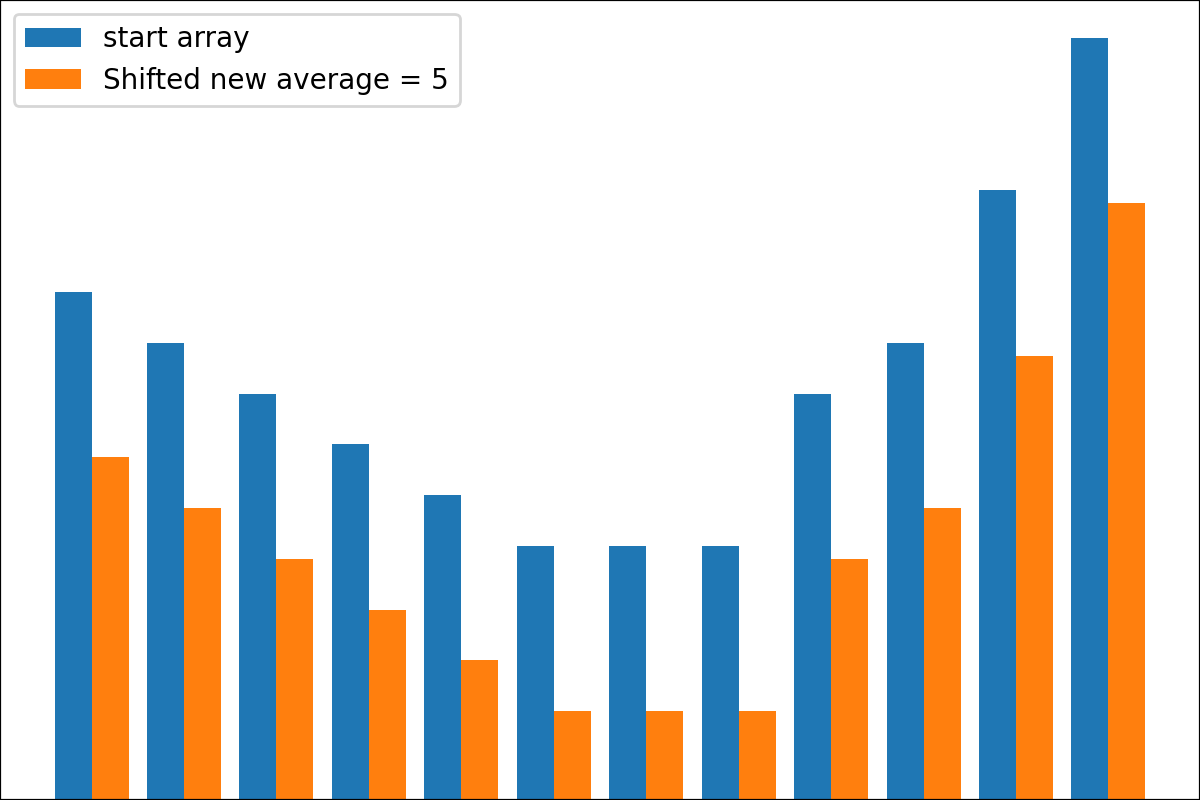

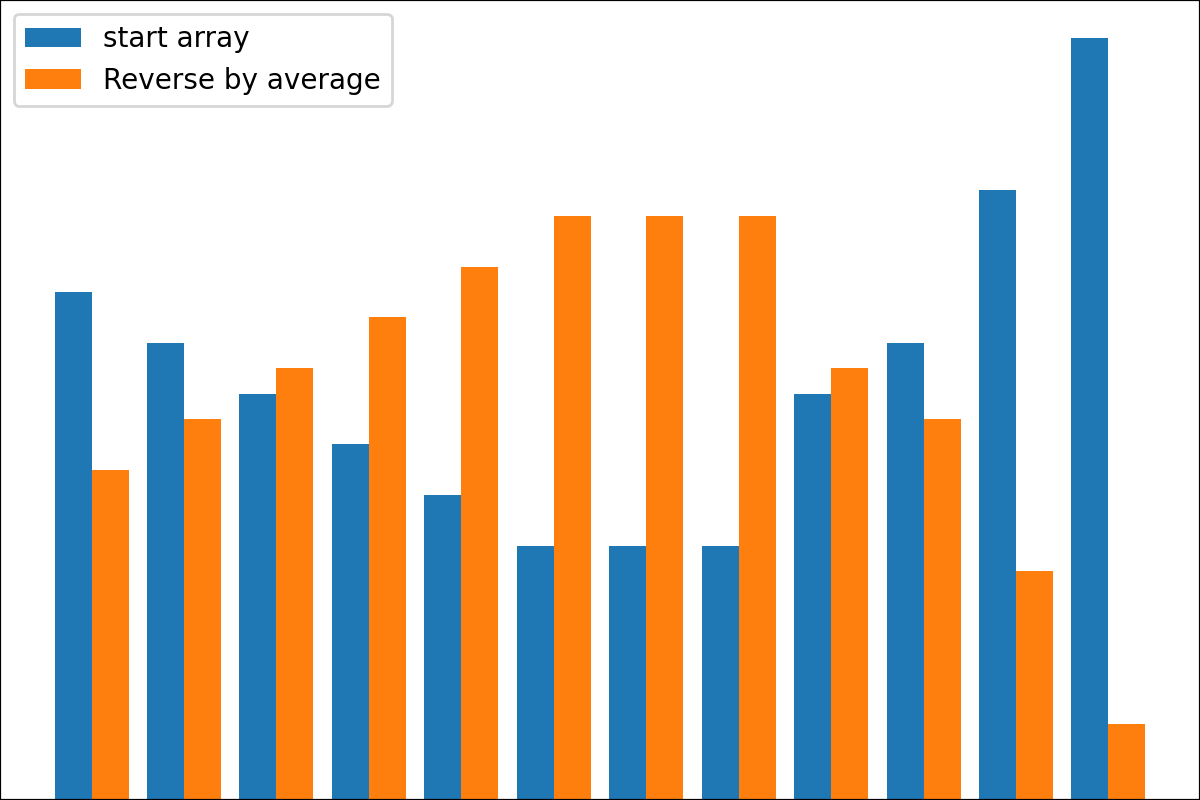

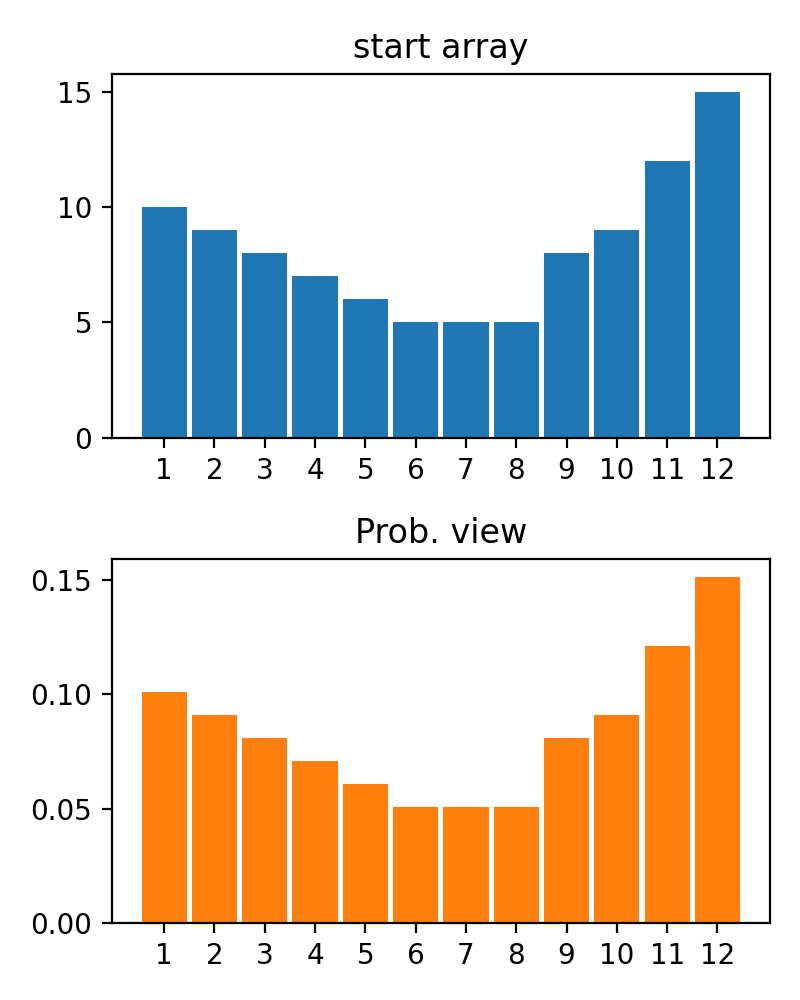

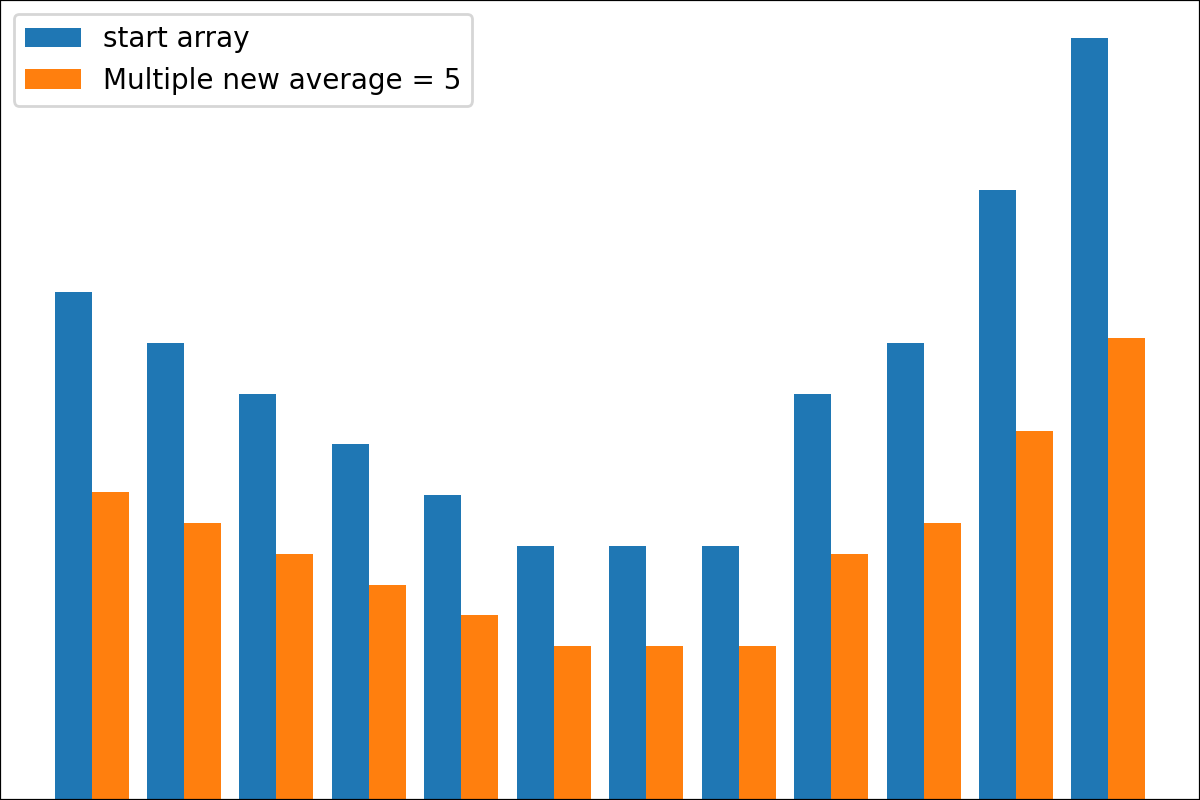

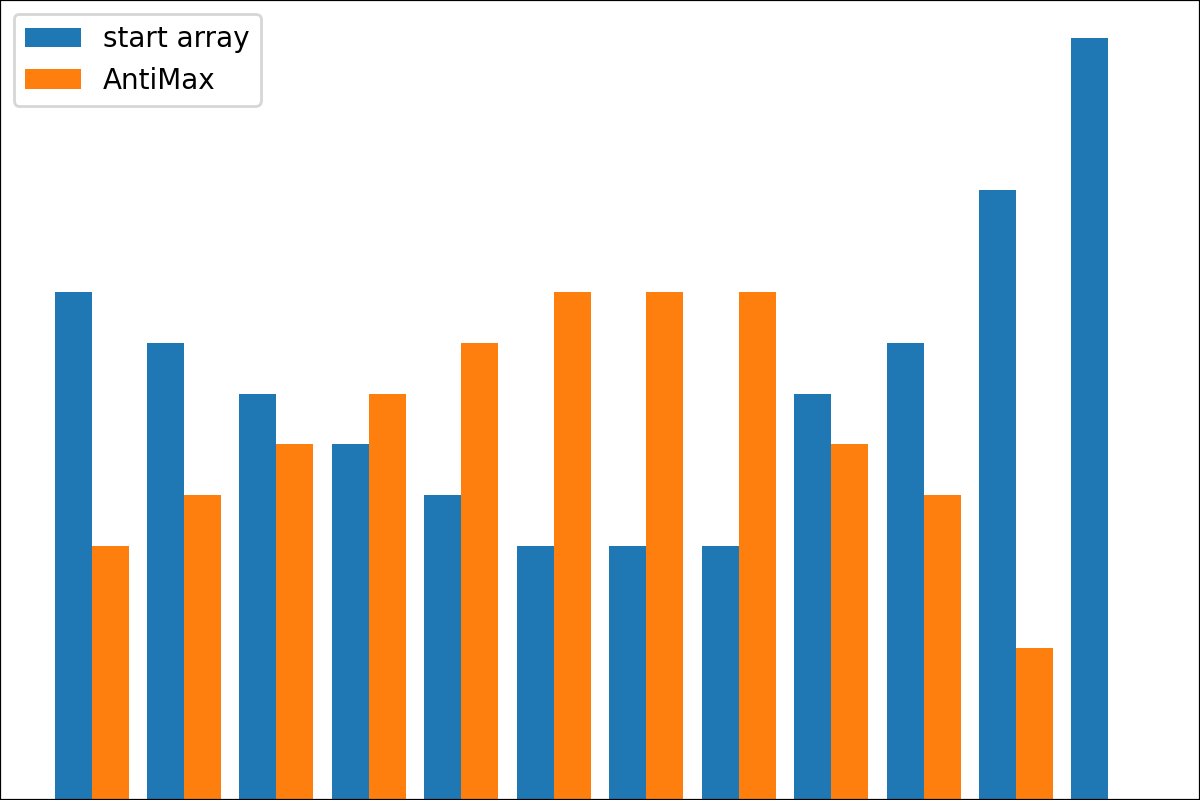

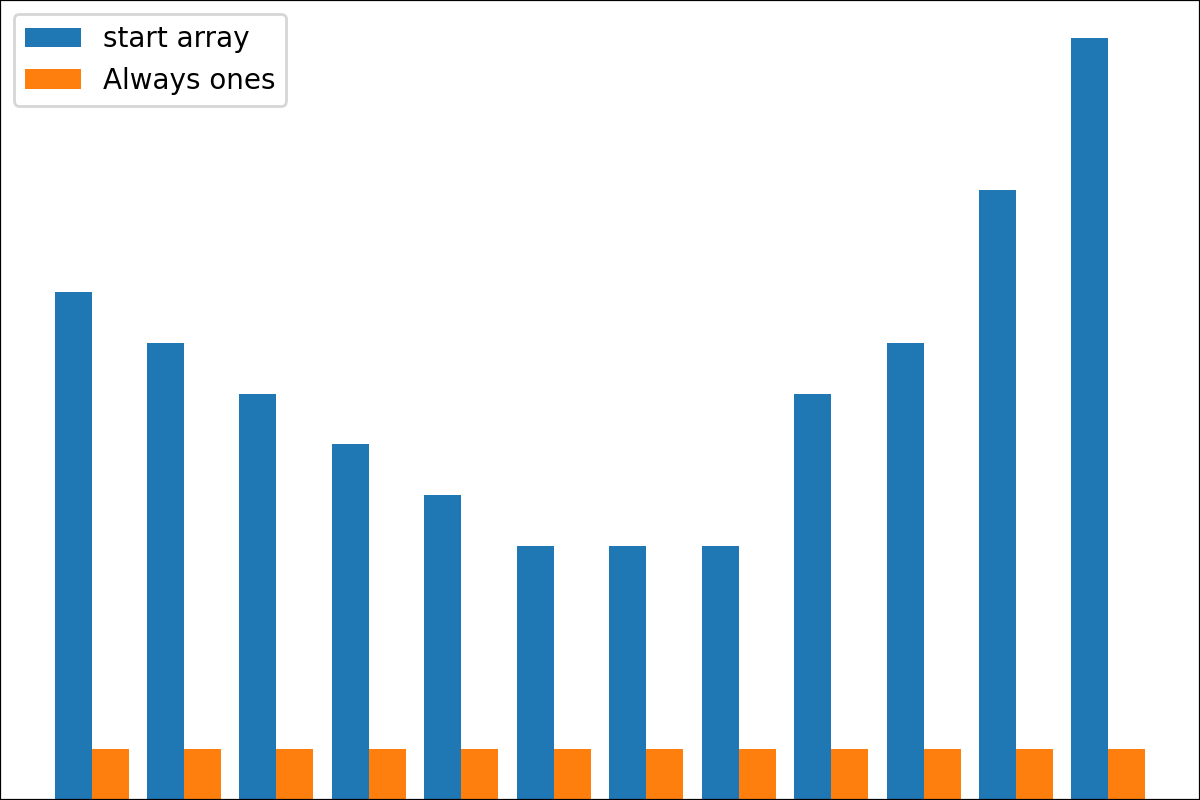

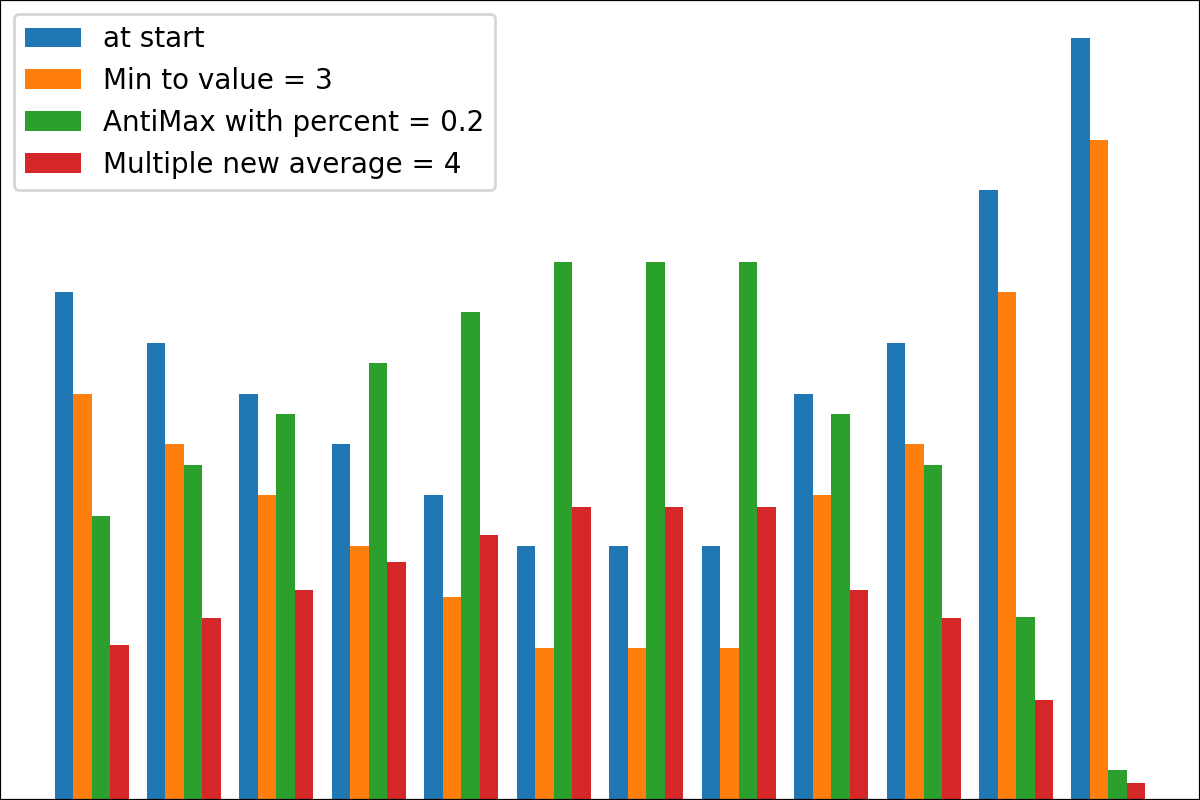

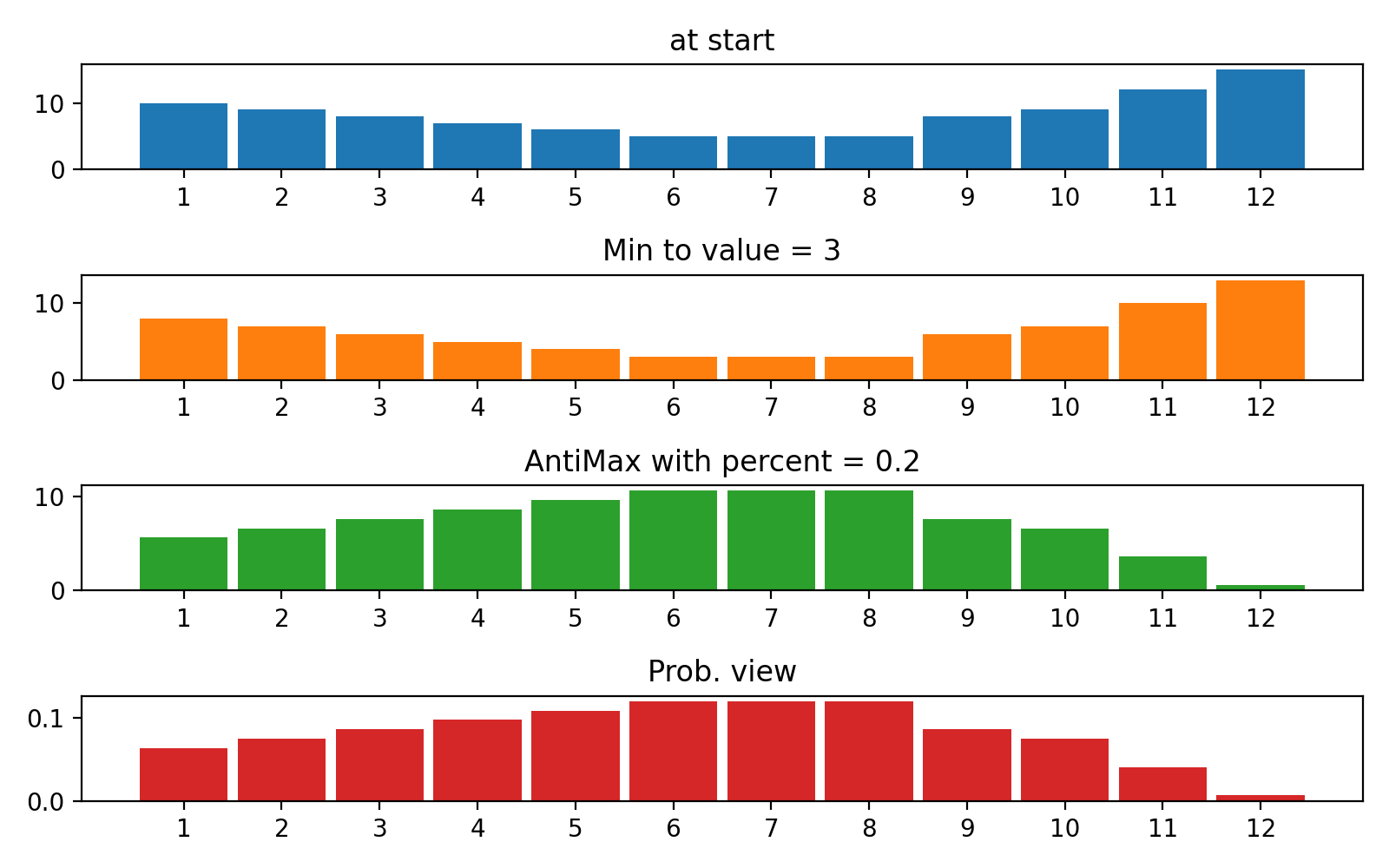

#array([5., 3., 2., 0., 3., 4.])There is plot_scores function for plotting transformation process results. It has arguments:

- `scores`: 2D numpy array with the structure `[start_values, first_transform(start_values), second_transform(first_transform), ...]`, where each object is a 1D array of scores (values/costs/fitnesses).
- `names`: `None` or list of strings, optional. Names of each step, used for the plot labels. The default is `None`.
- `kind`: str, optional. With `'beside'`, each new column is placed beside the previous one; with `'under'`, each new plot is placed under the previous one. The default is `'beside'`.
- `save_as`: `None` or str, optional. File path to save the plot to. The default is `None`.
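The `scores` layout matches the output of `pipe.transform(..., return_all_steps=True)` in the pipeline example: row 0 holds the input and row *k* holds the result of the *k*-th step. The sketch below shows how such a stacked array can be built by applying steps in sequence. `run_pipeline` is a hypothetical helper, not the package's `Pl` implementation, and the lambdas reproduce the `Min2Zero`, `ReverseByAverage` and `AntiMax` arithmetic from that example.

```python
import numpy as np

def run_pipeline(steps, values, return_all_steps=False):
    """Hypothetical helper: apply each step to the previous result, optionally keeping every row."""
    rows = [np.asarray(values, dtype=float)]
    for step in steps:
        rows.append(step(rows[-1]))
    return np.vstack(rows) if return_all_steps else rows[-1]

steps = [
    lambda x: x - x.min(),          # Min2Zero
    lambda x: 2 * x.mean() - x,     # ReverseByAverage
    lambda x: x.max() - x,          # AntiMax
]

scores = run_pipeline(steps, [10, 8, 7, 5, 8, 9], return_all_steps=True)
# scores.shape == (4, 6): [start_values, step 1, step 2, step 3]
```

An array built this way has the structure expected by the `scores` argument.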

I have added basic neural network tools here because simple networks are often needed for reinforcement learning tasks, but common packages like Keras are extremely slow if you only need prediction (forward propagation) for a single sample, many times over. In such cases it is faster to use a simple NumPy-based package.

It was not difficult to reuse the transformer logic for building neural networks, so this package provides the following neural network layers as transformers:

- Activations:
  - `Softmax`
  - `Relu`
  - `LeakyRelu(alpha = 0.01)`
  - `Sigmoid`
  - `Tanh`
  - `ArcTan`
  - `Swish(beta = 0.25)`
  - `Softplus`
  - `Softsign`
  - `Elu(alpha)`
  - `Selu(alpha, scale)`

- Dense layer tools:
  - `Bias(bias_len, bias_array = None)`: adds a bias of length `bias_len`; if `bias_array` is `None`, a random bias is used
  - `MatrixDot(from_size, to_size, matrix_array = None)`
  - `NNStep(from_size, to_size, matrix_array = None, bias_array = None)`: `MatrixDot` and `Bias` together, if you want to create them faster
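What an `NNStep` followed by activations computes can be sketched with textbook formulas. This sketch assumes a row-vector convention (`x @ W + b`, with `W` of shape `(from_size, to_size)`) and the standard activation definitions, e.g. Swish as `x * sigmoid(beta * x)`; the package's own conventions and formulas may differ. All names here are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0)

def leaky_relu(x, alpha=0.01):
    return np.where(x > 0, x, alpha * x)

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def swish(x, beta=0.25):
    return x * sigmoid(beta * x)

def softmax(x):
    e = np.exp(x - x.max())            # subtract the max for numerical stability
    return e / e.sum()

def nn_step(x, matrix, bias):
    """Assumed NNStep semantics: MatrixDot then Bias, mapping from_size -> to_size."""
    return x @ matrix + bias

rng = np.random.default_rng(0)
from_size, to_size = 4, 3
matrix = rng.normal(size=(from_size, to_size))   # random weights for illustration
bias = rng.normal(size=to_size)

x = rng.normal(size=from_size)
probs = softmax(relu(nn_step(x, matrix, bias)))  # one forward pass for a single sample
```

For a single sample this is just one small matrix product and a few elementwise operations, which is why plain NumPy avoids the per-call overhead of a large framework.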

There are also several helper methods for using a pipeline object as a neural network (only for forward propagation, of course):

- Pipeline object methods:
  - `get_shapes()`: returns the list of array shapes needed for the NN
  - `total_weights()`: returns the total number of weights in the NN
  - `set_weights(weights)`: sets the weights of the NN (as a list of arrays with the needed shapes)

- Standalone functions:
  - `arr_to_weigths(arr, shapes)`: converts the 1D array `arr` into a list of arrays with shapes `shapes`, ready to pass to the `set_weights` method
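The point of `get_shapes()`, `total_weights()` and `arr_to_weigths(arr, shapes)` is to let an optimizer (for example, an evolutionary algorithm) work on one flat vector of weights. Below is a minimal sketch of the splitting step, assuming shapes are tuples listed in layer order. `split_flat_weights` and `total_weight_count` are hypothetical re-implementations, not the package functions.

```python
import numpy as np
from math import prod

def total_weight_count(shapes):
    """Number of scalars needed to fill arrays of the given shapes."""
    return sum(prod(shape) for shape in shapes)

def split_flat_weights(arr, shapes):
    """Cut a 1D array into consecutive pieces reshaped to `shapes`."""
    arr = np.asarray(arr)
    if arr.size != total_weight_count(shapes):
        raise ValueError("array length does not match the total number of weights")
    weights, start = [], 0
    for shape in shapes:
        size = prod(shape)
        weights.append(arr[start:start + size].reshape(shape))
        start += size
    return weights

# e.g. a 4 -> 3 matrix followed by a bias of length 3
shapes = [(4, 3), (3,)]
flat = np.arange(total_weight_count(shapes), dtype=float)
matrix, bias = split_flat_weights(flat, shapes)
```

The resulting list has the form that `set_weights(weights)` is described as accepting: a list of arrays with the needed shapes.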

See the simplest example.