
Peihao Xiang, Chaohao Lin, Kaida Wu, and Ou Bai

HCPS Laboratory, Department of Electrical and Computer Engineering, Florida International University

Official TensorFlow implementation and pre-trained VideoMAE models for MultiMAE-DER: Multimodal Masked Autoencoder for Dynamic Emotion Recognition.

Illustration of our MultiMAE-DER.

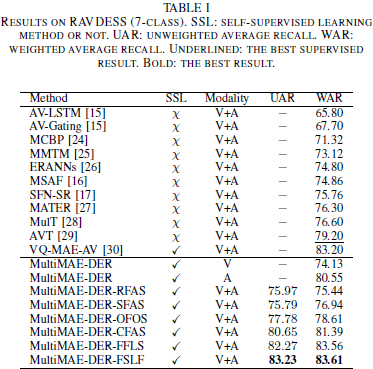

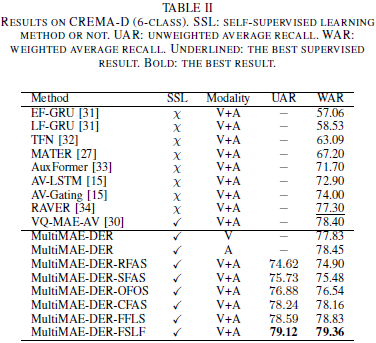

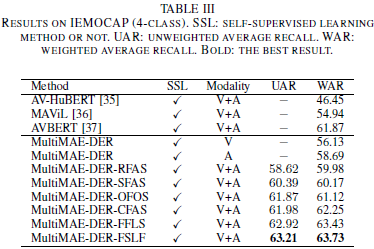

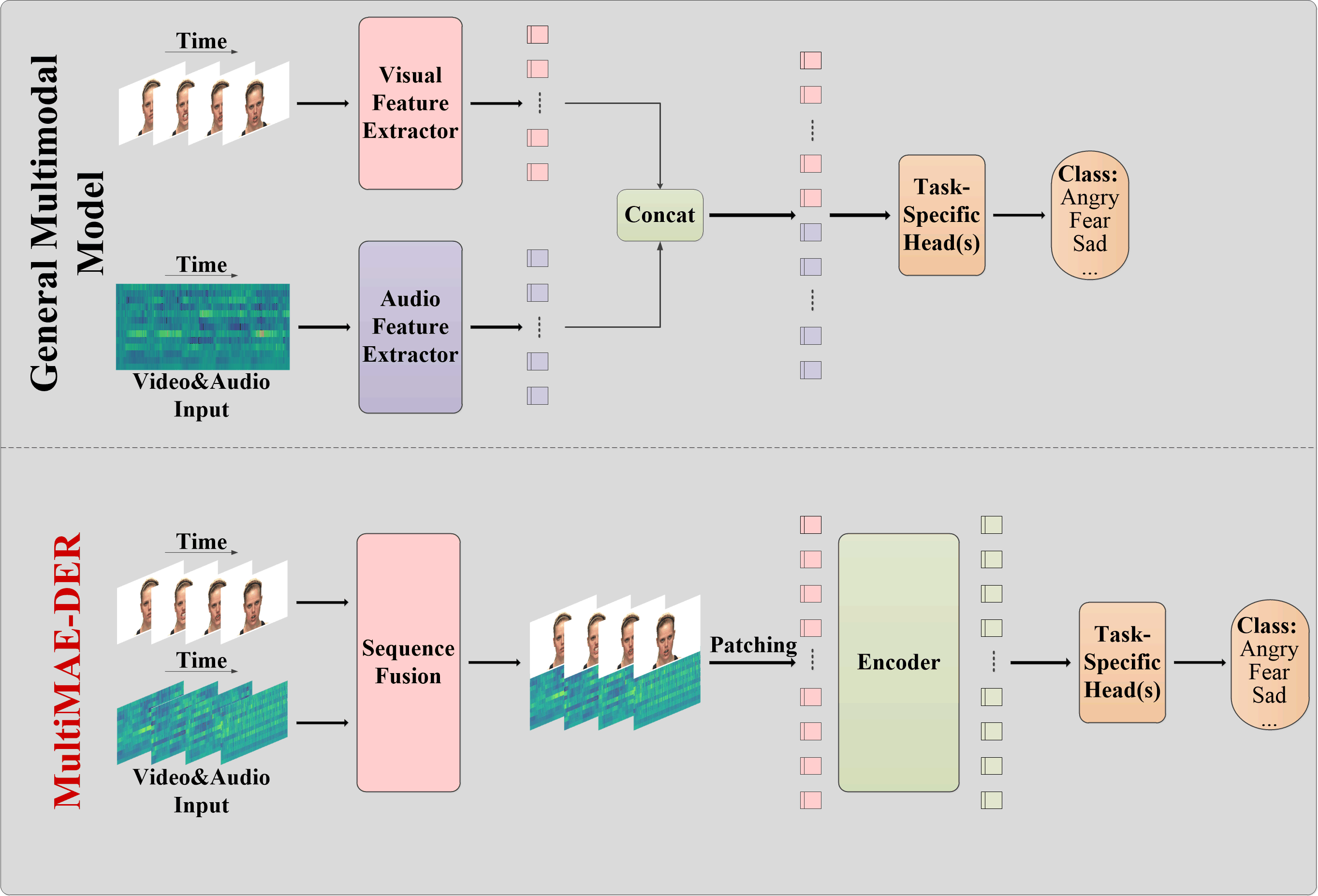

General Multimodal Model vs. MultiMAE-DER. What distinguishes our approach is its ability to extract features from cross-domain data with a single encoder, removing the need for a separate, modality-specific feature extractor for each input modality.
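To make the single-encoder idea concrete, here is a minimal, framework-free sketch. It assumes both modalities have already been turned into token sequences with the same token dimension, and it uses a toy linear map (the hypothetical `shared_encoder`) as a stand-in for the real VideoMAE encoder. The function names and the weight matrix are illustrative only; this is not the repository's TensorFlow code.

```python
from typing import List, Tuple

Vector = List[float]


def shared_encoder(tokens: List[Vector], weight: List[Vector]) -> List[Vector]:
    """Hypothetical stand-in for one encoder: a single linear map applied to every token.

    `weight` is a (d_out x d_in) matrix. The same weights are used whether a
    token came from the visual stream or the audio stream.
    """
    return [[sum(w * x for w, x in zip(row, tok)) for row in weight] for tok in tokens]


def encode_multimodal(
    visual: List[Vector], audio: List[Vector], weight: List[Vector]
) -> Tuple[List[Vector], List[Vector]]:
    """Fuse two token sequences into one and encode them with one shared encoder."""
    if visual and audio and len(visual[0]) != len(audio[0]):
        raise ValueError("both modalities must share the token dimension")
    fused = visual + audio  # one sequence in, one encoder, no per-modality extractor
    features = shared_encoder(fused, weight)
    # Split the output back so each modality's features can be inspected separately.
    return features[: len(visual)], features[len(visual):]


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 2.0]]
    v_feat, a_feat = encode_multimodal([[1.0, 1.0], [2.0, 0.0]], [[0.5, 0.5]], W)
    print(v_feat)  # [[1.0, 2.0], [2.0, 0.0]]
    print(a_feat)  # [[0.5, 1.0]]
```

The design point the sketch tries to capture is that only the input sequence changes between modalities; the encoder weights are shared.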

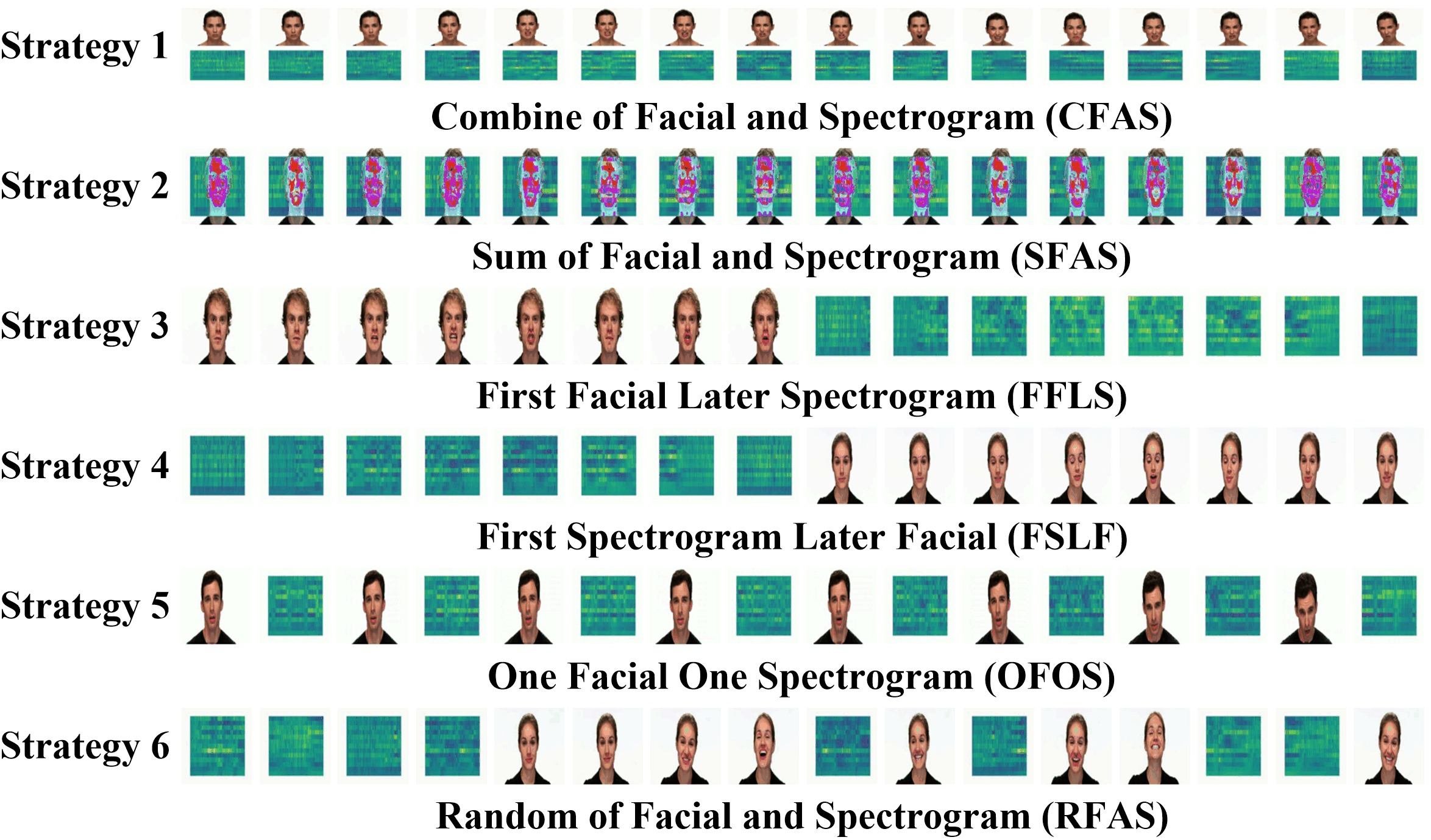

Multimodal Sequence Fusion Strategies.
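The exact fusion strategies compared in the figure are defined in the paper. Purely as a hypothetical illustration of what "sequence fusion" can mean, the sketch below shows two simple orderings of visual and audio frames before they enter a single encoder: placing one modality after the other, and interleaving them frame by frame. The function names are invented for this example and are not taken from the codebase.

```python
from typing import Callable, Dict, List, Sequence, TypeVar

T = TypeVar("T")


def fuse_sequential(visual: Sequence[T], audio: Sequence[T]) -> List[T]:
    """Hypothetical strategy: all visual frames first, then all audio frames."""
    return list(visual) + list(audio)


def fuse_interleaved(visual: Sequence[T], audio: Sequence[T]) -> List[T]:
    """Hypothetical strategy: alternate visual and audio frames.

    If one stream is longer, its remaining frames are appended at the end.
    """
    fused: List[T] = []
    for v, a in zip(visual, audio):
        fused.extend((v, a))
    n = min(len(visual), len(audio))
    fused.extend(visual[n:])
    fused.extend(audio[n:])
    return fused


# Registry so a training script could select a strategy by name (illustrative only).
STRATEGIES: Dict[str, Callable[[Sequence[T], Sequence[T]], List[T]]] = {
    "sequential": fuse_sequential,
    "interleaved": fuse_interleaved,
}

if __name__ == "__main__":
    video = ["v0", "v1", "v2"]
    sound = ["a0", "a1"]
    print(STRATEGIES["sequential"](video, sound))   # ['v0', 'v1', 'v2', 'a0', 'a1']
    print(STRATEGIES["interleaved"](video, sound))  # ['v0', 'a0', 'v1', 'a1', 'v2']
```

Because the encoder sees only one sequence, switching strategies changes the token order (and therefore which frames sit close together in position) without changing the encoder itself.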

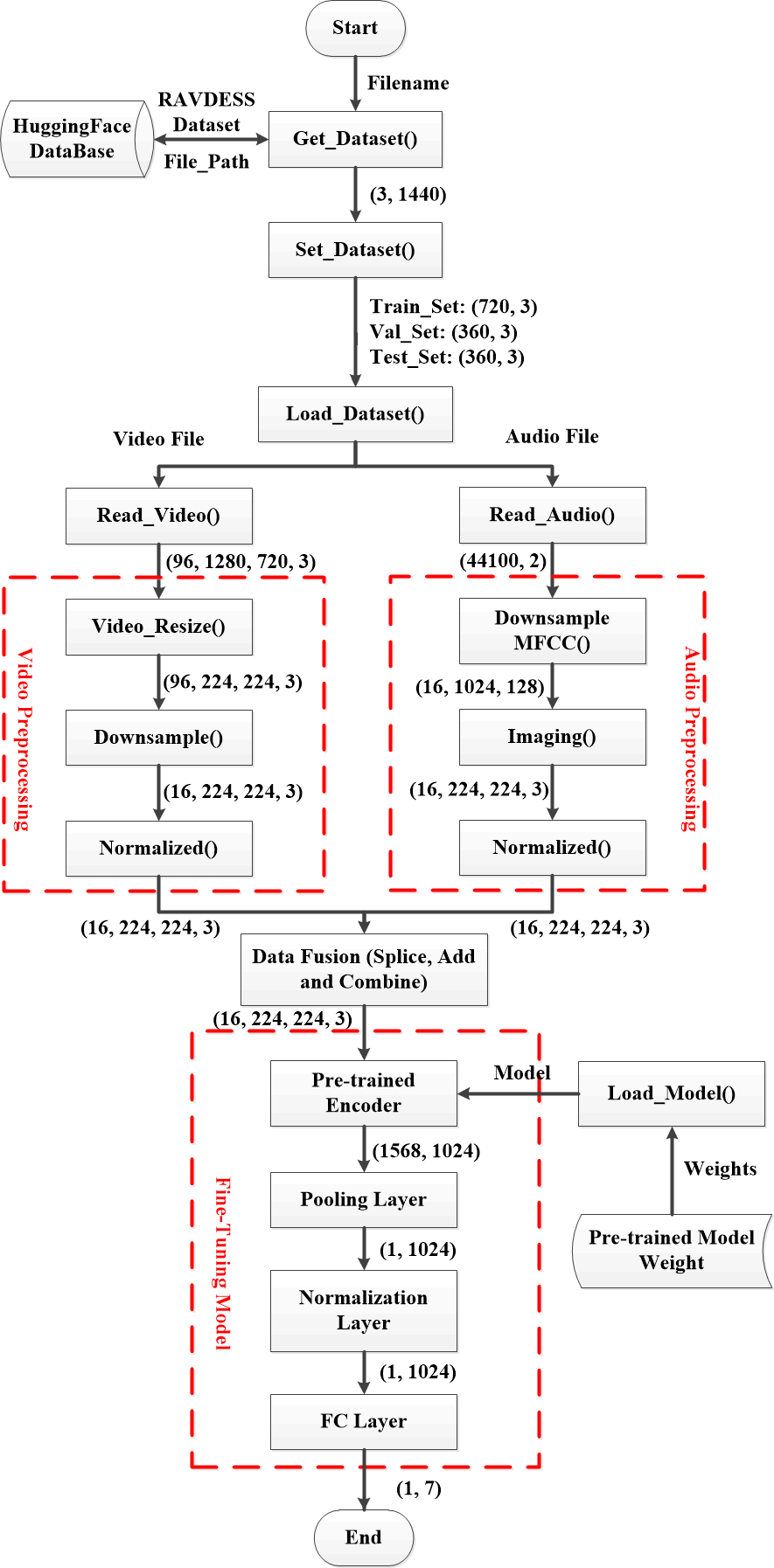

The architecture of MultiMAE-DER.

If you have any questions, please feel free to reach out to me at pxian001@fiu.edu.

This project is built upon VideoMAE and MAE-DFER. Thanks to their authors for the great codebases.

This project is released under the Apache License 2.0. See LICENSE for details.

If you find this repository helpful, please consider citing our work:

@misc{xiang2024multimaeder,
  title={MultiMAE-DER: Multimodal Masked Autoencoder for Dynamic Emotion Recognition},
  author={Peihao Xiang and Chaohao Lin and Kaida Wu and Ou Bai},
  year={2024},
  eprint={2404.18327},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}