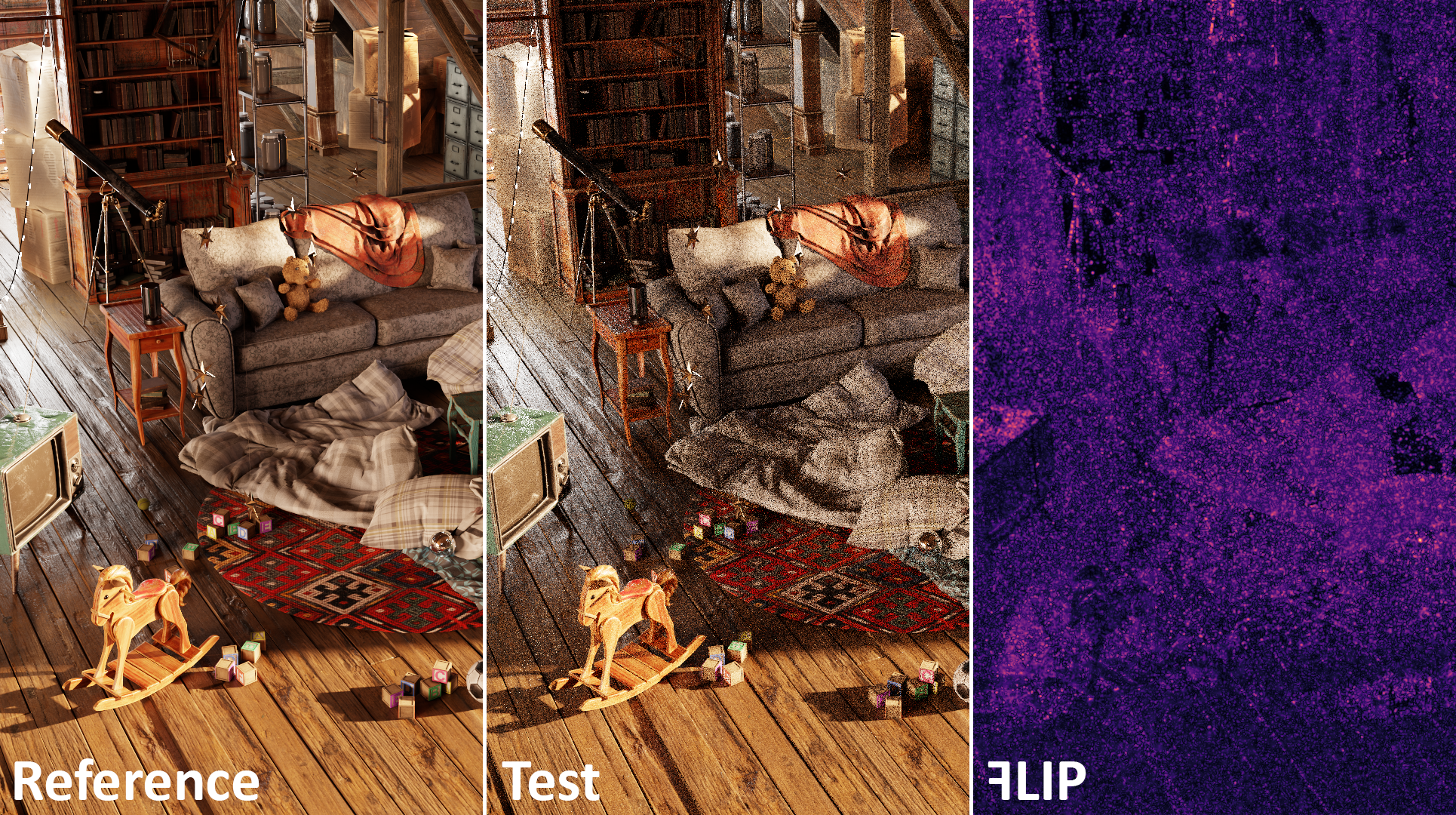

ꟻLIP: A Tool for Visualizing and Communicating Errors in Rendered Images (v1.2)

By Pontus Ebelin, Jim Nilsson, and Tomas Akenine-Möller, with Magnus Oskarsson, Kalle Åström, Mark D. Fairchild, and Peter Shirley.

This repository holds implementations of the LDR-ꟻLIP and HDR-ꟻLIP image error metrics. It also holds code for the ꟻLIP tool, presented in Ray Tracing Gems II.

The changes made for the different versions of ꟻLIP are summarized in the version list.

A list of papers that use/cite ꟻLIP.

A note about the precision of ꟻLIP.

An image gallery displaying a large quantity of reference/test images and corresponding error maps from different metrics.

Version 1.2 implemented separated convolutions for the C++ and CUDA versions of ꟻLIP. A note explaining them can be found here.

License

Copyright © 2020-2023, NVIDIA Corporation & Affiliates. All rights reserved.

This work is made available under a BSD 3-Clause License.

The repository distributes code for tinyexr, which is subject to a BSD 3-Clause License, and stb_image, which is subject to an MIT License.

For individual contributions to the project, please see the Individual Contributor License Agreement.

For business inquiries, please visit our website and submit the form: NVIDIA Research Licensing.

Python (API and Tool)

Setup (with Anaconda3):

conda create -n flip python numpy matplotlib

conda activate flip

conda install -c conda-forge opencv

conda install -c conda-forge openexr-python

Usage:

Remember to activate the flip environment through conda activate flip before using the tool.

python flip.py --reference reference.{exr|png} --test test.{exr|png} [--options]

See the README in the python folder and run python flip.py -h for further information and usage instructions.
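
To evaluate several image pairs, the command above can be driven from a script. The following is a minimal sketch, assuming flip.py is in the current directory and the flip environment is active. The helper names build_flip_command and run_flip and the file names are hypothetical, and only the --reference and --test flags from the usage line are used.

```python
import subprocess
import sys
from pathlib import Path


def build_flip_command(reference, test, script="flip.py"):
    """Return the argument list for one flip.py invocation.

    Only the --reference and --test flags from the usage line are used.
    """
    return [
        sys.executable,  # interpreter of the currently active environment
        str(script),
        "--reference", str(reference),
        "--test", str(test),
    ]


def run_flip(pairs, script="flip.py"):
    """Run flip.py once per (reference, test) pair and collect exit codes."""
    exit_codes = {}
    for reference, test in pairs:
        command = build_flip_command(reference, test, script)
        completed = subprocess.run(command, check=False)
        exit_codes[(str(reference), str(test))] = completed.returncode
    return exit_codes


if __name__ == "__main__":
    # Hypothetical file names; replace with real image paths.
    pairs = [(Path("reference.png"), Path("test.png"))]
    for reference, test in pairs:
        # Print the command that run_flip(pairs) would execute.
        print(" ".join(build_flip_command(reference, test)))
```

Calling run_flip(pairs) executes the commands and returns a dictionary of exit codes keyed by image pair, so failed runs can be spotted without parsing the tool's output.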

C++ and CUDA (API and Tool)

Setup:

The FLIP.sln solution contains one CUDA backend project and one pure C++ backend project.

Compiling the CUDA project requires a CUDA-compatible GPU. Instructions on how to install CUDA can be found here.

Alternatively, a CMake build can be done by creating a build directory and invoking CMake on the source dir:

mkdir build

cd build

cmake ..

cmake --build .

CUDA support is enabled via the FLIP_ENABLE_CUDA option, which can be passed to CMake on the command line with -DFLIP_ENABLE_CUDA=ON or set interactively with ccmake or cmake-gui.

The FLIP_LIBRARY option builds a library rather than an executable.
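
The configure and build steps above can also be scripted. The following is a minimal sketch that assembles the two CMake invocations. The function names cmake_commands and build_flip are hypothetical, and passing -DFLIP_LIBRARY=ON assumes that FLIP_LIBRARY is an ON/OFF option like FLIP_ENABLE_CUDA. The sketch uses CMake's -S and -B flags (available in CMake 3.13 and newer) in place of creating and entering the build directory by hand.

```python
import subprocess


def cmake_commands(source_dir=".", build_dir="build",
                   enable_cuda=False, as_library=False):
    """Return the configure and build commands as argument lists."""
    configure = ["cmake", "-S", source_dir, "-B", build_dir]
    if enable_cuda:
        configure.append("-DFLIP_ENABLE_CUDA=ON")
    if as_library:
        # Assumption: FLIP_LIBRARY is an ON/OFF option.
        configure.append("-DFLIP_LIBRARY=ON")
    build = ["cmake", "--build", build_dir]
    return [configure, build]


def build_flip(**options):
    """Run the configure step, then the build step; raise if either fails."""
    for command in cmake_commands(**options):
        subprocess.run(command, check=True)
```

For example, build_flip(enable_cuda=True) would configure with CUDA enabled and then build in the build directory.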

Usage:

flip[-cuda].exe --reference reference.{exr|png} --test test.{exr|png} [options]

See the README in the cpp folder and run flip[-cuda].exe -h for further information and usage instructions.

PyTorch (Loss Function)

Setup (with Anaconda3):

conda create -n flip_dl python numpy matplotlib

conda activate flip_dl

conda install pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch -c conda-forge

conda install -c conda-forge openexr-python

Usage:

Remember to activate the flip_dl environment through conda activate flip_dl before using the loss function.

LDR- and HDR-ꟻLIP are implemented as loss modules in flip_loss.py. An example where the loss function is used to train a simple autoencoder is provided in train.py.

See the README in the pytorch folder for further information and usage instructions.

Citation

If your work uses the ꟻLIP tool to find the errors between low dynamic range images, please cite the LDR-ꟻLIP paper:

Paper | BibTeX

If it uses the ꟻLIP tool to find the errors between high dynamic range images, instead cite the HDR-ꟻLIP paper:

Paper | BibTeX

Should your work use the ꟻLIP tool in a more general fashion, please cite the Ray Tracing Gems II article:

Chapter | BibTeX

Acknowledgements

We appreciate the following people's contributions to this repository: Jonathan Granskog, Jacob Munkberg, Jon Hasselgren, Jefferson Amstutz, Alan Wolfe, Killian Herveau, Vinh Truong, Philippe Dagobert, and Hannes Hergeth.