This is a PyTorch Tutorial to Super-Resolution.

This is the fifth in a series of tutorials I'm writing about implementing cool models on your own with the amazing PyTorch library.

Basic knowledge of PyTorch, convolutional neural networks is assumed.

If you're new to PyTorch, first read Deep Learning with PyTorch: A 60 Minute Blitz and Learning PyTorch with Examples.

Questions, suggestions, or corrections can be posted as issues.

I'm using PyTorch 1.4 in Python 3.6.

27 Jan 2020: Code is now available for a PyTorch Tutorial to Machine Translation.

To build a model that can realistically increase image resolution.

Super-resolution (SR) models essentially hallucinate new pixels where previously there were none. In this tutorial, we will try to quadruple the dimensions of an image i.e. increase the number of pixels by 16x!

We're going to be implementing Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. It's not just that the results are very impressive... it's also a great introduction to GANs!

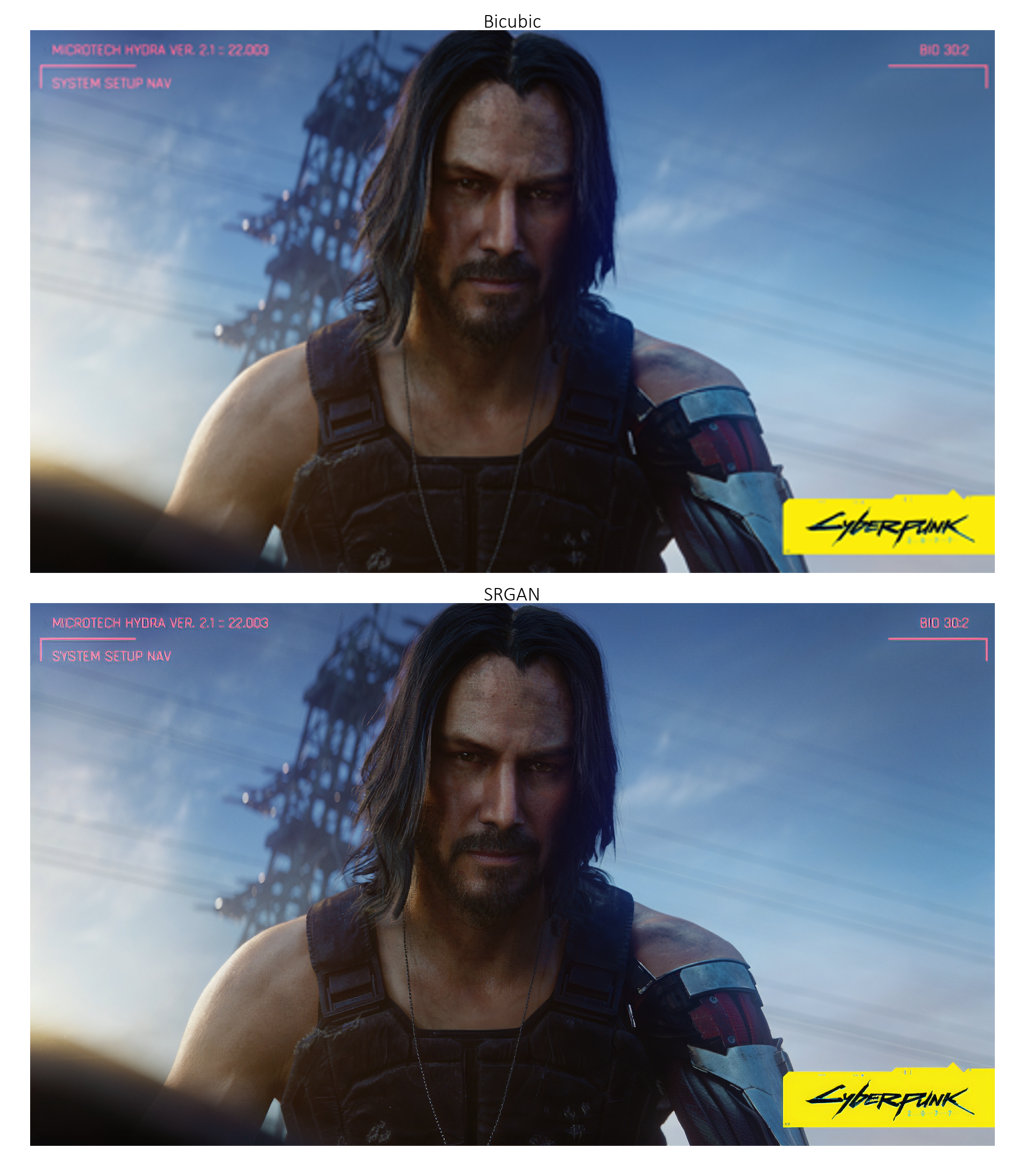

We will train the two models described in the paper — the SRResNet, and the SRGAN which greatly improves upon the former through adversarial training.

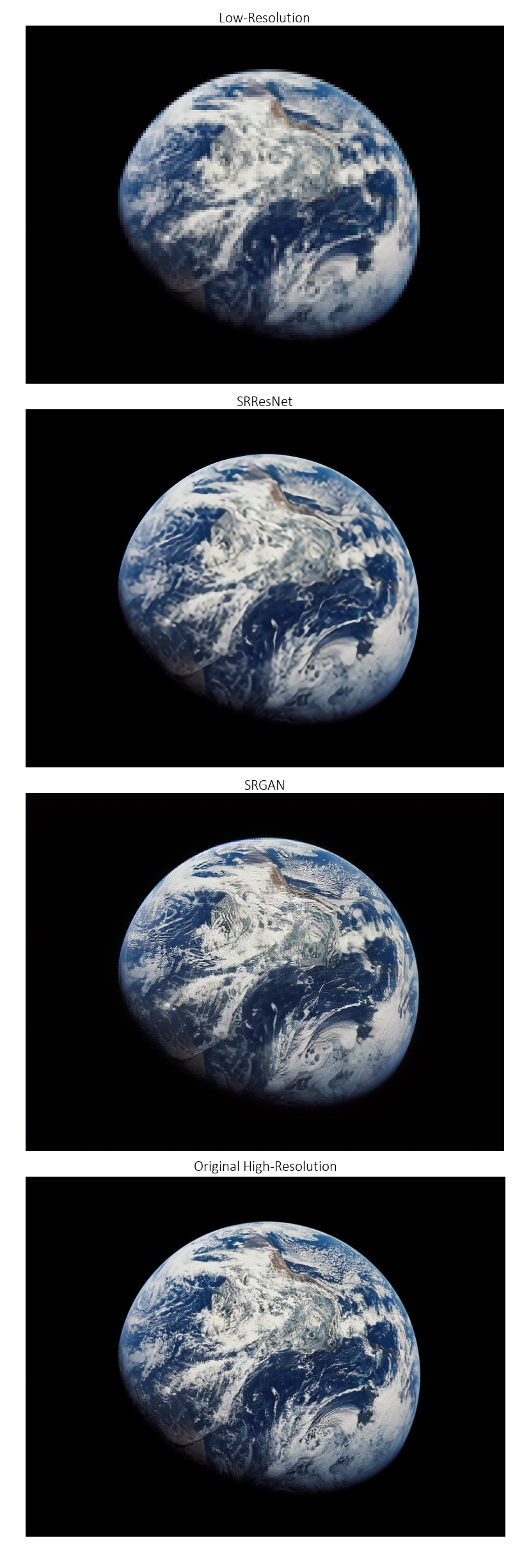

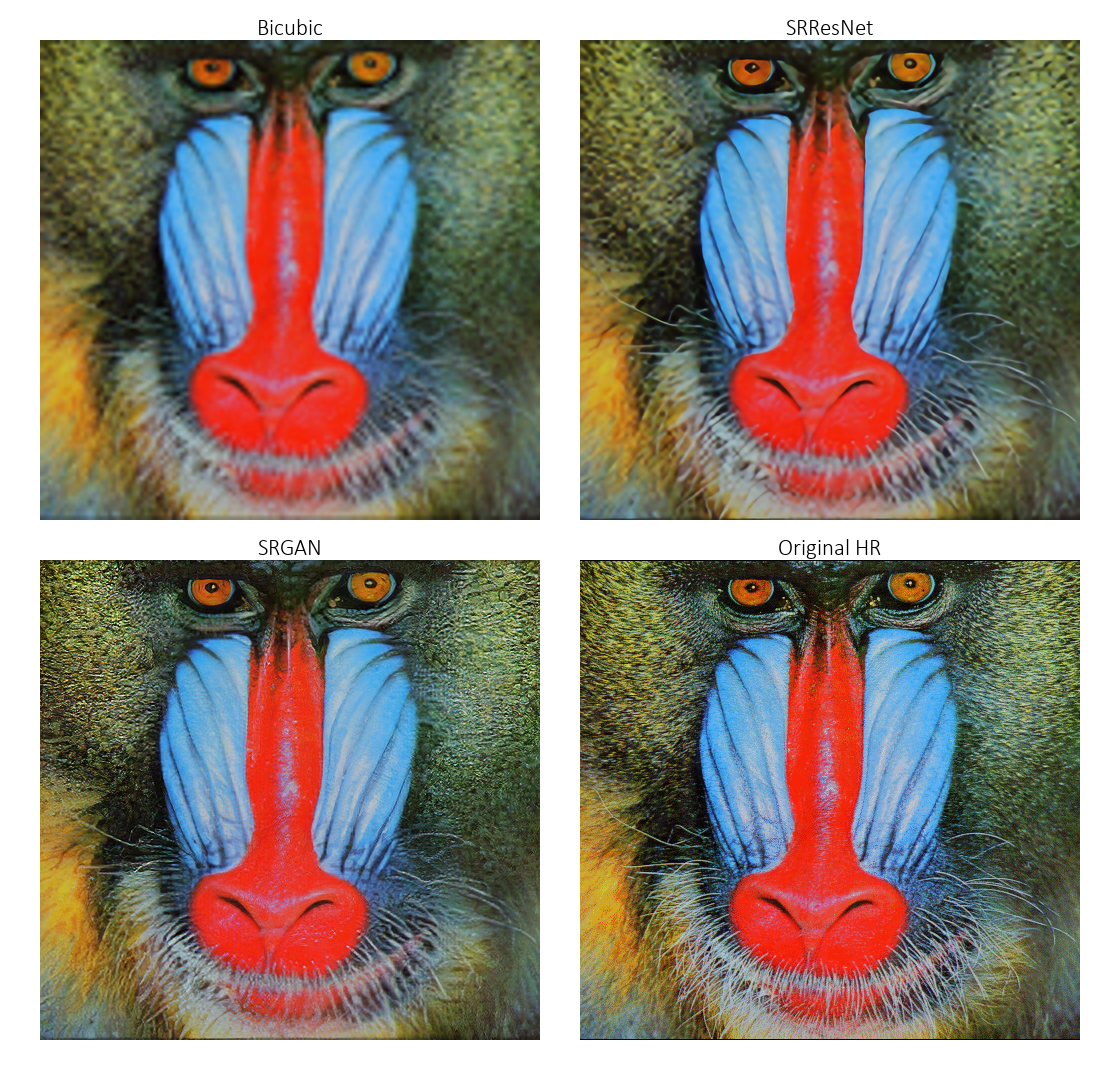

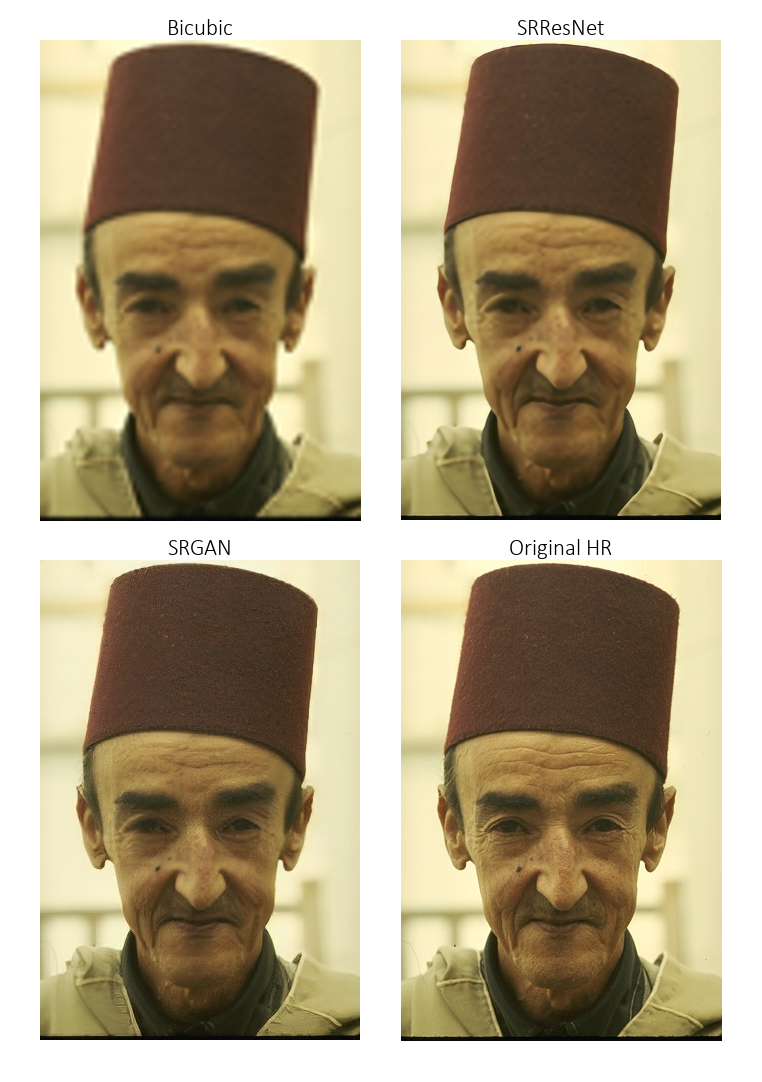

Before you proceed, take a look at some examples generated from low-resolution images not seen during training. Enhance!

Since YouTube's compression is likely reducing the video's quality, you can download the original video file here for best viewing.

Make sure to watch in 1080p so that the 4x scaling is not downsampled to a lower value.

There are large examples at the end of the tutorial.

I am still writing this tutorial.

In the meantime, you could take a look at the code – it works!

Model checkpoints are available here.

While the authors of the paper trained their models on a 350k-image subset of the ImageNet data, I simply used about 120k COCO images (train2014 and val2014 folders). They're a lot easier to obtain. If you wish to do the same, you can download them from the links listed in my other tutorial.

Here are the results (with the paper's results in parantheses):

| PSNR | SSIM | PSNR | SSIM | PSNR | SSIM | |||

|---|---|---|---|---|---|---|---|---|

| SRResNet | 31.927 (32.05) | 0.902 (0.9019) | 28.588 (28.49) | 0.799 (0.8184) | 27.587 (27.58) | 0.756 (0.7620) | ||

| SRGAN | 29.719 (29.40) | 0.859 (0.8472) | 26.509 (26.02) | 0.729 (0.7397) | 25.531 (25.16) | 0.678 (0.6688) | ||

| Set5 | Set5 | Set14 | Set14 | BSD100 | BSD100 |

Erm, huge grain of salt. The paper emphasizes repeatedly that PSNR and SSIM aren't really an indication of the quality of super-resolved images. The less realistic and overly smooth SRResNet images score better than those from the SRGAN. This is why the authors of the paper conduct an opinion score test, which is obviously beyond our means here.

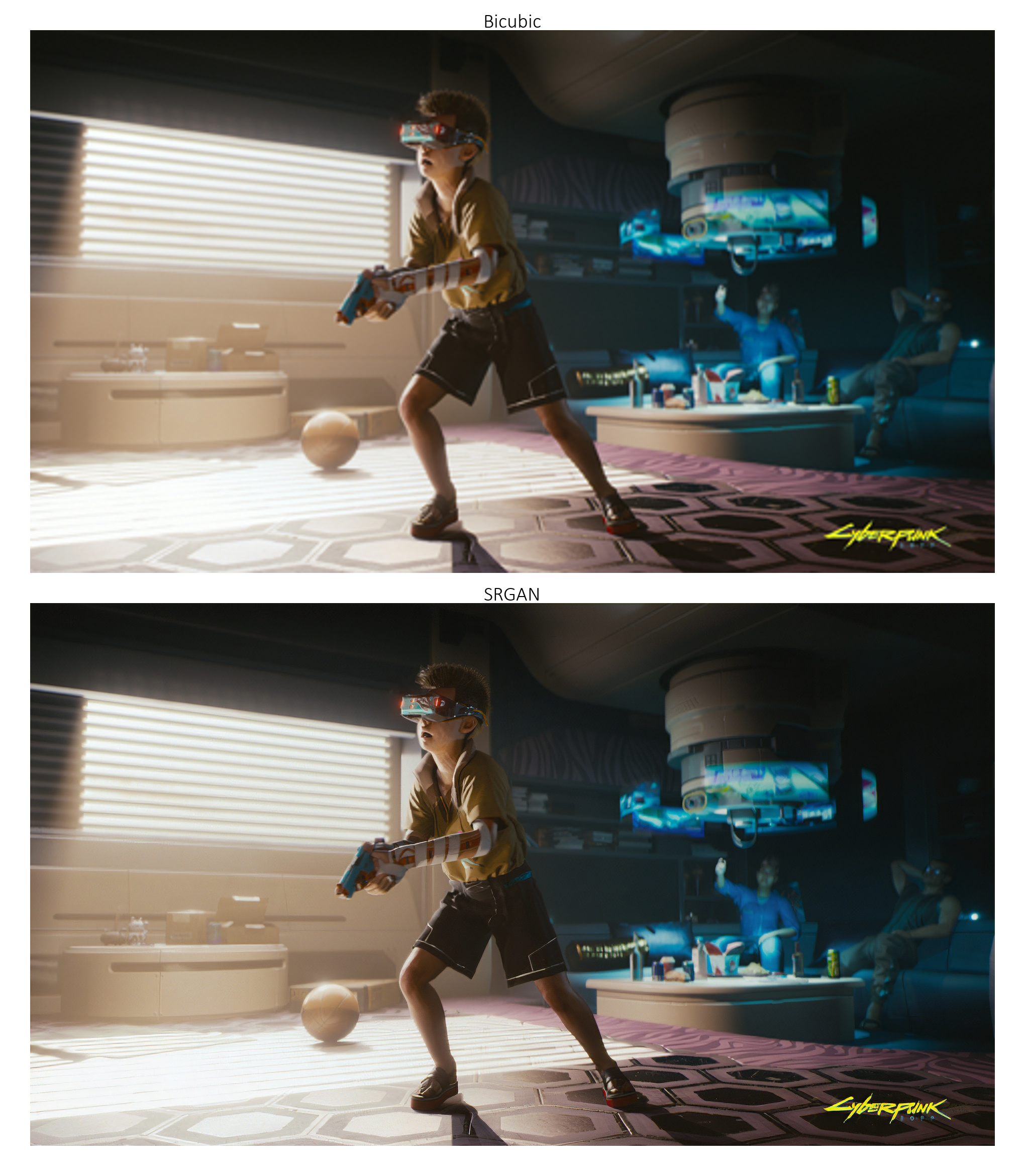

The images in the following examples (from Cyberpunk 2077) are quite large. If you are viewing this page on a 1080p screen, you would need to click on the image to view it at its actual size to be able to effectively see the 4x super-resolution.

Click on image to view at full size.

Click on image to view at full size.

Click on image to view at full size.

Click on image to view at full size.

Click on image to view at full size.

Click on image to view at full size.