3D-aware GAN inversion

FlipInversion_profile.mp4

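```bash
bash exp/tests/setup_env_debug.sh

# Install pytorch3d
git clone https://github.com/facebookresearch/pytorch3d
cd pytorch3d
pip install -e .
cd ..  # go back: the commands below are run from the repository root (PYTHONPATH=.:exp)
```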

Pretrained models: https://github.com/PeterouZh/CIPS-3Dplusplus/releases/download/v1.0.0/pretrained.zip

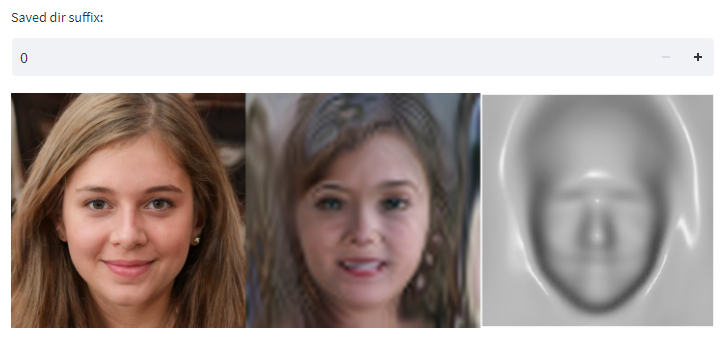
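If you prefer to fetch the checkpoints from Python instead of a browser or `wget`, the standard library is enough. The sketch below is a minimal version; the function names are hypothetical, and unpacking into the repository root is an assumption, so check where the test scripts look for the checkpoints.

```python
import urllib.request
import zipfile
from pathlib import Path
from typing import List

# Release URL copied from the link above.
PRETRAINED_URL = (
    "https://github.com/PeterouZh/CIPS-3Dplusplus/releases/download/v1.0.0/pretrained.zip"
)


def extract_zip(archive: Path, dest: Path) -> List[str]:
    """Unpack `archive` into `dest` and return the archive's member names."""
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        return zf.namelist()


def fetch_pretrained(dest: Path = Path("."), url: str = PRETRAINED_URL) -> List[str]:
    """Download pretrained.zip into `dest` (skipped if already there), then unpack it.

    Assumption: `dest` is the repository root.
    """
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / "pretrained.zip"
    if not archive.exists():
        urllib.request.urlretrieve(url, str(archive))
    return extract_zip(archive, dest)
```

Calling `fetch_pretrained()` from the repository root downloads the archive once and unpacks it next to the code; rerunning it only re-extracts.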

- Sampling multi-view images

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_ffhq_v10;\
Testing_train_cips3d_ffhq_v10().test__sample_multi_view_web(debug=False)" \
  --tl_opts port 8501
```

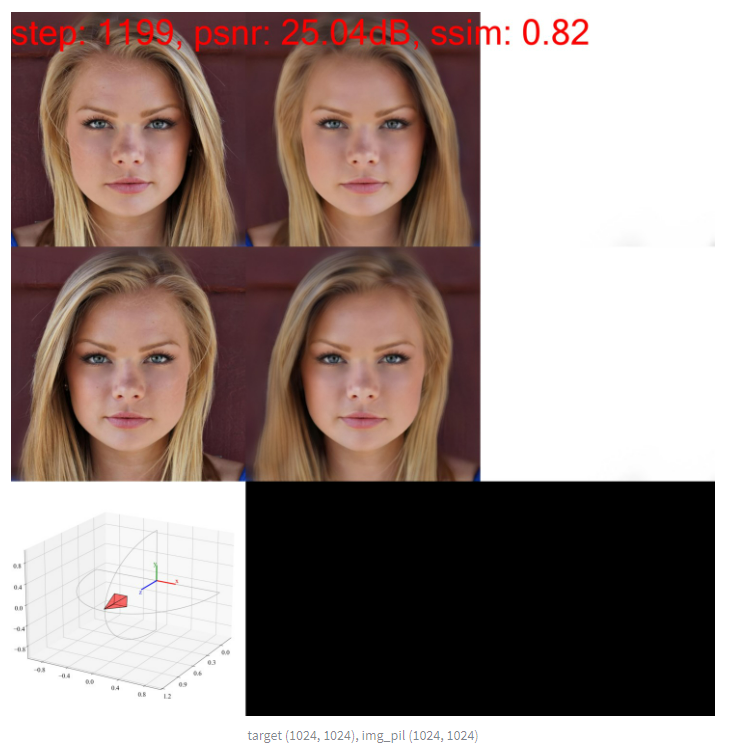
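Every demo in this README is launched the same way: select the GPU, put `.` and `exp` on `PYTHONPATH`, and call one method of a test class through `python -c`. If you want to drive the demos from a Python script, a wrapper could rebuild the same command. This is only a sketch: `build_command`, `build_env` and `run_demo` are illustrative names, not part of the repository.

```python
import os
import subprocess
import sys
from typing import Dict, List, Optional

MODULE = "exp.tests.test_cips3dpp"  # module imported by every command in this README


def build_command(test_class: str, method: str, port: Optional[int] = 8501) -> List[str]:
    """Rebuild the `python -c "..." --tl_opts port N` invocation used in the README."""
    code = f"from {MODULE} import {test_class};{test_class}().{method}(debug=False)"
    cmd = [sys.executable, "-c", code]
    if port is not None:  # the rendering-time test is run without --tl_opts
        cmd += ["--tl_opts", "port", str(port)]
    return cmd


def build_env(gpu: str = "0") -> Dict[str, str]:
    """Copy the current environment and apply the two `export` lines."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = gpu
    env["PYTHONPATH"] = ".:exp"  # relative paths: run from the repository root
    return env


def run_demo(test_class: str, method: str, port: Optional[int] = 8501, gpu: str = "0") -> None:
    """Launch one test; raises CalledProcessError if it exits with an error."""
    subprocess.run(build_command(test_class, method, port), env=build_env(gpu), check=True)
```

For example, `run_demo("Testing_train_cips3d_ffhq_v10", "test__flip_inversion_web")` starts the FFHQ inversion demo; pass `port=None` for `test__rendering_time`, which is called without `--tl_opts`.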

- Inversion

export CUDA_VISIBLE_DEVICES=0

export PYTHONPATH=.:exp

python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_ffhq_v10;\

Testing_train_cips3d_ffhq_v10().test__flip_inversion_web(debug=False)" \

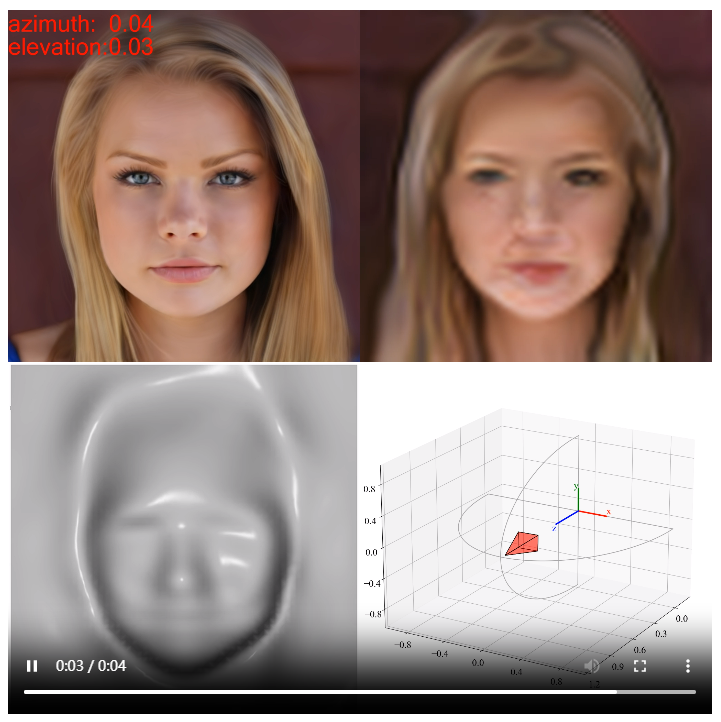

--tl_opts port 8501- Rendering multi-view images Note that in order to render multi-view images, you must first perform inversion (the previous step). When you have done the inversion, you can execute the command below to render multi-view images:

export CUDA_VISIBLE_DEVICES=0

export PYTHONPATH=.:exp

python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_ffhq_v10;\

Testing_train_cips3d_ffhq_v10().test__render_multi_view_web(debug=False)" \

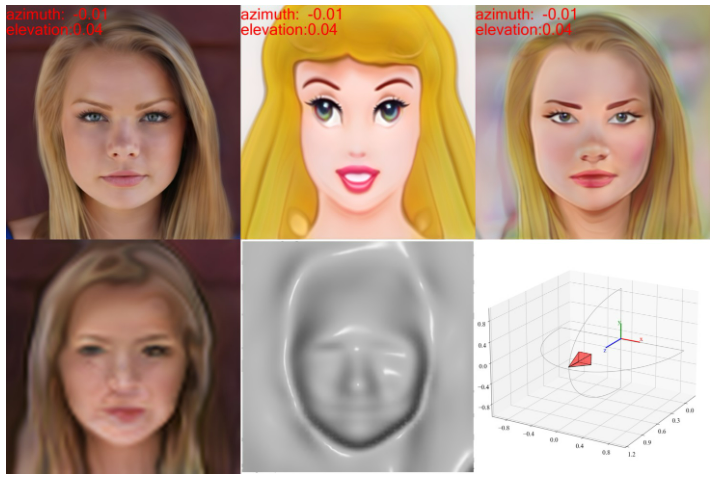

--tl_opts port 8501- Stylization
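Judging by its name, `test__interpolate_decoder_web` stylizes by interpolating between two decoders, for example the FFHQ decoder and the Disney-finetuned one from the finetuning step. That reading is an assumption. The general technique, linear interpolation of weights that share the same parameter names, is sketched below; `interpolate_weights` and the dict-of-weights representation are hypothetical, not the repository's implementation.

```python
from typing import Any, Dict, Mapping


def interpolate_weights(base: Mapping[str, Any], target: Mapping[str, Any],
                        alpha: float) -> Dict[str, Any]:
    """Blend two parameter sets name by name: (1 - alpha) * base + alpha * target.

    Values may be floats or tensors (anything supporting * and +).
    alpha = 0 keeps the base weights, alpha = 1 gives the target weights.
    """
    if set(base) != set(target):
        raise ValueError("both decoders must have the same parameter names")
    return {name: (1.0 - alpha) * base[name] + alpha * target[name] for name in base}
```

With PyTorch, the two mappings would be the decoders' `state_dict()`s and the result would be loaded back with `load_state_dict`; non-floating-point buffers, if any, would need to be copied rather than blended.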

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_ffhq_v10;\
Testing_train_cips3d_ffhq_v10().test__interpolate_decoder_web(debug=False)" \
  --tl_opts port 8501
```

- Style mixing
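In StyleGAN-like generators each layer is modulated by its own style code, and style mixing feeds the early (coarse) layers from one sample and the later (fine) layers from another. Assuming the style-mixing demo follows this scheme, the per-layer crossover can be sketched as below; `mix_styles` is a hypothetical helper, not the repository's code.

```python
from typing import List, Sequence, TypeVar

Style = TypeVar("Style")


def mix_styles(styles_a: Sequence[Style], styles_b: Sequence[Style],
               crossover: int) -> List[Style]:
    """Per-layer style codes: layers [0, crossover) from A, layers [crossover, end) from B."""
    if len(styles_a) != len(styles_b):
        raise ValueError("both samples need one style code per layer")
    if not 0 <= crossover <= len(styles_a):
        raise ValueError("crossover must be between 0 and the number of layers")
    return list(styles_a[:crossover]) + list(styles_b[crossover:])
```

Moving `crossover` towards 0 hands more layers, and thus coarser attributes, to sample B.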

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_ffhq_v10;\
Testing_train_cips3d_ffhq_v10().test__style_mixing_web(debug=False)" \
  --tl_opts port 8501
```

- Time
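The `test__rendering_time` command presumably measures how long rendering takes. If you time rendering in your own scripts, two details matter: warm-up calls keep one-off costs (allocation, kernel selection) out of the measurement, and CUDA calls return before the GPU finishes, so the clock has to wait for the device. A generic sketch follows; `mean_runtime` is a hypothetical helper, not what the test does internally.

```python
import time
from typing import Callable, Optional


def mean_runtime(fn: Callable[[], object], iters: int = 10, warmup: int = 3,
                 sync: Optional[Callable[[], None]] = None) -> float:
    """Average wall-clock seconds per call of `fn`, excluding `warmup` calls.

    Pass `sync=torch.cuda.synchronize` when `fn` launches GPU work, so that
    queued kernels are finished before the clock is read.
    """
    if iters < 1:
        raise ValueError("iters must be positive")
    for _ in range(warmup):
        fn()
    if sync is not None:
        sync()
    start = time.perf_counter()
    for _ in range(iters):
        fn()
    if sync is not None:
        sync()
    return (time.perf_counter() - start) / iters
```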

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_ffhq_v10;\
Testing_train_cips3d_ffhq_v10().test__rendering_time(debug=False)"
```

- Sampling multi-view images (cars, CompCars)

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_compcars_v10;\
Testing_train_cips3d_compcars_v10().test__sample_multi_view_web(debug=False)" \
  --tl_opts port 8501
```

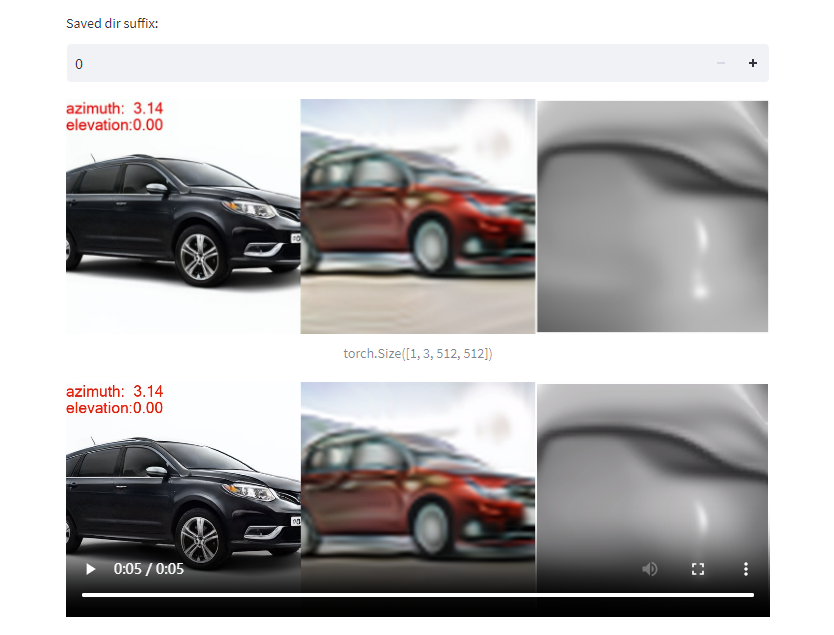

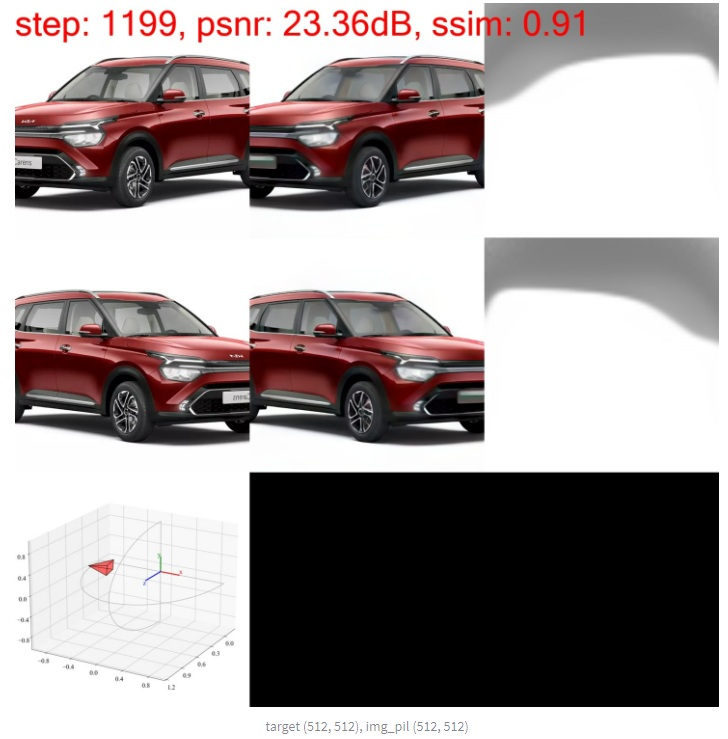
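Multi-view sampling renders one latent code from a series of camera positions. A hypothetical helper that spaces azimuths evenly around a central view is sketched below. The default frontal azimuth of π/2 follows the pi-GAN-style convention and is an assumption, as is the ±π/6 range; adjust both to the camera settings the repository actually uses.

```python
import math
from typing import List


def azimuth_sweep(num_views: int, center: float = math.pi / 2,
                  half_range: float = math.pi / 6) -> List[float]:
    """`num_views` evenly spaced azimuths (radians) over [center - half_range, center + half_range]."""
    if num_views < 1:
        raise ValueError("num_views must be positive")
    if num_views == 1:
        return [center]
    step = 2 * half_range / (num_views - 1)
    return [center - half_range + i * step for i in range(num_views)]
```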

- Inversion

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_compcars_v10;\
Testing_train_cips3d_compcars_v10().test__flip_inversion_web(debug=False)" \
  --tl_opts port 8501
```

Please note that car inversion is only a preliminary experiment and its results are not stable. The main difficulty is estimating the camera pose of the car accurately. We currently mitigate this by setting a suitable initial camera pose for both the original image and the flipped image (see `azim_init`).

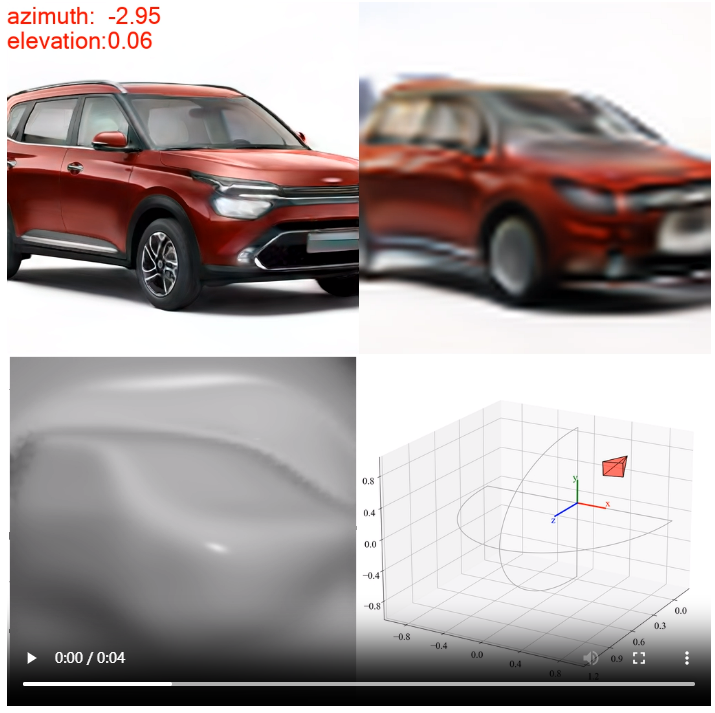
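Why the flipped image needs its own initial pose: for a left-right symmetric object such as a face or a car, the horizontally flipped image looks like the same object seen from the camera mirrored about the object's plane of symmetry. If the original view has azimuth θ measured from the frontal direction, the flipped view corresponds to -θ. The sketch below illustrates this relation under stated assumptions: images are plain nested lists, the frontal azimuth is π/2 (pass `frontal=0.0` for yaw measured from the front), and the helper names are hypothetical, not the repository's `azim_init` handling.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]  # hypothetical H x W grayscale image, stored row by row


def hflip(image: Sequence[Sequence[float]]) -> Image:
    """Mirror an image left-right by reversing every row."""
    return [list(reversed(row)) for row in image]


def mirror_azimuth(azim: float, frontal: float = math.pi / 2) -> float:
    """Reflect a camera azimuth about the frontal direction (the symmetry plane)."""
    return 2.0 * frontal - azim


def flip_views(image: Sequence[Sequence[float]], azim_init: float,
               frontal: float = math.pi / 2) -> List[Tuple[Image, float]]:
    """Original view plus its mirrored pseudo-view, each paired with an initial azimuth."""
    return [
        ([list(row) for row in image], azim_init),
        (hflip(image), mirror_azimuth(azim_init, frontal)),
    ]
```

A poor `azim_init` for the original view is therefore mirrored into an equally poor pose for the flipped view, which is consistent with the note above that camera-pose estimation is the main difficulty for cars.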

- Rendering multi-view images

Multi-view rendering requires a finished inversion (the previous step). Once the inversion is done, run the command below to render multi-view images:

```bash
export CUDA_VISIBLE_DEVICES=0
export PYTHONPATH=.:exp
python -c "from exp.tests.test_cips3dpp import Testing_train_cips3d_compcars_v10;\
Testing_train_cips3d_compcars_v10().test__render_multi_view_web(debug=False)" \
  --tl_opts port 8501
```

- Download the FFHQ dataset (`images1024x1024`): https://github.com/NVlabs/ffhq-dataset

```
tree datasets/ffhq/images1024x1024
datasets/ffhq/images1024x1024
├── 00000
│   ├── 00000.png
│   ├── 00001.png
│   ├── xxx
├── 01000
│   ├── 01000.png
│   ├── 01001.png
└── xxx
```
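The tree follows the standard FFHQ layout: the 70,000 images are grouped into folders of 1,000, and each folder is named after the first index it contains. Before preparing the LMDB dataset you could check that nothing is missing; `ffhq_relpath` and `missing_images` below are hypothetical helpers, not part of the repository.

```python
from pathlib import Path
from typing import List, Union

NUM_IMAGES = 70000  # FFHQ contains 70,000 images


def ffhq_relpath(index: int, ext: str = ".png") -> str:
    """Path of image `index` relative to images1024x1024/ (1,000 images per folder)."""
    if not 0 <= index < NUM_IMAGES:
        raise ValueError(f"index must be in [0, {NUM_IMAGES})")
    folder = (index // 1000) * 1000  # e.g. image 1001 lives in folder 01000
    return f"{folder:05d}/{index:05d}{ext}"


def missing_images(root: Union[str, Path], count: int = NUM_IMAGES) -> List[str]:
    """Expected files (the first `count` indices) that are absent under `root`."""
    root = Path(root)
    expected = (ffhq_relpath(i) for i in range(count))
    return [rel for rel in expected if not (root / rel).is_file()]
```

For example, `missing_images("datasets/ffhq/images1024x1024")` should return an empty list once the download is complete.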

- Preparing lmdb dataset

```bash
bash exp/cips3d/bash/preparing_dataset/prepare_data_ffhq_r1024.sh
```

- Training

```bash
bash exp/cips3d/bash/train_cips3d_ffhq_v10/train_r1024_r64_ks1.sh
```

- Evaluation
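The script name `eval_fid_r1024.sh` suggests that evaluation reports the Fréchet Inception Distance (FID). For reference, FID fits a Gaussian to Inception-v3 features of real images (mean $\mu_r$, covariance $\Sigma_r$) and of generated images ($\mu_g$, $\Sigma_g$), and measures the distance between the two; lower is better:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$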

```bash
bash exp/cips3d/bash/train_cips3d_ffhq_v10/eval_fid_r1024.sh
```

- Download Disney cartoon dataset

```
tree datasets/Disney_cartoon/cartoon
datasets/Disney_cartoon/cartoon/
├── Cartoons_00002_01.jpg
├── Cartoons_00003_01.jpg
├── xxx
```
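The downloaded cartoon folder is flat, and the `rm`/`pushd`/`mkdir`/`mv` steps in the preparation item move the JPEGs into an `images/` subfolder before the LMDB script runs. If you would rather script that reorganisation in Python, a sketch could look like this; `organize_cartoon_dir` is a hypothetical name and the function only mirrors those shell steps.

```python
import shutil
from pathlib import Path
from typing import Union


def organize_cartoon_dir(root: Union[str, Path]) -> int:
    """Python version of the rm/mkdir/mv steps; returns how many JPEGs were moved."""
    root = Path(root)
    # rm -rf .ipynb_checkpoints/ (stray Jupyter folder)
    shutil.rmtree(root / ".ipynb_checkpoints", ignore_errors=True)
    # mkdir images (no error if it already exists)
    images = root / "images"
    images.mkdir(exist_ok=True)
    # mv *jpg images/ (list the files first so moving them does not disturb the glob)
    jpgs = sorted(root.glob("*jpg"))
    for jpg in jpgs:
        shutil.move(str(jpg), str(images / jpg.name))
    return len(jpgs)
```

Running `organize_cartoon_dir("datasets/Disney_cartoon/cartoon")` replaces the shell steps up to `popd`; the `prepare_data_disney_r1024.sh` script still has to be run afterwards.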

- Preparing lmdb dataset

```bash
rm -rf datasets/Disney_cartoon/cartoon/.ipynb_checkpoints/
pushd datasets/Disney_cartoon/cartoon/
mkdir images
mv *jpg images/
popd
bash exp/cips3d/bash/preparing_dataset/prepare_data_disney_r1024.sh
```

- Finetuning

```bash
bash exp/cips3d/bash/train_cips3d_ffhq_v10/finetune_r1024_r64_ks1_disney.sh
```