I may update this repository later.

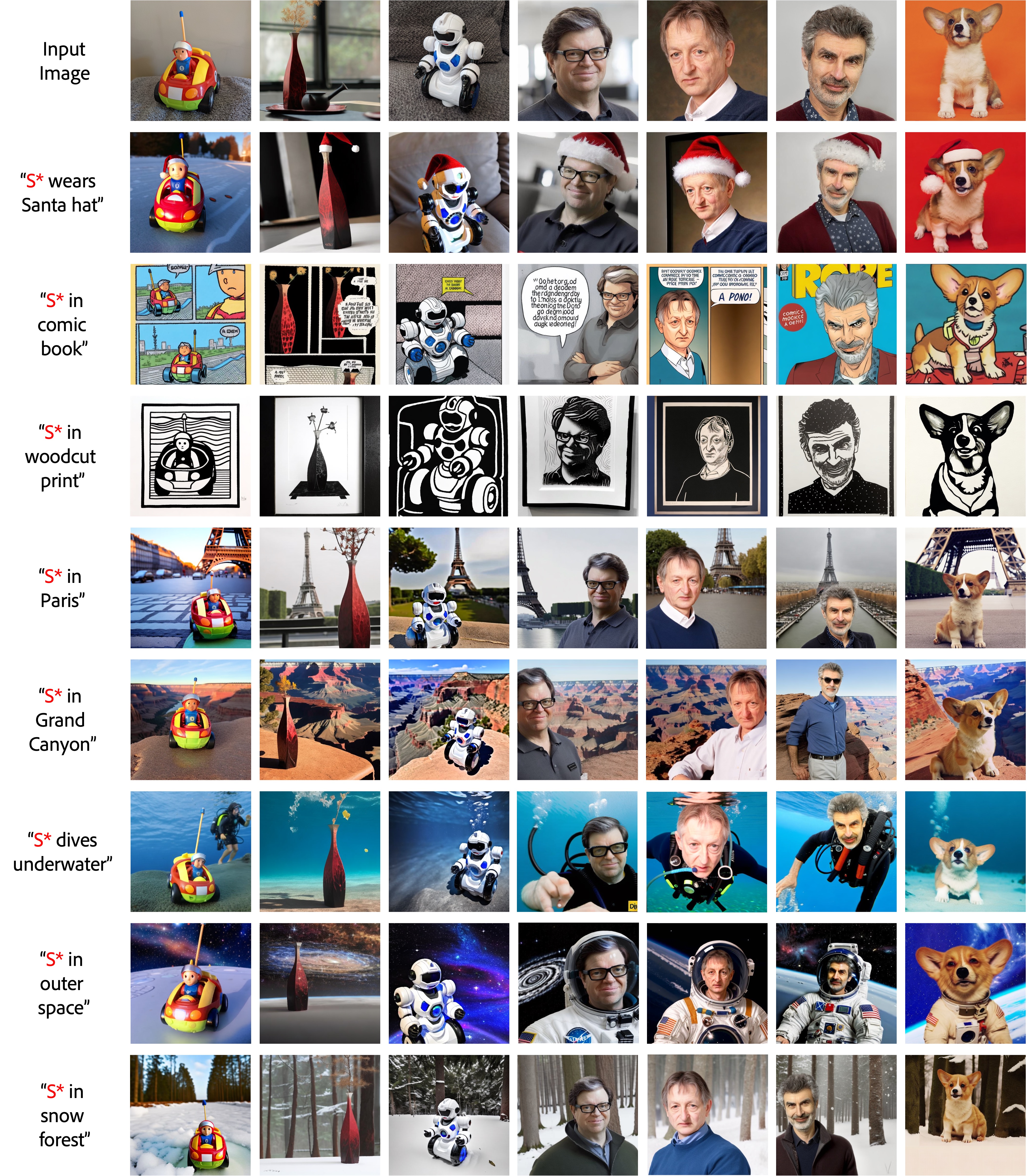

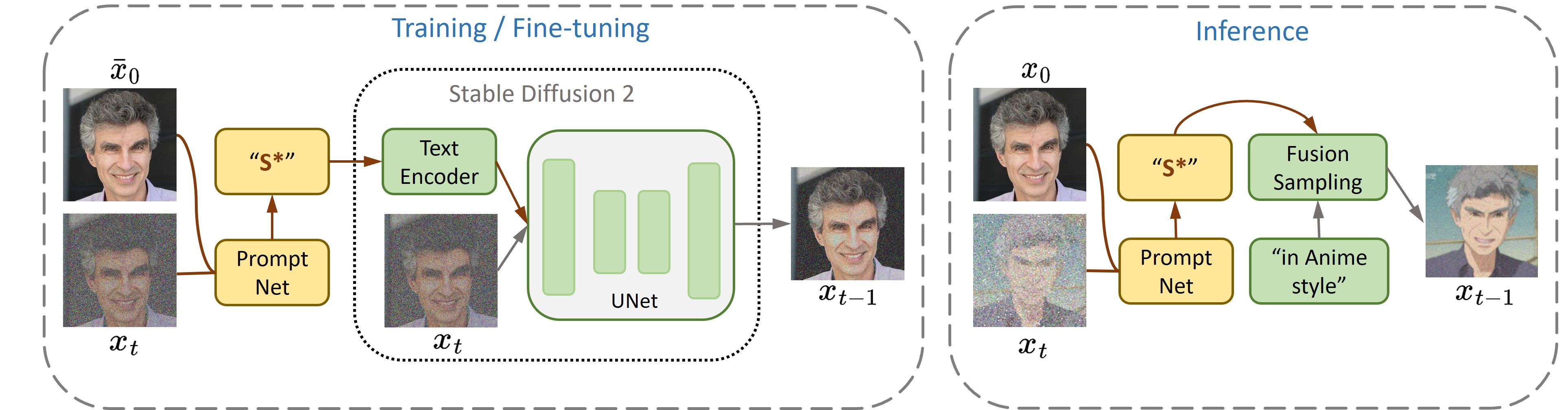

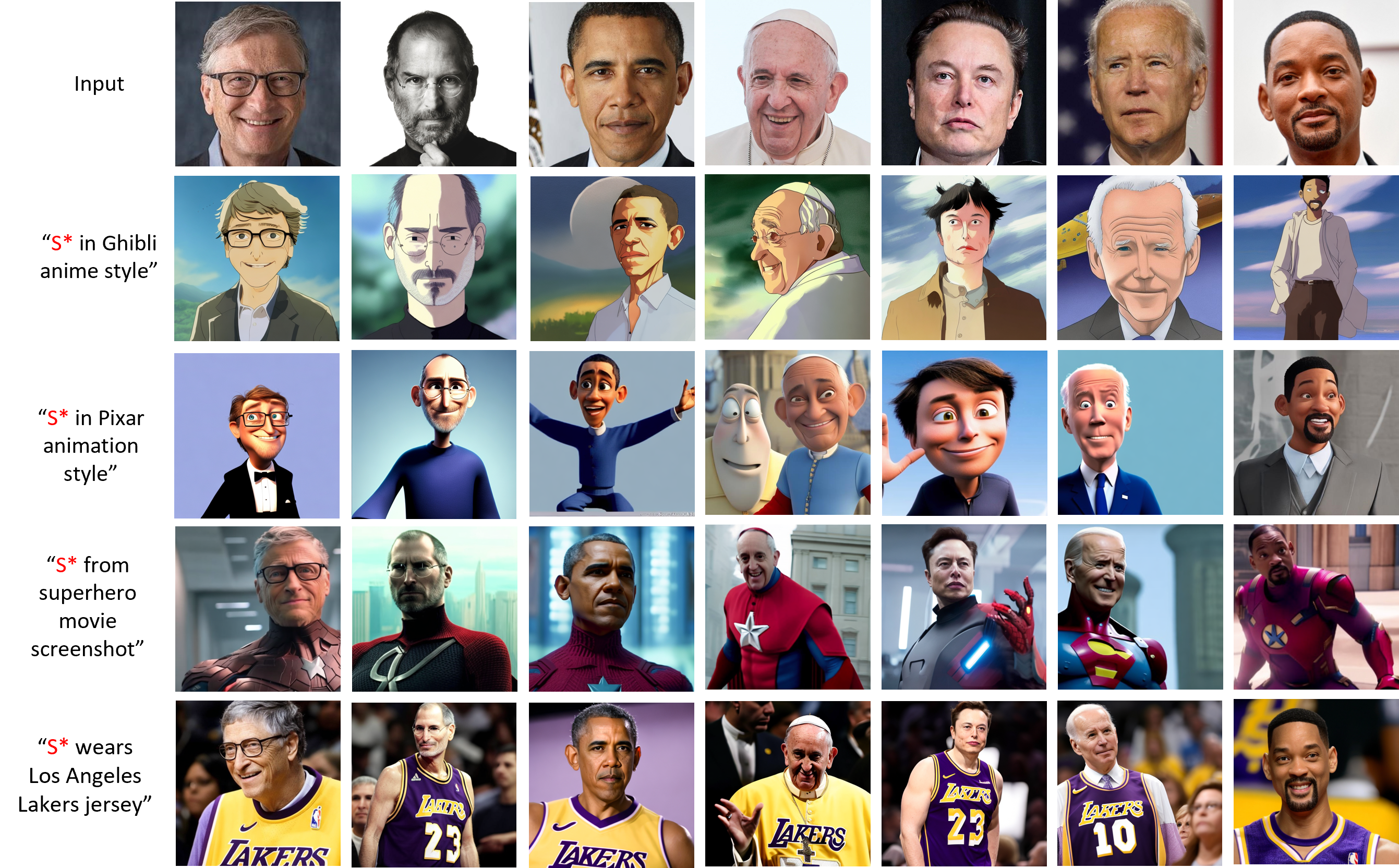

ProFusion is a framework for customizing pre-trained large-scale text-to-image generation models; our examples use Stable Diffusion 2.

With ProFusion, you can generate an unlimited number of creative images of a novel or unique concept from a single test image, on a single GPU (about 20GB of GPU memory is needed when fine-tuning with batch size 1).
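Before fine-tuning, it can help to confirm that your GPU has roughly the 20GB mentioned above. The sketch below is not part of ProFusion: it assumes PyTorch is installed, treats 20 GiB as a rough threshold (the README's figure is approximate), and the helper names `gpu_memory_bytes` and `meets_requirement` are hypothetical.

```python
def meets_requirement(total_bytes: int, required_gb: float = 20.0) -> bool:
    """True if a device with `total_bytes` of memory has at least `required_gb` GiB."""
    return total_bytes / 1024**3 >= required_gb


def gpu_memory_bytes(device_index: int = 0):
    """Total memory of a CUDA device in bytes, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(device_index).total_memory


if __name__ == "__main__":
    total = gpu_memory_bytes()
    if total is None:
        print("No CUDA device found (or PyTorch is not installed).")
    else:
        status = "OK" if meets_requirement(total) else "probably too small"
        print(f"GPU memory: {total / 1024**3:.1f} GiB ({status} for batch-size-1 fine-tuning)")
```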


Install the dependencies (we use a revised version of the original diffusers library, included in `./diffusers`):

```bash
cd ./diffusers
pip install -e ".[torch]"
cd ..
pip install accelerate==0.16.0 torchvision transformers==4.25.1 datasets ftfy tensorboard Jinja2 regex tqdm joblib
```
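Because `accelerate` and `transformers` are pinned to specific versions, a quick check can catch a mismatched environment. This is a minimal sketch using only the standard library's `importlib.metadata`; the helper name `check_pins` is hypothetical, and the pins are copied from the install command.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions pinned in the install command.
PINNED = {"accelerate": "0.16.0", "transformers": "4.25.1"}


def check_pins(pins, lookup=version):
    """Return {package: problem} for every package that is missing or at the wrong version."""
    problems = {}
    for package, wanted in pins.items():
        try:
            installed = lookup(package)
        except PackageNotFoundError:
            problems[package] = "not installed"
            continue
        if installed != wanted:
            problems[package] = f"found {installed}, expected {wanted}"
    return problems


if __name__ == "__main__":
    issues = check_pins(PINNED)
    for package, problem in issues.items():
        print(f"{package}: {problem}")
    if not issues:
        print("All pinned packages match.")
```

The `lookup` parameter defaults to the real `importlib.metadata.version`, but can be swapped for a stub when testing the check itself.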

Initialize Accelerate:

```bash
accelerate config
```
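`accelerate config` asks a few questions interactively and saves the answers to a YAML file that `accelerate launch` reads later. To our understanding, Accelerate's default location is `default_config.yaml` under `$HF_HOME/accelerate`, where `HF_HOME` falls back to `$XDG_CACHE_HOME/huggingface` and then `~/.cache/huggingface`; treat this layout as an assumption and check your Accelerate version if the file is not found. The helper name `default_accelerate_config` is hypothetical.

```python
import os


def default_accelerate_config(env=None):
    """Path where `accelerate config` is assumed to write its answers."""
    env = os.environ if env is None else env
    cache_root = env.get("XDG_CACHE_HOME", "~/.cache")
    hf_home = env.get("HF_HOME", os.path.join(cache_root, "huggingface"))
    return os.path.join(os.path.expanduser(hf_home), "accelerate", "default_config.yaml")


if __name__ == "__main__":
    path = default_accelerate_config()
    state = "exists" if os.path.isfile(path) else "not found (run `accelerate config` first)"
    print(f"{path}: {state}")
```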

Download a model pre-trained on FFHQ.


Customize the model with a test image; an example is shown in the notebook `test.ipynb`.

If you want to train a PromptNet encoder for other domains or on your own dataset, follow the steps below.


First, prepare an image-only dataset:

- In our example, we use FFHQ. Our pre-processed FFHQ is available via a Google Drive link.
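An image-only dataset is simply a folder of images with no captions or labels. The training command points `--train_data_dir` at `./images_512`; assuming (from the folder name and the 512-pixel `stable-diffusion-2-base` model) that training expects 512x512 images, the sketch below collects image files from a folder and, if Pillow is installed, center-crops and resizes them to 512x512. It is not the authors' preprocessing script; the function names and the `./raw_images` source folder are hypothetical.

```python
import os

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp", ".bmp"}


def list_images(root):
    """Recursively collect image file paths under `root`, sorted for reproducibility."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if os.path.splitext(name)[1].lower() in IMAGE_EXTS:
                found.append(os.path.join(dirpath, name))
    return sorted(found)


def center_square_box(width, height):
    """(left, top, right, bottom) of the largest centered square inside a width x height image."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def preprocess(src_dir, dst_dir, size=512):
    """Center-crop and resize every image in src_dir to size x size PNGs in dst_dir (needs Pillow)."""
    from PIL import Image  # imported lazily so the helpers above work without Pillow

    os.makedirs(dst_dir, exist_ok=True)
    for i, path in enumerate(list_images(src_dir)):
        with Image.open(path) as img:
            img = img.convert("RGB")
            img = img.crop(center_square_box(*img.size)).resize((size, size), Image.BICUBIC)
            img.save(os.path.join(dst_dir, f"{i:05d}.png"))


if __name__ == "__main__":
    # Hypothetical source folder; the output folder matches --train_data_dir in the training command.
    if os.path.isdir("./raw_images"):
        preprocess("./raw_images", "./images_512")
    print(f"{len(list_images('./images_512'))} images in ./images_512")
```

Center-cropping keeps the middle of each image, which suits roughly centered subjects such as aligned faces; other domains may need a different crop.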


Then, run:

```bash
accelerate launch --mixed_precision="fp16" train.py \
  --pretrained_model_name_or_path="stabilityai/stable-diffusion-2-base" \
  --train_data_dir=./images_512 \
  --max_train_steps=80000 \
  --learning_rate=2e-05 \
  --output_dir="./promptnet" \
  --train_batch_size=8 \
  --promptnet_l2_reg=0.000 \
  --gradient_checkpointing
```
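The number of passes over the data follows from the flags in the training command. This rough sketch assumes `--train_batch_size` is per device (as in the upstream diffusers training scripts), no gradient accumulation, and that the pre-processed FFHQ keeps all 70,000 images of the full dataset; the function name `epochs` is hypothetical.

```python
def epochs(max_train_steps, train_batch_size, dataset_size, num_gpus=1, grad_accum_steps=1):
    """Approximate passes over the dataset; each optimizer step sees batch * GPUs * accumulation images."""
    images_per_step = train_batch_size * num_gpus * grad_accum_steps
    return max_train_steps * images_per_step / dataset_size


# Values from the training command; 70,000 is the size of the full FFHQ dataset.
print(f"{epochs(80_000, 8, 70_000):.2f} epochs")  # 9.14 on one GPU
print(f"{epochs(80_000, 8, 70_000, num_gpus=4):.2f} epochs")  # 36.57 on four GPUs
```

Under these assumptions, launching on more GPUs multiplies the images seen per step, so you may want to lower `--max_train_steps` proportionally to keep a similar schedule.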

```bibtex
@article{zhou2023enhancing,
  title={Enhancing Detail Preservation for Customized Text-to-Image Generation: A Regularization-Free Approach},
  author={Zhou, Yufan and Zhang, Ruiyi and Sun, Tong and Xu, Jinhui},
  journal={arXiv preprint arXiv:2305.13579},
  year={2023}
}
```