Made in Vancouver, Canada by Picovoice

This repository is a minimalist and extensible framework for benchmarking speaker recognition engines on streaming audio.

This benchmark assumes that the entire enrollment audio is available during the enrollment step. The speaker recognition engine then detects the enrolled speaker within a stream of audio frames, each 96 ms long.
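Here is a minimal sketch of how a signal could be split into the 96 ms frames that are streamed to an engine. It assumes 16 kHz, single-channel, 16-bit PCM held in a Python list. The sample rate and the helper name `frame_audio` are illustrative assumptions and are not part of this repository. Any trailing samples that do not fill a whole frame are dropped.

```python
from typing import List, Sequence

# Assumption: 16 kHz mono audio; the benchmark itself only fixes the 96 ms frame duration.
SAMPLE_RATE = 16000
FRAME_DURATION_SEC = 0.096


def frame_audio(pcm: Sequence[int], sample_rate: int = SAMPLE_RATE) -> List[List[int]]:
    """Split a PCM signal into consecutive, non-overlapping 96 ms frames (hypothetical helper)."""
    frame_length = int(round(FRAME_DURATION_SEC * sample_rate))  # 1536 samples at 16 kHz
    num_frames = len(pcm) // frame_length  # an incomplete trailing frame is dropped
    return [
        list(pcm[i * frame_length:(i + 1) * frame_length])
        for i in range(num_frames)
    ]


if __name__ == "__main__":
    one_second = [0] * SAMPLE_RATE
    frames = frame_audio(one_second)
    print(len(frames), len(frames[0]))  # 10 1536
```

Each frame would then be passed, in order, to the engine under test.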

VoxConverse is a well-known speaker diarization dataset. It contains conversations in many languages and provides the start and end times of each speaker's segments.

- Clone the VoxConverse repository. This repository contains only the labels, in the form of `.rttm` files.
- Download the test set from the links provided in the `README.md` file of the cloned repository and extract the downloaded files.
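Each line of an `.rttm` label file describes one speech segment. The following minimal sketch loads these files and converts one speaker's segments into per-frame reference labels. It assumes the standard RTTM field order, where the onset is the fourth field, the duration is the fifth, and the speaker name is the eighth. It also assumes that a frame is labeled as the speaker when the frame's midpoint falls inside one of that speaker's segments. The function names and the midpoint rule are illustrative assumptions, not this repository's implementation.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Segment = Tuple[float, float]  # (start_sec, end_sec)


def parse_rttm(lines: Iterable[str]) -> Dict[str, List[Segment]]:
    """Group RTTM SPEAKER records by speaker label (hypothetical helper)."""
    segments: Dict[str, List[Segment]] = defaultdict(list)
    for line in lines:
        fields = line.split()
        if len(fields) < 8 or fields[0] != "SPEAKER":
            continue  # skip blank lines and non-speaker records
        onset = float(fields[3])
        duration = float(fields[4])
        speaker = fields[7]
        segments[speaker].append((onset, onset + duration))
    return dict(segments)


def segments_to_frame_labels(
    segments: List[Segment], num_frames: int, frame_duration_sec: float = 0.096
) -> List[bool]:
    """Assumption: a frame belongs to the speaker if its midpoint lies inside a segment."""
    labels = []
    for i in range(num_frames):
        midpoint = (i + 0.5) * frame_duration_sec
        labels.append(any(start <= midpoint < end for start, end in segments))
    return labels


if __name__ == "__main__":
    example = [
        "SPEAKER abcde 1 0.000 0.200 <NA> <NA> spk00 <NA> <NA>",
        "SPEAKER abcde 1 1.500 2.000 <NA> <NA> spk01 <NA> <NA>",
    ]
    by_speaker = parse_rttm(example)
    print(by_speaker["spk01"])  # [(1.5, 3.5)]
    print(segments_to_frame_labels(by_speaker["spk00"], 4))  # [True, True, False, False]
```

With a real label file, pass the open file handle directly, for example `with open(path) as f: parse_rttm(f)`.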

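The Detection Accuracy (DA) metric is the accuracy of the recognition system treated as a binary classifier (enrolled speaker vs. anything else). It is computed as:

$$
\mathrm{DA} = \frac{\mathrm{TP} + \mathrm{TN}}{\mathrm{TP} + \mathrm{TN} + \mathrm{FP} + \mathrm{FN}}
$$

where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives, respectively.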
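The DA formula can be sketched in Python as follows. This sketch assumes that a binary decision is made once per 96 ms frame and compared against a per-frame reference label. The per-frame granularity and the function name are assumptions for illustration.

```python
from typing import Sequence


def detection_accuracy(predicted: Sequence[bool], reference: Sequence[bool]) -> float:
    """DA = (TP + TN) / (TP + TN + FP + FN) over per-frame binary decisions."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("predicted and reference must be non-empty and equal in length")
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)          # enrolled speaker correctly detected
    tn = sum(1 for p, r in pairs if not p and not r)  # other audio correctly rejected
    fp = sum(1 for p, r in pairs if p and not r)      # other audio detected as the speaker
    fn = sum(1 for p, r in pairs if not p and r)      # enrolled speaker missed
    return (tp + tn) / (tp + tn + fp + fn)


if __name__ == "__main__":
    print(detection_accuracy([True, True, False, False], [True, False, False, True]))  # 0.5
```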

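The Detection Error Rate (DER) metric measures the total duration of detection errors relative to the total duration of the enrolled speaker's segments:

$$
\mathrm{DER} = \frac{\text{false alarm} + \text{missed detection}}{\text{total}}
$$

where false alarm is the duration of audio incorrectly detected as the enrolled speaker, missed detection is the duration of the enrolled speaker's speech that is not detected, and total is the total duration of the enrolled speaker's segments.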
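The following sketch computes DER from per-frame decisions. It uses the same assumptions as a frame-level view: each frame lasts 96 ms, and false alarm, missed detection, and total durations are obtained by counting frames. The function name and the frame-counting approach are illustrative assumptions.

```python
from typing import Sequence

FRAME_DURATION_SEC = 0.096


def detection_error_rate(
    predicted: Sequence[bool],
    reference: Sequence[bool],
    frame_duration_sec: float = FRAME_DURATION_SEC,
) -> float:
    """DER = (false alarm + missed detection) / total enrolled-speaker duration."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have equal length")
    pairs = list(zip(predicted, reference))
    false_alarm = sum(1 for p, r in pairs if p and not r) * frame_duration_sec
    missed = sum(1 for p, r in pairs if not p and r) * frame_duration_sec
    total = sum(1 for r in reference if r) * frame_duration_sec
    if total == 0:
        raise ValueError("reference contains no enrolled-speaker frames")
    return (false_alarm + missed) / total


if __name__ == "__main__":
    print(detection_error_rate([True, True, True, False], [True, True, True, True]))  # 0.25
```

False alarms are not bounded by the enrolled speaker's duration, so DER can exceed 100%.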

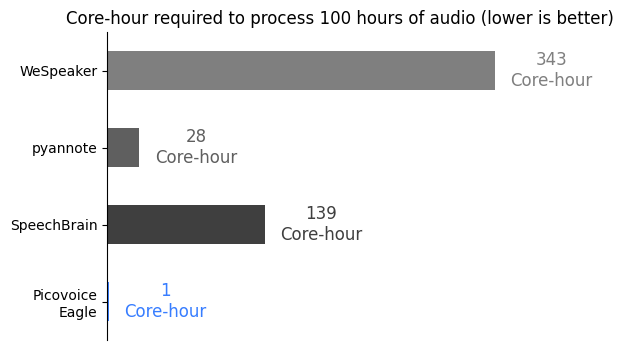

The Core-Hour metric evaluates the computational efficiency of a speaker recognition engine. It is the number of hours a single CPU core needs to process one hour of audio; lower is better.
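The Core-Hour definition reduces to a simple ratio: CPU time spent divided by audio duration, with both expressed in hours. The sketch below is a minimal illustration and assumes the engine runs inside the current Python process. It uses `time.process_time()`, which returns the CPU time of the current process summed over all of its threads but excludes child processes. The names `core_hour` and `measure_core_hour` are hypothetical.

```python
import time
from typing import Callable


def core_hour(cpu_seconds: float, audio_seconds: float) -> float:
    """CPU-core hours spent per hour of audio (the 3600 s/h factors cancel)."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return (cpu_seconds / 3600.0) / (audio_seconds / 3600.0)


def measure_core_hour(process_audio: Callable[[], None], audio_seconds: float) -> float:
    """Time a (hypothetical) processing callable using process-wide CPU time."""
    start = time.process_time()
    process_audio()
    cpu_seconds = time.process_time() - start
    return core_hour(cpu_seconds, audio_seconds)


if __name__ == "__main__":
    # 90 CPU-seconds to process one hour (3600 s) of audio
    print(core_hour(90.0, 3600.0))  # 0.025
```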

This benchmark has been developed and tested on Ubuntu 20.04 using Python 3.8.

- Set up your dataset as described in the Data section.

- Install the requirements:

```console
pip3 install -r requirements.txt
```

- In the commands that follow, replace `${DATASET}` with a supported dataset, `${DATA_FOLDER}` with the path to the dataset folder, and `${LABEL_FOLDER}` with the path to the label folder. For further details, refer to the Data section. Replace `${TYPE}` with `ACCURACY` or `CPU` to run the accuracy or the CPU usage benchmark, respectively.

```console
python3 benchmark.py \
--type ${TYPE} \
--dataset ${DATASET} \
--data-folder ${DATA_FOLDER} \
--label-folder ${LABEL_FOLDER} \
--engine ${ENGINE} \
...
```

Additionally, specify the desired engine using the `--engine` flag. For instructions on each engine and the flags it requires, consult the section below.
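To run several engines in one go, you can assemble the command in Python. The following minimal sketch assumes the engine names (`PICOVOICE_EAGLE`, `PYANNOTE`, `SPEECHBRAIN`, `WESPEAKER`) and the credential flags (`--picovoice-access-key`, `--auth-token`) listed in the per-engine instructions. `build_command`, `ENGINE_SECRET_FLAGS`, and `run` are hypothetical helpers and are not part of `benchmark.py`.

```python
import subprocess
from typing import Dict, List, Optional

# Engine-specific credential flags, as listed in the per-engine instructions.
ENGINE_SECRET_FLAGS: Dict[str, Optional[str]] = {
    "PICOVOICE_EAGLE": "--picovoice-access-key",
    "PYANNOTE": "--auth-token",
    "SPEECHBRAIN": None,  # no extra flag required
    "WESPEAKER": "--auth-token",
}


def build_command(
    benchmark_type: str,
    dataset: str,
    data_folder: str,
    label_folder: str,
    engine: str,
    secret: Optional[str] = None,
) -> List[str]:
    """Assemble the argv for one benchmark run (hypothetical helper)."""
    if engine not in ENGINE_SECRET_FLAGS:
        raise ValueError(f"unknown engine: {engine}")
    command = [
        "python3", "benchmark.py",
        "--type", benchmark_type,
        "--dataset", dataset,
        "--data-folder", data_folder,
        "--label-folder", label_folder,
        "--engine", engine,
    ]
    flag = ENGINE_SECRET_FLAGS[engine]
    if flag is not None:
        if secret is None:
            raise ValueError(f"{engine} requires {flag}")
        command += [flag, secret]  # append the AccessKey or auth token
    return command


def run(command: List[str]) -> None:
    """Run the benchmark; raises CalledProcessError on a non-zero exit code."""
    subprocess.run(command, check=True)
```

For example, `run(build_command("ACCURACY", dataset, data_folder, label_folder, "SPEECHBRAIN"))` runs the accuracy benchmark for SpeechBrain, where `dataset`, `data_folder`, and `label_folder` hold the same values that `${DATASET}`, `${DATA_FOLDER}`, and `${LABEL_FOLDER}` stand for.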

For Picovoice Eagle, replace `${PICOVOICE_ACCESS_KEY}` with the AccessKey obtained from Picovoice Console.

```console
python3 benchmark.py \
--dataset ${DATASET} \
--data-folder ${DATA_FOLDER} \
--label-folder ${LABEL_FOLDER} \
--engine PICOVOICE_EAGLE \
--picovoice-access-key ${PICOVOICE_ACCESS_KEY}
```

For pyannote, visit pyannote's Hugging Face page to obtain the authentication token needed to download the pretrained models. Then replace `${AUTH_TOKEN}` with the authentication token.

```console
python3 benchmark.py \
--dataset ${DATASET} \
--data-folder ${DATA_FOLDER} \
--label-folder ${LABEL_FOLDER} \
--engine PYANNOTE \
--auth-token ${AUTH_TOKEN}
```

For SpeechBrain, run:

```console
python3 benchmark.py \
--dataset ${DATASET} \
--data-folder ${DATA_FOLDER} \
--label-folder ${LABEL_FOLDER} \
--engine SPEECHBRAIN
```

For WeSpeaker, visit WeSpeaker's Hugging Face page to obtain the authentication token needed to download the pretrained models.

Then replace `${AUTH_TOKEN}` with the authentication token.

```console
python3 benchmark.py \
--dataset ${DATASET} \
--data-folder ${DATA_FOLDER} \
--label-folder ${LABEL_FOLDER} \
--engine WESPEAKER \
--auth-token ${AUTH_TOKEN}
```

Measurements were carried out on an Ubuntu 22.04.3 LTS machine with an AMD Ryzen 7 5700X CPU (16 threads @ 3.4 GHz), 64 GB of RAM, and NVMe storage.

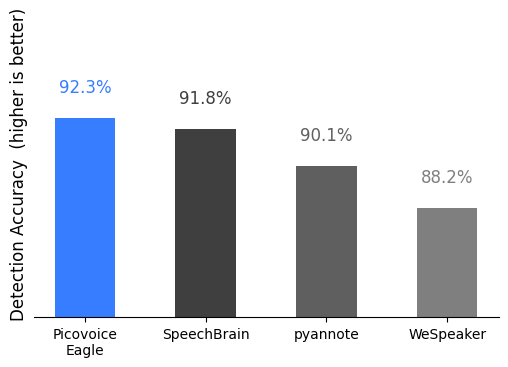

| Engine | DA (VoxConverse) |
|---|---|
| Picovoice Eagle | 92.3% |
| SpeechBrain | 91.8% |
| pyannote | 90.1% |
| WeSpeaker | 88.2% |

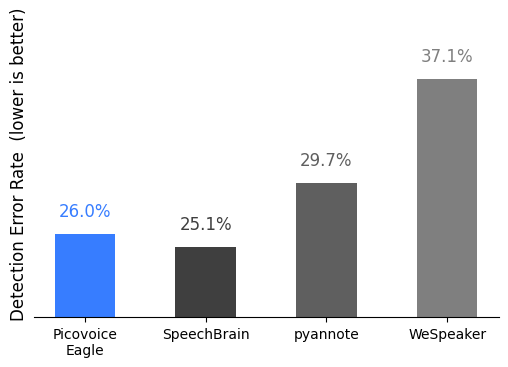

| Engine | DER (VoxConverse) |
|---|---|
| Picovoice Eagle | 26.0% |
| SpeechBrain | 25.1% |
| pyannote | 29.7% |
| WeSpeaker | 37.1% |

| Engine | Core-Hour |
|---|---|
| Picovoice Eagle | 1 |
| SpeechBrain | 139 |
| pyannote | 28 |
| WeSpeaker | 343 |