This repository is the official implementation of Zero-Painter.

Zero-Painter: Training-Free Layout Control for Text-to-Image Synthesis

Marianna Ohanyan*,

Hayk Manukyan*,

Zhangyang Wang,

Shant Navasardyan,

Humphrey Shi

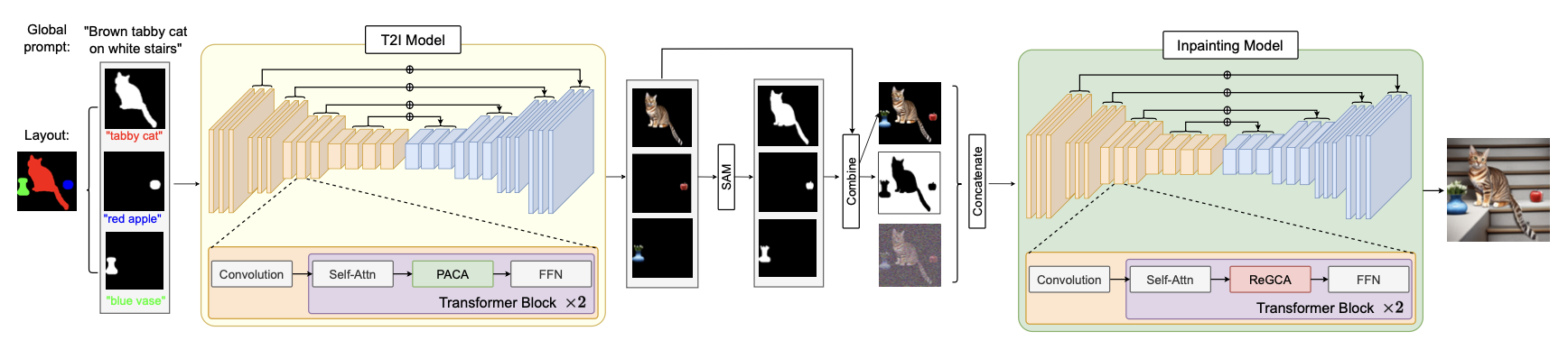

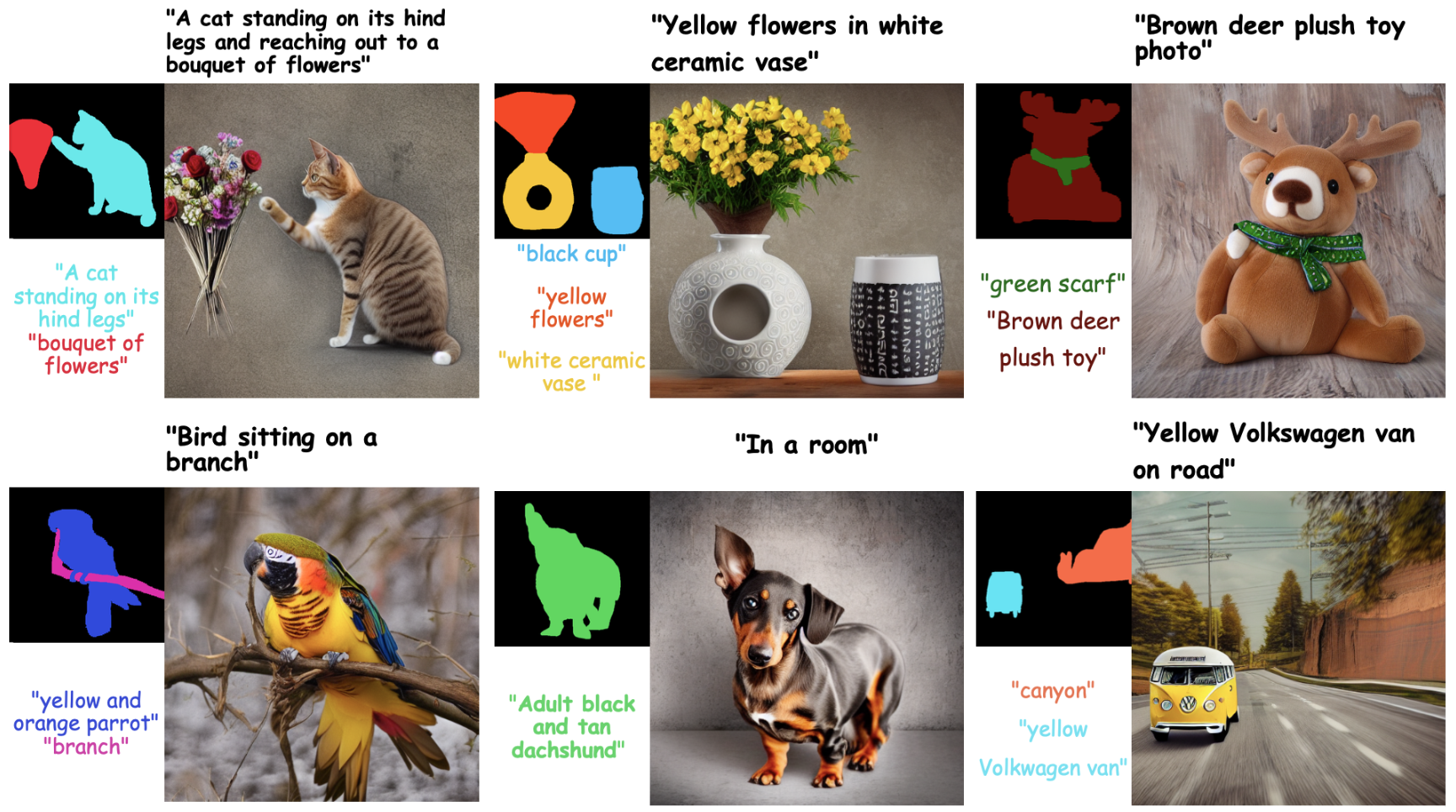

We present Zero-Painter, a novel training-free framework for layout-conditional text-to-image synthesis that facilitates the creation of detailed and controlled imagery from textual prompts. Our method utilizes object masks and individual descriptions, coupled with a global text prompt, to generate images with high fidelity. Zero-Painter employs a two-stage process involving our novel Prompt-Adjusted Cross-Attention (PACA) and Region-Grouped Cross-Attention (ReGCA) blocks, ensuring precise alignment of generated objects with textual prompts and mask shapes. Our extensive experiments demonstrate that Zero-Painter surpasses current state-of-the-art methods in preserving textual details and adhering to mask shapes.

- [2024.06.06] Zero-Painter paper and code are released.

- [2024.02.27] Paper is accepted to CVPR 2024.

- Install with pip:

```shell
pip3 install -r requirements.txt
```

- Download the models and put them in the `models` folder.
- You can use the following script to perform inference on a given mask and its prompts:

```shell
python zero_painter.py \
  --mask-path data/masks/1_rgb.png \
  --metadata data/metadata/1.json \
  --output-dir data/outputs/
```
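The command above processes a single mask/metadata pair. To run it over a whole folder, a small wrapper such as the one below could help. This is a sketch, not part of the repository: it reuses only the three flags shown above, and it assumes masks are named `<id>_rgb.png` with a matching `<id>.json` metadata file, a naming convention inferred from the single example. The helper names `build_command`, `paired_inputs` and `run_all` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def build_command(mask_path, metadata_path, output_dir):
    """Assemble a zero_painter.py call using only the flags documented above."""
    return [
        sys.executable, "zero_painter.py",
        "--mask-path", str(mask_path),
        "--metadata", str(metadata_path),
        "--output-dir", str(output_dir),
    ]


def paired_inputs(data_dir):
    """Yield (mask, metadata) pairs found under data_dir.

    ASSUMPTION: masks live in <data_dir>/masks/<id>_rgb.png and metadata in
    <data_dir>/metadata/<id>.json, mirroring the single example in this README.
    """
    data_dir = Path(data_dir)
    for mask in sorted((data_dir / "masks").glob("*_rgb.png")):
        sample_id = mask.name[: -len("_rgb.png")]
        metadata = data_dir / "metadata" / f"{sample_id}.json"
        if metadata.exists():  # skip masks that have no metadata file
            yield mask, metadata


def run_all(data_dir="data", output_dir="data/outputs/"):
    """Run inference once per pair, stopping on the first failing call."""
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    for mask, metadata in paired_inputs(data_dir):
        subprocess.run(build_command(mask, metadata, output_dir), check=True)
```

Calling `run_all()` from the repository root would then invoke the script once per pair; `check=True` makes a failed run raise instead of silently continuing.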

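The metadata should be in the following format, where `prompt` is the global text prompt and `color_context_dict` maps each object's RGB color in the mask to that object's description: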

```json
[{
  "prompt": "Brown gift box beside red candle.",
  "color_context_dict": {
    "(244, 54, 32)": "Brown gift box",
    "(54, 245, 32)": "red candle"
  }
}]
```
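Before running inference, it can be useful to check that every color key in the metadata actually appears in the mask. The following is a hedged sketch, not code from the repository: it assumes the mask is an RGB image in which each object region is painted with exactly the color given by its key (no anti-aliasing), and the helpers `parse_color_key`, `load_metadata` and `split_mask` are hypothetical names.

```python
import ast
import json

import numpy as np


def parse_color_key(key):
    """Turn a key such as "(244, 54, 32)" into an (R, G, B) tuple of ints."""
    rgb = ast.literal_eval(key)  # safely evaluates the tuple literal
    if not (isinstance(rgb, tuple) and len(rgb) == 3):
        raise ValueError(f"expected an RGB triple, got {key!r}")
    return tuple(int(c) for c in rgb)


def load_metadata(text):
    """Parse the metadata JSON (a list of entries) and convert its color keys."""
    parsed = []
    for entry in json.loads(text):
        colors = {parse_color_key(k): v
                  for k, v in entry["color_context_dict"].items()}
        parsed.append({"prompt": entry["prompt"], "colors": colors})
    return parsed


def split_mask(rgb, colors):
    """Return {description: H x W boolean mask} for each listed color.

    ASSUMPTION: `rgb` is an H x W x 3 uint8 array whose object regions use
    exactly the listed colors.
    """
    rgb = np.asarray(rgb)
    return {
        desc: np.all(rgb == np.array(color, dtype=rgb.dtype), axis=-1)
        for color, desc in colors.items()
    }
```

The mask could be loaded with Pillow, for example `np.array(Image.open("data/masks/1_rgb.png").convert("RGB"))`. A description whose mask comes back entirely `False` suggests that its color in the JSON does not exactly match any pixel in the image.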

If you use our work in your research, please cite our publication:

```bibtex
@article{Zeropainter,
  title={Zero-Painter: Training-Free Layout Control for Text-to-Image Synthesis},
  url={http://arxiv.org/abs/2406.04032},
  publisher={arXiv},
  author={Ohanyan, Marianna and Manukyan, Hayk and Wang, Zhangyang and Navasardyan, Shant and Shi, Humphrey},
  year={2024}
}
```