Video | Online demo | Deployment docs | Changelog | FAQ

This project offers online demos hosted on the HuggingFace community and the ModelScope community. Try them out and share your feedback!

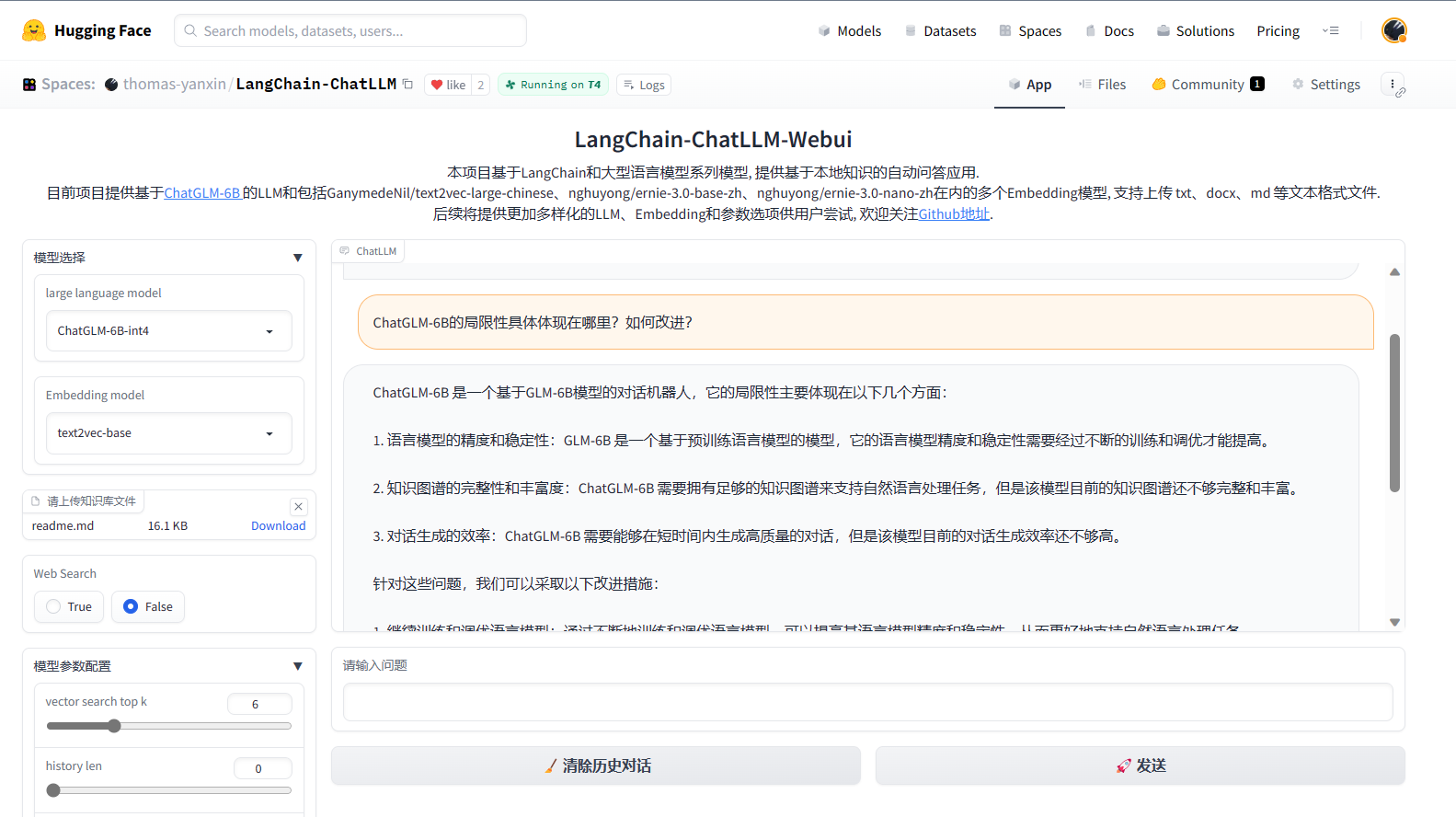

Inspired by langchain-ChatGLM, this is a WebUI built with LangChain and the ChatGLM-6B series of models that provides LLM applications grounded in local knowledge.

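It currently supports uploading text files such as txt, docx, md, and pdf. It provides LLMs including the ChatGLM-6B series and the BELLE series, as well as embedding models such as GanymedeNil/text2vec-large-chinese, nghuyong/ernie-3.0-base-zh, and nghuyong/ernie-3.0-nano-zh.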
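A local-knowledge QA app of this kind typically splits uploaded files into chunks, embeds each chunk, retrieves the chunks closest to a question, and passes them to the LLM as context. This README does not spell those steps out, so the following is only a minimal, self-contained sketch under that assumption, not the project's implementation. The functions `split_text`, `embed`, `cosine`, `retrieve`, and `build_prompt` and the prompt template are hypothetical. A toy character-bigram count vector stands in for embedding models such as text2vec-large-chinese, and the final LLM call (for example to ChatGLM-6B) is left out.

```python
import math
from collections import Counter
from typing import List, Tuple


def split_text(text: str, chunk_size: int = 200) -> List[str]:
    """Hypothetical splitter: cut a document into fixed-size character chunks."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def embed(text: str) -> Counter:
    """Toy stand-in for a real embedding model: counts of character bigrams."""
    return Counter(text[i:i + 2] for i in range(len(text) - 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (missing keys count as 0)."""
    dot = sum(count * b[key] for key, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 3) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, contexts: List[str]) -> str:
    """Hypothetical prompt template that puts the retrieved chunks before the question."""
    context = "\n".join(contexts)
    return f"Answer using the known information below.\nKnown information:\n{context}\nQuestion: {query}"


# Usage: each string plays the role of the text of an uploaded txt/docx/md/pdf file
documents = [
    "ChatGLM-6B is an open bilingual dialogue language model.",
    "LangChain builds applications with LLMs through composability.",
    "Paris is the capital of France.",
]
chunks = [chunk for doc in documents for chunk in split_text(doc, chunk_size=200)]
question = "What is ChatGLM-6B?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, [chunk for _, chunk in top])
print(prompt)
```

Bigram counts work on Chinese text without a tokenizer, which keeps the sketch dependency-free. A real deployment would swap `embed` for one of the embedding models listed above and send `prompt` to the chosen LLM.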

Both a ModelScope version and a HuggingFace version are provided.

Requires Python >= 3.8.1.
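A minimal startup guard for the Python >= 3.8.1 requirement could look like the following. `MIN_VERSION` and `python_ok` are hypothetical names for illustration and are not part of the project.

```python
import sys
from typing import Sequence

# Minimum interpreter version stated in this README (hypothetical constant name)
MIN_VERSION = (3, 8, 1)


def python_ok(version: Sequence[int] = tuple(sys.version_info[:3])) -> bool:
    """Return True if `version` (major, minor, micro) is at least MIN_VERSION."""
    return tuple(version) >= MIN_VERSION


if not python_ok():
    # Abort early with a readable message instead of failing later on syntax or dependencies
    raise SystemExit(f"Python >= 3.8.1 is required, found {sys.version.split()[0]}")
```

Tuple comparison orders versions component by component, so `(3, 10, 0)` correctly counts as newer than `(3, 8, 1)`.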

If you run into network issues, you can use the following links for faster downloads:

| Large language model | Embedding model |
|---|---|
| ChatGLM-6B | text2vec-large-chinese |
| ChatGLM-6B-int8 | ernie-3.0-base-zh |
| ChatGLM-6B-int4 | ernie-3.0-nano-zh |
| ChatGLM-6B-int4-qe | ernie-3.0-xbase-zh |
| Vicuna-7b-1.1 | simbert-base-chinese |
| Vicuna-13b-1.1 | paraphrase-multilingual-MiniLM-L12-v2 |
| BELLE-LLaMA-7B-2M | |
| BELLE-LLaMA-13B-2M | |
| Minimax | |

For details, see the changelog.

The project is at an early stage, with plenty left to build and optimize. Interested community experts are welcome to join!

- ChatGLM-6B: An open bilingual dialogue language model

- LangChain: Building applications with LLMs through composability

- langchain-ChatGLM: A ChatGLM application based on local knowledge

ChatGLM paper citations

@inproceedings{zeng2023glm-130b,
  title={{GLM}-130B: An Open Bilingual Pre-trained Model},
  author={Aohan Zeng and Xiao Liu and Zhengxiao Du and Zihan Wang and Hanyu Lai and Ming Ding and Zhuoyi Yang and Yifan Xu and Wendi Zheng and Xiao Xia and Weng Lam Tam and Zixuan Ma and Yufei Xue and Jidong Zhai and Wenguang Chen and Zhiyuan Liu and Peng Zhang and Yuxiao Dong and Jie Tang},
  booktitle={The Eleventh International Conference on Learning Representations (ICLR)},
  year={2023},
  url={https://openreview.net/forum?id=-Aw0rrrPUF}
}

@inproceedings{du2022glm,
  title={GLM: General Language Model Pretraining with Autoregressive Blank Infilling},
  author={Du, Zhengxiao and Qian, Yujie and Liu, Xiao and Ding, Ming and Qiu, Jiezhong and Yang, Zhilin and Tang, Jie},
  booktitle={Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)},
  pages={320--335},
  year={2022}
}

BELLE paper citations

@misc{BELLE,
  author = {Yunjie Ji and Yong Deng and Yan Gong and Yiping Peng and Qiang Niu and Baochang Ma and Xiangang Li},
  title = {BELLE: Be Everyone's Large Language model Engine},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/LianjiaTech/BELLE}},
}

@article{belle2023exploring,
  title={Exploring the Impact of Instruction Data Scaling on Large Language Models: An Empirical Study on Real-World Use Cases},
  author={Yunjie Ji and Yong Deng and Yan Gong and Yiping Peng and Qiang Niu and Lei Zhang and Baochang Ma and Xiangang Li},
  journal={arXiv preprint arXiv:2303.14742},
  year={2023}
}

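- langchain-ChatGLM for the underlying framework

- ModelScope for the demo space

- The OpenI community for debugging compute

- langchain-serve for a very simple way to serve the app

- Beyond these, thanks to everyone in the community for their interest in and support of this project!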