- [2024.04.11] Our approach now supports multi-modal models with a ViT backbone (PyTorch only)! Follow the tutorial to try it.

- [2024.01.17] The original code is now available! Follow the tutorial to try it.

- [2024.01.16] The paper has been accepted by ICLR 2024 and selected for oral presentation!

Recognition Models (please download the models and place them in ckpt/keras_model):

| Dataset | Model |
|---|---|
| Celeb-A | keras-ArcFace-R100-Celeb-A.h5 |
| VGG-Face2 | keras-ArcFace-R100-VGGFace2.h5 |
| CUB-200-2011 | cub-resnet101.h5, cub-resnet101-new.h5, cub-efficientnetv2m.h5, cub-mobilenetv2.h5, cub-vgg19.h5 |

Uncertainty Estimation Models (please download the models and place them in ckpt/pytorch_model):

| Dataset | Model |
|---|---|
| Celeb-A | edl-101-10177.pth |
| VGG-Face2 | edl-101-8631.pth |
| CUB-200-2011 | cub-resnet101-edl.pth |
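Before running anything, it can help to confirm that the checkpoints listed in the two tables above are where the scripts expect them. The following is a minimal sketch, not part of the repository: the helper `missing_checkpoints` is hypothetical, and it assumes the `ckpt/` folder sits under the directory you pass as `root`.

```python
from pathlib import Path

# File names and folders copied from the tables above.
EXPECTED_CHECKPOINTS = {
    "ckpt/keras_model": [
        "keras-ArcFace-R100-Celeb-A.h5",
        "keras-ArcFace-R100-VGGFace2.h5",
        "cub-resnet101.h5",
        "cub-resnet101-new.h5",
        "cub-efficientnetv2m.h5",
        "cub-mobilenetv2.h5",
        "cub-vgg19.h5",
    ],
    "ckpt/pytorch_model": [
        "edl-101-10177.pth",
        "edl-101-8631.pth",
        "cub-resnet101-edl.pth",
    ],
}


def missing_checkpoints(root=".", expected=EXPECTED_CHECKPOINTS):
    """Return the relative paths of expected checkpoint files not found under root.

    Hypothetical helper for illustration; the repository does not ship it.
    """
    root = Path(root)
    return [
        (Path(folder) / name).as_posix()
        for folder, names in expected.items()
        for name in names
        if not (root / folder / name).is_file()
    ]


if __name__ == "__main__":
    for path in missing_checkpoints():
        print(f"missing: {path}")
```

You only need the checkpoints for the dataset you plan to run, so a non-empty result is not necessarily an error.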

opencv-python

opencv-contrib-python

mtutils

xplique>=1.0.3

conda create -n smdl python=3.10

conda activate smdl

python3 -m pip install tensorflow[and-cuda]

pip install git+https://github.com/facebookresearch/segment-anything.git
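Once the environment is set up, a quick import check can catch a missing package before a long run fails. This is a sketch under assumptions: the import names below are the usual ones for each project (`cv2` for opencv-python, `segment_anything` for Segment-Anything), the `mtutils` import name is assumed to match its pip name, and `missing_packages` is a hypothetical helper.

```python
from importlib.util import find_spec

# Maps the pip/package name to the module name it is assumed to install.
REQUIRED_MODULES = {
    "opencv-python": "cv2",
    "mtutils": "mtutils",  # assumption: import name matches the pip name
    "xplique": "xplique",
    "tensorflow": "tensorflow",
    "segment-anything": "segment_anything",
}


def missing_packages(required=REQUIRED_MODULES):
    """Return the package names whose module cannot be located in this environment."""
    return [
        pip_name
        for pip_name, module in required.items()
        if find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Not installed:", ", ".join(missing))
    else:
        print("All required packages were found.")
```

The check only locates the modules without importing them, so it does not verify versions or GPU support.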

First, generate the prior saliency maps used for sub-region division.

CUDA_VISIBLE_DEVICES=0 python generate_explanation_maps.py

Before running, open generate_explanation_maps.py and set the variables mode and net_mode:

- mode: one of ["Celeb-A", "VGGFace2", "CUB", "CUB-FAIR"]

- net_mode: one of ["resnet", "efficientnet", "vgg19", "mobilenetv2"]. Note that net_mode only applies when mode is CUB-FAIR.
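The rule for combining the two variables can be written as a small check. This is an illustrative sketch only: `check_settings` is a hypothetical helper, not a function in the repository, and it simply encodes the allowed values listed above.

```python
# Allowed values as listed above.
MODES = ("Celeb-A", "VGGFace2", "CUB", "CUB-FAIR")
NET_MODES = ("resnet", "efficientnet", "vgg19", "mobilenetv2")


def check_settings(mode, net_mode=None):
    """Validate a (mode, net_mode) pair and return the pair that takes effect.

    Hypothetical helper: net_mode is only meaningful when mode is "CUB-FAIR",
    so it is returned as None for every other mode.
    """
    if mode not in MODES:
        raise ValueError(f"mode must be one of {MODES}, got {mode!r}")
    if mode != "CUB-FAIR":
        return mode, None  # net_mode is ignored outside CUB-FAIR
    if net_mode not in NET_MODES:
        raise ValueError(f"net_mode must be one of {NET_MODES}, got {net_mode!r}")
    return mode, net_mode


if __name__ == "__main__":
    print(check_settings("CUB-FAIR", "vgg19"))
    print(check_settings("Celeb-A", "vgg19"))
```

Returning `None` for the unused setting makes it explicit that a stray net_mode value has no effect on the face datasets or on plain CUB.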

CUDA_VISIBLE_DEVICES=0 python smdl_explanation.py

Xplique: a Neural Networks Explainability Toolbox

Score-CAM: a third-party implementation with Keras.

Segment-Anything: a new AI model from Meta AI that can "cut out" any object, in any image, with a single click.

@inproceedings{chen2024less,

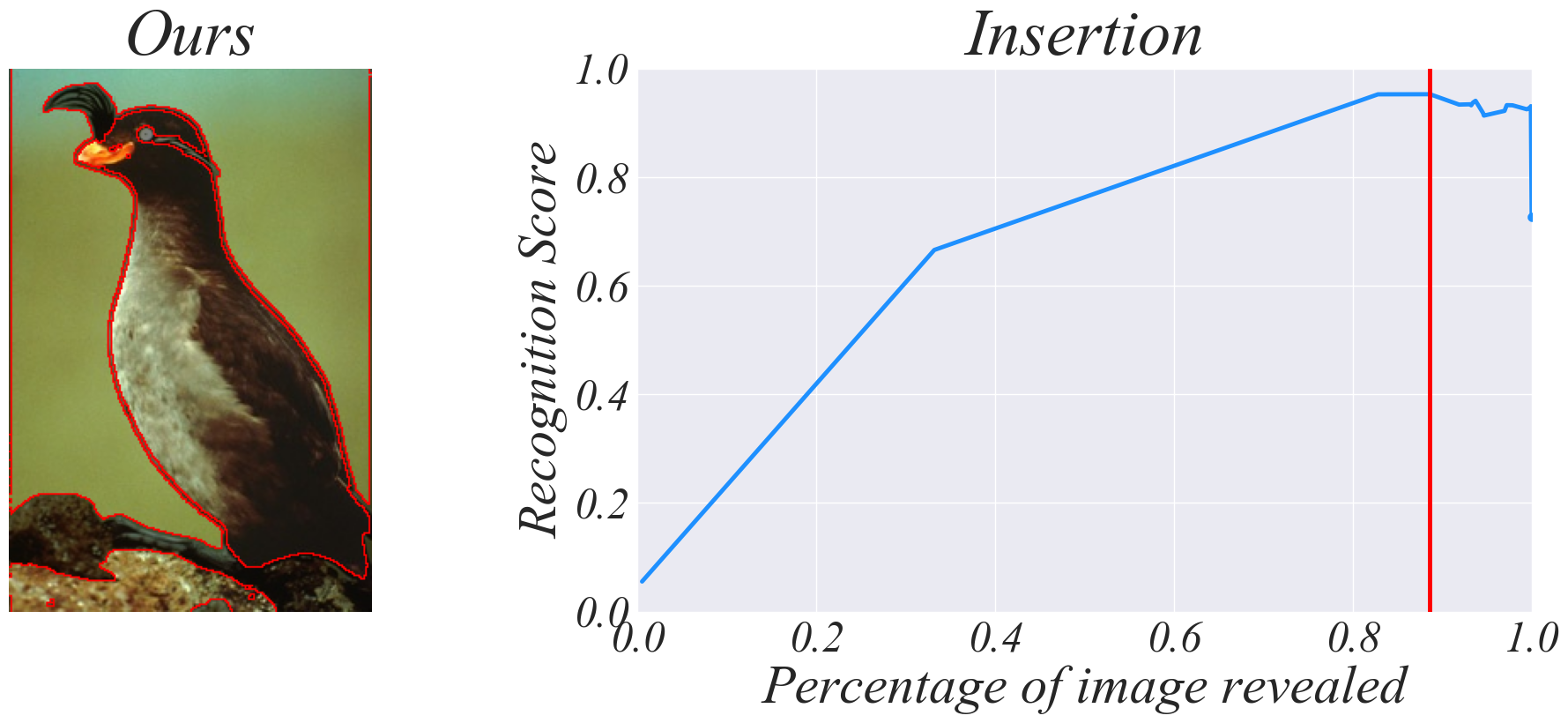

title={Less is More: Fewer Interpretable Region via Submodular Subset Selection},

author={Chen, Ruoyu and Zhang, Hua and Liang, Siyuan and Li, Jingzhi and Cao, Xiaochun},

booktitle={The Twelfth International Conference on Learning Representations},

year={2024}

}