PyXAB is an open-source Python library for X-armed bandit algorithms, a well-established family of optimizers for online black-box optimization, i.e., optimizing an objective without access to gradients. The problem is also known as the continuous-arm bandit (CAB), Lipschitz bandit, global optimization (GO), or bandit-based black-box optimization problem.

PyXAB implements a range of X-armed bandit algorithms, from classic ones such as HOO (Bubeck et al., 2011), StoSOO (Valko et al., 2013), and HCT (Azar et al., 2014) to more recent work such as GPO (Shang et al., 2019) and VHCT (Li et al., 2021). It also provides the most commonly used synthetic objectives for evaluating algorithm performance, along with implementations of several hierarchical partitions.

First import the components (the module paths shown assume the current PyXAB package layout), then define the black-box objective, the parameter domain, the partition of the space, and the algorithm, e.g.

    from PyXAB.synthetic_obj.Garland import Garland
    from PyXAB.algos.HOO import T_HOO
    from PyXAB.partition.BinaryPartition import BinaryPartition
    import numpy as np
    target = Garland()
    domain = [[0, 1]]
    partition = BinaryPartition
    algo = T_HOO(rounds=1000, domain=domain, partition=partition)

At every round `t`, call `algo.pull(t)` to get a point. After receiving the (stochastic) reward for that point, call `algo.receive_reward(t, reward)` to give the algorithm its feedback:

    point = algo.pull(t)
    reward = target.f(point) + np.random.uniform(-0.1, 0.1)  # uniform noise example
    algo.receive_reward(t, reward)

- The most up-to-date documentation

- The roadmap for our project

- Our paper for the library
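The quickstart steps above are meant to be repeated once per round. The sketch below shows that loop in a self-contained form that does not import PyXAB: `UniformSearch` is a hypothetical stand-in that only mimics the `pull(t)` / `receive_reward(t, reward)` interface, and `toy_objective` is an illustrative function, not one of the library's objectives. Swapping in `T_HOO` and `Garland` as defined above should leave the loop itself unchanged.

```python
import random


class UniformSearch:
    """Hypothetical stand-in (not part of PyXAB) exposing pull/receive_reward."""

    def __init__(self, rounds, domain, seed=0):
        self.rounds = rounds
        self.domain = domain
        self.rng = random.Random(seed)
        self.last_point = None
        self.best_point = None
        self.best_reward = float("-inf")

    def pull(self, t):
        # Sample one point uniformly from the box-shaped domain.
        self.last_point = [self.rng.uniform(lo, hi) for lo, hi in self.domain]
        return self.last_point

    def receive_reward(self, t, reward):
        # Remember the point with the highest observed (noisy) reward.
        if reward > self.best_reward:
            self.best_reward = reward
            self.best_point = self.last_point


def toy_objective(point):
    # Illustrative 1-D objective with its maximum at x = 0.5.
    x = point[0]
    return 1.0 - (x - 0.5) ** 2


def run(rounds=1000, seed=0):
    noise = random.Random(seed + 1)
    algo = UniformSearch(rounds=rounds, domain=[[0, 1]], seed=seed)
    for t in range(1, rounds + 1):
        point = algo.pull(t)
        reward = toy_objective(point) + noise.uniform(-0.1, 0.1)  # uniform noise
        algo.receive_reward(t, reward)
    return algo.best_point, algo.best_reward


best_point, best_reward = run()
print(best_point, best_reward)
```

Uniform sampling is used here only because it is the simplest strategy that fits the two-method interface; an X-armed bandit algorithm would instead use the rewards to decide where to sample next.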

To install via pip, run the following commands:

    pip install PyXAB            # normal install
    pip install --upgrade PyXAB  # or update if needed

To install via git, run the following commands:

    git clone https://github.com/WilliamLwj/PyXAB.git
    cd PyXAB
    pip install .

- Algorithms marked with a star are meta-algorithms (wrappers)

| Partition | Description |
|---|---|
| BinaryPartition | Equal-size binary partition of the parameter space; the split dimension is chosen uniformly at random |
| RandomBinaryPartition | Random-size binary partition of the parameter space; the split dimension is chosen uniformly at random |
| DimensionBinaryPartition | Equal-size partition of the space with a binary split on each dimension; each node has 2^d children |
| KaryPartition | Equal-size K-ary partition of the parameter space; the split dimension is chosen uniformly at random |
| RandomKaryPartition | Random-size K-ary partition of the parameter space; the split dimension is chosen uniformly at random |
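
To make the descriptions in the table concrete, the following sketch splits a box-shaped domain in the three equal-size ways listed. The function names are hypothetical and the code does not reproduce PyXAB's partition classes; it only illustrates the geometry, assuming a domain given as a list of `[low, high]` intervals.

```python
import itertools
import random


def kary_split(domain, k, rng=random):
    """Cut the box into k equal slabs along one dimension chosen uniformly at random."""
    dim = rng.randrange(len(domain))
    lo, hi = domain[dim]
    width = (hi - lo) / k
    children = []
    for i in range(k):
        child = [list(interval) for interval in domain]
        child[dim] = [lo + i * width, lo + (i + 1) * width]
        children.append(child)
    return children


def binary_split(domain, rng=random):
    """Halve the box along one randomly chosen dimension (the k = 2 case)."""
    return kary_split(domain, k=2, rng=rng)


def dimension_binary_split(domain):
    """Halve the box along every dimension at once, giving 2**d children."""
    halves = []
    for lo, hi in domain:
        mid = (lo + hi) / 2
        halves.append([[lo, mid], [mid, hi]])
    return [list(combo) for combo in itertools.product(*halves)]


unit_square = [[0, 1], [0, 1]]
print(binary_split(unit_square))
print(len(kary_split(unit_square, k=3)))
print(len(dimension_binary_split(unit_square)))
```

The random-size variants in the table would differ only in where the cut points fall inside the chosen interval.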

- Some of these objectives can be found on Wikipedia

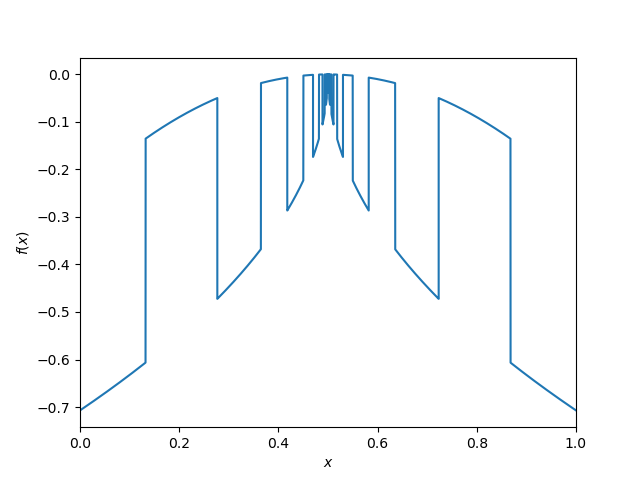

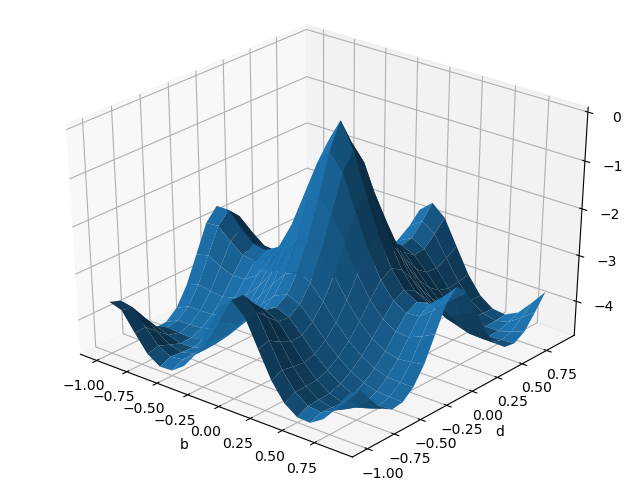

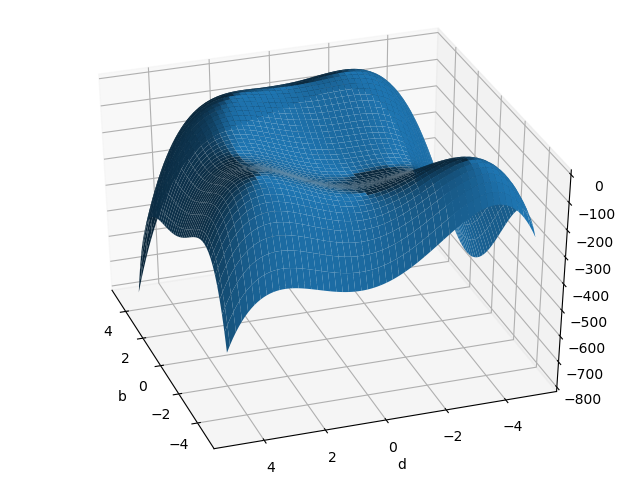

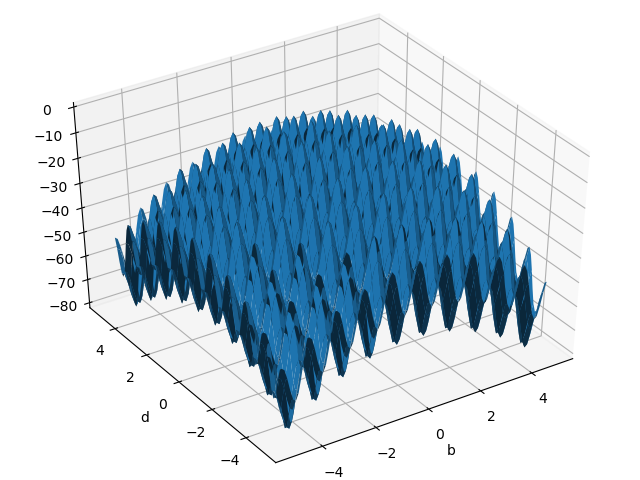

| Objectives |

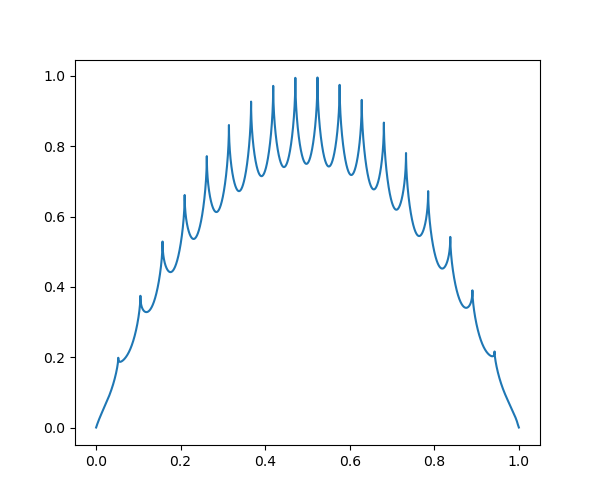

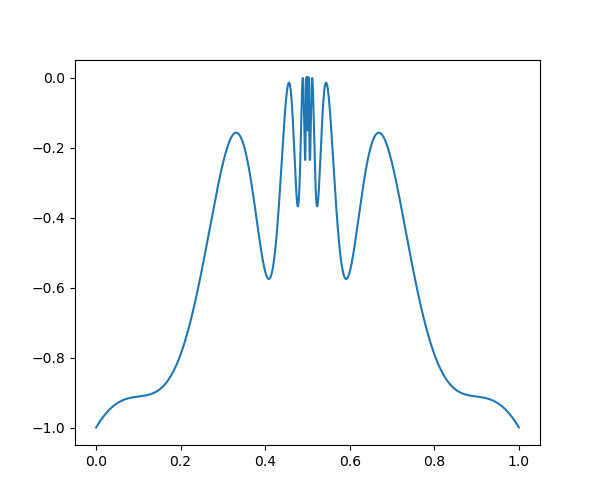

Image |

|---|---|

| Garland |  |

| DoubleSine |  |

| DifficultFunc |  |

| Ackley |  |

| Himmelblau |  |

| Rastrigin |  |
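
For readers unfamiliar with these objectives, the sketch below gives the textbook forms of three of them (Himmelblau, Rastrigin, and Ackley) as commonly stated, e.g. on Wikipedia. These are the usual minimization forms with their standard constants; the sketch makes no claim about how PyXAB scales, negates, or restricts them, and the function names are illustrative.

```python
import math


def himmelblau(x, y):
    # Two-dimensional; one of its four global minima is at (3, 2).
    return (x**2 + y - 11) ** 2 + (x + y**2 - 7) ** 2


def rastrigin(point, a=10.0):
    # n-dimensional; global minimum 0 at the origin.
    return a * len(point) + sum(x**2 - a * math.cos(2 * math.pi * x) for x in point)


def ackley(point, a=20.0, b=0.2, c=2 * math.pi):
    # n-dimensional; global minimum 0 at the origin.
    n = len(point)
    mean_sq = sum(x**2 for x in point) / n
    mean_cos = sum(math.cos(c * x) for x in point) / n
    return -a * math.exp(-b * math.sqrt(mean_sq)) - math.exp(mean_cos) + a + math.e


print(himmelblau(3, 2))
print(rastrigin([0.0, 0.0]))
print(ackley([0.0, 0.0]))
```

Because a bandit algorithm maximizes reward, a minimization objective like these would typically be negated before being used as a reward signal.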

We appreciate all forms of help and contributions, including but not limited to

- Star and watch our project

- Open an issue for any bugs you find or features you want to add to our library

- Fork our project and submit a pull request with your code

Please read the contributing instructions before submitting a pull request.

If you use our package in your research or projects, we kindly ask you to cite our work:

    @misc{Li2023PyXAB,
      doi = {10.48550/ARXIV.2303.04030},
      url = {https://arxiv.org/abs/2303.04030},
      author = {Li, Wenjie and Li, Haoze and Honorio, Jean and Song, Qifan},
      title = {PyXAB -- A Python Library for $\mathcal{X}$-Armed Bandit and Online Blackbox Optimization Algorithms},
      publisher = {arXiv},
      year = {2023},
    }

We would also appreciate it if you could cite our related works:

    @article{li2021optimum,
      title={Optimum-statistical Collaboration Towards General and Efficient Black-box Optimization},
      author={Li, Wenjie and Wang, Chi-Hua and Song, Qifan and Cheng, Guang},
      journal={arXiv preprint arXiv:2106.09215},
      year={2021}
    }

    @misc{li2022Federated,
      doi = {10.48550/ARXIV.2205.15268},
      url = {https://arxiv.org/abs/2205.15268},
      author = {Li, Wenjie and Song, Qifan and Honorio, Jean and Lin, Guang},
      title = {Federated X-Armed Bandit},
      publisher = {arXiv},
      year = {2022},
    }