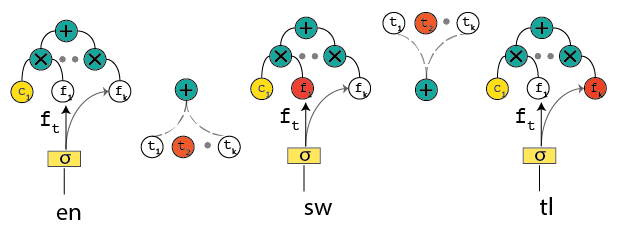

Y-Flow is an extension of the [MatchZoo](https://github.com/faneshion/MatchZoo) toolkit for text matching. At Yale, we are developing a text matching system for question answering, document ranking, paraphrase identification, and machine translation. The figure above depicts a surprise language arriving at our system; we plan to use zero-shot learning approaches for transfer learning, particularly for syntax.

| Task | Text 1 | Text 2 | Objective | Author |
|---|---|---|---|---|
| Transfer Learning | ranking 1 | ranking 2 | ranking | yflow |
| Paraphrase Identification | string 1 | string 2 | classification | matchzoo |
| Sentiment Analysis | product | review | classification | yflow |
| Sentence Matching | sentence 1 | sentence 2 | score | yflow |
| Textual Entailment | text | hypothesis | classification | matchzoo |
| Question Answering | question | answer | classification/ranking | matchzoo |
| Conversation | dialog | response | classification/ranking | matchzoo |
| Information Retrieval | query | document | ranking | yflow |

Please clone the repository and run:

```bash
conda install -c anaconda tensorflow-gpu
git clone https://github.com/javiddadashkarimi/Y-Flow.git
cd Y-Flow
python setup.py install
# hide TensorFlow INFO and WARNING log messages
export TF_CPP_MIN_LOG_LEVEL=2
```

Running `python setup.py install` in the main directory installs the dependencies automatically. Alternatively, install them with `pip install -r requirements.txt`.

Then install trec_eval, which is needed to run the system. Visit IndriBuildIndex to install the IndriBuildIndex application.

To unify the different text matching formats, the project represents data with the following files:

- Word Dictionary: records the mapping from each word to a unique identifier called `wid`. Words that are too frequent (e.g., stopwords), too rare, or noisy (e.g., fax numbers) can be filtered out by predefined rules.
- Corpus File: records the mapping from each text to a unique identifier called `tid`, along with the sequence of word identifiers contained in that text. Each text is truncated or padded to a fixed length chosen by the user.
- Relation File: stores the relationship between two texts; each line contains a pair of `tid`s and the corresponding label.
- Detailed Input Data Format: a detailed explanation of the input data format can be found in `Y-Flow/data/example/readme.md`.
- Example: to build the index and generate training data for document ranking, run:

  ```bash
  IndriBuildIndex index.param
  sh generate_ranking_data.sh
  ```
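As a rough illustration of how the three files relate, the sketch below builds a word dictionary, fixed-length corpus entries, and a relation line from two toy texts. The stopword filter, the padding id `0`, and the whitespace-separated line layouts (`<tid> <wid> ...` and `<label> <tid1> <tid2>`) are assumptions for illustration; the authoritative format is the one described in `Y-Flow/data/example/readme.md`.

```python
from collections import Counter

PAD_WID = 0  # assumed padding id; wids for real words start at 1


def build_word_dict(texts, stopwords=(), min_count=1):
    """Assign a wid to every word that survives the filtering rules."""
    counts = Counter(w for text in texts for w in text.split())
    word_dict = {}
    for word in sorted(counts):
        # Drop stopwords (too frequent) and words seen fewer than min_count times.
        if word in stopwords or counts[word] < min_count:
            continue
        word_dict[word] = len(word_dict) + 1
    return word_dict


def to_wids(text, word_dict, max_len):
    """Map a text to wids, skip filtered words, then truncate or pad to max_len."""
    wids = [word_dict[w] for w in text.split() if w in word_dict][:max_len]
    return wids + [PAD_WID] * (max_len - len(wids))


texts = {
    "T1": "what is zero shot learning",
    "T2": "zero shot learning transfers knowledge",
}
word_dict = build_word_dict(texts.values(), stopwords={"is"})
corpus = {tid: to_wids(text, word_dict, max_len=5) for tid, text in texts.items()}

# Assumed line layouts for the corpus file and the relation file.
corpus_lines = [tid + " " + " ".join(map(str, wids)) for tid, wids in corpus.items()]
relation_lines = ["1 T1 T2"]  # label 1: T2 is relevant to T1
print("\n".join(corpus_lines + relation_lines))
```

Here `T1` is padded because one of its words is filtered out, while any text longer than `max_len` words would be truncated.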

In the model construction module, we use the Keras library so that users can conveniently build a deep matching model layer by layer. Keras provides a set of common layers widely used in neural models, such as convolutional, pooling, and dense layers. To further facilitate the construction of deep text matching models, we extend Keras with layer interfaces designed specifically for text matching.

Moreover, the toolkit implements two schools of representative deep text matching models: representation-focused models and interaction-focused models [Guo et al.].
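To make the distinction between the two schools concrete, here is a toy sketch using hand-made 2-d word vectors (an assumption; real models learn embeddings and stack neural layers on top). A representation-focused model encodes each text into a single vector and compares the vectors only at the end, whereas an interaction-focused model first compares every word pair and then learns matching patterns from the resulting interaction matrix. The names `EMB`, `representation_score`, and `interaction_matrix` are hypothetical.

```python
import math

# Hypothetical toy embeddings, for illustration only.
EMB = {
    "cheap": [1.0, 0.0],
    "inexpensive": [0.9, 0.1],
    "flights": [0.0, 1.0],
    "hotels": [0.2, 0.8],
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def representation_score(text1, text2):
    """Representation-focused: encode each text separately, then compare once."""
    def encode(text):
        vecs = [EMB[w] for w in text.split()]
        # Mean of the word vectors, one dimension at a time.
        return [sum(dim) / len(vecs) for dim in zip(*vecs)]
    return cosine(encode(text1), encode(text2))


def interaction_matrix(text1, text2):
    """Interaction-focused: compare every word pair first; a model would then read this matrix."""
    return [[cosine(EMB[a], EMB[b]) for b in text2.split()] for a in text1.split()]


print(round(representation_score("cheap flights", "inexpensive hotels"), 3))
for row in interaction_matrix("cheap flights", "inexpensive hotels"):
    print([round(x, 3) for x in row])
```

The interaction matrix keeps word-level evidence (e.g., that "cheap" aligns with "inexpensive") that the single averaged vectors blur together, which is why models such as MatchPyramid treat it like an image.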

For training deep matching models, the toolkit provides a variety of objective functions for regression, classification, and ranking. For example, the ranking objectives include several well-known pointwise, pairwise, and listwise losses. Users can choose different objective functions for optimization in the training phase. Once a model has been trained, the toolkit can be used to produce a matching score, predict a matching label, or rank target texts (e.g., documents) against an input text.
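For intuition about the three families of ranking losses, the sketch below computes one common example of each on plain Python floats: squared error (pointwise), a margin-based hinge loss over a relevant/irrelevant pair (pairwise), and softmax cross-entropy over a candidate list (listwise). These formulas and the margin of 1.0 are illustrative choices, not necessarily the exact losses or defaults shipped with the toolkit.

```python
import math


def pointwise_mse(scores, labels):
    """Pointwise: each (score, label) pair is judged on its own."""
    return sum((s - y) ** 2 for s, y in zip(scores, labels)) / len(scores)


def pairwise_hinge(pos_score, neg_score, margin=1.0):
    """Pairwise: the relevant text should outscore the irrelevant one by at least `margin`."""
    return max(0.0, margin - pos_score + neg_score)


def listwise_softmax_ce(scores, labels):
    """Listwise: softmax over the whole candidate list, cross-entropy against normalized labels."""
    max_s = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - max_s) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    label_sum = sum(labels)
    return -sum((y / label_sum) * math.log(p) for y, p in zip(labels, probs) if y > 0)


print(pointwise_mse([0.8, 0.1], [1.0, 0.0]))
print(pairwise_hinge(0.5, 0.2))
print(listwise_softmax_ce([2.0, 0.5, 0.1], [1, 0, 0]))
```

The pairwise loss is zero once the relevant text wins by the margin, so training focuses on pairs that are still ordered incorrectly or too close.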

For the document ranking example, you can run:

```bash
# to trigger the machine translation-involved version:
python material.py -src en -tgt sw -c en -m mt

# to trigger the Google Translate-involved version:
python material.py -src en -tgt tl -c tl -m google

# add a query list with -q to show detailed results:
python material.py -src en -tgt tl -c tl -m google -q query991 query306
```

The options accepted by `material.py`:

- `--source`, `-src` (default `en`): source language [sw, tl, en]
- `--target`, `-tgt` (default `sw`): target language [sw, tl, en]
- `--collection`, `-c` (default `en`): language of the documents [sw, tl, en]
- `--out`, `-o` (default `en`): output language [sw, tl, en]
- `--method`, `-m` (default `mt`): method [mt, google, wiktionary, fastext]
- `--query_list`, `-q` (zero or more values): IDs of the queries whose results to check
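The option list above follows Python's `argparse` conventions, so a parser accepting the same flags can be sketched as below. This is a reconstruction from the listed option strings, defaults, and help texts, not a copy of `material.py`; the helper name `build_parser` is hypothetical, and how the script uses the parsed values is not shown.

```python
import argparse


def build_parser():
    """Rebuild a parser with the option strings, defaults, and help texts listed above."""
    parser = argparse.ArgumentParser(description="material.py options (reconstructed sketch)")
    parser.add_argument("--source", "-src", default="en", help="source language [sw,tl,en]")
    parser.add_argument("--target", "-tgt", default="sw", help="target language [sw,tl,en]")
    parser.add_argument("--collection", "-c", default="en", help="language of documents [sw,tl,en]")
    parser.add_argument("--out", "-o", default="en", help="output language [sw,tl,en]")
    parser.add_argument("--method", "-m", default="mt", help="method [mt,google,wiktionary,fastext]")
    # nargs="*" collects zero or more query IDs into a list (None if -q is absent).
    parser.add_argument("--query_list", "-q", nargs="*", help="check query result [query Id list]")
    return parser


# Same flags as the third example command.
args = build_parser().parse_args(
    ["-src", "en", "-tgt", "tl", "-c", "tl", "-m", "google", "-q", "query991", "query306"]
)
print(args)
```

`argparse` accepts multi-character single-dash options such as `-src`, and derives each attribute name from the first long option (`args.source`, `args.query_list`).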

- DRMM: this model is an implementation of "A Deep Relevance Matching Model for Ad-hoc Retrieval".
- MatchPyramid: this model is an implementation of "Text Matching as Image Recognition".
- ARC-I: this model is an implementation of "Convolutional Neural Network Architectures for Matching Natural Language Sentences".
- DSSM: this model is an implementation of "Learning Deep Structured Semantic Models for Web Search using Clickthrough Data".
- CDSSM: this model is an implementation of "Learning Semantic Representations Using Convolutional Neural Networks for Web Search".
- ARC-II: this model is an implementation of "Convolutional Neural Network Architectures for Matching Natural Language Sentences".
- MV-LSTM: this model is an implementation of "A Deep Architecture for Semantic Matching with Multiple Positional Sentence Representations".
- aNMM: this model is an implementation of "aNMM: Ranking Short Answer Texts with Attention-Based Neural Matching Model".
- DUET: this model is an implementation of "Learning to Match Using Local and Distributed Representations of Text for Web Search".
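As an example of what an interaction-focused model computes, DRMM turns each query term's cosine similarities with the document terms into a fixed-length matching histogram, which it then feeds through a feed-forward network combined with a term gating network. The sketch below builds count-based histograms with four equal bins over [-1, 1) plus a separate exact-match bin (a bin size of 0.5, as in the paper's illustration, to the best of our reading). The toy vectors and the helper names `matching_histogram` and `log_count_histogram` are hypothetical, and this is not the toolkit's own implementation.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def matching_histogram(q_vec, doc_vecs, num_bins=4):
    """Count-based histogram: num_bins equal bins over [-1, 1) plus one exact-match bin."""
    hist = [0] * (num_bins + 1)
    for d_vec in doc_vecs:
        sim = cosine(q_vec, d_vec)
        if sim >= 1.0 - 1e-9:
            hist[-1] += 1  # exact-match bin [1, 1]
        else:
            idx = int((sim + 1.0) / 2.0 * num_bins)
            hist[max(0, min(idx, num_bins - 1))] += 1
    return hist


def log_count_histogram(hist):
    """Log-count variant: log(1 + count) per bin, which dampens large counts."""
    return [math.log1p(c) for c in hist]


query_term = [1.0, 0.0]
doc_terms = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.6, 0.8]]
hist = matching_histogram(query_term, doc_terms)
print(hist)
print(log_count_histogram(hist))
```

A full query yields one such histogram per query term, so the model input has a fixed size regardless of document length.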

Models under development: Match-SRNN, DeepRank, K-NRM, ...

| Author | Affiliation | Task | Page |
|---|---|---|---|
| Irene Li | Yale University | Information Retrieval | GitHub |
| Caitlin Westerfield | Yale University | Transfer Learning | GitHub |
| Gaurav Pathak | Yale University | Zero-shot Learning | GitHub |
| Javid Dadashkarimi | Yale University | Organizer | GitHub |
| Dragomir Radev | Yale University | Adviser | GitHub |

- python 2.7+
- tensorflow 1.4.1+
- keras 2.1.3+
- nltk 3.2.2+
- tqdm 4.19.4+
- h5py 2.7.1+
- indri 5.7