Portable implementation of variational autoencoders (and some variants). This tool can be used to quickly view learned latent representations of user-provided images.

Try it now on 1000 frames from the Atari game Beam Rider (provided as `sample_dataset`) by running `python main.py --mse --beta 2 --epochs 50 --path sample_dataset -s vae_beam.h5`.
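The `--mse` and `--beta` flags in that command point to a β-VAE objective with a squared-error reconstruction term. The sketch below assumes that `--beta` multiplies the KL term and `--mse` selects a summed squared-error reconstruction loss. The tool's own loss may average or scale these terms differently, and `beta_vae_loss` is a hypothetical helper, not part of the tool.

```python
import math

def beta_vae_loss(x, x_recon, mu, logvar, beta=2.0):
    """Sketch of a beta-VAE loss for one example (flattened lists of floats).

    Assumption: --mse means a squared-error reconstruction term and --beta
    scales the KL term; the tool's actual implementation may differ.
    """
    # Reconstruction term: sum of squared errors over all pixels.
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    # Closed-form KL( N(mu, exp(logvar)) || N(0, I) ), summed over latent dims.
    kl = -0.5 * sum(1 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, logvar))
    # beta > 1 pushes the posterior toward the prior, trading reconstruction
    # quality for a more factorized latent code.
    return recon + beta * kl, recon, kl

total, recon, kl = beta_vae_loss([0.0, 1.0], [0.0, 0.5],
                                 mu=[1.0, 0.0], logvar=[0.0, 0.0], beta=2.0)
print(total, recon, kl)  # → 1.25 0.25 0.5
```

Raising `beta` makes the KL term more expensive, which tends to encourage disentangled factors but, if pushed too far, can also drive latent dimensions to collapse onto the prior.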

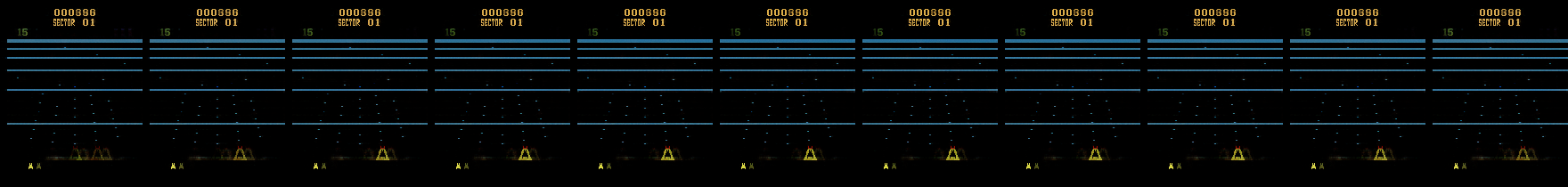

Above: reconstructions generated by varying latent factor 22.
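A traversal like this one can be sketched as: take a base latent code, copy it once per step, and overwrite only the chosen dimension with values spread over a range. The 32-dimensional latent size, the [-3, 3] range, zero-based indexing of factor 22, and the `decoder` function are all assumptions for illustration; the helpers are hypothetical, not the tool's API.

```python
def linspace(start, stop, num):
    """Evenly spaced floats from start to stop inclusive (num >= 2)."""
    if num < 2:
        raise ValueError("num must be at least 2")
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]

def latent_traversal(z, index, values):
    """Return copies of latent code z with z[index] replaced by each value.

    All other latent dimensions stay fixed, so differences between the decoded
    images can be attributed to the single varied factor.
    """
    if not 0 <= index < len(z):
        raise IndexError(f"latent index {index} out of range for {len(z)} dims")
    traversal = []
    for v in values:
        zc = list(z)  # copy so the base code is left unchanged
        zc[index] = v
        traversal.append(zc)
    return traversal

z_base = [0.0] * 32  # hypothetical 32-dim latent code (e.g. the prior mean)
codes = latent_traversal(z_base, 22, linspace(-3.0, 3.0, 7))
# images = [decoder(c) for c in codes]  # `decoder` is hypothetical
```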

Reasons to use this include:

- Learn low-dimensional latent representations and use them as features for downstream tasks.

- Gain intuition for how different types of VAEs and different parameters affect the latent representations learned from a user-provided image dataset.

- Explore the limits of using VAEs reliably for a user-provided dataset. For example, identify parameter bounds to avoid latent variable collapse.
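One common way to detect latent variable collapse is to count "active units": a latent dimension is considered active if the variance of its posterior mean across the dataset exceeds a small threshold (0.01 is a frequently used value). The sketch below assumes you already have the encoder's mean vector for each image; the function name and threshold are illustrative, not necessarily how this tool measures collapse.

```python
from statistics import pvariance

def active_units(posterior_means, threshold=0.01):
    """Indices of latent dims whose posterior mean varies across the dataset.

    posterior_means: list of per-image encoder mean vectors mu(x).
    A dimension whose mean barely changes with the input carries almost no
    information about the image, a symptom of latent variable collapse.
    """
    n_dims = len(posterior_means[0])
    active = []
    for k in range(n_dims):
        column = [mu[k] for mu in posterior_means]
        if pvariance(column) > threshold:
            active.append(k)
    return active

means = [[0.9, 0.0, -1.2], [-1.1, 0.001, 0.8], [0.2, -0.001, 0.1]]
print(active_units(means))  # → [0, 2]
```

Sweeping a parameter such as `--beta` and recording the number of active units is one way to locate the bounds beyond which dimensions start to collapse.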

New or improved functionality includes:

- Support for arbitrary image types (previously MNIST only)

- Support for color images (previously primarily black-and-white/grayscale)

- Support for a flexible, user-specified VAE architecture (previously a hard-coded architecture intended only for MNIST)

- (ongoing) Shape matching between the encoder and decoder sections (previously none, which led to shape-mismatch errors on non-MNIST datasets)

- (ongoing) Viewing reconstructions from the latent factors as one latent activation is varied while all others are held fixed (new)

- (ongoing) Returning the M most disentangled latent factors, along with the reconstruction images from the previous item for these factors (new)
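Encoder/decoder shape matching can be sketched as follows, assuming a decoder that mirrors the encoder with transposed convolutions. Each strided convolution floors its output size, so the mirrored transposed convolution can come back a pixel short; the gap can be closed with the `output_padding` argument that both Keras `Conv2DTranspose` and PyTorch `ConvTranspose2d` accept. The formulas use explicit integer padding (PyTorch style; Keras uses `"valid"`/`"same"` instead), and the 84-pixel input, layer list, and helper names are hypothetical, not this tool's code.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a 2D convolution (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

def deconv_out(size, kernel, stride, padding, output_padding=0):
    """Spatial output size of a 2D transposed convolution."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

def match_decoder(input_size, layers):
    """Trace encoder sizes, then choose output_padding for each mirrored
    decoder layer so the decoder reproduces every encoder size exactly.

    layers: list of (kernel, stride, padding) tuples for the encoder.
    Returns (encoder sizes, output_padding per decoder layer in decoder order).
    """
    sizes = [input_size]
    for k, s, p in layers:
        sizes.append(conv_out(sizes[-1], k, s, p))
    paddings = []
    # Decoder layer j mirrors encoder layer i = len(layers) - 1 - j and must
    # map sizes[i + 1] back to sizes[i].
    for i in reversed(range(len(layers))):
        k, s, p = layers[i]
        # The shortfall is the remainder dropped by the encoder's floor
        # division, so it always lies in [0, stride).
        paddings.append(sizes[i] - deconv_out(sizes[i + 1], k, s, p))
    return sizes, paddings

sizes, paddings = match_decoder(84, [(4, 2, 1)] * 3)
print(sizes, paddings)  # → [84, 42, 21, 10] [1, 0, 0]
```

Here the third encoder layer maps 21 pixels to 10, but the mirrored transposed convolution maps 10 back to only 20, so the first decoder layer needs `output_padding=1`.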