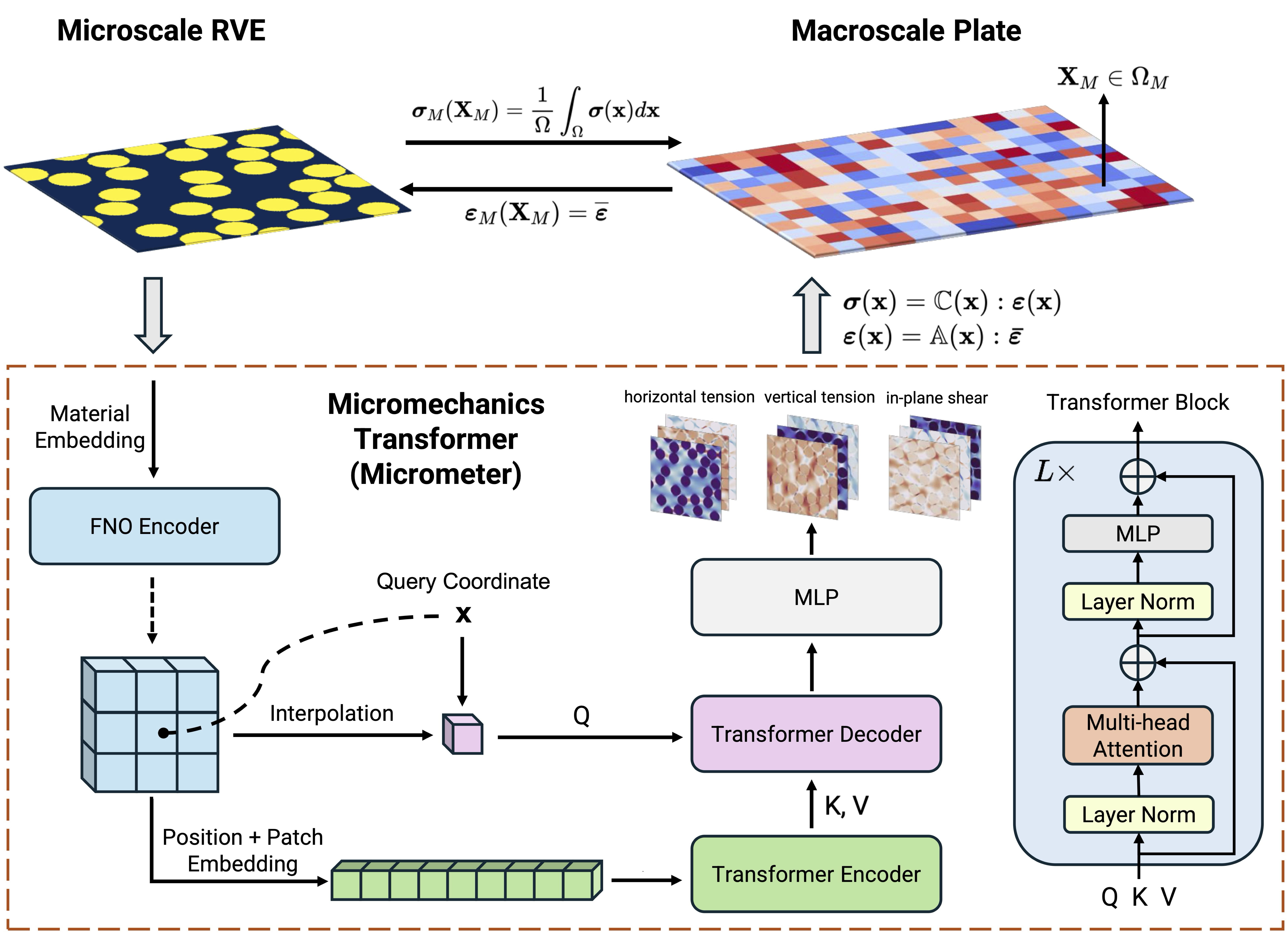

Micrometer: Micromechanics Transformer for Predicting the Mechanical Response of Heterogeneous Materials

Code for the paper "Micrometer: Micromechanics Transformer for Predicting Mechanical Responses of Heterogeneous Materials".

Our code has been tested in a Linux environment with the following configuration:

- Python 3.9

- CUDA 12.4

- CUDNN 8.9

- JAX 0.4.26
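
Before installing, it can help to compare the local environment against the tested configuration listed above. The following is a minimal sketch that uses only the Python standard library. The helper names `installed_version` and `environment_report` are ours and are not part of the repository, and the script only reports versions rather than enforcing them.

```python
import sys
from importlib import metadata


def installed_version(package: str):
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def environment_report() -> dict:
    """Collect the versions relevant to the tested configuration."""
    return {
        "python": f"{sys.version_info.major}.{sys.version_info.minor}",
        "jax": installed_version("jax"),
        "jaxlib": installed_version("jaxlib"),
    }


if __name__ == "__main__":
    # Tested configuration from this README: Python 3.9, JAX 0.4.26.
    for name, version in environment_report().items():
        print(f"{name}: {version or 'not installed'}")
```

CUDA and CUDNN versions are not visible to `importlib.metadata`, so they are left out of this report.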

First, clone the repository:

git clone https://github.com/PredictiveIntelligenceLab/micrometer.git

cd micrometer

Then, install the required packages:

pip3 install -U pip

pip3 install --upgrade jax jaxlib

pip3 install --upgrade -r requirements.txt

Finally, install the package:

pip3 install -e .

Our dataset can be downloaded from the following Google Drive links:

| Name | Link |
|---|---|
| CMME | Link |
| Homogenization | Link |
| Multiscale Modelling | Link |
| Transfer Learning | Link |

First, place the downloaded dataset in a directory of your choice and set the data path in the corresponding config file, e.g. in configs/base.py:

dataset.data_path = "path/to/dataset"
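
A mistyped data path usually only surfaces once training starts, so it may be worth validating the directory first. The sketch below is one possible check, assuming the dataset lives in a single directory. `resolve_data_path` is a hypothetical helper and not part of the repository.

```python
from pathlib import Path


def resolve_data_path(data_path: str) -> Path:
    """Expand and validate a dataset directory before it is written into a config."""
    path = Path(data_path).expanduser().resolve()
    if not path.is_dir():
        raise FileNotFoundError(f"Dataset directory not found: {path}")
    return path


# Possible use inside a config file (assumption, shown as a comment only):
# dataset.data_path = str(resolve_data_path("path/to/dataset"))
```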

Then, to train our Micrometer (e.g. cvit_b_16), run the following command:

python3 main.py --config=configs/base.py:cvit_b_16

To train a different model, change the model name in the above command, e.g. from cvit_b_16 to cvit_l_8. We also provide UNet and FNO backbones in several configurations, which can be found in configs/models.py.
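
The `--config` value pairs a config file with a model name, separated by a colon. This resembles the `file.py:argument` convention of `ml_collections` config flags, although whether the repository relies on that library is our assumption. The sketch below only illustrates how such a value splits, and `split_config_flag` is a hypothetical helper.

```python
from typing import Tuple


def split_config_flag(value: str) -> Tuple[str, str]:
    """Split 'configs/base.py:cvit_b_16' into (config file, model name)."""
    config_file, sep, model_name = value.partition(":")
    if not sep or not config_file or not model_name:
        raise ValueError(f"Expected '<config file>:<model name>', got {value!r}")
    return config_file, model_name


print(split_config_flag("configs/base.py:cvit_b_16"))
# -> ('configs/base.py', 'cvit_b_16')
```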

To restrict training to specific GPU devices (here, GPUs 0 to 3), use the following command:

CUDA_VISIBLE_DEVICES=0,1,2,3 python3 main.py --config=configs/base.py:cvit_b_16
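
When runs are launched from a Python script rather than the shell, the same device selection can be passed through the process environment. This is a minimal sketch, not part of the repository. `gpu_env` is a hypothetical helper, and the `subprocess` call is left as a comment so that nothing is launched by accident.

```python
import os
from typing import Dict, Sequence


def gpu_env(device_ids: Sequence[int]) -> Dict[str, str]:
    """Return a copy of the current environment restricted to the given GPUs."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in device_ids)
    return env


env = gpu_env([0, 1, 2, 3])
command = ["python3", "main.py", "--config=configs/base.py:cvit_b_16"]
# To launch from the repository root (not executed here):
# import subprocess; subprocess.run(command, env=env, check=True)
```

Copying `os.environ` rather than modifying it keeps the device restriction local to the launched process.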

To evaluate the trained model (e.g. cvit_b_16), run the following command:

python3 main.py --config=configs/base.py:cvit_b_16 --config.mode=eval

With the pre-trained model, we can perform homogenization by running the following command:

python3 main.py --config=configs/homo.py:cvit_b_16

With the pre-trained model, we can also perform multiscale modelling by running the following command:

python3 main.py --config=configs/multiscale.py:cvit_b_16

We can also fine-tune our pre-trained model on new datasets. The fine-tuning runs are configured in finetune_vf.py or finetune_ch.py, for example:

python3 main.py --config=configs/finetune_ch.py:cvit_b_16
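
The commands above share one shape: a config file, a model name after the colon, and optionally `--config.mode=eval`. The sketch below assembles them programmatically, which may be convenient for scripting several runs. The workflow labels and `build_command` are our own naming, and only config files named in this README are included.

```python
from typing import List, Optional

# Config files named in this README, keyed by hypothetical workflow labels.
WORKFLOW_CONFIGS = {
    "train": "configs/base.py",
    "homogenization": "configs/homo.py",
    "multiscale": "configs/multiscale.py",
    "finetune_ch": "configs/finetune_ch.py",
}


def build_command(
    workflow: str, model: str = "cvit_b_16", mode: Optional[str] = None
) -> List[str]:
    """Assemble the main.py invocation for a workflow as an argument list."""
    command = ["python3", "main.py", f"--config={WORKFLOW_CONFIGS[workflow]}:{model}"]
    if mode is not None:
        command.append(f"--config.mode={mode}")
    return command


for workflow in WORKFLOW_CONFIGS:
    print(" ".join(build_command(workflow)))
print(" ".join(build_command("train", mode="eval")))
```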

We provide pre-trained model checkpoints for the best configurations. These checkpoints can be used to initialize the model for further training or evaluation.

| Model Configuration | Checkpoint |
|---|---|
| Micrometer-L-8 | Link |
| Micrometer-L-16 | Link |

Our work is motivated by our previous research on CViT. If you find this work useful, please consider citing the following papers:

@article{wang2024bridging,

title={Bridging Operator Learning and Conditioned Neural Fields: A Unifying Perspective},

author={Wang, Sifan and Seidman, Jacob H and Sankaran, Shyam and Wang, Hanwen and Pappas, George J and Perdikaris, Paris},

journal={arXiv preprint arXiv:2405.13998},

year={2024}

}

@article{wang2024micrometer,

title={Micrometer: Micromechanics Transformer for Predicting Mechanical Responses of Heterogeneous Materials},

author={Wang, Sifan and Liu, Tong-Rui and Sankaran, Shyam and Perdikaris, Paris},

journal={arXiv preprint arXiv:2410.05281},

year={2024}

}