Code and data for the paper "NTK-Guided Few-Shot Class Incremental Learning" (accepted by IEEE TIP).

Before starting, familiarize yourself with the self-supervised learning repositories listed below. Because they are designed for 224x224 inputs, you will need to adapt them to the smaller images used in FSCIL (Few-Shot Class-Incremental Learning) benchmarks such as CIFAR100, Mini-ImageNet, and ImageNet100. After making these modifications, run self-supervised pre-training on the base session of each dataset for 1000 epochs to ensure convergence, and save the resulting pre-trained weights.
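To see why this adaptation matters, the sketch below computes the final feature-map size of a torchvision-style ResNet (such as ResNet18) and the number of ViT patch tokens for 224x224 versus 32x32 inputs. The small-image stem (a 3x3 stride-1 convolution with no max-pooling) and the patch size of 4 are common choices for CIFAR-scale images and are used here as illustrative assumptions, not as the exact modifications made in this repository.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_final_resolution(img_size: int, small_image_stem: bool = False) -> int:
    """Side length of the last feature map of a torchvision-style ResNet (e.g. ResNet18)."""
    if small_image_stem:
        # Assumed small-image stem: 3x3 conv, stride 1, padding 1, and no max-pooling.
        size = conv_out(img_size, kernel=3, stride=1, padding=1)
    else:
        # Standard ImageNet stem: 7x7 conv with stride 2, then 3x3 max-pool with stride 2.
        size = conv_out(img_size, kernel=7, stride=2, padding=3)
        size = conv_out(size, kernel=3, stride=2, padding=1)
    # The first residual stage keeps the resolution; the next three each halve it
    # with a stride-2 3x3 convolution (padding 1).
    for _ in range(3):
        size = conv_out(size, kernel=3, stride=2, padding=1)
    return size


def vit_num_tokens(img_size: int, patch_size: int) -> int:
    """Number of non-overlapping patch tokens (excluding any [CLS] token)."""
    if img_size % patch_size != 0:
        raise ValueError("image size must be divisible by the patch size")
    return (img_size // patch_size) ** 2


for size, stem in [(224, False), (32, False), (32, True)]:
    side = resnet_final_resolution(size, stem)
    print(f"ResNet {size}x{size}, small_image_stem={stem}: final map {side}x{side}")
for size, patch in [(224, 16), (32, 16), (32, 4)]:
    print(f"ViT {size}x{size}, patch {patch}: {vit_num_tokens(size, patch)} tokens")
```

With the standard stem, a 32x32 image shrinks to a 1x1 map before the classifier, and 16x16 patches yield only 4 tokens; the smaller stem and patch size keep a 4x4 map and 64 tokens.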

- MAE: Learns image representations by masking random patches of the input image and reconstructing the missing information.

- SparK: Sparse and hierarchical masked modeling that brings BERT-style masked pre-training to convolutional networks.

- DINO: A self-distillation framework where a student network learns from a teacher network to generate consistent features across views.

- MoCo-v3: The third version of Momentum Contrast (MoCo), which refines contrastive learning and extends it to Vision Transformers.

- SimCLR: A simple yet effective framework for contrastive learning using image augmentations.

- BYOL: Learns representations without negative samples by training an online network to predict the output of a slowly updated target network on a different augmented view.
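DINO, MoCo-v3, and BYOL all keep a teacher (also called momentum or target) network whose weights are an exponential moving average of the student (online) network. A minimal sketch of that update on plain Python lists follows; real implementations apply it to every parameter tensor, and the default momentum of 0.996 is only an illustrative assumption, since each method uses its own value and often a schedule.

```python
from typing import List


def ema_update(teacher: List[float], student: List[float], momentum: float = 0.996) -> List[float]:
    """Update teacher weights in place: teacher <- m * teacher + (1 - m) * student."""
    if len(teacher) != len(student):
        raise ValueError("teacher and student must have the same number of parameters")
    if not 0.0 <= momentum <= 1.0:
        raise ValueError("momentum must lie in [0, 1]")
    for i, (t, s) in enumerate(zip(teacher, student)):
        # The teacher receives no gradients; it only tracks the student slowly.
        teacher[i] = momentum * t + (1.0 - momentum) * s
    return teacher


# With m = 0.9, one update moves each teacher weight 10% of the way toward the student.
teacher = [1.0, 0.0]
student = [0.0, 1.0]
ema_update(teacher, student, momentum=0.9)
print(teacher)
```

A momentum close to 1 makes the teacher change slowly, which gives the student a stable target to match.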

Using CIFAR100 as an example, we have open-sourced the pre-trained weights obtained from these self-supervised frameworks, covering various network architectures such as ResNet18, ResNet12, ViT-Tiny, and ViT-Small. The specific Google Drive download link is: https://drive.google.com/drive/folders/1RhyhZXETrxZqCkVb7UhQMIoQWZJqLogs?usp=drive_link. You can directly download the pretrain_weights folder and place it in the root directory of the project. Alternatively, you can choose to perform the pre-training yourself and adjust the corresponding path in the load_self_pretrain_weights function within the utils.py file.
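If you pre-train the weights yourself, the checkpoint keys often will not match the backbone directly: wrappers such as DataParallel add a `module.` prefix, and self-supervised frameworks may store the encoder under a prefix and include projection or prediction heads that the FSCIL backbone does not use. The helper below is a hypothetical sketch of that key cleanup, not the repository's `load_self_pretrain_weights`; the prefix and head names are assumptions that depend on which framework produced the checkpoint.

```python
from typing import Dict, Iterable, TypeVar

V = TypeVar("V")


def clean_state_dict(
    state_dict: Dict[str, V],
    prefixes: Iterable[str] = ("module.", "backbone.", "encoder."),
    drop: Iterable[str] = ("head.", "projector.", "predictor."),
) -> Dict[str, V]:
    """Strip wrapper prefixes from checkpoint keys and drop SSL-only heads (hypothetical helper)."""
    prefixes = tuple(prefixes)
    drop = tuple(drop)
    cleaned: Dict[str, V] = {}
    for key, value in state_dict.items():
        # Remove any chain of known prefixes, e.g. "module.backbone.conv1.weight" -> "conv1.weight".
        changed = True
        while changed:
            changed = False
            for prefix in prefixes:
                if key.startswith(prefix):
                    key = key[len(prefix):]
                    changed = True
        # Skip projection/prediction heads that only exist during self-supervised training.
        if key.startswith(drop):
            continue
        cleaned[key] = value
    return cleaned
```

In PyTorch, the cleaned dictionary could then be passed to `model.load_state_dict(cleaned, strict=False)`; inspecting the missing and unexpected keys it returns is a quick way to confirm that the backbone weights actually loaded.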

To run NTK-FSCIL from source, follow these steps:

- Clone this repository locally.

- `cd` into the repository.

- Run `conda create -n FSCIL python=3.8` to create a conda environment named `FSCIL`.

- Activate the environment with `conda activate FSCIL`.

- Install the dependencies with `pip install -r requirements.txt`.

To get started, simply run the script using:

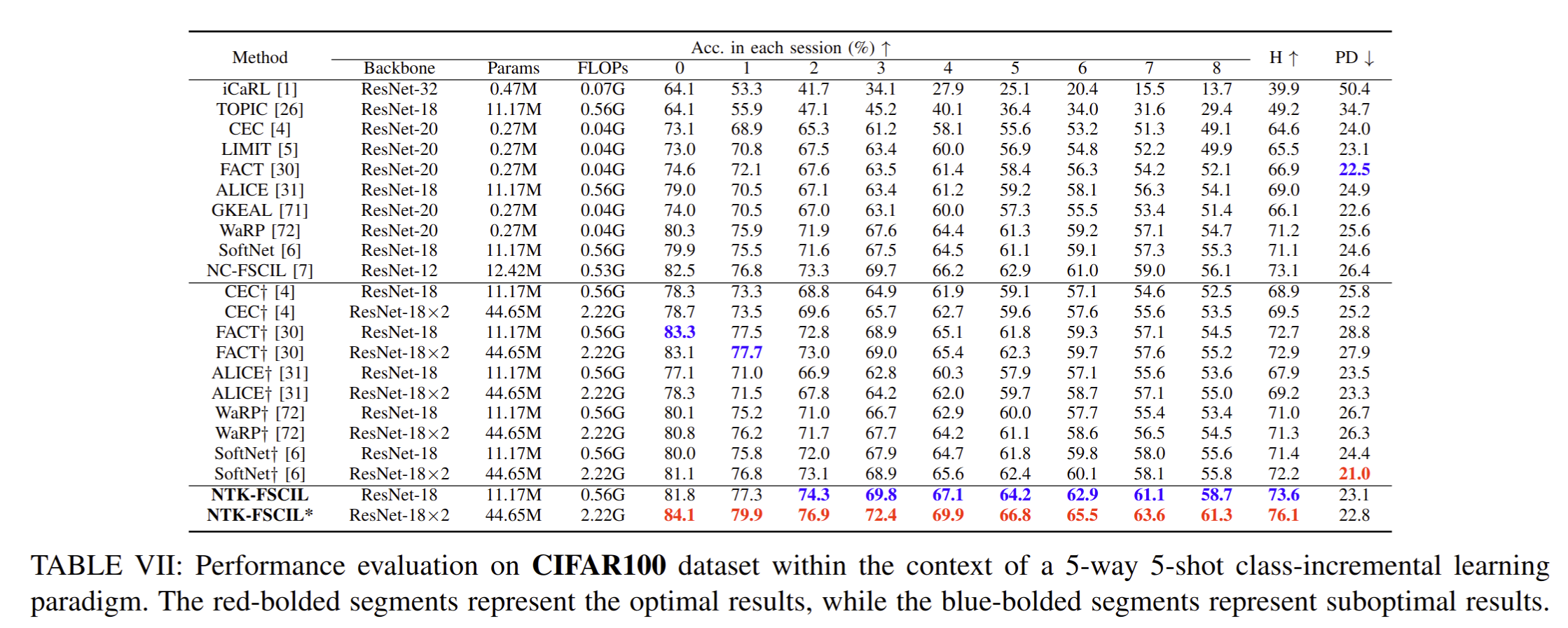

python cifar.pyThe results on CIFAR-100 are presented as follows:

We have incorporated a variety of configurable settings to offer flexibility for future users. These include:

- Datasets: `cifar100`, `cub200`, `miniimagenet`, `imagenet100`, `imagenet1000`

- Image Transformation Methods: `Normal`, `AMDIM`, `SimCLR`, `AutoAug`, `RandAug`

- Network Architectures: `resnet12`, `resnet18`, `vit_tiny`, `vit_small`

- Self-Supervised Pre-Trained Weights: `dino`, `spark`, `mae`, `moco-v3`, `simclr`, `byol`

- Alignment Losses: `curriculum`, `arcface`, `sphereface`, `cosface`, `crossentropy`
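Several of the alignment losses listed above (CosFace, ArcFace, SphereFace) differ mainly in how they apply a margin to the target-class logit, which is computed from the cosine similarity between a normalized feature and a normalized class prototype. The sketch below shows simplified textbook forms of these margins for a single target logit. The scale `s` and margin `m` values are illustrative assumptions, SphereFace is reduced to its plain cos(m·theta) form without the piecewise extension used in practice, the `curriculum` option is not covered, and the repository's own implementations may differ.

```python
import math


def margin_target_logit(cos_theta: float, loss: str, s: float, m: float) -> float:
    """Scaled logit for the ground-truth class under common margin-based softmax losses.

    Non-target classes keep the plain scaled cosine s * cos(theta).
    """
    # Clamp for numerical safety before taking the arccosine.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    theta = math.acos(cos_theta)
    if loss == "cosface":      # additive cosine margin: s * (cos(theta) - m)
        return s * (cos_theta - m)
    if loss == "arcface":      # additive angular margin: s * cos(theta + m)
        return s * math.cos(theta + m)
    if loss == "sphereface":   # multiplicative angular margin (simplified): s * cos(m * theta)
        return s * math.cos(m * theta)
    raise ValueError(f"unsupported loss: {loss}")


# Each margin lowers the target logit below the unmodified s * cos(theta),
# so the network must pull features closer to their class prototype to compensate.
for name, margin in [("cosface", 0.35), ("arcface", 0.5), ("sphereface", 4)]:
    print(name, margin_target_logit(0.8, name, s=30.0, m=margin), "vs", 30.0 * 0.8)
```

Practical ArcFace implementations also add a fallback for angles where theta + m exceeds pi, because cos(theta + m) stops decreasing there.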

You can modify these settings directly in the command for customized experiments.
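As an illustration of how these options might be exposed on the command line, the sketch below builds an `argparse` parser that accepts exactly the values listed above. The flag names (`--dataset`, `--transform`, `--arch`, `--pretrain`, `--loss`) and the defaults are hypothetical; check `cifar.py` for the arguments it actually defines.

```python
import argparse
from typing import List, Optional

# Allowed values copied from the README list; flag names and defaults are hypothetical.
OPTIONS = {
    "dataset": ["cifar100", "cub200", "miniimagenet", "imagenet100", "imagenet1000"],
    "transform": ["Normal", "AMDIM", "SimCLR", "AutoAug", "RandAug"],
    "arch": ["resnet12", "resnet18", "vit_tiny", "vit_small"],
    "pretrain": ["dino", "spark", "mae", "moco-v3", "simclr", "byol"],
    "loss": ["curriculum", "arcface", "sphereface", "cosface", "crossentropy"],
}


def parse_options(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Illustrative NTK-FSCIL experiment options")
    for name, values in OPTIONS.items():
        # argparse rejects any value outside `choices` with a usage error;
        # the default (first listed value) is an arbitrary choice for this sketch.
        parser.add_argument(f"--{name}", choices=values, default=values[0])
    return parser.parse_args(argv)


if __name__ == "__main__":
    args = parse_options(["--dataset", "cub200", "--arch", "vit_small", "--pretrain", "mae"])
    print(vars(args))
```

Restricting each flag with `choices` makes a typo such as `--arch resnet50` fail immediately instead of surfacing later during training.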

Note: We have removed the NTK constraint on the linear layers because that content will be included in a future paper; we apologize for the inconvenience. This change does not significantly affect performance and should not hinder further work building on this repository.
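For reference, the standard empirical neural tangent kernel of a network $f(\cdot;\theta)$ with parameters $\theta$ is the product of its parameter Jacobians at two inputs, shown below. This is the general textbook definition only; the specific NTK-guided objectives and the removed linear-layer constraint are described in the paper and are not reproduced here.

$$
\Theta_\theta(x, x') = \nabla_\theta f(x;\theta)\, \nabla_\theta f(x';\theta)^{\top}
$$

For a network with $K$ outputs, $\Theta_\theta(x, x')$ is a $K \times K$ matrix. In the infinite-width limit under the NTK parameterization, this kernel stays approximately constant during gradient-descent training, which is what makes it useful for analyzing convergence and generalization.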

If you find our work helpful, please cite it as follows:

@ARTICLE{10721322,
  author={Liu, Jingren and Ji, Zhong and Pang, Yanwei and Yu, Yunlong},
  journal={IEEE Transactions on Image Processing},
  title={NTK-Guided Few-Shot Class Incremental Learning},
  year={2024},
  volume={33},
  number={},
  pages={6029-6044},
  keywords={Power capacitors;Convergence;Training;Kernel;Optimization;Neural networks;Metalearning;Incremental learning;Jacobian matrices;Thermal stability;Few-shot class-incremental learning;neural tangent kernel;generalization;self-supervised learning},
  doi={10.1109/TIP.2024.3478854}
}

This project is released under the MIT License. See LICENSE.md for details.