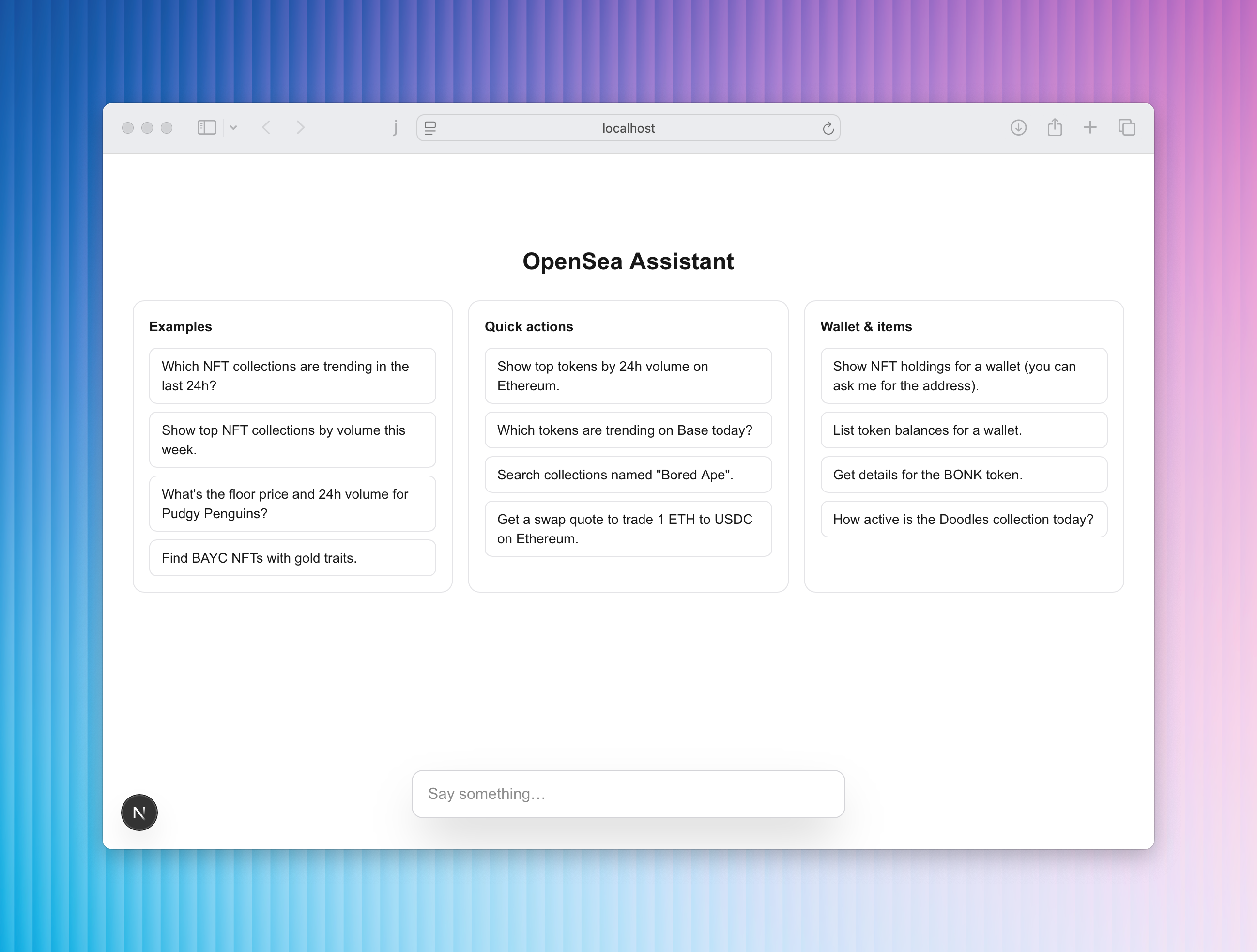

This is a minimal Next.js app showing how to connect the OpenSea MCP server to the Vercel AI SDK to build an AI experience that can search, analyze, and act on OpenSea marketplace data.

- AI runtime: Vercel AI SDK (`ai`, `@ai-sdk/react`, `@ai-sdk/openai`)
- MCP transport: SSE to `https://mcp.opensea.io/sse`
- Server route: `src/app/api/chat/route.ts`
- UI: `src/app/page.tsx` with `useChat` and a tool-call inspector

Full MCP docs: https://docs.opensea.io/docs/mcp

OpenSea MCP is a hosted server that exposes tools for AI agents to access NFT collections, items, token data, wallet balances, swap quotes, trending analytics, and more across major chains.

- Node 18+ and pnpm (or npm/yarn)
- OpenSea MCP access token
  - More information: https://docs.opensea.io/docs/mcp

```bash
pnpm install
# or
npm install
```

Create a `.env.local` file in the project root:

```bash
OPENAI_API_KEY=your_api_key_here
OPENSEA_BEARER_TOKEN=your_access_token_here
```

Environment variables:

- `OPENAI_API_KEY`: required by `@ai-sdk/openai` to run the selected OpenAI model (`gpt-5-mini` in this sample).
- `OPENSEA_BEARER_TOKEN`: required to authenticate requests to the OpenSea MCP server.

You can also use the provided `.env.sample` as a starting point.
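Because both variables are required, a route can fail fast with one clear error when either is missing instead of failing later inside the OpenAI or MCP call. The sketch below is illustrative and not part of the sample: `readServerConfig` is a hypothetical helper that takes an environment object (in the route you would pass `process.env`) and returns both values or throws.

```typescript
// Hypothetical helper (not part of the sample): checks the two required
// variables and returns them, throwing a descriptive error if any is missing.
type ServerConfig = {
  openaiApiKey: string;
  openseaBearerToken: string;
};

type Env = Record<string, string | undefined>;

function readServerConfig(env: Env): ServerConfig {
  // Treat whitespace-only values as missing.
  const openaiApiKey = env.OPENAI_API_KEY?.trim();
  const openseaBearerToken = env.OPENSEA_BEARER_TOKEN?.trim();

  if (!openaiApiKey || !openseaBearerToken) {
    // Report every missing variable at once rather than one per restart.
    const missing: string[] = [];
    if (!openaiApiKey) missing.push("OPENAI_API_KEY");
    if (!openseaBearerToken) missing.push("OPENSEA_BEARER_TOKEN");
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }

  // Both values are non-empty strings here, so TypeScript narrows them to `string`.
  return { openaiApiKey, openseaBearerToken };
}
```

Calling `readServerConfig(process.env)` at the top of the API route would surface a misconfigured deployment before any MCP connection is attempted.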

```bash
pnpm dev
# or
npm run dev
```

Open http://localhost:3000 and try one of the example prompts on the home page.

This sample uses the AI SDK's MCP client to fetch the tool list from the OpenSea MCP server on each request, then relies on the SDK's tool calling to let the model call those tools as needed.

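- The API route (`src/app/api/chat/route.ts`) creates an MCP client over SSE and forwards the tool list to the model:

  ```ts
  // snippet from src/app/api/chat/route.ts
  // AI SDK 5 exports the MCP client factory as experimental_createMCPClient.
  import { openai } from '@ai-sdk/openai';
  import {
    convertToModelMessages,
    experimental_createMCPClient as createMCPClient,
    streamText,
  } from 'ai';

  // Inside the POST handler; `messages` is the UIMessage[] array from the request body.
  const openSeaMCPClient = await createMCPClient({
    transport: {
      type: 'sse',
      url: 'https://mcp.opensea.io/sse',
      headers: {
        // The bearer token is read server-side from .env.local.
        Authorization: `Bearer ${process.env.OPENSEA_BEARER_TOKEN}`,
      },
    },
  });

  // Discover the tools the OpenSea MCP server exposes and pass them to the model.
  const tools = await openSeaMCPClient.tools();

  const result = streamText({
    model: openai('gpt-5-mini'),
    system: 'You are a helpful assistant specialized in NFTs and tokens.',
    messages: convertToModelMessages(messages),
    tools,
    toolChoice: 'auto', // the model decides when (and whether) to call a tool
  });
  ```

- The client (`src/app/page.tsx`) uses `useChat` to send and receive messages and displays structured tool-call input and output for transparency.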
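To see what `useChat` sends to the route, the sketch below builds an equivalent request by hand, for example to exercise `/api/chat` from a script. It assumes the route reads a `messages` array from the JSON body (as the `convertToModelMessages(messages)` call suggests) and that each message follows AI SDK 5's `UIMessage` shape (`id`, `role`, `parts`). `buildChatRequest` and the local types are hypothetical, not part of the sample.

```typescript
// Minimal local mirror of the message shape useChat sends
// (assumed to match AI SDK 5's UIMessage: an id, a role, and typed parts).
type TextPart = { type: "text"; text: string };
type ChatMessage = { id: string; role: "user" | "assistant"; parts: TextPart[] };

type ChatRequest = {
  method: "POST";
  headers: Record<string, string>;
  body: string;
};

// Hypothetical helper: appends a user prompt to the prior conversation and
// serializes it as the JSON body for POST /api/chat.
function buildChatRequest(history: ChatMessage[], prompt: string, id: string): ChatRequest {
  const userMessage: ChatMessage = {
    id,
    role: "user",
    parts: [{ type: "text", text: prompt }],
  };
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // The full conversation is sent on every turn; the route converts it to model messages.
    body: JSON.stringify({ messages: [...history, userMessage] }),
  };
}
```

With `pnpm dev` running, `fetch("http://localhost:3000/api/chat", buildChatRequest([], prompt, "msg-1"))` should return the streamed UI message response that `useChat` normally parses for you.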

```mermaid
sequenceDiagram
  participant U as User (Browser)
  participant C as Next.js Client (`src/app/page.tsx`)
  participant API as API Route (`/api/chat`)
  participant MCP as OpenSea MCP (SSE)
  participant LLM as Model (OpenAI via AI SDK)

  U->>C: Enter prompt / click example
  C->>API: POST /api/chat (messages)
  API->>MCP: Create MCP client (SSE) and fetch tools
  API->>LLM: streamText(model, tools, messages)
  LLM-->>MCP: Tool calls (as needed)
  MCP-->>LLM: Tool responses
  LLM-->>API: Streamed text + tool-call parts
  API-->>C: UIMessage stream
  C-->>U: Render messages + tool inspector
```
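The tool-call round trip in the diagram can be illustrated with a conceptual loop: ask the model, run any tool it requests, append the result, and repeat until it answers in text or a step limit is reached. In practice the model only emits the tool call; the AI SDK executes it in the API route against the MCP server. This is a simplified model for explanation, not the AI SDK's implementation, and the `Model`/`ToolMap` types and `runToolLoop` are hypothetical. In the sample, `streamText` and the MCP client perform these steps, and the AI SDK bounds multi-step loops through `streamText`'s `stopWhen` option (for example `stopWhen: stepCountIs(5)`).

```typescript
// Conceptual sketch (not AI SDK internals): a tool-calling loop that alternates
// between the model and the tools it is allowed to call.
type ToolCall = { toolName: string; input: Record<string, unknown> };
type ModelTurn = { kind: "tool-call"; call: ToolCall } | { kind: "text"; text: string };
type Transcript = Array<{ role: "user" | "assistant" | "tool"; content: string }>;

// Hypothetical stand-ins for the model and the tools fetched from the MCP server.
type Model = (transcript: Transcript) => Promise<ModelTurn>;
type ToolMap = Record<string, (input: Record<string, unknown>) => Promise<unknown>>;

async function runToolLoop(model: Model, tools: ToolMap, prompt: string, maxSteps = 5): Promise<string> {
  const transcript: Transcript = [{ role: "user", content: prompt }];

  for (let step = 0; step < maxSteps; step++) {
    const turn = await model(transcript);
    if (turn.kind === "text") return turn.text; // final answer: stop looping

    const tool = tools[turn.call.toolName];
    if (!tool) throw new Error(`Unknown tool: ${turn.call.toolName}`);

    // Execute the requested tool, then record the call and its result
    // so the model sees them on the next step.
    const output = await tool(turn.call.input);
    transcript.push({ role: "assistant", content: JSON.stringify(turn.call) });
    transcript.push({ role: "tool", content: JSON.stringify(output) });
  }
  throw new Error(`No final answer after ${maxSteps} steps`);
}
```

The step limit matters because a model can keep requesting tools indefinitely; capping it bounds both latency and the number of MCP requests made against your token's rate limit.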

Example prompts:

- Which NFT collections are trending in the last 24h?
- Show top NFT collections by volume this week.
- What's the floor price and 24h volume for Pudgy Penguins?
- Search collections named "Bored Ape".
- Show top tokens by 24h volume on Ethereum.
- Get a swap quote for trading 1 ETH to USDC on Ethereum.
- Show NFT holdings for a wallet.

Set the environment variables in your hosting platform:

- `OPENAI_API_KEY` (required)
- `OPENSEA_BEARER_TOKEN` (required)

Then build and start as usual:

```bash
pnpm build && pnpm start
```

Vercel users: add `OPENAI_API_KEY` and `OPENSEA_BEARER_TOKEN` in Project Settings → Environment Variables.

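Notes:

- Keep your MCP access token secret and use it only in server-side code such as the API route. Next.js exposes environment variables to the browser only when they are prefixed with `NEXT_PUBLIC_`, so do not add that prefix to `OPENSEA_BEARER_TOKEN`.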

- Rate limits apply per token. Use pagination for larger result sets where available.

- Chains can be specified in queries and tool inputs (e.g., Ethereum, Base, Polygon, Solana).

- OpenSea MCP will work with any model that supports tool calling. We recommend Claude Sonnet 4 or GPT-5.
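The pagination note can be made concrete with a generic cursor loop. This is a sketch under stated assumptions: the `items`/`next` field names and the `collectPages` helper are hypothetical, because the actual pagination parameters depend on each OpenSea MCP tool's input schema. In this sample the model chooses tool inputs itself, so a loop like this only applies if you call tools from your own code.

```typescript
// Hypothetical page shape: real OpenSea MCP tools may name these fields differently.
type Page<T> = { items: T[]; next?: string };
type FetchPage<T> = (cursor: string | undefined) => Promise<Page<T>>;

// Follows `next` cursors until the source is exhausted or `maxItems` is reached.
// Capping the total keeps one query from spending too much of the per-token rate limit.
async function collectPages<T>(fetchPage: FetchPage<T>, maxItems: number): Promise<T[]> {
  const results: T[] = [];
  let cursor: string | undefined = undefined;

  do {
    const page: Page<T> = await fetchPage(cursor);
    results.push(...page.items);
    cursor = page.next; // undefined means there are no more pages
  } while (cursor !== undefined && results.length < maxItems);

  // The last page may overshoot the cap, so trim to exactly maxItems.
  return results.slice(0, maxItems);
}
```

Fetching pages sequentially, rather than in parallel, is deliberate: each request depends on the previous cursor, and it avoids bursts against the rate limit.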

Resources:

- OpenSea MCP docs: https://docs.opensea.io/docs/mcp

- Vercel AI SDK docs: https://sdk.vercel.ai

- Next.js docs: https://nextjs.org/docs