🤗 Huggingface + ⚡ FastAPI = ❤️ Awesomeness. How to structure Deep Learning model serving REST API with FastAPI

How to serve Hugging Face models with FastAPI, one of the fastest Python REST API frameworks.

The minimalistic project structure for development and production.

Installation and setup instructions for running the model in development mode and serving a local RESTful API endpoint.

Files related to the application are in the huggingfastapi and tests directories.

Application parts are:

huggingfastapi

├── api - Main API.

│ └── routes - Web routes.

├── core - Application configuration, startup events, logging.

├── models - Pydantic models for the API.

├── services - NLP logic.

└── main.py - FastAPI application creation and configuration.

│

tests - Code without tests is an illusion.

Requirements: Python 3.6+, and optionally Make and Docker.

Install the required packages in your local environment (ideally virtualenv, conda, etc.).

python -m venv .venv

source .venv/bin/activate

make install

make run

make deploy

make test

- Duplicate the .env.example file and rename it to .env.

- In the .env file, configure the API_KEY entry. The key is used to authenticate requests to the API.

Run the following to generate .env, then replace example_key with the generated UUID:

make generate_dot_env

python -c "import uuid;print(str(uuid.uuid4()))"
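The same replacement can be done in Python. The sketch below assumes the template contains a line of the form `API_KEY=example_key`; the real .env.example may differ or hold more entries, and `render_dot_env` is a hypothetical helper, not part of the project.

```python
import uuid


def render_dot_env(template: str, placeholder: str = "example_key") -> str:
    """Return the template text with the placeholder swapped for a fresh UUID4."""
    return template.replace(placeholder, str(uuid.uuid4()))


# Assumed template content, for illustration only.
template = "API_KEY=example_key\n"
print(render_dot_env(template), end="")
```

To apply it to real files, read .env.example as text, pass it through `render_dot_env`, and write the result to .env.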

- Start your app with:

PYTHONPATH=./huggingfastapi uvicorn main:app --reload-

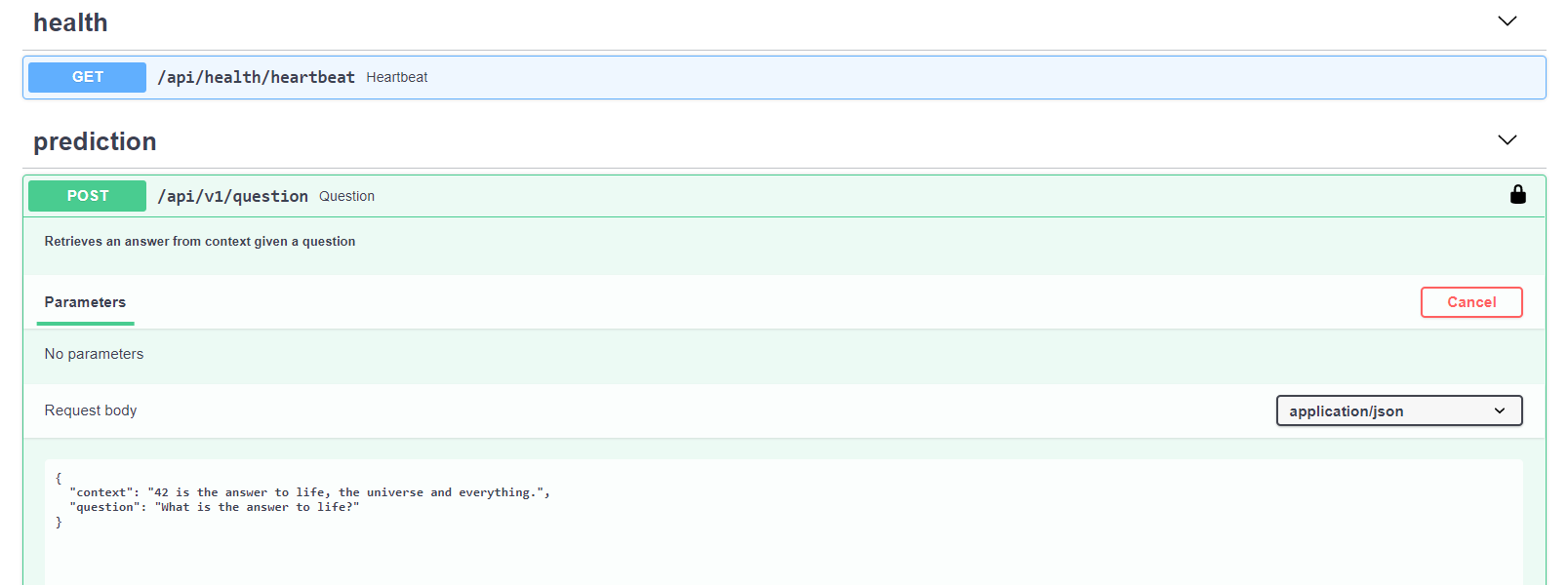

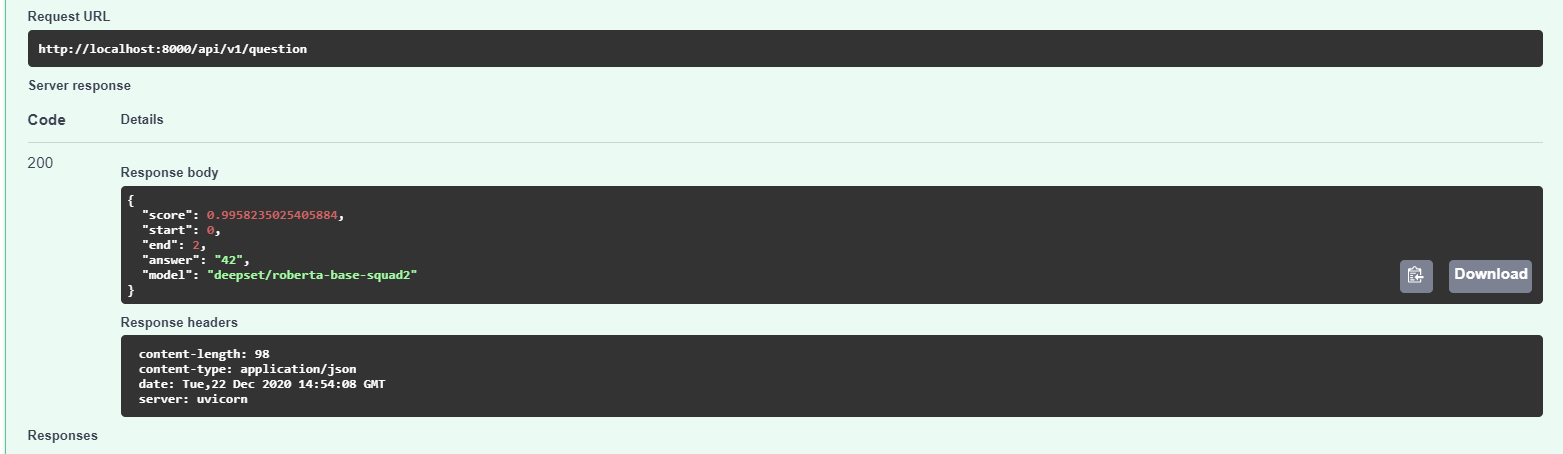

Go to http://localhost:8000/docs or http://localhost:8000/redoc for alternative swagger

-

Click

Authorizeand enter the API key as created in the Setup step.
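Outside the browser, the API key has to be sent with each request. The sketch below builds such a request with the standard library only. The header name `token`, the path `/api/v1/question`, and the payload fields are assumptions for illustration; check the Swagger UI at /docs for the names the running app actually expects.

```python
import json
import urllib.request


def build_request(base_url: str, path: str, api_key: str, payload: dict,
                  header_name: str = "token") -> urllib.request.Request:
    """Build a JSON POST request that carries the API key in a header."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=body,
        headers={"Content-Type": "application/json", header_name: api_key},
        method="POST",
    )


# Hypothetical endpoint and payload; replace with the values shown in /docs.
req = build_request(
    "http://localhost:8000",
    "/api/v1/question",
    "your-api-key",
    {"context": "FastAPI serves the model.", "question": "What serves the model?"},
)
# To send it against a running server:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Building the request separately from sending it makes it easy to inspect the URL, headers, and body before anything goes over the network.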

Install the testing libraries and run tox:

pip install tox pytest flake8 coverage bandit

tox

This runs the tests and coverage for Python 3.8, along with Flake8 and Bandit checks.

- Change make to invoke: invoke is overrated

- Add endpoint for uploading text file and questions