The official results in the paper:

| Models | Val AP3D\|R40 Easy | Val AP3D\|R40 Mod. | Val AP3D\|R40 Hard |
| --- | --- | --- | --- |
| MonoDGP | 30.7624% | 22.3421% | 19.0144% |

New and better results in this repo:

| Models | Val AP3D\|R40 Easy | Val AP3D\|R40 Mod. | Val AP3D\|R40 Hard | Logs | Ckpts |
| --- | --- | --- | --- | --- | --- |
| MonoDGP | 30.9663% | 22.4953% | 19.9641% | log | ckpt |
| MonoDGP | 30.7194% | 22.6883% | 19.4441% | log | ckpt |
| MonoDGP | 30.1314% | 22.7109% | 19.3978% | log | ckpt |
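To make the comparison with the paper explicit, the sketch below subtracts the paper's validation numbers from each run listed above. The values are copied from the two tables; the names `PAPER`, `RUNS` and `deltas` are illustrative and not part of this repo.

```python
# Validation AP3D|R40 values copied from the tables above.
PAPER = {"Easy": 30.7624, "Mod.": 22.3421, "Hard": 19.0144}
RUNS = [
    {"Easy": 30.9663, "Mod.": 22.4953, "Hard": 19.9641},
    {"Easy": 30.7194, "Mod.": 22.6883, "Hard": 19.4441},
    {"Easy": 30.1314, "Mod.": 22.7109, "Hard": 19.3978},
]


def deltas(run, baseline=PAPER):
    """Return run minus baseline per difficulty, rounded to 4 decimals."""
    return {level: round(run[level] - baseline[level], 4) for level in baseline}


if __name__ == "__main__":
    for index, run in enumerate(RUNS, start=1):
        print(f"run {index}: {deltas(run)}")
```

Read this way, Mod. and Hard improve over the paper in all three runs, while Easy improves only in the first run.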

We also provide a new ckpt pretrained on the trainval set. You can use this ckpt directly to generate test results and submit them to the KITTI 3D object detection benchmark to verify our method.

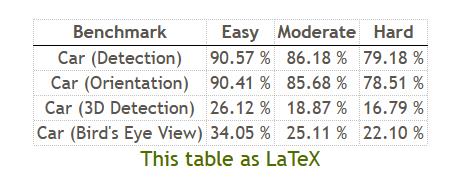

| Models | Test AP3D\|R40 Easy | Test AP3D\|R40 Mod. | Test AP3D\|R40 Hard | Ckpts | Results |
| --- | --- | --- | --- | --- | --- |
| MonoDGP | 26.12% | 18.87% | 16.79% | ckpt | data |
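Before uploading results, it can help to sanity-check the prediction files. The KITTI object devkit describes each result line as the 15 label fields followed by a confidence score, 16 whitespace-separated values in total. The sketch below checks one line against that shape; the function name is hypothetical, and where this repo writes its prediction files is not covered here.

```python
def is_valid_kitti_result_line(line):
    """Check that a line has a class name followed by 15 numeric fields.

    Field order per the KITTI devkit: type, truncated, occluded, alpha,
    bbox (4), dimensions (3), location (3), rotation_y, score.
    """
    fields = line.split()
    if len(fields) != 16:
        return False
    try:
        for value in fields[1:]:
            float(value)
    except ValueError:
        return False
    return True


if __name__ == "__main__":
    sample = "Car -1 -1 -1.57 100.0 120.0 200.0 220.0 1.5 1.6 3.9 1.0 1.5 20.0 -1.6 0.95"
    print(is_valid_kitti_result_line(sample))
```

A ground-truth label line (15 fields, no score) fails this check, which is a common reason for rejected submissions.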

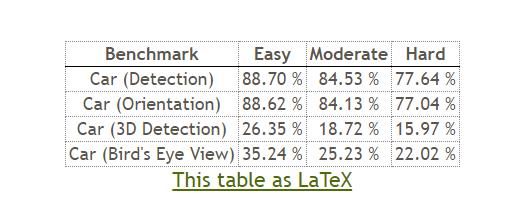

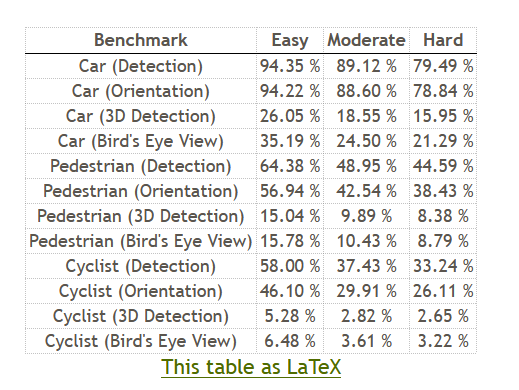

Test results submitted to the official KITTI Benchmark:

Car category:

All categories:


Clone this project and create a conda environment:

    git clone https://github.com/PuFanqi23/MonoDGP.git
    cd MonoDGP
    conda create -n monodgp python=3.8
    conda activate monodgp

Install pytorch and torchvision matching your CUDA version:

    # For example, we adopt torch 1.9.0+cu111
    pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 torchaudio==0.9.0 -f https://download.pytorch.org/whl/torch_stable.html
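After installing, a quick check that the PyTorch build matches your CUDA setup can save a failed compile of the deformable attention ops later. The sketch below is illustrative only: `describe_torch` is a hypothetical helper, not part of this repo, and it returns `None` when torch is not installed in the active environment.

```python
import importlib.util


def describe_torch():
    """Return version info for the installed torch build, or None if torch is absent."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    return {
        "torch": torch.__version__,                    # e.g. the 1.9.0+cu111 build used above
        "cuda_build": torch.version.cuda,              # None for CPU-only wheels
        "cuda_available": torch.cuda.is_available(),   # False if no usable GPU/driver is found
    }


if __name__ == "__main__":
    print(describe_torch())
```

If `cuda_available` is false inside the `monodgp` environment, the installed wheel and the local CUDA driver most likely do not match.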

Install requirements and compile the deformable attention:

    pip install -r requirements.txt
    cd lib/models/monodgp/ops/
    bash make.sh
    cd ../../../..

Make a directory for saving training losses:

    mkdir logs


Download KITTI datasets and prepare the directory structure as:

    │MonoDGP/
    ├──...
    │data/kitti/
    ├──ImageSets/
    ├──training/
    │   ├──image_2
    │   ├──label_2
    │   ├──calib
    ├──testing/
    │   ├──image_2
    │   ├──calib

You can also change the data path at "dataset/root_dir" in configs/monodgp.yaml.
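A missing or misnamed folder usually only surfaces once training starts, so it can be worth checking the layout first. The sketch below lists which of the folders shown above are absent. The helper name and the default root `data/kitti` are assumptions; pass whatever path you set at "dataset/root_dir".

```python
from pathlib import Path

# Sub-directories taken from the directory structure shown above.
EXPECTED_DIRS = [
    "ImageSets",
    "training/image_2",
    "training/label_2",
    "training/calib",
    "testing/image_2",
    "testing/calib",
]


def missing_kitti_dirs(root="data/kitti"):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_kitti_dirs()
    print("layout OK" if not missing else f"missing: {missing}")
```

An empty list means every expected folder exists; it does not verify that the folders contain the right files.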

You can modify the settings of models and training in configs/monodgp.yaml and indicate the GPU in train.sh:

    bash train.sh configs/monodgp.yaml > logs/monodgp.log

The best checkpoint will be evaluated by default. You can change it at "tester/checkpoint" in configs/monodgp.yaml:

    bash test.sh configs/monodgp.yaml

This repo benefits from the excellent work MonoDETR.