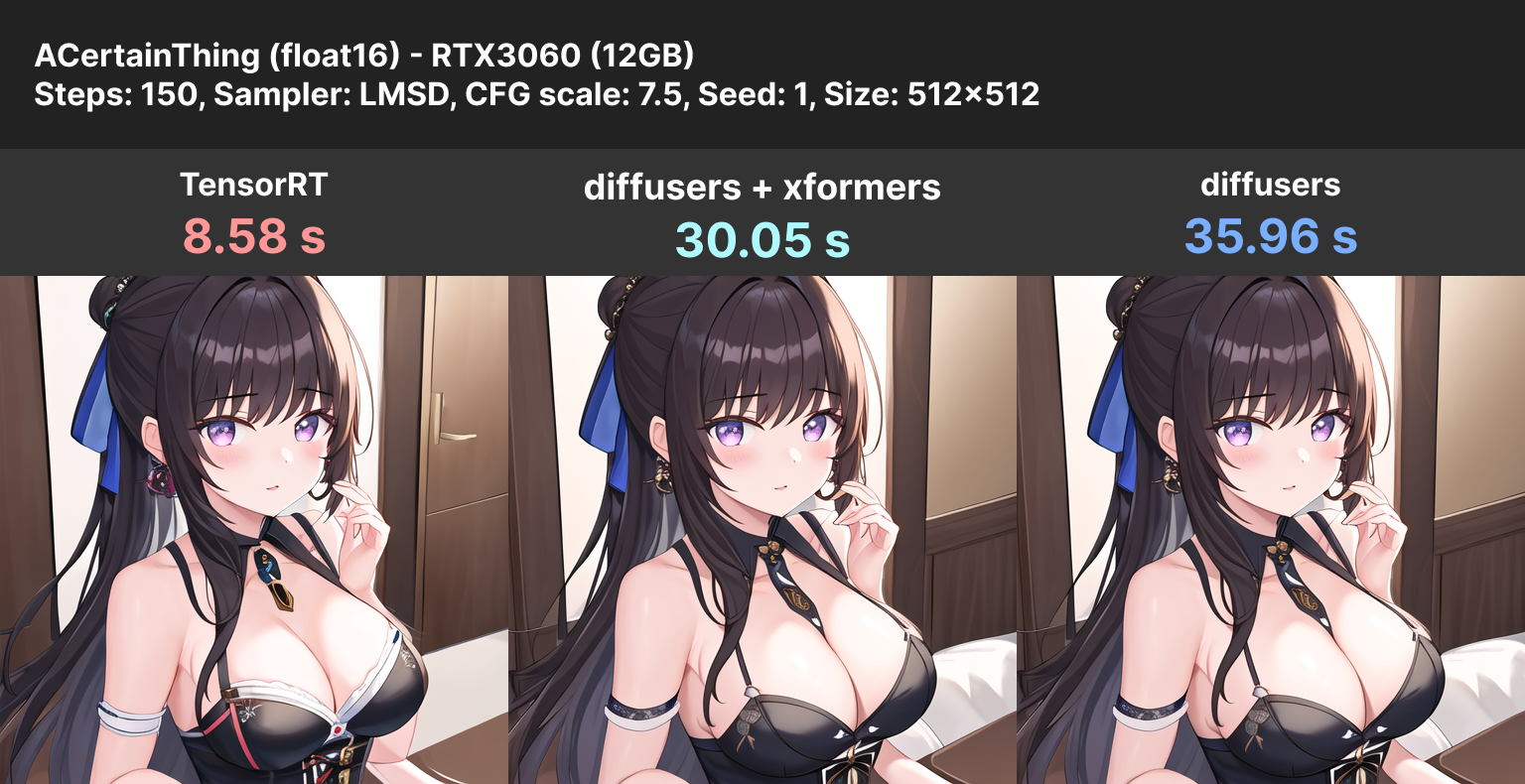

Lsmith is a fast Stable Diffusion WebUI that uses TensorRT for high-speed inference.

- Clone repository

git clone https://github.com/ddPn08/Lsmith.git

cd Lsmith

git submodule update --init --recursive

- Launch using Docker compose

docker compose up --build

- node.js (recommended version is 18)

- pnpm

- python 3.10

- pip

- CUDA

- cuDNN < 8.6.0

- TensorRT 8.5.x

- Follow the instructions on this page to build TensorRT OSS and get libnvinfer_plugin.so.

- Clone Lsmith repository

git clone https://github.com/ddPn08/Lsmith.git

cd Lsmith

git submodule update --init --recursive

- Enter the repository directory.

cd Lsmith

- Enter frontend directory and build frontend

cd frontend

pnpm i

pnpm build --out-dir ../dist

- Run launch.sh with the path to libnvinfer_plugin.so in the LD_PRELOAD variable.

Example:

bash launch.sh --host 0.0.0.0

- node.js (recommended version is 18)

- pnpm

- python 3.10

- pip

- CUDA

- cuDNN < 8.6.0

- TensorRT 8.5.x
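Before installing, it can help to confirm that the command-line tools from the list above are on PATH. The following is a minimal sketch for a POSIX shell (Linux, WSL2, or a Bash environment on Windows); `check_tools` is a hypothetical helper, and the executable names `node`, `pnpm`, `python3`, and `pip` are assumptions about how the tools are installed on your machine. It does not check CUDA, cuDNN, or TensorRT.

```shell
# Report which of the given commands are missing from PATH.
# Prints one "missing: <name>" line per absent tool and returns 1 if any is missing.
check_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (tool names are assumptions about your setup):
#   check_tools node pnpm python3 pip && echo "all tools found"
#   node --version      # compare against the recommended 18
#   python3 --version   # compare against 3.10
```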

- Install the NVIDIA GPU driver

- Install CUDA 11.x (Click here for the official guide)

- Install TensorRT 8.5.3.1 (Click here for the official guide)

- Clone Lsmith repository

git clone https://github.com/ddPn08/Lsmith.git

cd Lsmith

git submodule update --init --recursive

- Enter frontend directory and build frontend

cd frontend

pnpm i

pnpm build --out-dir ../dist

- Launch

launch-user.bat

Once started, open <ip address>:<port number> (e.g. http://localhost:8000) in a browser to access the WebUI.
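The server may take a while to start, so it can be useful to wait until the address responds before opening it. The sketch below assumes `curl` is installed and uses the example address from above; `webui_url` and `wait_for_webui` are hypothetical helper names, not part of Lsmith.

```shell
# Build the WebUI URL from a host and a port.
webui_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# Poll the URL until it answers or the attempts run out.
# $1 = URL, $2 = maximum attempts (default 30), tried one second apart.
wait_for_webui() {
  local url="$1"
  local attempts="${2:-30}"
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl --silent --fail --output /dev/null "$url"; then
      echo "WebUI is up at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "WebUI did not respond at $url" >&2
  return 1
}

# Usage:
#   wait_for_webui "$(webui_url localhost 8000)"
```

Adjust the host and port to match how the server was launched.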

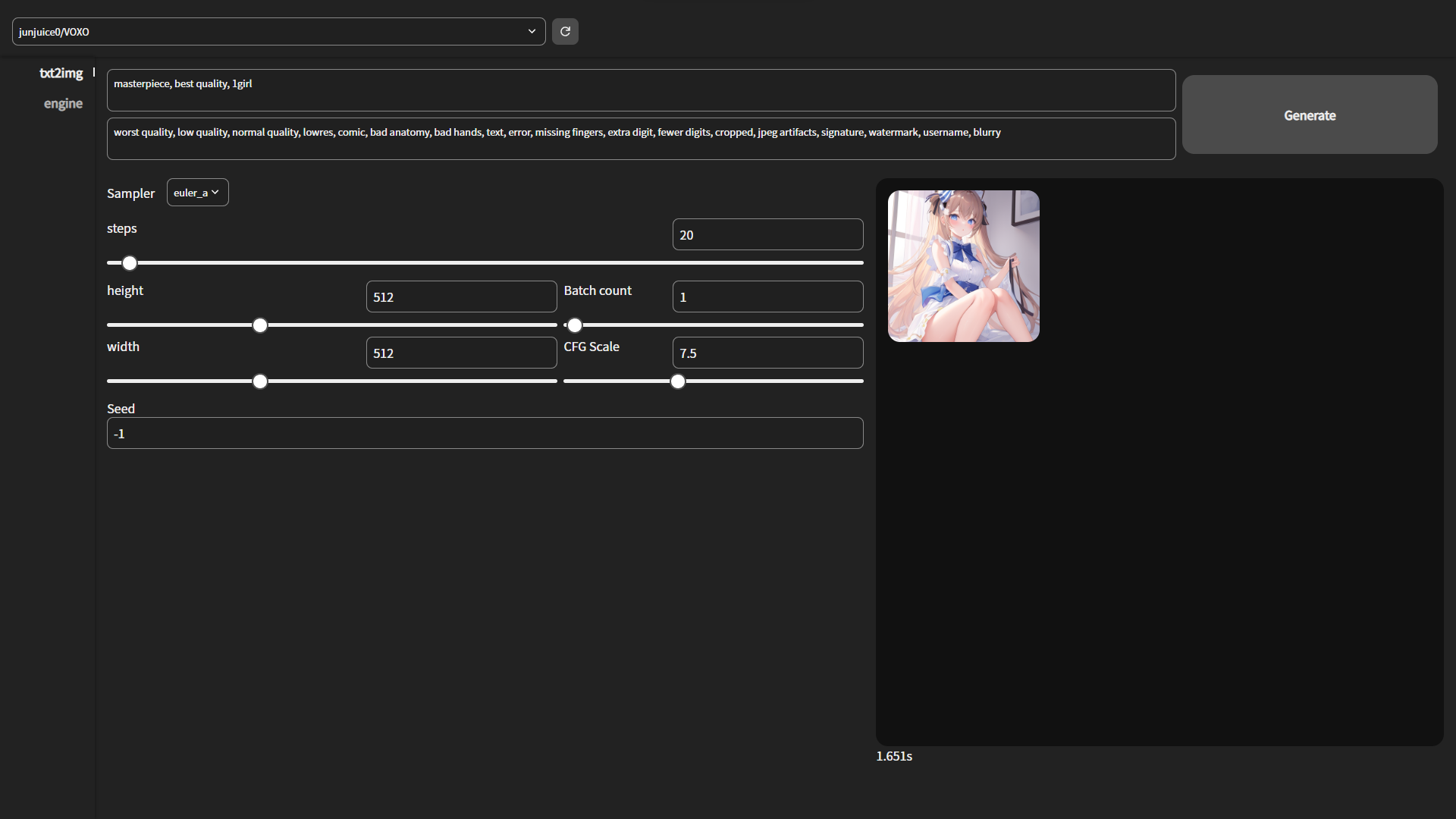

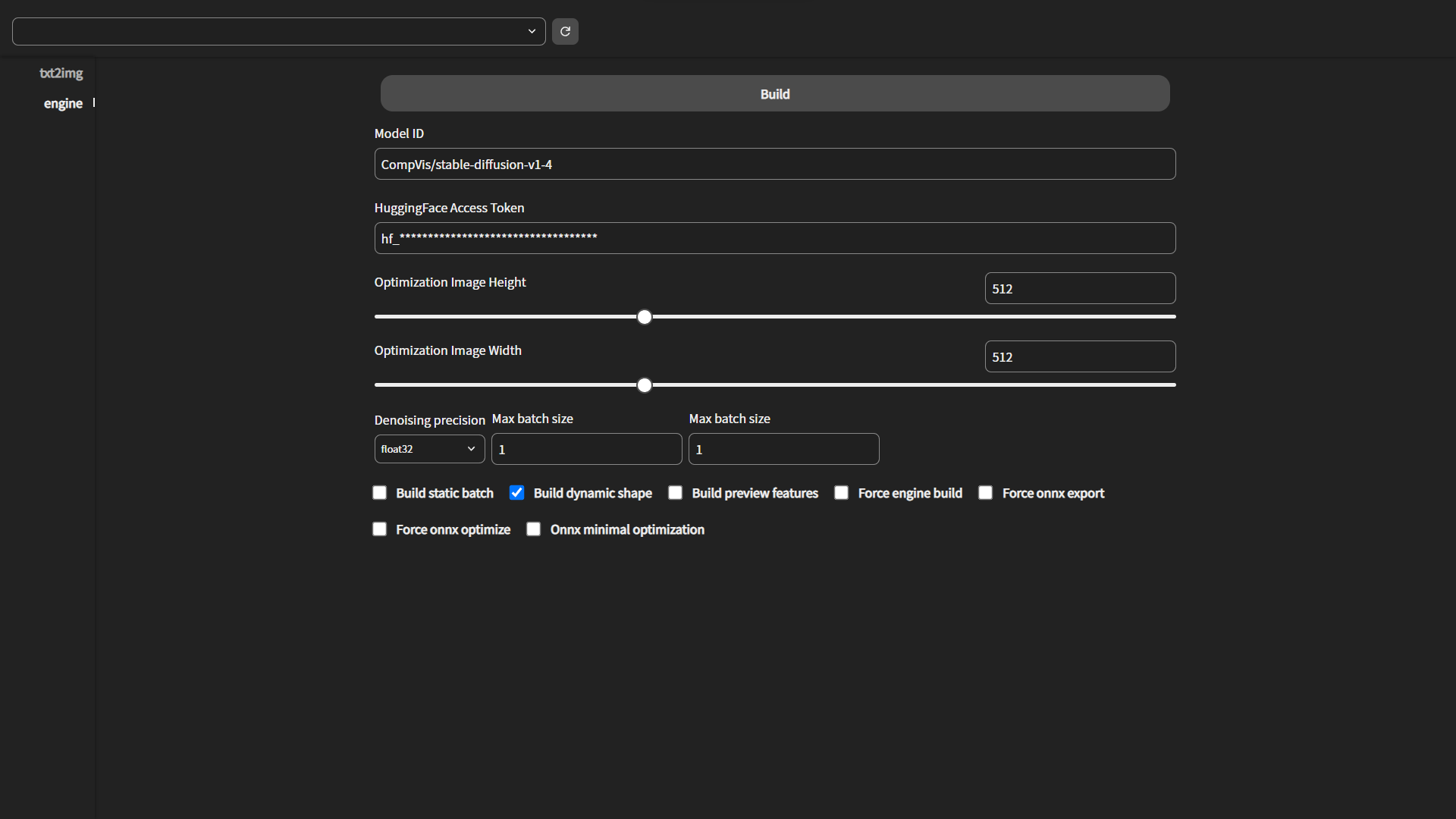

First, convert an existing Diffusers model into a TensorRT engine.

- Click on the "engine" tab

- Enter the Hugging Face Diffusers model ID in "Model ID" (e.g. CompVis/stable-diffusion-v1-4)

- Enter your Hugging Face access token in "HuggingFace Access Token" (required for some repositories). Access tokens can be obtained or created from this page.

- Click the "Build" button to start building the engine.

- There may be some warnings during the engine build, but you can safely ignore them unless the build fails.

- The build can take tens of minutes. For reference, it takes about 15 minutes on average on an RTX 3060 12GB.
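Because a build takes this long, it may be worth confirming beforehand that the model ID and access token are accepted by Hugging Face. The sketch below assumes `curl` is installed and queries the Hugging Face Hub models API; `hf_model_api_url` and `hf_model_exists` are hypothetical helper names, and `HF_TOKEN` is an assumed environment variable, none of which are part of Lsmith.

```shell
# Build the Hugging Face Hub API URL for a model ID
# such as CompVis/stable-diffusion-v1-4.
hf_model_api_url() {
  printf 'https://huggingface.co/api/models/%s\n' "$1"
}

# Return 0 if the Hub answers successfully for the model, non-zero otherwise.
# $1 = model ID, $2 = optional access token (sent as a Bearer header when given).
hf_model_exists() {
  local url
  url="$(hf_model_api_url "$1")"
  if [ -n "${2:-}" ]; then
    curl --silent --fail --output /dev/null \
      -H "Authorization: Bearer $2" "$url"
  else
    curl --silent --fail --output /dev/null "$url"
  fi
}

# Usage (HF_TOKEN is an assumed variable holding your access token):
#   hf_model_exists CompVis/stable-diffusion-v1-4 "$HF_TOKEN" && echo "model reachable"
```

A successful check only shows that the repository is visible with that token; it does not guarantee that the engine build itself will succeed.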

- Select the model in the header dropdown.

- Click on the "txt2img" tab

- Click the "Generate" button.

Special thanks to the technical members of the AI絵作り研究会, a Japanese AI image generation community.