(retrieved 21/03/15)

- Bi-directional Encoder from scratch using Trax

- Fine-tune with pre-trained BERT Loss

- Fine-tune with pre-trained T5 on SQuAD data

Data: a few examples from C4 and SQuAD

Tokenizer: SentencePiece

Build Bi-directional Encoder from scratch using Trax

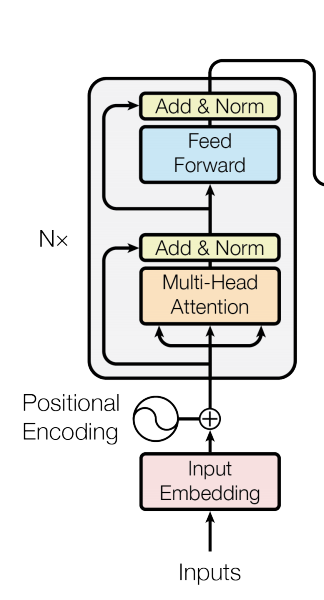

Model structure: embedding size 512, 6 encoder blocks, 8 attention heads, dropout rate 0.1

Pipeline: positional encoding -> masking -> encoder blocks (x6) -> LayerNorm -> Dense -> Softmax
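A minimal sketch of the positional encoding step, in plain NumPy rather than Trax. It assumes the fixed sinusoidal scheme from the original Transformer, which these notes do not actually specify; the function name `sinusoidal_positions` is hypothetical.

```python
import numpy as np

def sinusoidal_positions(max_len, d_model=512):
    """Return a (max_len, d_model) table of fixed sinusoidal position codes.

    Assumption: sinusoidal encoding; the notes only say "positional encoding".
    """
    pos = np.arange(max_len)[:, None]              # (max_len, 1)
    i = np.arange(0, d_model, 2)[None, :]          # even feature indices
    angles = pos / np.power(10000.0, i / d_model)  # (max_len, d_model / 2)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)                   # even dims: sine
    pe[:, 1::2] = np.cos(angles)                   # odd dims: cosine
    return pe

pe = sinusoidal_positions(16)
print(pe.shape)  # (16, 512)
```

The table is added to the token embeddings before the first encoder block, so each position gets a distinct offset without any learned parameters.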

Encoder block detail: LayerNorm -> Attention -> Dropout -> FFNN (512 -> 2048 -> 512)
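A rough sketch of one encoder block's forward pass with the sizes above, again in plain NumPy rather than Trax. The residual connections, the ReLU activation, the second LayerNorm before the feed-forward part, and the random initialization are assumptions that the notes do not state; all function names are hypothetical.

```python
import numpy as np

D_MODEL, D_FF, N_HEADS, DROPOUT = 512, 2048, 8, 0.1  # sizes from the notes
D_HEAD = D_MODEL // N_HEADS                          # 64 features per head

def layer_norm(x, eps=1e-6):
    # Normalize each position's feature vector (learned scale/bias omitted).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def dropout(x, rate, rng, train):
    # Inverted dropout; a no-op outside training.
    if not train or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

def self_attention(x, p, pad_mask):
    # x: (batch, seq, D_MODEL); pad_mask: (batch, seq), True for real tokens.
    b, s, _ = x.shape

    def split(t):  # (b, s, D_MODEL) -> (b, heads, s, D_HEAD)
        return t.reshape(b, s, N_HEADS, D_HEAD).transpose(0, 2, 1, 3)

    q, k, v = split(x @ p["wq"]), split(x @ p["wk"]), split(x @ p["wv"])
    scores = q @ k.transpose(0, 1, 3, 2) / np.sqrt(D_HEAD)  # (b, h, s, s)
    # Bi-directional: every token may attend to every non-padding token.
    scores = np.where(pad_mask[:, None, None, :], scores, -1e9)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    out = (weights @ v).transpose(0, 2, 1, 3).reshape(b, s, D_MODEL)
    return out @ p["wo"]

def encoder_block(x, p, pad_mask, rng, train=False):
    # LayerNorm -> Attention -> Dropout, wrapped in a residual (assumed).
    attn = self_attention(layer_norm(x), p, pad_mask)
    x = x + dropout(attn, DROPOUT, rng, train)
    # FFNN 512 -> 2048 -> 512 with ReLU (assumed), also wrapped in a residual.
    h = np.maximum(layer_norm(x) @ p["w1"] + p["b1"], 0.0)
    return x + dropout(h @ p["w2"] + p["b2"], DROPOUT, rng, train)

def init_params(rng):
    def w(m, n):
        return rng.normal(0.0, 0.02, size=(m, n))
    return {
        "wq": w(D_MODEL, D_MODEL), "wk": w(D_MODEL, D_MODEL),
        "wv": w(D_MODEL, D_MODEL), "wo": w(D_MODEL, D_MODEL),
        "w1": w(D_MODEL, D_FF), "b1": np.zeros(D_FF),
        "w2": w(D_FF, D_MODEL), "b2": np.zeros(D_MODEL),
    }

rng = np.random.default_rng(0)
params = init_params(rng)
x = rng.normal(size=(2, 10, D_MODEL))   # 2 sequences of 10 tokens
pad_mask = np.ones((2, 10), dtype=bool)
pad_mask[1, 7:] = False                 # last 3 tokens of sequence 2 are padding
y = encoder_block(x, params, pad_mask, rng)
print(y.shape)  # (2, 10, 512)
```

The output has the same shape as the input, so the full encoder is six such blocks applied in sequence, each with its own parameters.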