An implementation of the AlphaZero algorithm that plays Othello (aka. Reversi)

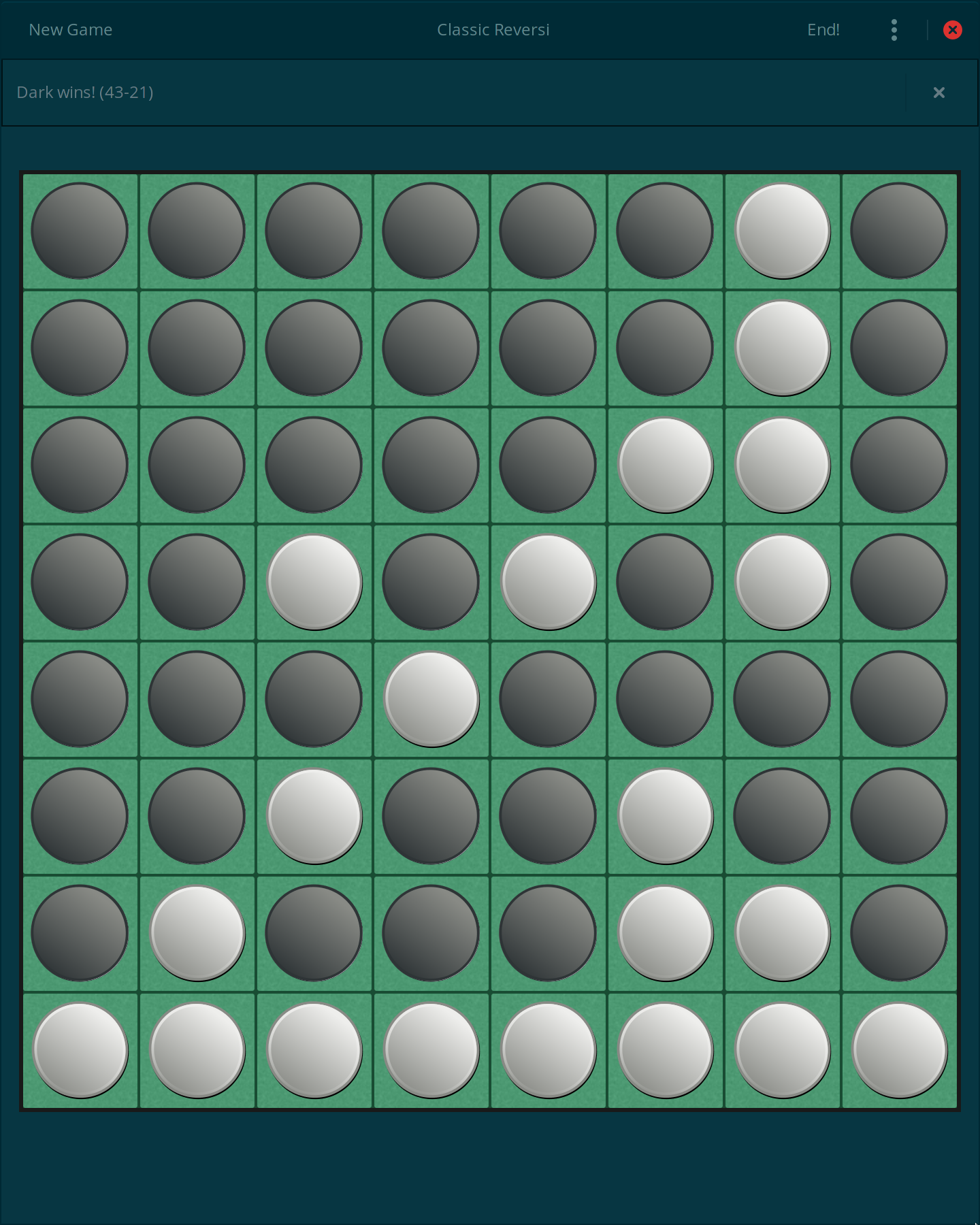

Figure 1: The final board after AlphaZero-Othello beat Iagno (Medium difficulty) for the first time!Othello is an abstract strategy board game for two players. It is played on an 8×8 board with 64 pieces, called disks, which have different colors on each side (ususally black and white). The players alternate placing disks with their assigned color facing up. If a contiguous line of a player's disks along one of the eight directions becomes surrounded on both ends by those of their opponent, all of the middle disks are flipped to the opponent's color. Every move must produce at least one of these flips. A player must pass if and only if they have no valid moves and the game ends when both players pass. The winner is the player with the most disks of their color on the final board.

On the strength of the best known computer programs relative to humans, Wikipedia has this to say:

Good Othello computer programs play very strongly against human opponents. This is mostly due to difficulties in human look-ahead peculiar to Othello: The interchangeability of the disks and therefore apparent strategic meaninglessness (as opposed to chess pieces for example) makes an evaluation of different moves much harder.

AlphaZero is a computer program created by DeepMind that plays Chess, Shogi, and Go descended from AlphaGo program which famously became the first to achieve superhuman performance playing Go. Notably, AlphaZero learns to play these games tabula rasa (i.e. given only the rules of the game itself) and is able to achieve superhuman performance in all three. The AlphaZero algorithm is easily adapted to other games.

AlphaZero uses a neural network which takes in the state s of the board and outputs two things: p(s), a distribution over set of actions, and v(s), an prediction of which player will win the game. The network is trained to minimize the following loss

where zₜ is the outcome of the game (from perspective of time t) and ̂π(s) is an improved policy.

zₜ is easy to calculate (we just look at who won in the end) but the computation of ̂π(s) is more involved.

In order to calculate ̂π(s) we use Monte Carlo Tree Search (MCTS).

To explain how this works, define

Q(s, a)is the average reward after taking actionafrom states.N(s, a)is the number of times actionawas taken at states.P(s, a)is the probability of taking actionafrom states(according top(s))

These quantities (which are just implemented using hash tables in practice) are used to calculate

which is called the upper confidence bound of Q(s, a) (here, c_puct is a hyperparameter that controls how much exploration is done.

During the search, the a that maximises U(s, a) is chosen.

This is done recursively until the game ends and then the neccesary updates to Q and N are done at each step back up the call chain.

The search is repeated from the root node many times.

As the number of simulations increases, Q(s, a) becomes more accurate and the U(s, a) also approach Q(s, a).

After all of the simulations are complete, we assign N(s, a)/sum(N(s, b) for all b) to ̂π(s, a) and the ̂π(s) and zₜ are used to train the network, producing an improved policy and value network for the next iteration.

AlphaZero-Othello is an implementation of the AlphaZero algorithm that learns to play Othello. It is written in pure Python, using the PyTorch library to accelerate numerical computations. The goal was to write the simplest and most readable implementation possible.

- 100% of the code is written by me

- Multithreaded self-play

- Multithreaded evaluation arena

- Uses a single GPU on a single node (i.e. it is not distributed)

- Self-play, evaluation, and training all happen synchronously (unlike in the original AlphaZero)

The policy and value network is build using residual blocks of the following form

Sequential(

SumModule(

Sequential(

Conv2d(n, k, 3, 1, 1),

BatchNorm2d(k),

ReLU(),

Conv2d(k, k, 3, 1, 1),

BatchNorm2d(k),

ReLU(),

),

Conv2d(n, k, 1, 1, 0),

),

ReLU(),

)where n of one block equals the k of the previous block and the second branch of the SumModule is replaced with the identity if n == k.

Five blocks are used and the channels numbers are [16, 32, 64, 128, 128, 128].

The output of the residual tower is then split.

The branch that computes pi is

Sequential(

Conv2d(128, 16, 1, 1, 0),

BatchNorm2d(16),

Flatten(),

Linear(16 * 8 * 8, 256),

BatchNorm1d(256),

Linear(256, 8 * 8 + 1),

LogSoftmax(dim=1),

)similarly, the branch that computes v is

Sequential(

Conv2d(128, 16, 1, 1, 0),

BatchNorm2d(16),

Flatten(),

Linear(16 * 8 * 8, 256),

BatchNorm1d(256),

Linear(256, 1),

Tanh(),

)Every round of self-play consists of 100 games each moved is based on 25 MCTS simulations.

20 iterations of training data are preserved in the history buffer.

The cpuct parameter is set at 3 and at the root of the MCTS search, Dirchlet noise with alpha = 0.9 is mixed with estimates of pi (25% noise).

I have played many games against the trained agent and I have never won. The agent is able to beat the Iagno engine on "Medium" difficulty but cannot beat Iagno on "Hard" difficulty.

Although the results are not spectacular, they are understandable. AlphaGo Zero was trained on 4.9 millions games of self play with 1600 simulations per MCTS while this agent was trained on about 40 thousand games with 25 simulations per MCTS.

Run demo.ipynb on Google Colab!

- AlphaGo Zero paper

- AlphaZero paper

- MuZero paper

- jonathan-laurent/AlphaZero.jl

- This post by Surag Nair as well as suragnair/alpha-zero-general