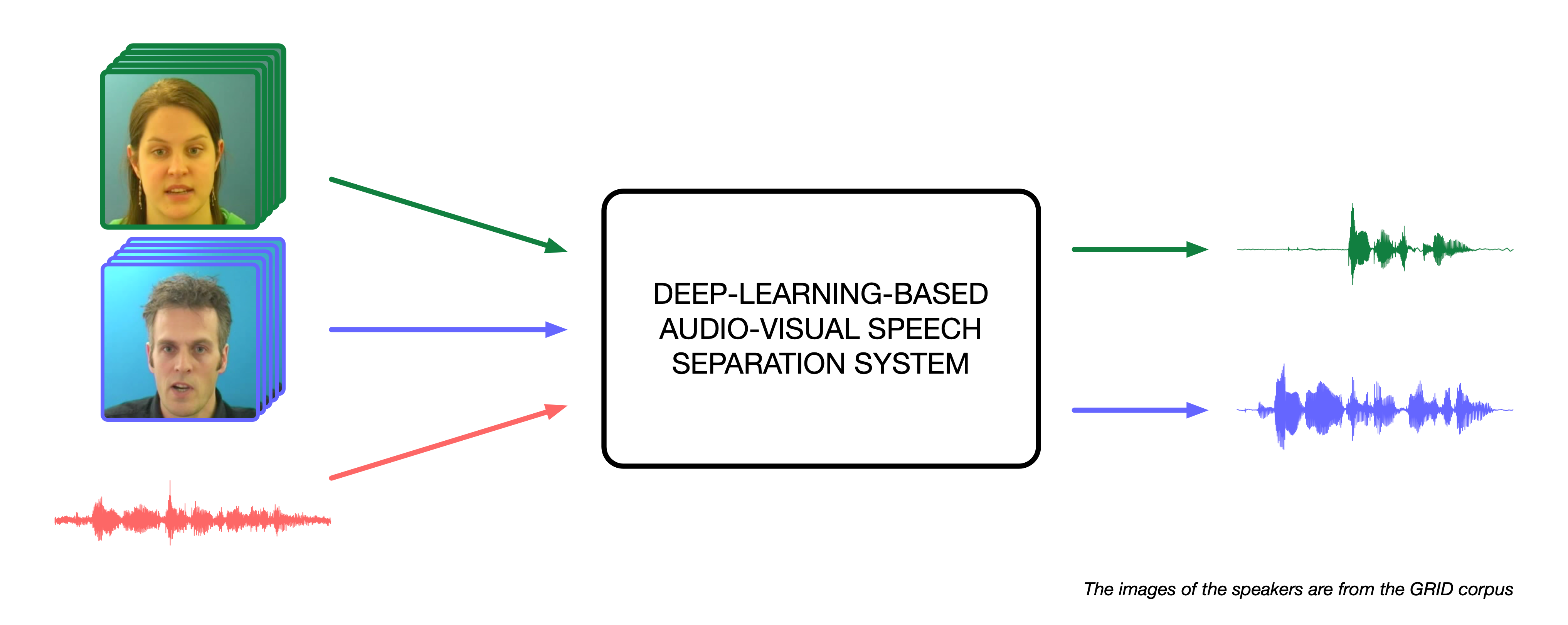

This document provides a list of resources on audio-visual speech enhancement and separation based on deep learning. It can be seen as an appendix to the overview paper that we recently wrote.

We intend to update the list as new material on the topic is released. Feel free to propose changes or point out a resource that should be included.

If you like and use this work, please ⭐ and consider citing our overview article. This will highlight the interest of the community in our work.

@misc{michelsanti2020overview,
Author = {Daniel Michelsanti and Zheng-Hua Tan and Shi-Xiong Zhang and Yong Xu and Meng Yu and Dong Yu and Jesper Jensen},
Title = {An Overview of Deep-Learning-Based Audio-Visual Speech Enhancement and Separation},
eprint={2008.09586},
archivePrefix={arXiv},
Year = {2020}}

- Audio-Visual Speech Corpora
- Performance Assessment
- Audio-Visual Speech Enhancement and Separation
- Speech Reconstruction from Silent Videos
- Audio-Visual Sound Source Separation for Non-Speech Signals
- Related Overview Articles

- AVA-ActiveSpeaker [paper] [dataset page]
- AV Chinese Mandarin [paper]
- AVSpeech [paper] [dataset page]
- ASPIRE [paper] [dataset page]
- CUAVE [paper]
- GRID [paper] [dataset page]
- KinectDigits [paper] [dataset page]
- LDC2009V01 [dataset page]
- Lombard GRID [paper] [dataset page]
- LRS [paper]
- LRS2 [paper] [dataset page]
- LRS3 [paper] [dataset page]
- Mandarin Sentences Corpus [paper]
- MODALITY [paper] [dataset page]
- MV-LRS [paper]
- NTCD-TIMIT [paper] [dataset page]
- Obama Weekly Addresses [paper]
- OuluVS [paper] [dataset page]
- OuluVS2 [paper] [dataset page]
- RAVDESS [paper] [dataset page]
- Small Mandarin Sentences Corpus [paper]
- TCD-TIMIT [paper] [dataset page]
- VoxCeleb [paper] [dataset page]
- VoxCeleb2 [paper] [dataset page]

- CSIG / CBAK / COVRL [paper]
- HASQI [paper v1] [paper v2] [code]
- POLQA [recommendation] [code]

- A. Adeel, J. Ahmad, H. Larijani, and A. Hussain, “A novel real-time, lightweight chaotic-encryption scheme for next-generation audio-visual hearing aids,” Cognitive Computation, vol. 12, no. 3, pp. 589–601, 2019. [paper]
- A. Adeel, M. Gogate, and A. Hussain, “Towards next-generation lip-reading driven hearing-aids: A preliminary prototype demo,” in Proc. of CHAT, 2017. [paper] [demo]
- A. Adeel, M. Gogate, and A. Hussain, “Contextual deep learning-based audio-visual switching for speech enhancement in real-world environments,” Information Fusion, vol. 59, pp. 163–170, 2020. [paper]
- A. Adeel, M. Gogate, A. Hussain, and W. M. Whitmer, “Lip-reading driven deep learning approach for speech enhancement,” IEEE Transactions on Emerging Topics in Computational Intelligence, 2019. [paper]
- T. Afouras, J. S. Chung, and A. Zisserman, “The conversation: Deep audio-visual speech enhancement,” in Proc. of Interspeech, 2018. [paper] [project page] [demo 1] [other demos]
- T. Afouras, J. S. Chung, and A. Zisserman, “My lips are concealed: Audio-visual speech enhancement through obstructions,” in Proc. of Interspeech, 2019. [paper] [project page] [demo]
- Z. Aldeneh, A. P. Kumar, B.-J. Theobald, E. Marchi, S. Kajarekar, D. Naik, and A. H. Abdelaziz, “Self-supervised learning of visual speech features with audiovisual speech enhancement,” arXiv preprint arXiv:2004.12031, 2020. [paper]
- A. Arriandiaga, G. Morrone, L. Pasa, L. Badino, and C. Bartolozzi, “Audio-visual target speaker extraction on multi-talker environment using event-driven cameras,” arXiv preprint arXiv:1912.02671, 2019. [paper]
- S.-Y. Chuang, Y. Tsao, C.-C. Lo, and H.-M. Wang, “Lite audio-visual speech enhancement,” in Proc. of Interspeech (to appear), 2020. [paper] [code]
- S.-W. Chung, S. Choe, J. S. Chung, and H.-G. Kang, “Facefilter: Audio-visual speech separation using still images,” arXiv preprint arXiv:2005.07074, 2020. [paper] [demo]
- A. Ephrat, I. Mosseri, O. Lang, T. Dekel, K. Wilson, A. Hassidim, W. T. Freeman, and M. Rubinstein, “Looking to listen at the cocktail party: A speaker-independent audio-visual model for speech separation,” ACM Transactions on Graphics, vol. 37, no. 4, pp. 112:1–112:11, 2018. [paper] [project page] [demo] [supplementary material]
- A. Gabbay, A. Ephrat, T. Halperin, and S. Peleg, “Seeing through noise: Visually driven speaker separation and enhancement,” in Proc. of ICASSP, 2018. [paper] [project page] [demo] [code]
- A. Gabbay, A. Shamir, and S. Peleg, “Visual speech enhancement,” in Proc. of Interspeech, 2018. [paper] [project page] [demo 1] [other demos] [code]
- M. Gogate, A. Adeel, R. Marxer, J. Barker, and A. Hussain, “DNN driven speaker independent audio-visual mask estimation for speech separation,” in Proc. of Interspeech, 2018. [paper]
- M. Gogate, K. Dashtipour, A. Adeel, and A. Hussain, “Cochleanet: A robust language-independent audio-visual model for speech enhancement,” Information Fusion, vol. 63, pp. 273–285, 2020. [paper] [project page] [demo] [supplementary material]
- R. Gu, S.-X. Zhang, Y. Xu, L. Chen, Y. Zou, and D. Yu, “Multi-modal multi-channel target speech separation,” IEEE Journal of Selected Topics in Signal Processing, 2020. [paper] [project page] [demo]
- J.-C. Hou, S.-S. Wang, Y.-H. Lai, Y. Tsao, H.-W. Chang, and H.-M. Wang, “Audio-visual speech enhancement using multimodal deep convolutional neural networks,” IEEE Transactions on Emerging Topics in Computational Intelligence, vol. 2, no. 2, pp. 117–128, 2018. [paper]
- J.-C. Hou, S.-S. Wang, Y.-H. Lai, J.-C. Lin, Y. Tsao, H.-W. Chang, and H.-M. Wang, “Audio-visual speech enhancement using deep neural networks,” in Proc. of APSIPA, 2016. [paper]
- A. Hussain, J. Barker, R. Marxer, A. Adeel, W. Whitmer, R. Watt, and P. Derleth, “Towards multi-modal hearing aid design and evaluation in realistic audio-visual settings: Challenges and opportunities,” in Proc. of CHAT, 2017. [paper]
- E. Ideli, “Audio-visual speech processing using deep learning techniques,” MSc thesis, Applied Sciences: School of Engineering Science, 2019. [paper]
- E. Ideli, B. Sharpe, I. V. Bajić, and R. G. Vaughan, “Visually assisted time-domain speech enhancement,” in Proc. of GlobalSIP, 2019. [paper]
- B. İnan, M. Cernak, H. Grabner, H. P. Tukuljac, R. C. Pena, and B. Ricaud, “Evaluating audiovisual source separation in the context of video conferencing,” in Proc. of Interspeech, 2019. [paper] [code]
- M. L. Iuzzolino and K. Koishida, “AV(SE)²: Audio-visual squeeze-excite speech enhancement,” in Proc. of ICASSP. IEEE, 2020, pp. 7539–7543. [paper]
- H. R. V. Joze, A. Shaban, M. L. Iuzzolino, and K. Koishida, “MMTM: Multimodal transfer module for CNN fusion,” in Proc. of CVPR, 2020. [paper]
- F. U. Khan, B. P. Milner, and T. Le Cornu, “Using visual speech information in masking methods for audio speaker separation,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 26, no. 10, pp. 1742–1754, 2018. [paper]
- C. Li and Y. Qian, “Deep audio-visual speech separation with attention mechanism,” in Proc. of ICASSP, 2020. [paper]
- Y. Li, Z. Liu, Y. Na, Z. Wang, B. Tian, and Q. Fu, “A visual-pilot deep fusion for target speech separation in multitalker noisy environment,” in Proc. of ICASSP, 2020. [paper]
- R. Lu, Z. Duan, and C. Zhang, “Listen and look: Audio–visual matching assisted speech source separation,” IEEE Signal Processing Letters, vol. 25, no. 9, pp. 1315–1319, 2018. [paper]
- R. Lu, Z. Duan, and C. Zhang, “Audio–visual deep clustering for speech separation,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 27, no. 11, pp. 1697–1712, 2019. [paper]
- Y. Luo, J. Wang, X. Wang, L. Wen, and L. Wang, “Audio-visual speech separation using i-Vectors,” in Proc. of ICICSP, 2019. [paper]
- D. Michelsanti, Z.-H. Tan, S. Sigurdsson, and J. Jensen, “On training targets and objective functions for deep-learning-based audio-visual speech enhancement,” in Proc. of ICASSP, 2019. [paper] [supplementary material]
- D. Michelsanti, Z.-H. Tan, S. Sigurdsson, and J. Jensen, “Deep-learning-based audio-visual speech enhancement in presence of Lombard effect,” Speech Communication, vol. 115, pp. 38–50, 2019. [paper] [demo]
- D. Michelsanti, Z.-H. Tan, S. Sigurdsson, and J. Jensen, “Effects of Lombard reflex on the performance of deep-learning-based audio-visual speech enhancement systems,” in Proc. of ICASSP, 2019. [paper] [demo]
- G. Morrone, S. Bergamaschi, L. Pasa, L. Fadiga, V. Tikhanoff, and L. Badino, “Face landmark-based speaker-independent audio-visual speech enhancement in multi-talker environments,” in Proc. of ICASSP, 2019. [paper] [project page] [demo] [other demos] [code]
- T. Ochiai, M. Delcroix, K. Kinoshita, A. Ogawa, and T. Nakatani, “Multimodal SpeakerBeam: Single channel target speech extraction with audio-visual speaker clues,” in Proc. of Interspeech, 2019. [paper]
- A. Owens and A. A. Efros, “Audio-visual scene analysis with self-supervised multisensory features,” in Proc. of ECCV, 2018. [paper] [project page] [demo] [code]
- L. Pasa, G. Morrone, and L. Badino, “An analysis of speech enhancement and recognition losses in limited resources multi-talker single channel audio-visual ASR,” in Proc. of ICASSP, 2020. [paper]
- L. Qu, C. Weber, and S. Wermter, “Multimodal target speech separation with voice and face references,” arXiv preprint arXiv:2005.08335, 2020. [paper] [project page] [demo]
- M. Sadeghi and X. Alameda-Pineda, “Mixture of inference networks for VAE-based audio-visual speech enhancement,” arXiv preprint arXiv:1912.10647, 2019. [paper] [project page] [demo] [code]
- M. Sadeghi and X. Alameda-Pineda, “Robust unsupervised audio-visual speech enhancement using a mixture of variational autoencoders,” in Proc. of ICASSP, 2020. [paper] [project page] [supplementary material] [code]
- M. Sadeghi, S. Leglaive, X. Alameda-Pineda, L. Girin, and R. Horaud, “Audio-visual speech enhancement using conditional variational autoencoders,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 1788–1800, 2020. [paper] [project page] [demo] [code]
- Z. Sun, Y. Wang, and L. Cao, “An attention based speaker-independent audio-visual deep learning model for speech enhancement,” in Proc. of MMM, 2020. [paper]
- K. Tan, Y. Xu, S.-X. Zhang, M. Yu, and D. Yu, “Audio-visual speech separation and dereverberation with a two-stage multimodal network,” IEEE Journal of Selected Topics in Signal Processing, vol. 14, no. 3, pp. 542–553, 2020. [paper] [project page] [demo]
- W. Wang, C. Xing, D. Wang, X. Chen, and F. Sun, “A robust audio-visual speech enhancement model,” in Proc. of ICASSP, 2020. [paper]
- J. Wu, Y. Xu, S.-X. Zhang, L.-W. Chen, M. Yu, L. Xie, and D. Yu, “Time domain audio visual speech separation,” in Proc. of ASRU, 2019. [paper] [project page] [demo]
- Z. Wu, S. Sivadas, Y. K. Tan, M. Bin, and R. S. M. Goh, “Multi-modal hybrid deep neural network for speech enhancement,” arXiv preprint arXiv:1606.04750, 2016. [paper]
- Y. Xu, M. Yu, S.-X. Zhang, L. Chen, C. Weng, J. Liu, and D. Yu, “Neural spatio-temporal beamformer for target speech separation,” in Proc. of Interspeech (to appear), 2020. [paper] [project page] [demo]

- H. Akbari, H. Arora, L. Cao, and N. Mesgarani, “Lip2AudSpec: Speech reconstruction from silent lip movements video,” in Proc. of ICASSP, 2018. [paper] [demo 1] [demo 2] [demo 3] [code]
- A. Ephrat, T. Halperin, and S. Peleg, “Improved speech reconstruction from silent video,” in Proc. of CVAVM, 2017. [paper] [project page] [demo]
- A. Ephrat and S. Peleg, “Vid2Speech: Speech reconstruction from silent video,” in Proc. of ICASSP, 2017. [paper] [project page] [demo 1] [demo 2] [demo 3] [code]
- Y. Kumar, M. Aggarwal, P. Nawal, S. Satoh, R. R. Shah, and R. Zimmermann, “Harnessing AI for speech reconstruction using multi-view silent video feed,” in Proc. of ACM-MM, 2018. [paper]
- Y. Kumar, R. Jain, K. M. Salik, R. R. Shah, Y. Yin, and R. Zimmermann, “Lipper: Synthesizing thy speech using multi-view lipreading,” in Proc. of AAAI, 2019. [paper] [demo]
- Y. Kumar, R. Jain, M. Salik, R. R. Shah, R. Zimmermann, and Y. Yin, “MyLipper: A personalized system for speech reconstruction using multi-view visual feeds,” in Proc. of ISM, 2018. [paper] [demo]
- T. Le Cornu and B. Milner, “Reconstructing intelligible audio speech from visual speech features,” in Proc. of Interspeech, 2015. [paper]
- T. Le Cornu and B. Milner, “Generating intelligible audio speech from visual speech,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 25, no. 9, pp. 1751–1761, 2017. [paper] [demo]
- D. Michelsanti, O. Slizovskaia, G. Haro, E. Gómez, Z.-H. Tan, and J. Jensen, “Vocoder-based speech synthesis from silent videos,” in Proc. of Interspeech (to appear), 2020. [paper] [project page] [demo]
- K. Prajwal, R. Mukhopadhyay, V. P. Namboodiri, and C. Jawahar, “Learning individual speaking styles for accurate lip to speech synthesis,” in Proc. of CVPR, 2020. [paper] [project page] [demo] [code]
- L. Qu, C. Weber, and S. Wermter, “LipSound: Neural mel-spectrogram reconstruction for lip reading,” in Proc. of Interspeech, 2019. [paper] [demo]
- Y. Takashima, T. Takiguchi, and Y. Ariki, “Exemplar-based lip-to-speech synthesis using convolutional neural networks,” in Proc. of IW-FCV, 2019. [paper]
- S. Uttam, Y. Kumar, D. Sahrawat, M. Aggarwal, R. R. Shah, D. Mahata, and A. Stent, “Hush-hush speak: Speech reconstruction using silent videos,” in Proc. of Interspeech, 2019. [paper] [demo] [code]
- K. Vougioukas, P. Ma, S. Petridis, and M. Pantic, “Video-driven speech reconstruction using generative adversarial networks,” in Proc. of Interspeech, 2019. [paper] [project page] [demo 1] [demo 2] [demo 3]

- C. Gan, D. Huang, H. Zhao, J. B. Tenenbaum, and A. Torralba, “Music gesture for visual sound separation,” in Proc. of CVPR, 2020. [paper] [project page] [demo]
- R. Gao, R. Feris, and K. Grauman, “Learning to separate object sounds by watching unlabeled video,” in Proc. of ECCV, 2018. [paper] [project page] [demo 1] [demo 2] [code]
- R. Gao and K. Grauman, “2.5D visual sound,” in Proc. of CVPR, 2019. [paper] [project page] [demo] [code]
- R. Gao and K. Grauman, “Co-separating sounds of visual objects,” in Proc. of ICCV, 2019. [paper] [project page] [demo] [code]
- S. Parekh, A. Ozerov, S. Essid, N. Q. Duong, P. Pérez, and G. Richard, “Identify, locate and separate: Audio-visual object extraction in large video collections using weak supervision,” in Proc. of WASPAA, 2019. [paper] [project page] [demo]
- A. Rouditchenko, H. Zhao, C. Gan, J. McDermott, and A. Torralba, “Self-supervised audio-visual co-segmentation,” in Proc. of ICASSP, 2019. [paper]
- O. Slizovskaia, G. Haro, and E. Gómez, “Conditioned source separation for music instrument performances,” arXiv preprint arXiv:2004.03873, 2020. [paper] [project page] [demo] [code]
- X. Xu, B. Dai, and D. Lin, “Recursive visual sound separation using minus-plus net,” in Proc. of ICCV, 2019. [paper] [demo]
- H. Zhao, C. Gan, W.-C. Ma, and A. Torralba, “The sound of motions,” in Proc. of ICCV, 2019. [paper] [demo]
- H. Zhao, C. Gan, A. Rouditchenko, C. Vondrick, J. McDermott, and A. Torralba, “The sound of pixels,” in Proc. of ECCV, 2018. [paper] [project page] [demo 1] [demo 2] [code]
- L. Zhu and E. Rahtu, “Separating sounds from a single image,” arXiv preprint arXiv:2007.07984, 2020. [paper] [project page]
- L. Zhu and E. Rahtu, “Visually guided sound source separation using cascaded opponent filter network,” arXiv preprint arXiv:2006.03028, 2020. [paper] [project page]

- J. Rincón-Trujillo and D. M. Córdova-Esparza, “Analysis of speech separation methods based on deep learning,” International Journal of Computer Applications, vol. 148, no. 9, pp. 21–29, 2019. [paper]
- B. Rivet, W. Wang, S. M. Naqvi, and J. A. Chambers, “Audiovisual speech source separation: An overview of key methodologies,” IEEE Signal Processing Magazine, vol. 31, no. 3, pp. 125–134, 2014. [paper]
- T. M. F. Taha and A. Hussain, “A survey on techniques for enhancing speech,” International Journal of Computer Applications, vol. 179, no. 17, pp. 1–14, 2018. [paper]
- D. L. Wang and J. Chen, “Supervised speech separation based on deep learning: An overview,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2018. [paper]
- H. Zhu, M. Luo, R. Wang, A. Zheng, and R. He, “Deep audio-visual learning: A survey,” arXiv preprint arXiv:2001.04758, 2020. [paper]