How Do LMMs Perform on Video Quality Understanding?

We introduce Q-Bench-Video, a new benchmark specifically designed to evaluate LMMs' proficiency in discerning video quality.

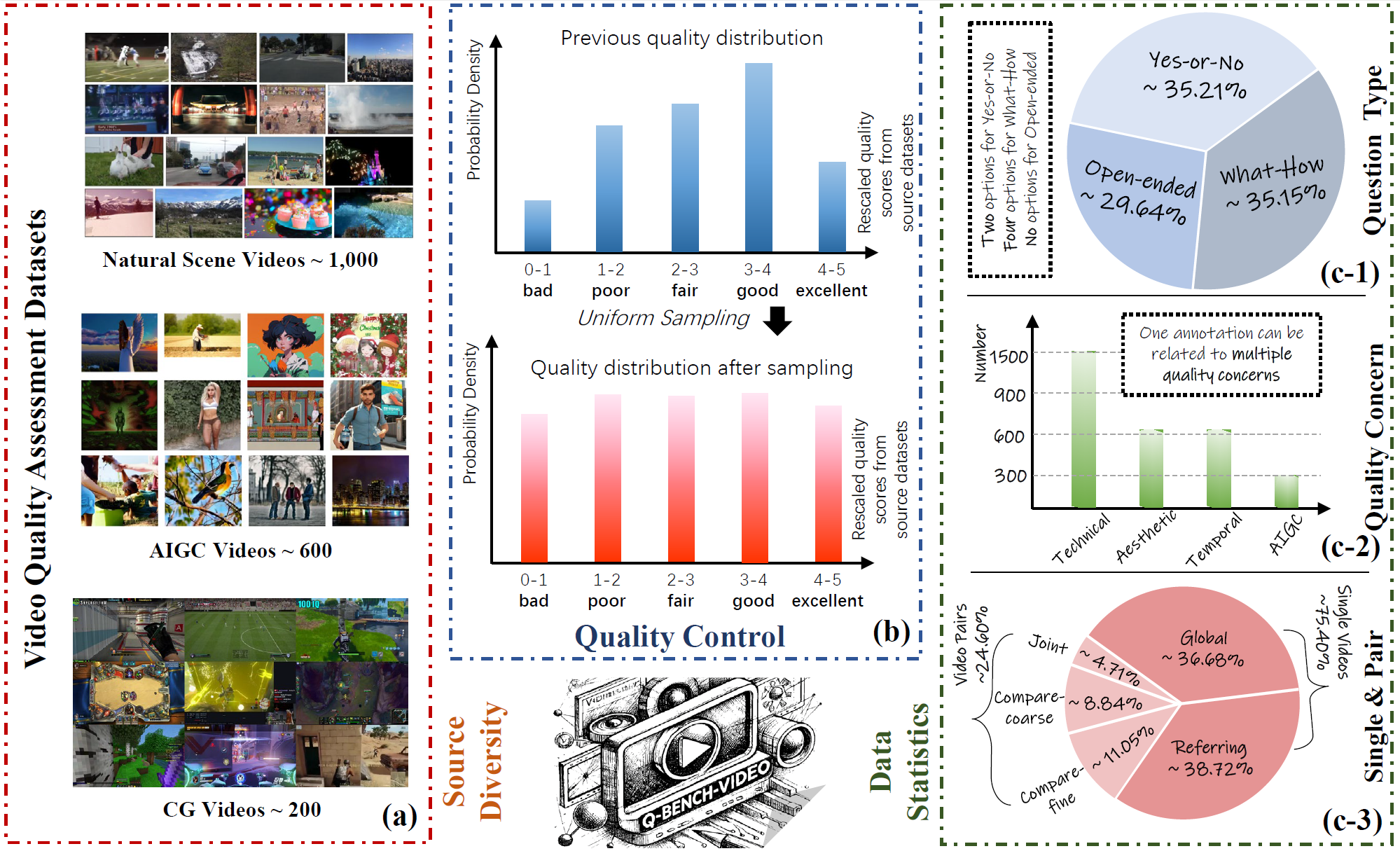

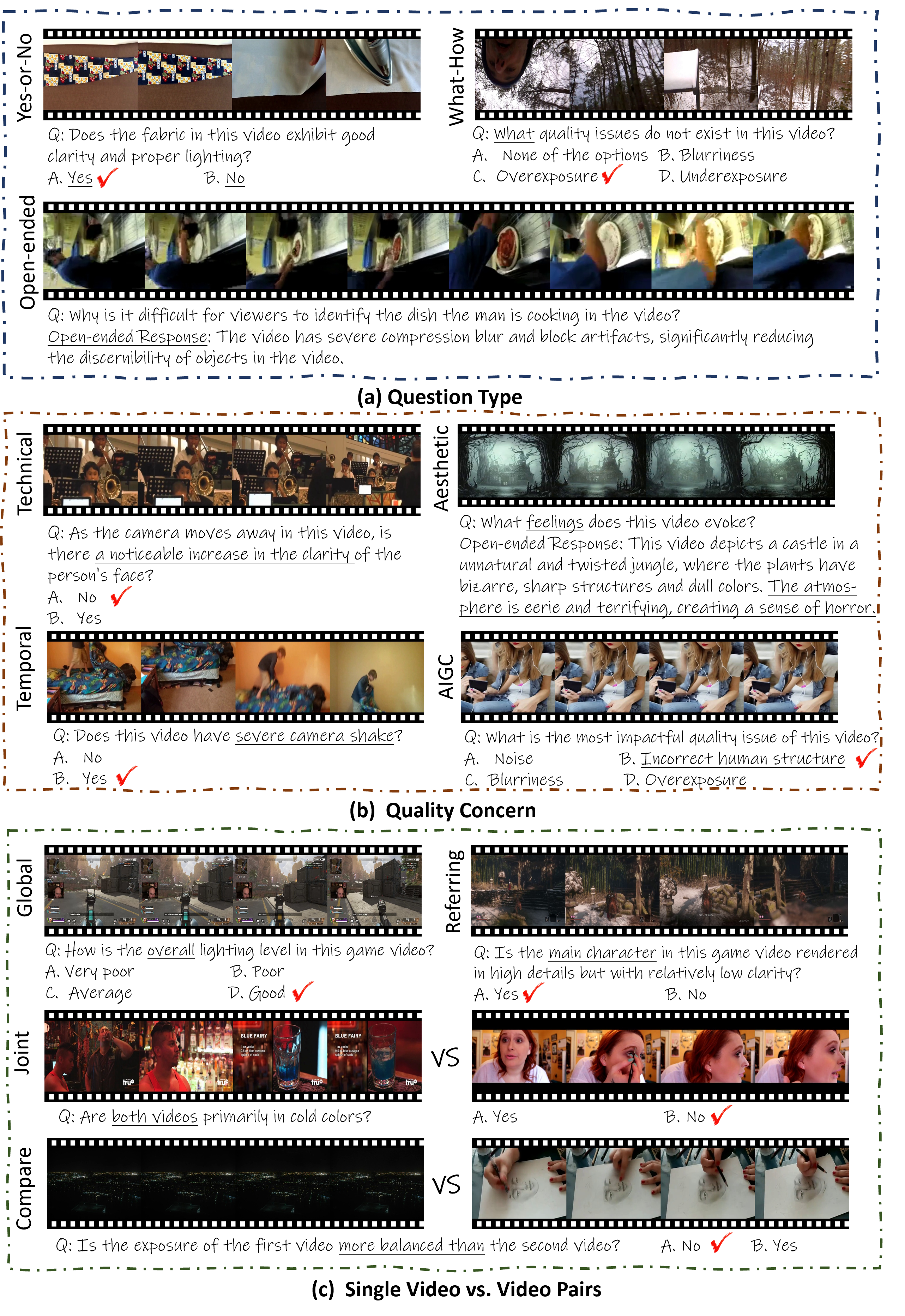

A. To ensure the diversity of video sources, Q-Bench-Video encompasses videos from natural scenes, computer graphics (CG), and AI-generated content (AIGC).

B. Building on the traditional multiple-choice question format with Yes-or-No and What-How categories, we include Open-ended questions to better evaluate complex scenarios. We also incorporate video-pair quality comparison questions to make the benchmark more comprehensive.

C. Beyond the traditional Technical, Aesthetic, and Temporal distortions, we extend the evaluation to AIGC distortions, addressing the growing demand for video generation.

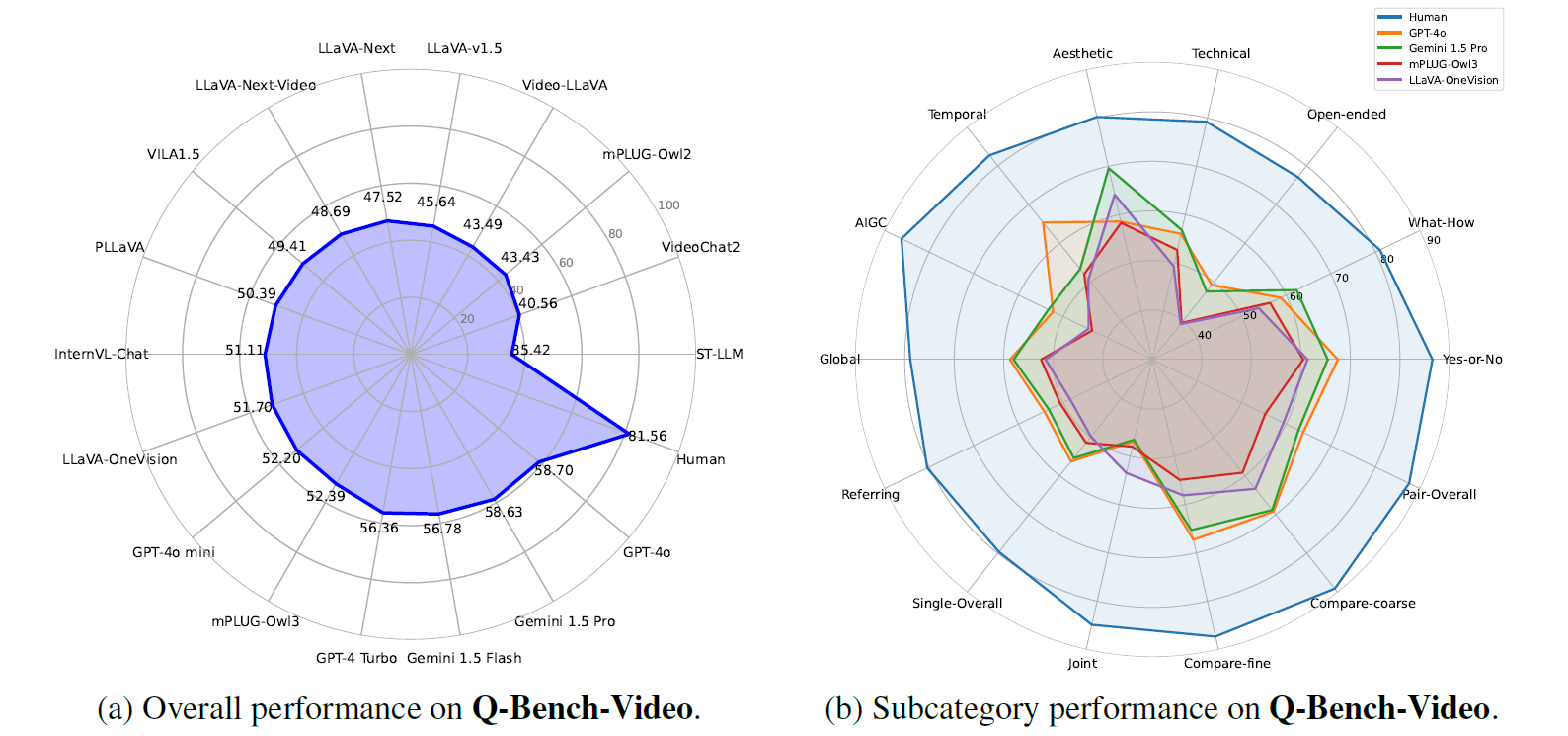

In total, we collect 2,378 question-answer pairs and test 12 open-source and 5 proprietary LMMs on them. Our findings indicate that while LMMs have a basic understanding of video quality, their performance remains incomplete and imprecise, falling well short of human performance (the best model, GPT-4o, scores 58.70% overall versus 81.56% for humans).

- [2024/10/2] 🔥 Release the technical report for Q-Bench-Video.

- [2024/9/24] 🔥 Release the sample script for testing on Q-Bench-Video.

- [2024/9/20] 🔥 The GitHub repo for Q-Bench-Video is online. Do you want to find out how your LMM performs on video quality understanding? Come and test it on Q-Bench-Video! Dataset Download

In this benchmark, each data item is a meta-structure tuple (V, Q, A, C) with four components: the video object V (a single video or a pair of videos), the video quality query Q, the set of candidate answers A, and the correct answer C. The subcategories are organized as follows:

- Question Types: Yes-or-No Questions, What-How Questions, Open-ended Questions

- Quality Concerns: Technical, Aesthetic, Temporal, and AIGC

- Single Videos & Video Pairs: Single-Global, Single-Referring, Pair-Compare-Coarse, Pair-Compare-Fine
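To make the (V, Q, A, C) layout concrete, here is a minimal Python sketch of a single data item. The class, enum, and field names (`QBenchVideoItem`, `candidates`, `setting`, and so on) are our own hypothetical choices, not the schema of the released JSON files; the enum values simply mirror the subcategories listed above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple, Union


class QuestionType(Enum):
    YES_OR_NO = "Yes-or-No"
    WHAT_HOW = "What-How"
    OPEN_ENDED = "Open-ended"


class QualityConcern(Enum):
    TECHNICAL = "Technical"
    AESTHETIC = "Aesthetic"
    TEMPORAL = "Temporal"
    AIGC = "AIGC"


class VideoSetting(Enum):
    SINGLE_GLOBAL = "Single-Global"
    SINGLE_REFERRING = "Single-Referring"
    PAIR_COMPARE_COARSE = "Pair-Compare-Coarse"
    PAIR_COMPARE_FINE = "Pair-Compare-Fine"


# V: one video path, or a pair of video paths for comparison questions.
VideoObject = Union[str, Tuple[str, str]]


@dataclass
class QBenchVideoItem:
    """Hypothetical container for one (V, Q, A, C) item; names are illustrative."""
    video: VideoObject        # V
    query: str                # Q
    candidates: List[str]     # A (assumed empty for open-ended questions)
    correct: str              # C
    question_type: QuestionType
    concern: QualityConcern
    setting: VideoSetting

    def __post_init__(self):
        # Pair settings must carry two videos; single settings exactly one.
        wants_pair = self.setting.name.startswith("PAIR")
        if wants_pair != isinstance(self.video, tuple):
            raise ValueError("video object does not match the video setting")
        # For multiple-choice questions, C must be one of the candidates in A.
        is_choice = self.question_type is not QuestionType.OPEN_ENDED
        if is_choice and self.correct not in self.candidates:
            raise ValueError("correct answer must be one of the candidates")

    @property
    def is_pair(self) -> bool:
        return isinstance(self.video, tuple)
```

Validating in `__post_init__` is one design option: it rejects malformed items (for example, a Pair-Compare question with only one video) at load time rather than at evaluation time.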

Among open-source models, mPLUG-Owl3 ranks first overall; among proprietary models, GPT-4o ranks first overall.

A Quick Look at the Q-Bench-Video Outcomes

| Model | Yes-or-No | What-How | Open-ended | Technical | Aesthetic | Temporal | AIGC | Overall |
|---|---|---|---|---|---|---|---|---|
| Random guess w/o Open-ended | 50.00% | 25.00% | / | 37.10% | 37.31% | 37.25% | 37.22% | 37.79% |
| Human | 86.57% | 81.00% | 77.11% | 79.22% | 80.23% | 82.72% | 86.21% | 81.56% |
| Open-source Image LMMs | | | | | | | | |
| LLaVA-Next (Mistral-7B) | 62.83% | 45.14% | 33.69% | 46.38% | 57.86% | 47.84% | 48.46% | 47.52% |
| LLaVA-v1.5 (Vicuna-v1.5-13B) | 52.98% | 46.44% | 37.01% | 45.77% | 58.12% | 45.30% | 46.48% | 45.64% |
| mPLUG-Owl2 (LLaMA2-7B) | 59.19% | 39.07% | 31.19% | 42.07% | 52.38% | 41.71% | 39.37% | 43.43% |
| Open-source Video LMMs | | | | | | | | |
| mPLUG-Owl3 (Qwen2-7B) | 60.48% | 56.39% | 39.48% | 52.68% | 58.31% | 52.05% | 43.49% | 52.39% |
| LLaVA-OneVision (Qwen2-7B) | 61.34% | 53.88% | 39.15% | 49.35% | 64.15% | 50.68% | 44.30% | 51.70% |
| InternVL-Chat (Vicuna-7B) | 66.02% | 52.13% | 33.93% | 48.42% | 52.73% | 50.59% | 53.12% | 51.11% |
| VILA1.5 (LLaMA3-8B) | 61.95% | 46.00% | 39.60% | 47.85% | 57.85% | 45.65% | 42.57% | 49.41% |
| PLLaVA (Mistral-7B) | 65.63% | 52.33% | 32.23% | 49.69% | 61.32% | 50.96% | 53.64% | 50.39% |
| LLaVA-Next-Video (Mistral-7B) | 61.34% | 45.95% | 38.10% | 49.03% | 60.94% | 46.97% | 49.40% | 48.69% |
| ST-LLM (Vicuna-v1.1-7B) | 44.63% | 28.50% | 32.78% | 34.99% | 46.11% | 34.28% | 34.02% | 35.42% |
| Video-LLaVA (Vicuna-v1.5-7B) | 64.67% | 40.79% | 29.11% | 43.25% | 54.04% | 42.38% | 42.76% | 43.49% |
| VideoChat2 (Mistral-7B) | 56.09% | 29.98% | 34.99% | 39.26% | 50.02% | 38.25% | 35.88% | 40.56% |
| Proprietary LMMs | | | | | | | | |
| Gemini 1.5 Flash | 65.48% | 56.79% | 47.51% | 54.11% | 66.58% | 53.51% | 50.22% | 56.78% |
| Gemini 1.5 Pro | 65.42% | 62.35% | 47.57% | 56.80% | 69.61% | 53.38% | 53.26% | 58.63% |
| GPT-4o mini | 62.95% | 50.93% | 42.10% | 49.38% | 60.90% | 48.43% | 41.71% | 52.20% |
| GPT-4o | 67.48% | 58.79% | 49.25% | 56.01% | 58.57% | 65.39% | 52.22% | 58.70% |
| GPT-4 Turbo | 66.93% | 58.33% | 40.15% | 54.23% | 66.23% | 54.00% | 52.04% | 56.36% |

We compare the performance of LMMs against humans, from which several conclusions can be drawn:

- General performance: Human > Proprietary LMMs > Open-source LMMs > Random guess.

- Open-ended questions are more challenging for LMMs.

- LMMs exhibit unbalanced performance across different types of distortions.
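As a quick check of the general-performance ordering, the sketch below averages the Overall column of the results table per group. It is only an unweighted mean over the listed models (our simplification, not a metric reported by the benchmark). The ordering holds for group averages, although ST-LLM individually scores below the random-guess baseline.

```python
from statistics import mean

# Overall scores (%) copied from the results table above.
OVERALL = {
    "Human": [81.56],
    "Proprietary LMMs": [56.78, 58.63, 52.20, 58.70, 56.36],
    "Open-source LMMs": [47.52, 45.64, 43.43, 52.39, 51.70, 51.11,
                         49.41, 50.39, 48.69, 35.42, 43.49, 40.56],
    "Random guess": [37.79],
}


def group_summary(table):
    """Return (group, mean, best) tuples sorted by mean Overall score, highest first."""
    rows = [(name, mean(scores), max(scores)) for name, scores in table.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


for name, avg, best in group_summary(OVERALL):
    print(f"{name:<17} mean {avg:6.2f}%  best {best:6.2f}%")
```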

- Test on Q-Bench-Video

We assume that you have already downloaded Q-Bench-Video.

We provide a sample script, GPT_test.py, for testing models that use the GPT API format on Q-Bench-Video.

Use the following command, with any necessary modifications, to quickly test GPT or your own LMM.

    python GPT_test.py \
      --json_file path/to/Q_Bench_Video_dev.json \
      --video_dir path/to/video/directory \
      --output_file path/to/Q_Bench_Video_dev_response.json \
      --api_key your_openai_api_key
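The command presumably writes model responses to the file passed via `--output_file`. Its schema is not described here, so the following is only a sketch of scoring multiple-choice replies, assuming a JSON list of dicts with hypothetical `response` and `correct_choice` keys, and replies that begin with an option letter. The helper names are ours, not part of GPT_test.py.

```python
import json
import re
from typing import Dict, List, Optional

# Assumes the model was asked to start its reply with the option letter,
# e.g. "B", "B." or "(B) The first video". A reply opening with the
# article "A" would be misread, so adapt the pattern to your prompt.
_CHOICE_RE = re.compile(r"\s*\(?([A-D])(?:[.):]|\s|$)")


def extract_choice(response: str) -> Optional[str]:
    """Return the leading option letter A-D of a response, or None if absent."""
    match = _CHOICE_RE.match(response)
    return match.group(1) if match else None


def mc_accuracy(items: List[Dict[str, str]]) -> float:
    """Fraction of items whose extracted letter equals the correct letter.

    The keys "response" and "correct_choice" are hypothetical; adapt them
    to the actual layout of your response file.
    """
    if not items:
        return 0.0
    hits = sum(extract_choice(it["response"]) == it["correct_choice"] for it in items)
    return hits / len(items)


def load_responses(path: str) -> List[Dict[str, str]]:
    # Assumes the output file is a JSON list of per-question dicts.
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Usage would then be along the lines of `mc_accuracy(load_responses("path/to/Q_Bench_Video_dev_response.json"))`, after renaming the keys to match the real file.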

- Evaluating open-ended responses:

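We provide a sample function that uses GPT-4o as a judge to rate open-ended responses. `result` is the list of raw ratings from five judge queries ("N/A" for failed or timed-out queries), while `score` is the standardized score: the mean of the valid ratings, each divided by 2 so that it lies in [0, 1].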

    import timeout_decorator
    from openai import OpenAI

    client = OpenAI(api_key="your_api_key")


    def judge_open(question, answer, correct_ans):
        result = []
        msg = f'''Given the question [{question}], evaluate whether the response [{answer}] completely matches the correct answer [{correct_ans}].
    First, check the response and please rate score 0 if the response is not a valid answer.
    Please rate score 2 if the response completely or almost completely matches the correct answer on completeness, accuracy, and relevance.
    Please rate score 1 if the response partly matches the correct answer on completeness, accuracy, and relevance.
    Please rate score 0 if the response doesn't match the correct answer on completeness, accuracy, and relevance at all.
    Please only provide the result in the following format: Score:'''
        print(msg)

        # Abort any single judge call that takes longer than 5 seconds.
        @timeout_decorator.timeout(5)
        def get_completion(msg):
            completion = client.chat.completions.create(
                model="gpt-4o",
                messages=[
                    {"role": "system", "content": "You are a helpful assistant that grades answers related to visual video quality. There are a lot of special terms or keywords related to video processing and photography. You will pay attention to the context of 'quality evaluation' when grading."},
                    {"role": "user", "content": msg},
                ],
            )
            return completion.choices[0].message.content

        # Query the judge 5 times; failed or timed-out calls are recorded as "N/A".
        for _ in range(5):
            try:
                response = get_completion(msg)
                print(response)
                # Expected reply "Score: <0|1|2>": keep the text after the last ": ".
                result.append(response.split(": ")[-1].strip())
            except timeout_decorator.TimeoutError:
                result.append("N/A")
            except Exception:
                result.append("N/A")

        # Standardize: average the valid ratings, mapping {0, 1, 2} to {0, 0.5, 1}.
        cnt = 0
        score = 0.0
        for s in result:
            if s in ['0', '1', '2']:
                cnt += 1
                score += float(s) / 2
        # None signals that no judge call returned a valid rating.
        score = score / cnt if cnt else None
        return result, score
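Splitting on `": "` discards replies such as "Score: 2." or "**Score:** 1", because the extracted text is then not exactly '0', '1' or '2'. A more tolerant parser is sketched below; `parse_score` is a hypothetical helper of ours, not part of the released script.

```python
import re
from typing import Optional

# Tolerates variants such as "Score: 2", "Score: 2." or "**Score:** 1".
_SCORE_RE = re.compile(r"Score\W*([012])\b", re.IGNORECASE)


def parse_score(reply: str) -> Optional[str]:
    """Return the rating '0', '1' or '2' from a judge reply, or None if absent."""
    match = _SCORE_RE.search(reply)
    return match.group(1) if match else None
```

It could replace the `response.split(": ")[-1]` extraction in `judge_open`; a `None` result is simply skipped by the averaging step, just like "N/A".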

For queries, please contact any of the first authors of this paper:

- Zicheng Zhang, zzc1998@sjtu.edu.cn, @zzc-1998