Conditional Flow Matching (CFM) is a fast way to train continuous normalizing flow (CNF) models. CFM is a simulation-free training objective for CNFs that enables conditional generative modeling and speeds up both training and inference. CFM's performance closes the gap between CNFs and diffusion models. To spread its use within the machine learning community, we have built TorchCFM, a library focused on Flow Matching methods. TorchCFM shows how Flow Matching methods can be trained and applied to image generation, single-cell dynamics, tabular data, and soon SO(3) data.
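As a rough illustration of why CFM is simulation-free, the NumPy sketch below computes the independent-coupling CFM regression loss with a straight-line conditional path: sample a time $t$, form $x_t = (1-t)x_0 + t x_1 + \sigma\epsilon$, and regress a vector field onto $u_t = x_1 - x_0$, without integrating an ODE. It is a sketch of the math under our own assumptions, not torchcfm's implementation; `icfm_sample`, `cfm_loss` and `zero_field` are hypothetical names, and a real model would replace `zero_field` with a neural network trained by gradient descent.

```python
import numpy as np


# Hypothetical helpers for illustration; not part of the torchcfm API.
def icfm_sample(x0, x1, sigma=0.0, rng=None):
    """Sample (t, x_t, u_t) for a straight-line conditional path between paired samples.

    x_t = (1 - t) * x0 + t * x1 + sigma * eps, with eps ~ N(0, I)
    u_t = x1 - x0 (the time derivative of x_t when sigma is constant)
    """
    rng = np.random.default_rng() if rng is None else rng
    t = rng.uniform(size=(x0.shape[0], 1))  # one time per sample, shape (B, 1)
    eps = rng.standard_normal(x0.shape)
    xt = (1.0 - t) * x0 + t * x1 + sigma * eps
    ut = x1 - x0
    return t[:, 0], xt, ut


def cfm_loss(vector_field, x0, x1, sigma=0.0, rng=None):
    """Mean squared error between v(t, x_t) and u_t; no ODE is integrated."""
    t, xt, ut = icfm_sample(x0, x1, sigma, rng)
    vt = vector_field(t, xt)
    return float(np.mean((vt - ut) ** 2))


# Usage with hypothetical data: independent source and target batches.
rng = np.random.default_rng(0)
x0 = rng.standard_normal((256, 2))        # source samples, e.g. a standard Gaussian
x1 = rng.standard_normal((256, 2)) + 4.0  # hypothetical target samples


def zero_field(t, x):
    """Placeholder for a neural network v_theta(t, x)."""
    return np.zeros_like(x)


print(cfm_loss(zero_field, x0, x1, sigma=0.1, rng=rng))
```

Because the loss only needs samples of $x_0$, $x_1$ and $t$, each training step costs one forward pass of the network; an ODE solver is only needed at sampling time.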

The density, vector field, and trajectories of simulation-free CNF training schemes: mapping 8 Gaussians to two moons (above) and a single Gaussian to two moons (below). Action Matching with the same architecture (a 3x64 MLP with SELU activations) underfits with the ReLU, SiLU, and SiLU activations suggested in the example code, but it appears to fit better under our training setup (Action-Matching (Swish)).

The models used to produce the GIFs are stored in examples/models and can be visualized with this notebook:

We also have included an example of unconditional MNIST generation in examples/notebooks/mnist_example.ipynb for both deterministic and stochastic generation.

In our version 1 update, we have extracted implementations of the relevant flow matching variants into the torchcfm package. This abstracts the choice of the conditional distribution $q(z)$. torchcfm supplies the following loss functions:

- ConditionalFlowMatcher: $z = (x_0, x_1)$, $q(z) = q(x_0) q(x_1)$

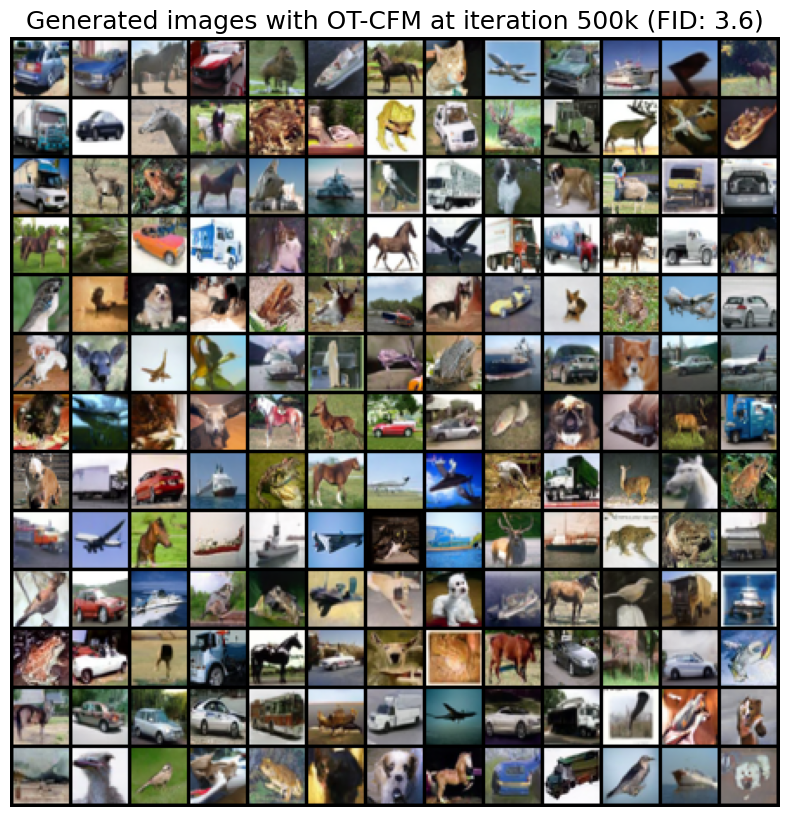

- ExactOptimalTransportConditionalFlowMatcher: $z = (x_0, x_1)$, $q(z) = \pi(x_0, x_1)$, where $\pi$ is an exact optimal transport joint distribution. This is used in [Tong et al. 2023a] and [Pooladian et al. 2023] as "OT-CFM" and "Multisample FM with Batch OT", respectively.

- TargetConditionalFlowMatcher: $z = x_1$, $q(z) = q(x_1)$, as defined in Lipman et al. 2023. It learns a flow from a standard normal Gaussian to data using conditional flows that optimally transport the Gaussian to each datapoint (note that this does not make the marginal flow optimal transport).

- SchrodingerBridgeConditionalFlowMatcher: $z = (x_0, x_1)$, $q(z) = \pi_\epsilon(x_0, x_1)$, where $\pi_\epsilon$ is an entropically regularized OT plan, although in practice this is often approximated by a minibatch OT plan (see Tong et al. 2023b). The flow-matching variant of this, where the marginals are equivalent to the Schrödinger bridge marginals, is known as SB-CFM [Tong et al. 2023a]. When the score is also known and the bridge is stochastic, the method is called [SF]2M [Tong et al. 2023b].

- VariancePreservingConditionalFlowMatcher: $z = (x_0, x_1)$, $q(z) = q(x_0) q(x_1)$, but with conditional Gaussian probability paths that preserve variance over time using a trigonometric interpolation, as presented in [Albergo et al. 2023a].
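The variants above differ along two largely independent axes: how a minibatch is paired (the choice of $q(z)$) and which conditional path connects each pair. The NumPy sketch below illustrates one option on each axis, under our own assumptions rather than torchcfm's code: `pair_exact_ot` re-pairs a minibatch with an exact OT plan for squared Euclidean cost (the idea behind OT-CFM), and `vp_path` computes our reading of the trigonometric interpolant $x_t = \cos(\pi t/2)x_0 + \sin(\pi t/2)x_1$ together with its time derivative as the target $u_t$. Both function names are hypothetical. The brute-force permutation search is exact only because, for equal-size batches with uniform weights, some optimal plan is a permutation; it is practical only for tiny batches, and real code would call an assignment or OT solver instead.

```python
import itertools

import numpy as np


# Hypothetical helper for illustration; not part of the torchcfm API.
def pair_exact_ot(x0, x1):
    """Re-pair a minibatch with an exact OT plan for squared Euclidean cost.

    For equal batch sizes and uniform weights, an optimal plan can be taken to be
    a permutation, so searching all permutations is exact. This brute force is
    only feasible for very small batches; use an assignment/OT solver otherwise.
    """
    n = len(x0)
    cost = ((x0[:, None, :] - x1[None, :, :]) ** 2).sum(axis=-1)  # (n, n) pairwise costs
    rows = np.arange(n)
    best = min(itertools.permutations(range(n)), key=lambda p: cost[rows, list(p)].sum())
    return x0, x1[list(best)]


# Hypothetical helper for illustration; not part of the torchcfm API.
def vp_path(x0, x1, t):
    """Trigonometric interpolant x_t and its time derivative u_t, for t of shape (B,)."""
    t = t[:, None]
    a = np.cos(0.5 * np.pi * t)
    b = np.sin(0.5 * np.pi * t)
    xt = a * x0 + b * x1
    ut = 0.5 * np.pi * (a * x1 - b * x0)  # d/dt of a * x0 + b * x1
    return xt, ut


# Usage with hypothetical minibatches; the two choices can be combined freely.
rng = np.random.default_rng(0)
x0 = rng.standard_normal((6, 2))
x1 = rng.standard_normal((6, 2)) + np.array([4.0, 0.0])
x0, x1 = pair_exact_ot(x0, x1)  # OT-style coupling; skip this line for independent pairs
t = rng.uniform(size=6)
xt, ut = vp_path(x0, x1, t)
print(xt.shape, ut.shape)
```

Swapping `vp_path` for a straight-line path, or dropping the pairing step, changes only one axis at a time, which mirrors how the list above mixes and matches couplings and paths.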

This repository contains the code to reproduce the main experiments and illustrations of two preprints:

- Improving and generalizing flow-based generative models with minibatch optimal transport. We introduce Optimal Transport Conditional Flow Matching (OT-CFM), a CFM variant that approximates the dynamical formulation of optimal transport (OT). Based on OT theory, OT-CFM leverages the static optimal transport plan as well as the optimal probability paths and vector fields to approximate dynamic OT.

- Simulation-free Schrödinger bridges via score and flow matching. We propose Simulation-Free Score and Flow Matching ([SF]2M). [SF]2M leverages OT-CFM as well as score-based methods to approximate Schrödinger bridges, a stochastic version of optimal transport.
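For the Schrödinger bridge variants, one natural conditional path is a Brownian bridge between $x_0$ and $x_1$: $\mu_t = (1-t)x_0 + t x_1$ and $\sigma_t = \sigma\sqrt{t(1-t)}$. Differentiating $x_t = \mu_t + \sigma_t\epsilon$ gives the flow target $u_t = \frac{1-2t}{2t(1-t)}(x_t - \mu_t) + (x_1 - x_0)$, and the conditional score is $-(x_t - \mu_t)/\sigma_t^2$. The NumPy sketch below computes both; `sb_cfm_targets` is a hypothetical name, and this is our reading of the SB-CFM and [SF]2M regression targets rather than the torchcfm code. The pairs $(x_0, x_1)$ would come from a minibatch OT plan, which is omitted here.

```python
import numpy as np


# Hypothetical helper for illustration; not part of the torchcfm API.
def sb_cfm_targets(x0, x1, sigma, t, eps):
    """Brownian-bridge sample x_t with its flow target u_t and conditional score.

    mu_t = (1 - t) x0 + t x1,  sigma_t = sigma * sqrt(t (1 - t)),  x_t = mu_t + sigma_t * eps
    Requires sigma > 0 and 0 < t < 1 (sigma_t vanishes at the endpoints).
    """
    t = t[:, None]
    mu = (1 - t) * x0 + t * x1
    sigma_t = sigma * np.sqrt(t * (1 - t))
    xt = mu + sigma_t * eps
    # d/dt x_t = (x1 - x0) + (sigma_t' / sigma_t) * (x_t - mu_t)
    ut = (1 - 2 * t) / (2 * t * (1 - t)) * (xt - mu) + (x1 - x0)
    # Score of N(mu_t, sigma_t^2 I) evaluated at x_t
    score = -(xt - mu) / sigma_t**2
    return xt, ut, score


# Usage with hypothetical paired samples (e.g. after minibatch OT pairing).
rng = np.random.default_rng(0)
x0 = rng.standard_normal((128, 2))
x1 = rng.standard_normal((128, 2)) + 4.0
t = rng.uniform(0.01, 0.99, size=128)  # keep away from the endpoints
eps = rng.standard_normal(x0.shape)
xt, ut, score = sb_cfm_targets(x0, x1, sigma=0.5, t=t, eps=eps)
print(xt.shape, ut.shape, score.shape)
```

A flow network would be regressed onto `ut` as in plain CFM; a stochastic method in the spirit of [SF]2M would additionally regress a score network onto `score`.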

If you find this code useful in your research, please cite the following papers:

A. Tong, N. Malkin, G. Huguet, Y. Zhang, J. Rector-Brooks, K. Fatras, G. Wolf, Y. Bengio. Improving and Generalizing Flow-Based Generative Models with Minibatch Optimal Transport, 2023.

@article{tong2024improving,

title={Improving and generalizing flow-based generative models with minibatch optimal transport},

author={Alexander Tong and Kilian FATRAS and Nikolay Malkin and Guillaume Huguet and Yanlei Zhang and Jarrid Rector-Brooks and Guy Wolf and Yoshua Bengio},

journal={Transactions on Machine Learning Research},

issn={2835-8856},

year={2024},

url={https://openreview.net/forum?id=CD9Snc73AW},

note={Expert Certification}

}

A. Tong, N. Malkin, K. Fatras, L. Atanackovic, Y. Zhang, G. Huguet, G. Wolf, Y. Bengio. Simulation-Free Schrödinger Bridges via Score and Flow Matching, 2023.

@article{tong2023simulation,

title={Simulation-Free Schr{\"o}dinger Bridges via Score and Flow Matching},

author={Tong, Alexander and Malkin, Nikolay and Fatras, Kilian and Atanackovic, Lazar and Zhang, Yanlei and Huguet, Guillaume and Wolf, Guy and Bengio, Yoshua},

year={2023},

journal={arXiv preprint 2307.03672}

}

Major Changes:

- Added CIFAR-10 examples with an FID of 3.5

- Added code for the new Simulation-free Score and Flow Matching ([SF]2M) preprint

- Created torchcfm pip-installable package

- Moved the pytorch-lightning implementation and experiments to the runner directory

- Moved notebooks -> examples

- Added image generation implementation in both lightning and a notebook in examples

List of implemented papers:

- Flow Matching for Generative Modeling (Lipman et al. 2023)

- Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow (Liu et al. 2023)

- Building Normalizing Flows with Stochastic Interpolants (Albergo et al. 2023a)

- Action Matching: Learning Stochastic Dynamics From Samples (Neklyudov et al. 2022)

- Concurrent work to our OT-CFM method: Multisample Flow Matching: Straightening Flows with Minibatch Couplings (Pooladian et al. 2023)

- Generating and Imputing Tabular Data via Diffusion and Flow-based Gradient-Boosted Trees (Jolicoeur-Martineau et al.)

- Soon: SE(3)-Stochastic Flow Matching for Protein Backbone Generation (Bose et al.)

Run a simple minimal example here

TorchCFM is now on PyPI! You can install it with:

pip install torchcfm

To use the full library with the different examples, you can install the dependencies:

# clone project

git clone https://github.com/atong01/conditional-flow-matching.git

cd conditional-flow-matching

# [OPTIONAL] create conda environment

conda create -n torchcfm python=3.10

conda activate torchcfm

# install pytorch according to instructions

# https://pytorch.org/get-started/

# install requirements

pip install -r requirements.txt

# install torchcfm

pip install -e .

To run our Jupyter notebooks, use the following commands after installing our package:

# install ipykernel

conda install -c anaconda ipykernel

# install conda env in jupyter notebook

python -m ipykernel install --user --name=torchcfm

# launch our notebooks with the torchcfm kernel

The directory structure looks like this:

│

├── examples <- Jupyter notebooks

│ ├── cifar10 <- CIFAR-10 experiments

│ ├── notebooks <- Diverse examples with notebooks

│

├── runner <- Everything related to the original version (V0) of the library

│

├── torchcfm <- Code base of our Flow Matching methods

│ ├── conditional_flow_matching.py <- CFM classes

│ ├── models <- Model architectures

│ │ ├── models <- Models for 2D examples

│ │ ├── Unet <- Unet models for image examples

│

├── .gitignore <- List of files ignored by git

├── .pre-commit-config.yaml <- Configuration of pre-commit hooks for code formatting

├── pyproject.toml <- Configuration options for testing and linting

├── requirements.txt <- File for installing python dependencies

├── setup.py <- File for installing project as a package

└── README.md

This toolbox has been created and is maintained by

It was initiated from a larger private codebase; as a result, the original commit history, which contains work from other authors of the papers, is not preserved.

Before opening an issue, please verify that:

- The problem still exists on the current main branch.

- Your Python dependencies are updated to recent versions.

Suggestions for improvements are always welcome!

TorchCFM development and maintenance are financially supported by:

Conditional-Flow-Matching is licensed under the MIT License.

MIT License

Copyright (c) 2023 Alexander Tong

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all

copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.