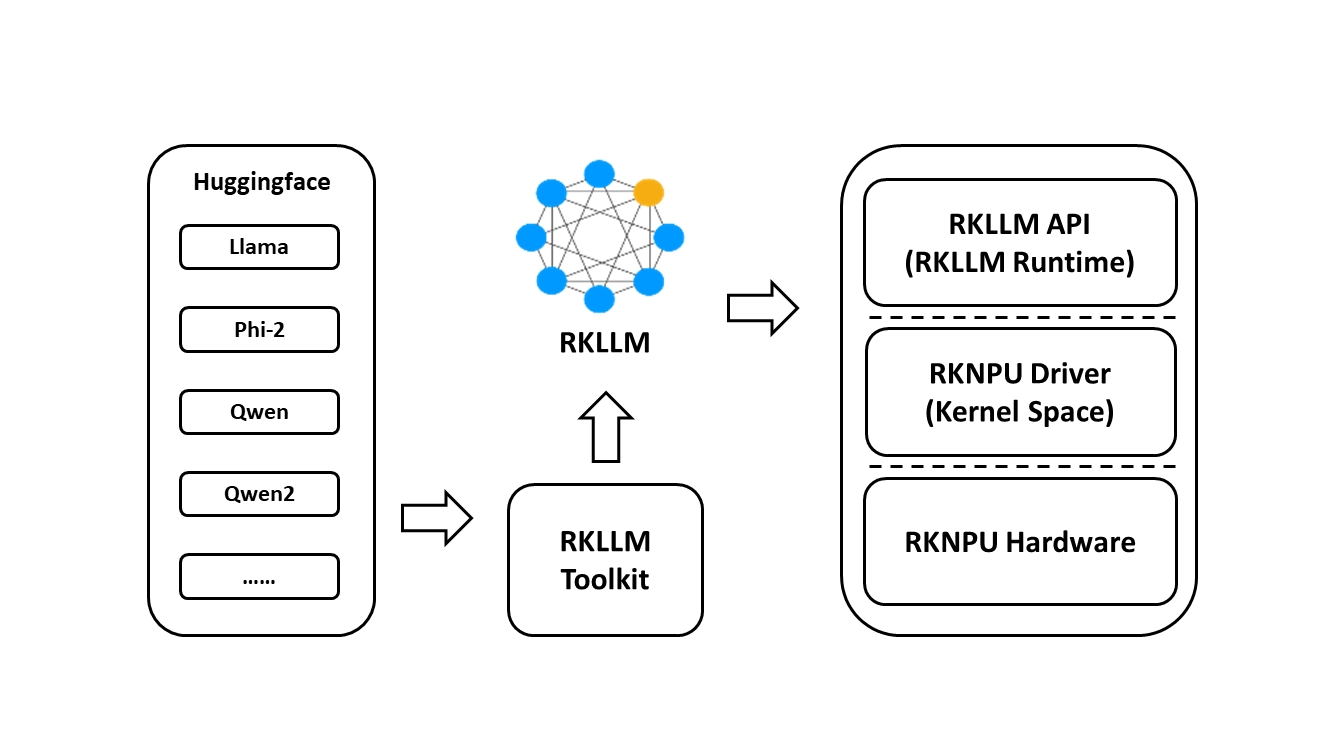

RKLLM software stack can help users to quickly deploy AI models to Rockchip chips. The overall framework is as follows:

To use the RKNPU, users first run the RKLLM-Toolkit on a PC to convert a trained model into the RKLLM format, and then run inference on the development board using the RKLLM C API.

- RKLLM-Toolkit is a software development kit for performing model conversion and quantization on a PC.

- RKLLM Runtime provides C/C++ programming interfaces for Rockchip NPU platforms, helping users deploy RKLLM models and accelerate the implementation of LLM applications.

- The RKNPU kernel driver interacts with the NPU hardware. It is open source and can be found in the Rockchip kernel code.

Supported platforms:

- RK3588 Series

- RK3576 Series

- RK3562 Series

- RV1126B Series

Supported models:

- LLAMA models

- TinyLLAMA models

- Qwen2/Qwen2.5/Qwen3

- Phi2/Phi3

- ChatGLM3-6B

- Gemma2/Gemma3/Gemma3n

- InternLM2 models

- MiniCPM3/MiniCPM4

- TeleChat2

- Qwen2-VL-2B-Instruct/Qwen2-VL-7B-Instruct/Qwen2.5-VL-3B-Instruct

- MiniCPM-V-2_6

- DeepSeek-R1-Distill

- Janus-Pro-1B

- InternVL2-1B/InternVL3-1B

- SmolVLM

- RWKV7

Model performance:

- Benchmark results of common LLMs.

Performance testing methods:

- Run the frequency-setting script from the `scripts` directory on the target platform.

- Execute `export RKLLM_LOG_LEVEL=1` on the device to log model inference performance and memory usage.

- Use the `eval_perf_watch_cpu.sh` script to measure CPU utilization.

- Use the `eval_perf_watch_npu.sh` script to measure NPU utilization.
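`RKLLM_LOG_LEVEL=1` only takes effect in processes that inherit it from their parent. If you drive the on-device demo from a Python script rather than an interactive shell, a minimal sketch of passing the variable into the child process might look like this. It assumes Python 3.7+ is available on the board; `run_with_perf_log` is a hypothetical helper, and the command you pass (for example, a demo binary and its arguments) is up to you. Both stdout and stderr are captured because the sketch does not assume which stream the runtime logs to.

```python
import os
import subprocess
from typing import Sequence


def run_with_perf_log(cmd: Sequence[str]) -> subprocess.CompletedProcess:
    """Run cmd with RKLLM_LOG_LEVEL=1 in its environment and capture its output.

    Hypothetical helper: it only sets the environment variable described above;
    it does not parse the resulting log lines.
    """
    # Copy the current environment so PATH, LD_LIBRARY_PATH, etc. are preserved.
    env = {**os.environ, "RKLLM_LOG_LEVEL": "1"}
    # check=True raises CalledProcessError if the command exits with non-zero status.
    return subprocess.run(
        list(cmd), env=env, capture_output=True, text=True, check=True
    )
```

For example, `run_with_perf_log(["./your_demo", "model.rkllm"])` (placeholder names) returns a `CompletedProcess` whose `stdout` and `stderr` hold whatever the runtime printed.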

- You can download the latest package from RKLLM_SDK (fetch code: `rkllm`).

- You can download converted RKLLM models from rkllm_model_zoo (fetch code: `rkllm`).

- Multimodal deployment demo: multimodal_model_demo

- API usage demo: rkllm_api_demo

- API server demo: rkllm_server_demo


The supported Python versions are:

- Python 3.8

- Python 3.9

- Python 3.10

- Python 3.11

- Python 3.12

Note: Before installing the package in a Python 3.12 environment, run the following command:

`export BUILD_CUDA_EXT=0`
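The version rules above can be checked before installation. The following is a minimal sketch, assuming the supported-version list above is exhaustive, that rejects other interpreter versions and returns the extra environment variable needed on Python 3.12. `install_env` and `SUPPORTED_VERSIONS` are hypothetical names, not part of RKLLM-Toolkit.

```python
import sys
from typing import Dict, Optional, Tuple

# Supported Python versions as listed in this README (assumed exhaustive).
SUPPORTED_VERSIONS = {(3, 8), (3, 9), (3, 10), (3, 11), (3, 12)}


def install_env(version: Optional[Tuple[int, int]] = None) -> Dict[str, str]:
    """Return extra environment variables to set before installing the package.

    `version` defaults to the running interpreter's (major, minor) pair.
    Raises RuntimeError for versions outside SUPPORTED_VERSIONS.
    """
    source = version if version is not None else sys.version_info
    major_minor = tuple(source)[:2]
    if major_minor not in SUPPORTED_VERSIONS:
        raise RuntimeError(
            "Python %d.%d is not supported by RKLLM-Toolkit" % major_minor
        )
    if major_minor == (3, 12):
        # The README asks for BUILD_CUDA_EXT=0 before installing on Python 3.12.
        return {"BUILD_CUDA_EXT": "0"}
    return {}
```

The result can be merged into the environment of a pip call, e.g. `subprocess.run([sys.executable, "-m", "pip", "install", wheel_path], env={**os.environ, **install_env()}, check=True)`, where `wheel_path` is a placeholder for the package you downloaded.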

- On some platforms, you may encounter an error indicating that `libomp.so` cannot be found. To resolve this, locate the library in the corresponding cross-compilation toolchain and copy it to the board's lib directory, at the same level as `librkllmrt.so`.

- RWKV model conversion only supports Python 3.12. Please use `requirements_rwkv7.txt` to set up the pip environment.

- Latest version: v1.2.2
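The `libomp.so` fix described above can be scripted. This is a minimal sketch assuming the cross-compilation toolchain is unpacked on the host and the board's lib directory (the one holding `librkllmrt.so`) is reachable as a local path, for example through a mounted root filesystem or a staging directory you later copy to the board. `find_libomp` and `stage_libomp` are hypothetical helpers. A toolchain may ship several `libomp.so` builds for different architectures, so the sketch returns every match and leaves the choice of the one matching your board to you.

```python
import shutil
from pathlib import Path
from typing import List


def find_libomp(toolchain_root: Path) -> List[Path]:
    """Return every file named libomp.so under the toolchain, sorted for stable output."""
    return sorted(p for p in Path(toolchain_root).rglob("libomp.so") if p.is_file())


def stage_libomp(libomp: Path, lib_dir: Path) -> Path:
    """Copy libomp.so into lib_dir, which must already contain librkllmrt.so."""
    lib_dir = Path(lib_dir)
    # Enforce the "same level as librkllmrt.so" requirement from the note.
    if not (lib_dir / "librkllmrt.so").exists():
        raise FileNotFoundError(f"librkllmrt.so not found in {lib_dir}")
    dst = lib_dir / "libomp.so"
    shutil.copy2(libomp, dst)
    return dst
```

Typical use is to inspect `find_libomp(Path("/path/to/toolchain"))` (placeholder path), pick the build for your board's architecture, and pass it to `stage_libomp` together with the lib directory.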

If you want to deploy other AI models, we also provide an SDK called RKNN-Toolkit2. For details, please refer to:

https://github.com/airockchip/rknn-toolkit2

Changelog for v1.2.2:

- Added support for Gemma3n and InternVL3 models

- Added support for multi-instance inference

- Added support for LongRoPE

- Fixed issues with asynchronous inference interfaces

- Fixed chat template parsing issues

- Optimized inference performance

- Optimized multimodal vision model demo

For older versions, please refer to the CHANGELOG.