Qiyao Wang1,2*, Shiwen Ni1*, Huaren Liu2, Shule Lu2, Guhong Chen1,3, Xi Feng1, Chi Wei1, Qiang Qu1, Hamid Alinejad-Rokny5, Yuan Lin2†, Min Yang1,4†

*Equal Contribution, † Corresponding Authors.

1Shenzhen Key Laboratory for High Performance Data Mining, Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences

2Dalian University of Technology

3Southern University of Science and Technology

4Shenzhen University of Advanced Technology

5The University of New South Wales

- [2024-08-30] Research began.

- [2024-12-13] We have submitted our paper to arXiv, and it will be publicly available soon.

- [2024-12-13] We have released the initial version of AutoPatent on GitHub. The complete code and data will be made publicly available following the paper’s acceptance.

- [2024-12-16] The paper is now publicly available on arXiv.

- [2024-12-20] This work received coverage from Xin Zhi Yuan. We will continue to expand and improve it. [News]

- [2024-12-20] We have released 10 generated patent samples in this GitHub repository. Please see the “example” folder.

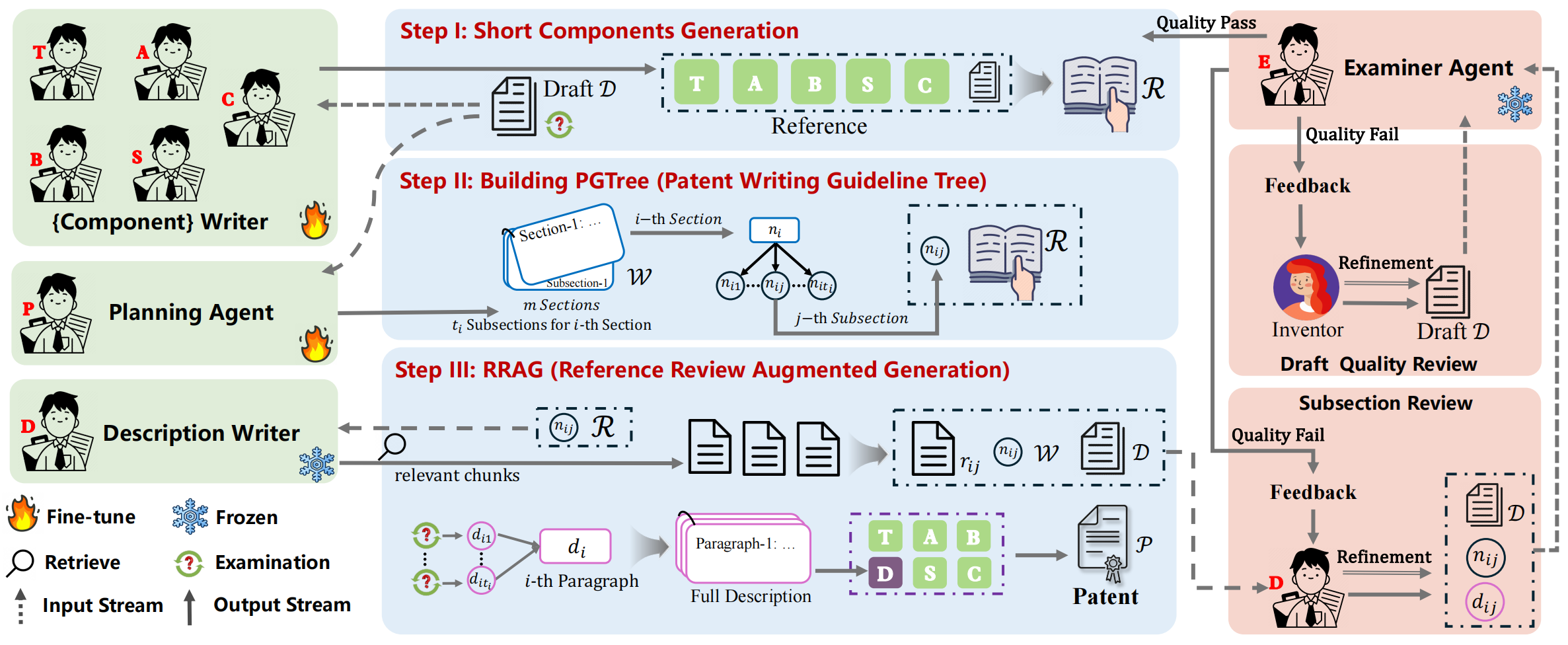

We introduce a novel and practical task known as Draft2Patent, along with its corresponding D2P benchmark, which challenges LLMs to generate full-length patents averaging 17K tokens based on initial drafts. Patents present a significant challenge to LLMs due to their specialized nature, standardized terminology, and extensive length.

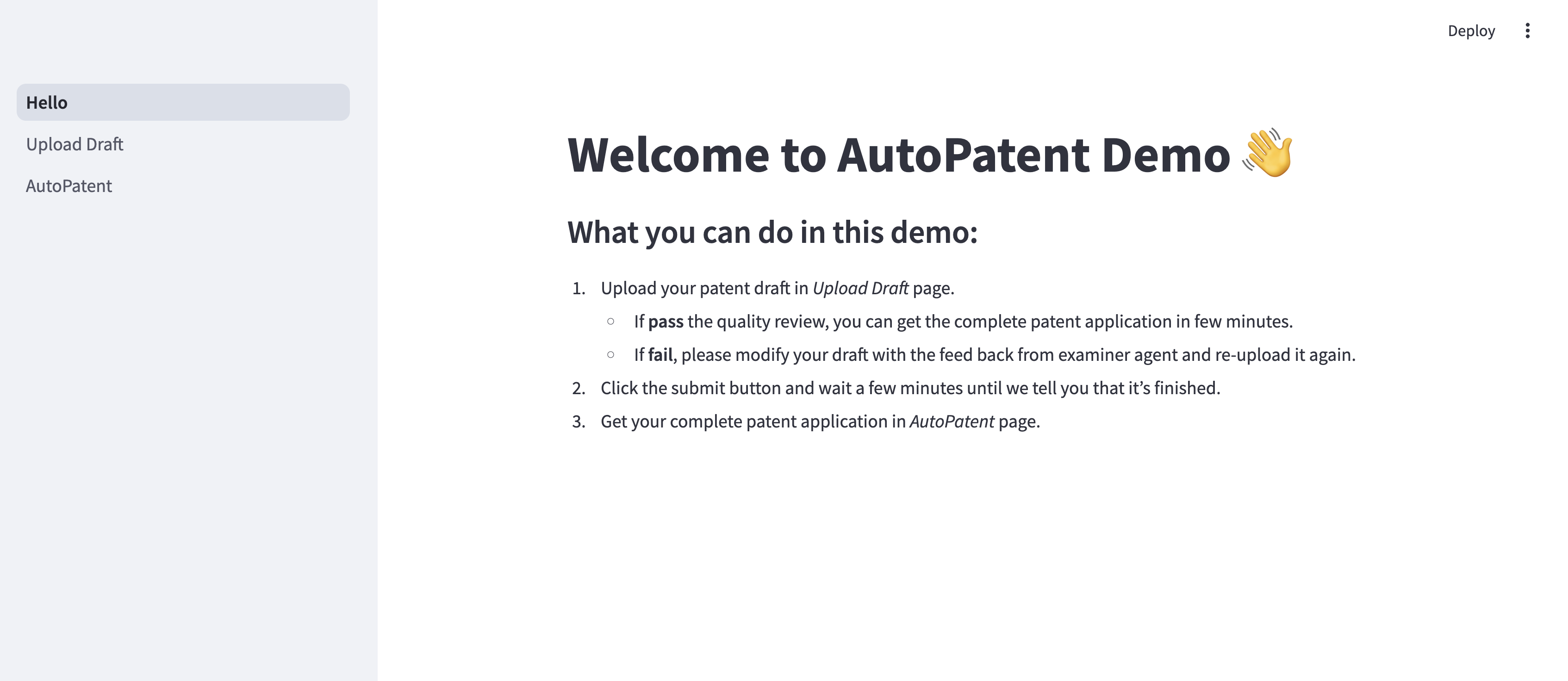

We propose AutoPatent, a multi-agent framework in which an LLM-based planner agent, writer agents, and an examiner agent, supported by PGTree and RRAG, work together to craft lengthy, intricate, and high-quality complete patent documents.
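To make the division of labor concrete, here is a minimal sketch of a generic planner/writer/examiner loop. It is not AutoPatent's implementation: the function names, the flat list of section titles (a simple stand-in for PGTree), the `"OK"` acceptance convention, and the revision budget are all our own assumptions, and RRAG is not modeled. Each agent is just a function from a prompt string to text, so any LLM client can be plugged in.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical agent type: any function mapping a prompt string to generated text.
LLM = Callable[[str], str]


@dataclass
class Section:
    title: str
    text: str = ""
    revisions: int = 0


def plan(planner: LLM, draft: str) -> list[Section]:
    """Planner agent: request one section title per line and build a flat outline."""
    outline = planner(f"List the patent sections for this draft, one per line:\n{draft}")
    return [Section(title=line.strip()) for line in outline.splitlines() if line.strip()]


def write(writer: LLM, draft: str, section: Section, feedback: str = "") -> str:
    """Writer agent: draft, or redraft with feedback, a single section."""
    prompt = f"Write the '{section.title}' section for this draft:\n{draft}"
    if feedback:
        prompt += f"\nAddress this reviewer feedback:\n{feedback}"
    return writer(prompt)


def examine(examiner: LLM, section: Section) -> str:
    """Examiner agent: returns 'OK' to accept the section, otherwise feedback text."""
    return examiner(f"Review the '{section.title}' section:\n{section.text}").strip()


def generate_patent(draft: str, planner: LLM, writer: LLM, examiner: LLM,
                    max_revisions: int = 2) -> str:
    sections = plan(planner, draft)
    for section in sections:
        section.text = write(writer, draft, section)
        # Revise until the examiner accepts or the revision budget is exhausted.
        while section.revisions < max_revisions:
            feedback = examine(examiner, section)
            if feedback == "OK":
                break
            section.text = write(writer, draft, section, feedback)
            section.revisions += 1
    return "\n\n".join(f"{s.title}\n{s.text}" for s in sections)
```

With stub agents such as `planner = lambda p: "Abstract\nClaims"`, the loop writes each section, asks the examiner for a verdict, and rewrites the section with the feedback until it is accepted. Capping revisions per section keeps the cost bounded even when the examiner never accepts.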

We will make the data and code available upon the paper's acceptance.

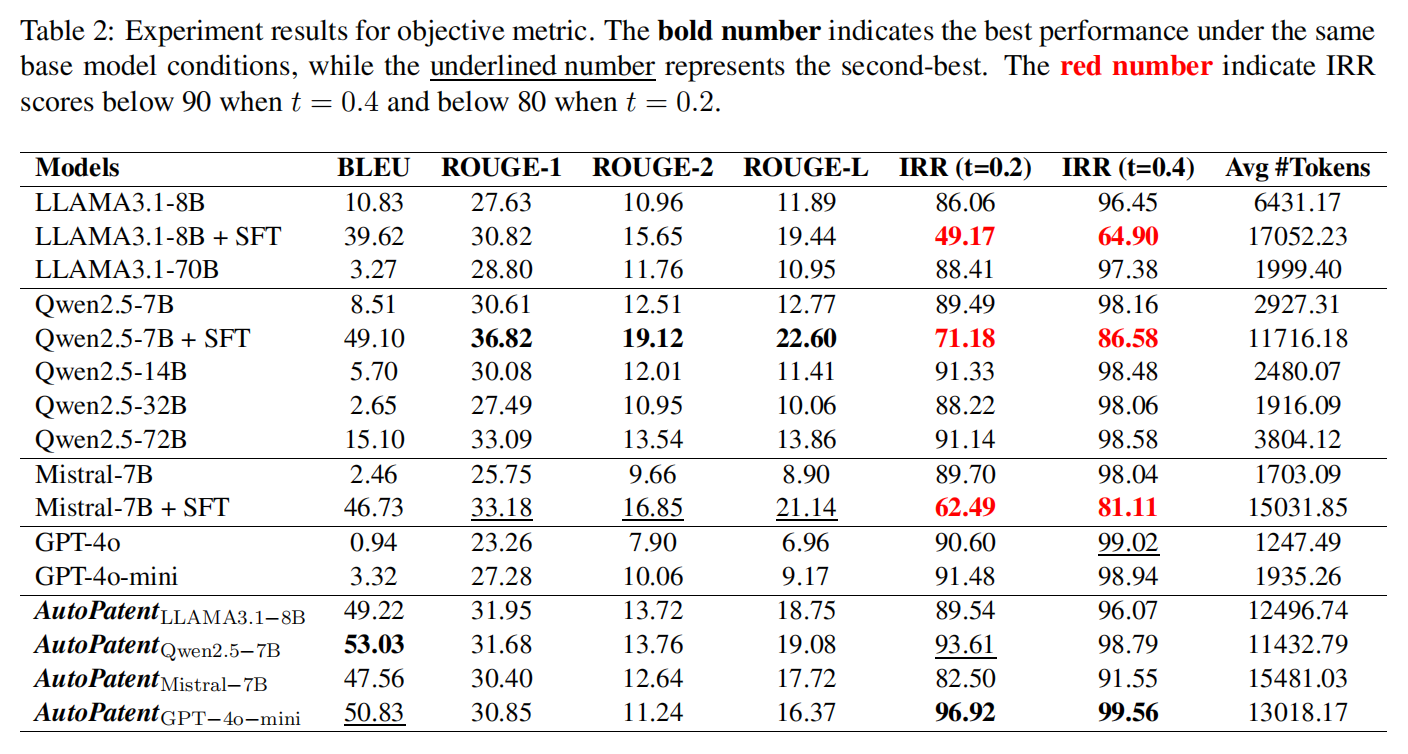

As objective metrics, we use the n-gram-based BLEU score and the F1 scores of ROUGE-1, ROUGE-2, and ROUGE-L.
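As a reference point for the ROUGE metrics, the sketch below computes ROUGE-N and ROUGE-L F1 in plain Python. It is an illustrative simplification, not the evaluation code behind the reported numbers: it tokenizes on whitespace, applies no stemming or lowercasing, and omits BLEU, so its scores will differ from those of standard ROUGE packages.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(overlap: int, cand_total: int, ref_total: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when nothing overlaps."""
    if overlap == 0:
        return 0.0
    precision = overlap / cand_total
    recall = overlap / ref_total
    return 2 * precision * recall / (precision + recall)


def rouge_n_f1(candidate: str, reference: str, n: int) -> float:
    cand, ref = ngrams(candidate.split(), n), ngrams(reference.split(), n)
    overlap = sum((cand & ref).values())  # clipped n-gram matches
    return f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a: list[str], b: list[str]) -> int:
    """Longest common subsequence length via dynamic programming, O(len(a) * len(b))."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b):
            curr.append(prev[j] + 1 if x == y else max(prev[j + 1], curr[j]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    cand, ref = candidate.split(), reference.split()
    return f1(lcs_length(cand, ref), len(cand), len(ref))
```

For example, the candidate "the cat sat on the mat" against the reference "the cat is on the mat" shares 5 of 6 unigrams (ROUGE-1 F1 of 5/6), 3 of 5 bigrams (ROUGE-2 F1 of 0.6), and a 5-token longest common subsequence (ROUGE-L F1 of 5/6).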

We propose a new metric, termed IRR (Inverse Repetition Rate), to measure the degree of sentence repetition within a generated patent.

The IRR is defined as:

Where the time complexity of the IRR metric is

The function
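The exact IRR formula is given in the paper. As a hedged illustration only, the sketch below implements one plausible pairwise formulation of an inverse repetition rate under our own assumptions: sentences are split naively on `.`, `!`, or `?`; two sentences count as a repetition when the Jaccard similarity of their lowercase word sets reaches a threshold `t`; and the score is the number of sentence pairs divided by the number of repeated pairs plus a small smoothing constant `eps`. The splitter, the similarity function, `t`, and `eps` are all assumptions rather than the paper's definitions.

```python
import re
from itertools import combinations


def split_sentences(text: str) -> list[str]:
    """Naive splitter on '.', '!' or '?' followed by whitespace (an assumption)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the lowercase word sets of two sentences."""
    wa, wb = set(re.findall(r"\w+", a.lower())), set(re.findall(r"\w+", b.lower()))
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)


def irr(text: str, t: float = 0.5, eps: float = 1e-6) -> float:
    """Hypothetical inverse repetition rate: higher means less repetition.

    Compares all n * (n - 1) / 2 sentence pairs, so the cost is O(n^2) in the
    number of sentences n. Texts with fewer than two sentences score 0.0.
    """
    sentences = split_sentences(text)
    pairs = list(combinations(sentences, 2))
    repeated = sum(1 for a, b in pairs if jaccard(a, b) >= t)
    return len(pairs) / (repeated + eps)
```

In this formulation, a patent whose sentences are all near-duplicates scores close to 1, while one with few repeated pairs scores much higher; `eps` only prevents division by zero when no pair is repeated.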

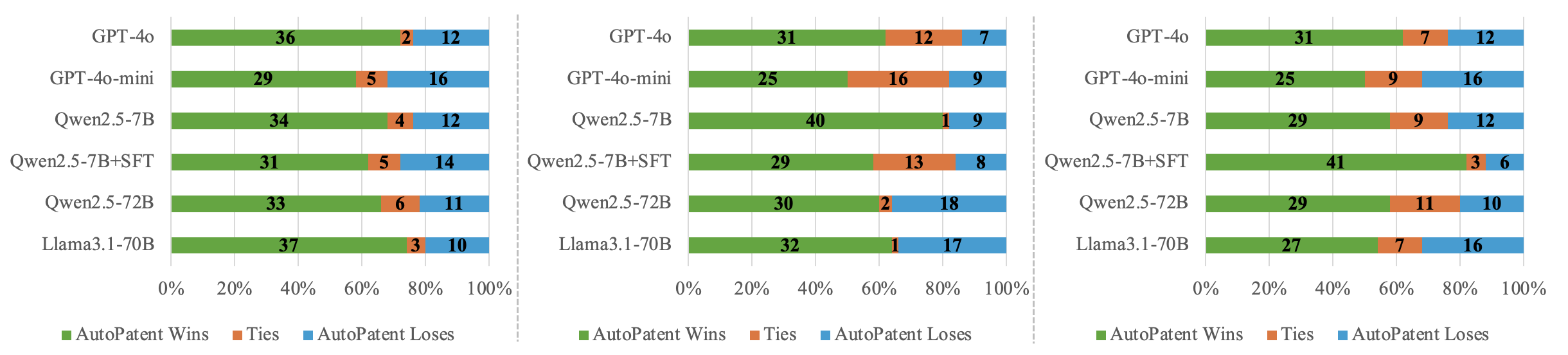

We invite three experts familiar with patent law and patent drafting to evaluate the quality of the generated patents through a single-blind review.

The prompt is provided in Appendix C.1 of the paper.

Models:

- Commercial models
  - GPT-4o
  - GPT-4o-mini
- Open-source models
  - LLAMA3.1 (8B and 70B)
  - Qwen2.5 (7B, 14B, 32B and 72B)
  - Mistral-7B

We utilize 1,500 draft-patent pairs from D2P’s training set to perform fully supervised fine-tuning on LLAMA3.1-8B, Qwen2.5-7B, and Mistral-7B models (each with fewer than 14 billion parameters).

We use LLaMA-Factory to fine-tune these models efficiently.
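LLaMA-Factory can read supervised fine-tuning data in the Alpaca format (a JSON list of records with `instruction`, `input`, and `output` fields) once the file is registered in its `dataset_info.json`. The sketch below converts draft-patent pairs into that format. The dataset name `d2p_train`, the instruction wording, and the `draft`/`patent` keys of the input pairs are hypothetical and not taken from the D2P release; LLaMA-Factory's data documentation remains the authority on the current schema.

```python
import json
from pathlib import Path

# Hypothetical instruction text; not the prompt actually used for D2P.
INSTRUCTION = "Write a complete patent document based on the following draft."


def to_alpaca(pairs: list[dict]) -> list[dict]:
    """Map {'draft', 'patent'} pairs (hypothetical keys) to Alpaca-style records."""
    return [
        {"instruction": INSTRUCTION, "input": p["draft"], "output": p["patent"]}
        for p in pairs
    ]


def write_dataset(pairs: list[dict], data_dir: Path, name: str = "d2p_train") -> Path:
    """Write the records and register them in dataset_info.json under `name`."""
    data_dir.mkdir(parents=True, exist_ok=True)
    data_file = data_dir / f"{name}.json"
    data_file.write_text(json.dumps(to_alpaca(pairs), ensure_ascii=False, indent=2),
                         encoding="utf-8")

    # Merge into an existing dataset_info.json instead of overwriting other entries.
    info_file = data_dir / "dataset_info.json"
    info = json.loads(info_file.read_text(encoding="utf-8")) if info_file.exists() else {}
    info[name] = {
        "file_name": data_file.name,
        "columns": {"prompt": "instruction", "query": "input", "response": "output"},
    }
    info_file.write_text(json.dumps(info, ensure_ascii=False, indent=2), encoding="utf-8")
    return data_file
```

Putting the draft in `input` and a fixed task description in `instruction` keeps every record's prompt consistent, so the model learns the draft-to-patent mapping rather than variations in wording.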

We have released the first demo video of AutoPatent on our website.

A publicly accessible and customizable demo will be available upon the paper's acceptance.

If you find this repository helpful, please consider citing the following paper:

@article{wang2024autopatent,

title={AutoPatent: A Multi-Agent Framework for Automatic Patent Generation},

author={Qiyao Wang and Shiwen Ni and Huaren Liu and Shule Lu and Guhong Chen and Xi Feng and Chi Wei and Qiang Qu and Hamid Alinejad-Rokny and Yuan Lin and Min Yang},

year={2024},

eprint={2412.09796},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2412.09796},

}

If you have any questions, feel free to contact us at wangqiyao@mail.dlut.edu.cn or sw.ni@siat.ac.cn.