This toolkit is used to evaluate general thermal infrared (TIR) trackers on the TIR object tracking benchmark, LSOTB-TIR, which consists of a large-scale training dataset and an evaluation dataset with a total of 1,400 TIR image sequences and more than 600K frames. To evaluate a TIR tracker on different attributes, we define 4 scenario attributes and 12 challenge attributes in the evaluation dataset. By releasing LSOTB-TIR, we encourage the community to develop deep learning based TIR trackers and evaluate them fairly and comprehensively.

Paper, Supplementary materials

- 2020-08, Our paper was accepted by the ACM Multimedia Conference 2020.

- 2020-11, We updated the evaluation dataset to add a test sequence, 'cat_D_001', that was missing.

- 2022-10, We provide an 'LSOTB-TIR.json' file on Baidu or TeraBox for evaluation with the pysot toolkit.

- 2023-01, Our extended paper was accepted by TNNLS: paper, evaluation dataset.

- Large-scale: 1400 TIR sequences, 600K+ frames, 730K+ bounding boxes.

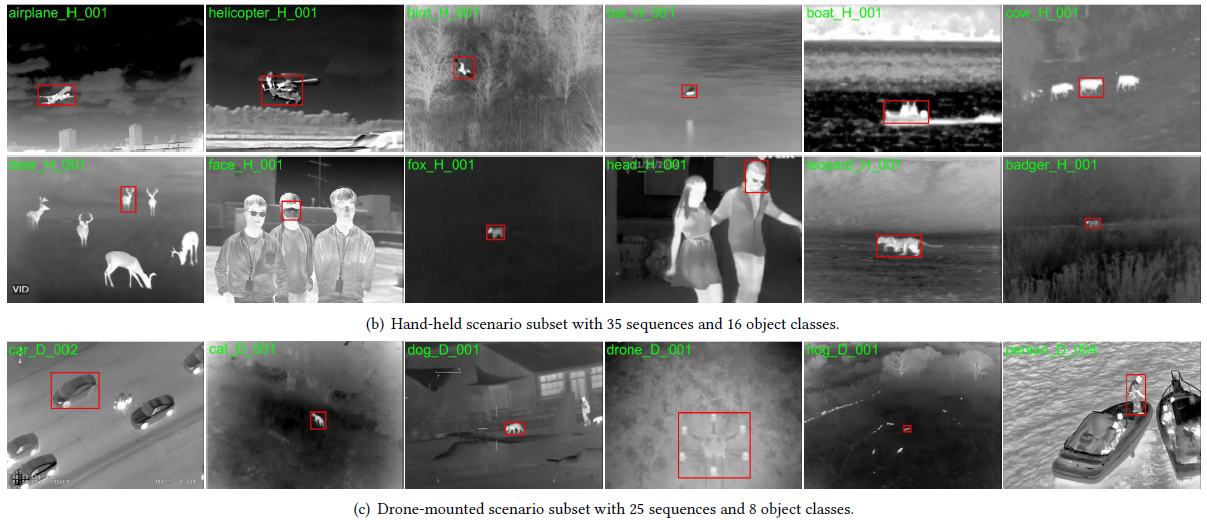

- High-diversity: 12 challenges, 4 scenarios, 47 object classes.

- Contains both training and evaluation datasets.

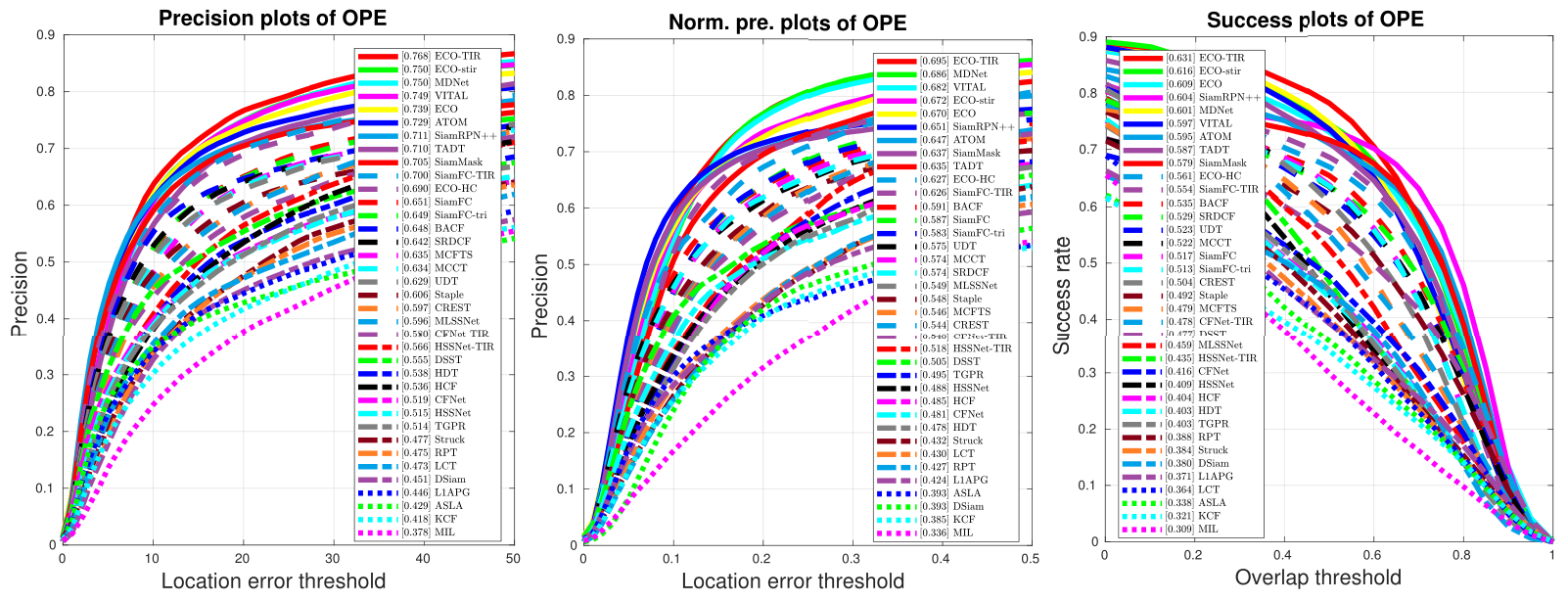

- Provides evaluation results for 30+ trackers.

- Provides short-term and long-term TIR tracking evaluation.

- If you are not in China, download the dataset and the raw evaluation results of 30+ trackers from TeraBox.

- If you are in China, download the dataset and the raw evaluation results of 30+ trackers from Baidu Pan using the password: dr3i.

- Download the evaluation dataset of the TNNLS version from Baidu Pan or TeraBox and the corresponding raw results from here.

- Download the evaluation dataset and put it into the `sequences` folder.

- Download the evaluation raw results and put them into the `results` folder.

- Run `run_evaluation.m` and `run_speed.m` to draw the result plots.

- Configure `configTrackers.m` and then use `main_running_one.m` to run your own tracker on the benchmark.
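Before launching the MATLAB scripts, it can help to confirm that the folders and scripts named in the setup steps above are in place. The following is a minimal Python sketch, not part of the toolkit: the helper name `check_layout` is hypothetical, and it assumes that the `sequences` and `results` folders and the four `.m` scripts all sit directly under the toolkit root.

```python
from pathlib import Path

# Folder and script names come from the setup steps; their location directly
# under the toolkit root is an assumption made for this sketch.
REQUIRED_DIRS = ("sequences", "results")
REQUIRED_SCRIPTS = (
    "run_evaluation.m",
    "run_speed.m",
    "configTrackers.m",
    "main_running_one.m",
)


def check_layout(toolkit_root):
    """Return a list of problems; an empty list means the layout looks ready."""
    root = Path(toolkit_root)
    problems = []
    for name in REQUIRED_DIRS:
        folder = root / name
        if not folder.is_dir():
            problems.append(f"missing folder: {name}")
        elif not any(folder.iterdir()):
            # The folder exists but nothing has been downloaded into it yet.
            problems.append(f"empty folder: {name}")
    for name in REQUIRED_SCRIPTS:
        if not (root / name).is_file():
            problems.append(f"missing script: {name}")
    return problems


if __name__ == "__main__":
    # Check the current directory, assumed here to be the toolkit root.
    for problem in check_layout("."):
        print(problem)
```

Run from the toolkit root, the script prints one line per missing or empty item and prints nothing when everything is found. It only checks that files exist; it does not validate the contents of the dataset or the raw results.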

- CMD-DiMP. Sun J, et al. Unsupervised Cross-Modal Distillation for Thermal Infrared Tracking, ACM MM, 2021. [Github]

- MMNet. Liu Q, et al. Multi-task driven feature model for thermal infrared tracking, AAAI, 2020. [Github]

- ECO-stir. Zhang L, et al. Synthetic data generation for end-to-end thermal infrared tracking, TIP, 2019. [Github]

- MLSSNet. Liu Q, et al, Learning Deep Multi-Level Similarity for Thermal Infrared Object Tracking, TMM, 2020. [Github]

- HSSNet. Li X, et al, Hierarchical spatial-aware Siamese network for thermal infrared object tracking, KBS, 2019. [Github]

- MCFTS. Liu Q, et al, Deep convolutional neural networks for thermal infrared object tracking, KBS, 2017. [Github]

- ECO. Danelljan M, et al, ECO: efficient convolution operators for tracking, CVPR, 2017. [Github]

- DeepSTRCF. Li F, et al, Learning spatial-temporal regularized correlation filters for visual tracking, CVPR, 2018. [Github]

- MDNet. Nam H, et al, Learning multi-domain convolutional neural networks for visual tracking, CVPR, 2016. [Github]

- SRDCF. Danelljan M, et al, Learning spatially regularized correlation filters for visual tracking, ICCV, 2015. [Project]

- VITAL. Song Y, et al., Vital: Visual tracking via adversarial learning, CVPR, 2018. [Github]

- TADT. Li X, et al, Target-aware deep tracking, CVPR, 2019. [Github]

- MCCT. Wang N, et al, Multi-cue correlation filters for robust visual tracking, CVPR, 2018. [Github]

- Staple. Bertinetto, L, et al, Staple: Complementary learners for real-time tracking, CVPR, 2016. [Github]

- DSST. Danelljan M, et al, Accurate scale estimation for robust visual tracking, BMVC, 2014. [Github]

- UDT. Wang N, et al, Unsupervised deep tracking, CVPR, 2019. [Github]

- CREST. Song Y, et al, Crest: Convolutional residual learning for visual tracking, ICCV, 2017. [Github]

- SiamFC. Bertinetto, L, et al, Fully-Convolutional Siamese Networks for Object Tracking, ECCVW, 2016. [Github]

- SiamFC-tri. Dong X, et al, Triplet loss in Siamese network for object tracking, ECCV, 2018. [Github]

- HDT. Qi Y, et al, Hedged deep tracking, CVPR, 2016. [Project]

- CFNet. Valmadre, J, et al, End-to-end representation learning for correlation filter based tracking, CVPR, 2017. [Github]

- HCF. Ma, C, et al, Hierarchical convolutional features for visual tracking, ICCV, 2015. [Github]

- L1APG. Bao, C, et al, Real time robust L1 tracker using accelerated proximal gradient approach, CVPR, 2012. [Project]

- SVM. Wang N, et al, Understanding and diagnosing visual tracking systems, ICCV, 2015. [Project]

- KCF. Henriques, J, et al, High-speed tracking with kernelized correlation filters, TPAMI, 2015. [Project]

- DSiam. Guo, Q, et al, Learning dynamic siamese network for visual object tracking, ICCV, 2017. [Github]

Feedback and comments are welcome! Feel free to contact us via liuqiao.hit@gmail.com or liuqiao@stu.hit.edu.cn