By Shanshan Zhong, Zhongzhan Huang, Daifeng Li, Wushao Wen, Jinghui Qin, and Liang Lin

This repository is the implementation of "Mirror Gradient: Towards Robust Multimodal Recommender Systems via Exploring Flat Local Minima" [paper]. Our paper has been accepted at the 2024 ACM Web Conference (WWW 2024).

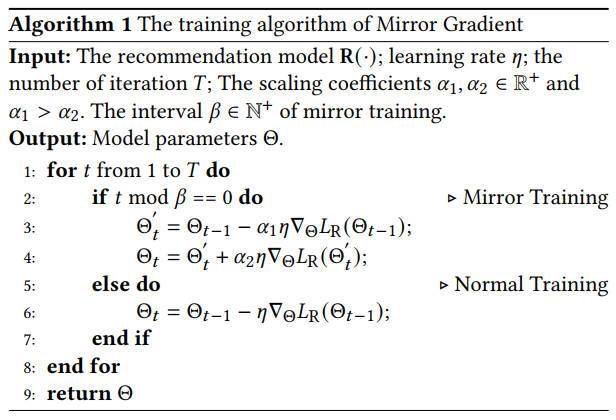

Multimodal recommender systems use several types of information, such as text and images, to model user preferences and item features, helping users discover items aligned with their interests. However, multimodal inputs also magnify certain risks, such as inherent noise risk and information adjustment risk. To address this, we propose Mirror Gradient (MG), a concise gradient strategy that inverts the gradient signs at appropriate points during training, so that multimodal recommendation models approach flat local minima more easily than models trained normally.

As a general gradient method for recommender systems, MG can be applied to the training of various recommendation models. The code in this repository is based on MMRec, a modern multimodal recommendation toolbox, and the core code of MG is in src/common/trainer.py. We have also integrated MG into MMRec.
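To make the idea of inverting gradient signs concrete, here is a minimal toy sketch in plain Python. It is not the code in src/common/trainer.py: the schedule (one sign-inverted step every `interval` steps), the scaling factor `alpha`, and the one-dimensional loss are all assumptions chosen only for illustration.

```python
def loss_gradient(w):
    """Gradient of the toy loss (w - 3)^2; stands in for a model's backward pass."""
    return 2.0 * (w - 3.0)


def train(steps=200, lr=0.05, use_mg=True, interval=3, alpha=0.5):
    """Gradient descent on the toy loss, optionally with sign-inverted steps.

    `interval` and `alpha` are hypothetical hyperparameters for this sketch,
    not names taken from the repository.
    """
    w = 0.0
    for step in range(1, steps + 1):
        g = loss_gradient(w)
        if use_mg and step % interval == 0:
            # Mirror step (assumed schedule): move along +g instead of -g,
            # scaled down so that the overall trajectory still descends.
            w += lr * alpha * g
        else:
            # Normal step: plain gradient descent.
            w -= lr * g
    return w


if __name__ == "__main__":
    print("with MG:   ", train(use_mg=True))
    print("without MG:", train(use_mg=False))
```

In this toy setting both runs end up near the minimum at w = 3, because the scaled-down inverted steps are outweighed by the normal descent steps. The sketch only illustrates the mechanics; it says nothing about flatness, which requires a real model and loss surface.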

(1) Clone the code.

git clone https://github.com/Qrange-group/Mirror-Gradient

cd Mirror-Gradient

(2) Prepare the environment.

conda env create -f environment.yaml

conda activate mg

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118

pip install --no-index torch_cluster -f https://pytorch-geometric.com/whl/torch-2.0.1+cu118.html

pip install --no-index torch-scatter -f https://pytorch-geometric.com/whl/torch-2.0.1+cu118.html

pip install --no-index torch_sparse -f https://pytorch-geometric.com/whl/torch-2.0.1+cu118.html

pip install --no-index torch_spline_conv -f https://pytorch-geometric.com/whl/torch-2.0.1+cu118.html

pip install torch_geometric

(3) Prepare datasets.

See data. If you want to train models on other Amazon datasets, please see the processing tutorial.
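Before training, it can help to confirm that the packages installed in step (2) are importable in the active environment. The following is a small optional check, not part of the repository; the module list simply mirrors the pip commands above.

```python
import importlib.util

# Import names corresponding to the packages installed in step (2).
REQUIRED_MODULES = [
    "torch",
    "torchvision",
    "torchaudio",
    "torch_cluster",
    "torch_scatter",
    "torch_sparse",
    "torch_spline_conv",
    "torch_geometric",
]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

This only checks that each module can be located; it does not verify that the installed versions match the CUDA build.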

(4) Train the model with MG.

cd src

python main.py

You can change the model and dataset with command-line arguments:

python main.py --model DRAGON --dataset sports

python main.py --model LayerGCN --dataset sports

If you don't want to use MG during training, pass --not_mg. For example,

python main.py --not_mg

(5) Analyze logs.

The training logs are saved in src/logs, and the log file names of models trained with MG contain the tag mg. Run the following command to collect the best result of each log file into src/analysis.txt.

python analysis.py

- Models: BM3, DRAGON, DualGNN, FREEDOM, GRCN, ItemKNNCBF, LATTICE, LayerGCN, MGCN, MMGCN, MVGAE, SELFCFED_LGN, SLMRec, VBPR. See the models' details in src/models. You can also add other recommendation models to src/models, following the format of itemknncbf.py.

- Datasets: Amazon datasets. You can download the processed datasets baby, sports, clothing, and elec from Google Drive. If you want to train models on other Amazon datasets, please see the processing tutorial.
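To compare several of the listed models and datasets with and without MG, the commands from step (4) can be generated in a loop. The helper below is a sketch that is not part of the repository; it uses only the --model, --dataset, and --not_mg flags shown above and assumes they can be combined in one invocation.

```python
import itertools
import subprocess


def build_commands(models, datasets, include_baseline=True):
    """Build one MG run (and optionally one --not_mg run) per model/dataset pair."""
    commands = []
    for model, dataset in itertools.product(models, datasets):
        base = ["python", "main.py", "--model", model, "--dataset", dataset]
        commands.append(base)
        if include_baseline:
            # Baseline run with MG disabled, for a side-by-side comparison.
            commands.append(base + ["--not_mg"])
    return commands


def run_all(commands, cwd="src"):
    """Run each command sequentially from the src directory; stop on the first failure."""
    for command in commands:
        subprocess.run(command, cwd=cwd, check=True)


if __name__ == "__main__":
    # Print the planned commands; call run_all(...) from the repository root to execute them.
    for command in build_commands(["DRAGON", "LayerGCN"], ["sports", "baby"]):
        print(" ".join(command))
```

Printing the commands first makes it easy to review the sweep before launching long training runs.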

For the evaluation of recommendation performance, we pay attention to top-5 accuracy as recommendations in the top positions of rank lists are more important, and adopt four widely used metrics including recall (REC), precision (PREC), mean average precision (MAP), and normalized discounted cumulative gain (NDCG).
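As a reference for how these four metrics behave, here is a minimal sketch that computes them for a single user's top-5 list with binary relevance. Normalization conventions differ between toolkits (in particular for MAP), so this is one common formulation and not necessarily the exact one used in MMRec; the function name and inputs are hypothetical.

```python
import math


def topk_metrics(ranked_items, relevant_items, k=5):
    """Compute REC, PREC, MAP, and NDCG at cutoff k for one user (binary relevance)."""
    relevant = set(relevant_items)
    hits = [1 if item in relevant else 0 for item in ranked_items[:k]]
    num_hits = sum(hits)

    recall = num_hits / len(relevant) if relevant else 0.0
    precision = num_hits / k

    # Average precision: mean of precision values at each hit position,
    # normalized here by min(k, number of relevant items).
    running_hits = 0
    precision_sum = 0.0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            running_hits += 1
            precision_sum += running_hits / rank
    max_hits = min(k, len(relevant))
    average_precision = precision_sum / max_hits if max_hits else 0.0

    # NDCG: discounted gain of the actual ranking over that of an ideal ranking.
    dcg = sum(hit / math.log2(rank + 1) for rank, hit in enumerate(hits, start=1))
    idcg = sum(1.0 / math.log2(rank + 1) for rank in range(1, max_hits + 1))
    ndcg = dcg / idcg if idcg else 0.0

    return {"REC": recall, "PREC": precision, "MAP": average_precision, "NDCG": ndcg}


if __name__ == "__main__":
    print(topk_metrics(["a", "b", "c", "d", "e"], {"a", "c", "z"}))
```

Dataset-level scores are then typically obtained by averaging these per-user values over all evaluated users.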

You can resume from a checkpoint with:

python main.py --model DRAGON --dataset sports --resume DRAGON-sports.pth

| Model | REC | PREC | MAP | NDCG |
|---|---|---|---|---|
| VBPR | 0.0353 | 0.0079 | 0.0189 | 0.0235 |
| VBPR + MG | 0.0375 | 0.0084 | 0.0203 | 0.0251 |
| MMGCN | 0.0216 | 0.0049 | 0.0114 | 0.0143 |
| MMGCN + MG | 0.0241 | 0.0054 | 0.0126 | 0.0158 |
| GRCN | 0.0360 | 0.0080 | 0.0196 | 0.0241 |
| GRCN + MG | 0.0383 | 0.0086 | 0.0207 | 0.0256 |
| DualGNN | 0.0374 | 0.0084 | 0.0206 | 0.0253 |
| DualGNN + MG | 0.0387 | 0.0086 | 0.0212 | 0.0261 |
| SLMRec | 0.0429 | 0.0095 | 0.0233 | 0.0288 |
| SLMRec + MG | 0.0449 | 0.0099 | 0.0242 | 0.0299 |
| BM3 | 0.0353 | 0.0078 | 0.0194 | 0.0238 |
| BM3 + MG | 0.0386 | 0.0086 | 0.0210 | 0.0259 |
| FREEDOM | 0.0446 | 0.0098 | 0.0232 | 0.0291 |
| FREEDOM + MG | 0.0466 | 0.0102 | 0.0242 | 0.0303 |
| DRAGON | 0.0449 | 0.0098 | 0.0239 | 0.0296 |
| DRAGON + MG | 0.0465 | 0.0102 | 0.0248 | 0.0307 |

| Model | REC | PREC | MAP | NDCG |
|---|---|---|---|---|
| VBPR | 0.0182 | 0.0042 | 0.0098 | 0.0122 |
| VBPR + MG | 0.0203 | 0.0046 | 0.0110 | 0.0136 |
| MMGCN | 0.0140 | 0.0033 | 0.0075 | 0.0094 |
| MMGCN + MG | 0.0157 | 0.0036 | 0.0084 | 0.0106 |
| GRCN | 0.0226 | 0.0051 | 0.0126 | 0.0155 |
| GRCN + MG | 0.0250 | 0.0057 | 0.0139 | 0.0171 |
| DualGNN | 0.0238 | 0.0054 | 0.0132 | 0.0162 |
| DualGNN + MG | 0.0249 | 0.0056 | 0.0139 | 0.0170 |
| BM3 | 0.0280 | 0.0062 | 0.0157 | 0.0192 |
| BM3 + MG | 0.0285 | 0.0063 | 0.0159 | 0.0195 |
| FREEDOM | 0.0252 | 0.0056 | 0.0139 | 0.0171 |
| FREEDOM + MG | 0.0260 | 0.0058 | 0.0144 | 0.0176 |
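The absolute differences in these tables are small, so it can be easier to read them as relative gains. The snippet below is a small worked calculation using three REC pairs copied from the first results table above; the helper name is hypothetical.

```python
def relative_gain(baseline, with_mg):
    """Relative improvement of the MG run over the baseline, in percent."""
    return 100.0 * (with_mg - baseline) / baseline


# (baseline REC, REC with MG), taken from the first results table.
REC_PAIRS = {
    "VBPR": (0.0353, 0.0375),
    "BM3": (0.0353, 0.0386),
    "DRAGON": (0.0449, 0.0465),
}

if __name__ == "__main__":
    for model, (baseline, with_mg) in REC_PAIRS.items():
        print(f"{model}: {relative_gain(baseline, with_mg):+.2f}% REC")
```

The same function can be applied to any other row pair or metric column in either table.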

@article{zhong2024mirror,
  title={Mirror Gradient: Towards Robust Multimodal Recommender Systems via Exploring Flat Local Minima},
  author={Zhong, Shanshan and Huang, Zhongzhan and Li, Daifeng and Wen, Wushao and Qin, Jinghui and Lin, Liang},
  journal={arXiv preprint arXiv:2402.11262},
  year={2024}
}

Many thanks to enoche for MMRec, the multimodal recommendation toolbox on which this code is based.