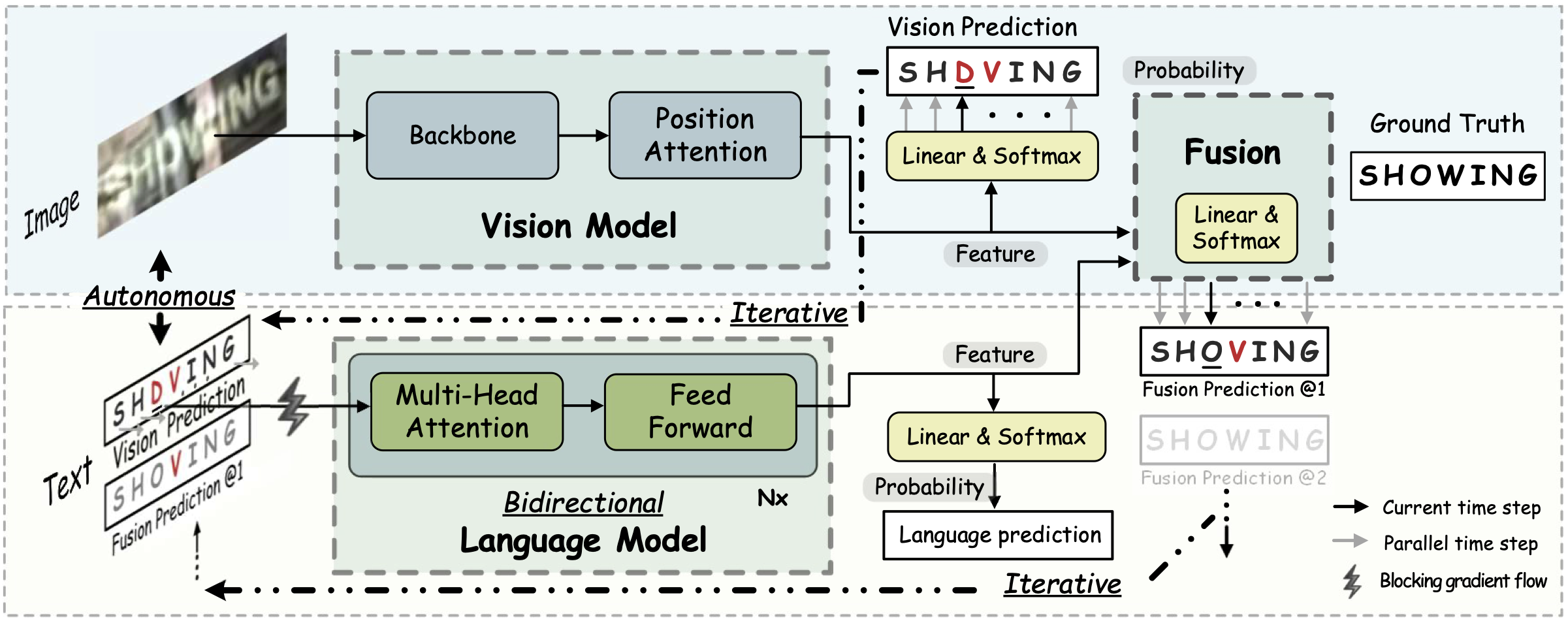

Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition

The official code of ABINet (CVPR 2021, Oral).

ABINet recognizes text in the wild with a vision model and an explicit language model, which are trained end-to-end. The language model (BCN) learns a bidirectional language representation by simulating a cloze test, and additionally applies an iterative correction strategy.
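To make the cloze-test idea concrete, the sketch below builds an attention mask that hides each character position from itself, so that a character would have to be predicted from its left and right context only. This is an illustrative assumption about what such a mask could look like, not code taken from this repository; `cloze_attention_mask` is a hypothetical helper name.

```python
import math


def cloze_attention_mask(length):
    """Return a length x length additive attention mask.

    Entry [i][j] is -inf when i == j (position i may not attend to itself)
    and 0.0 otherwise (position i may attend to every other position).
    """
    return [[-math.inf if i == j else 0.0 for j in range(length)]
            for i in range(length)]


if __name__ == "__main__":
    # Print the mask for a 4-character word, one row per query position.
    for row in cloze_attention_mask(4):
        print(row)
```

Adding such a mask to the attention scores before the softmax drives the weight of the blocked position to zero, which is one common way to realize a "fill in the blank" objective in both directions at once.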

- We provide a pre-built Docker image, built with the Dockerfile at `docker/Dockerfile`.

- Running in Docker:

      $ git clone git@github.com:FangShancheng/ABINet.git
      $ docker run --gpus all --rm -ti --ipc=host -v $(pwd)/ABINet:/app fangshancheng/fastai:torch1.1 /bin/bash

- (Untested) Or install the dependencies directly:

      pip install -r requirements.txt

- Training datasets
  - MJSynth (MJ):
    - Use `tools/create_lmdb_dataset.py` to convert images into an LMDB dataset
    - LMDB dataset: BaiduNetdisk (passwd: n23k)
  - SynthText (ST):
    - Use `tools/crop_by_word_bb.py` to crop images from the original SynthText dataset, then convert the images into an LMDB dataset with `tools/create_lmdb_dataset.py`
    - LMDB dataset: BaiduNetdisk (passwd: n23k)
  - WikiText103, which is only used for pre-training language models:
    - Use `notebooks/prepare_wikitext103.ipynb` to convert the text into CSV format
    - CSV dataset: BaiduNetdisk (passwd: dk01)

- Evaluation datasets. The LMDB datasets can be downloaded from BaiduNetdisk (passwd: 1dbv) or GoogleDrive.
  - ICDAR 2013 (IC13)
  - ICDAR 2015 (IC15)
  - IIIT5K Words (IIIT)
  - Street View Text (SVT)
  - Street View Text-Perspective (SVTP)
  - CUTE80 (CUTE)

- The structure of the `data` directory is:

      data
      ├── charset_36.txt
      ├── evaluation
      │   ├── CUTE80
      │   ├── IC13_857
      │   ├── IC15_1811
      │   ├── IIIT5k_3000
      │   ├── SVT
      │   └── SVTP
      ├── training
      │   ├── MJ
      │   │   ├── MJ_test
      │   │   ├── MJ_train
      │   │   └── MJ_valid
      │   └── ST
      ├── WikiText-103.csv
      └── WikiText-103_eval_d1.csv
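Before starting a long training run, it can help to confirm that the data directory matches the layout above. The following is a minimal sketch that only checks for the presence of each entry; `missing_data_paths` is a hypothetical helper and not part of this repository, and it assumes the data root is `data` relative to the working directory.

```python
from pathlib import Path

# Entries taken from the documented directory tree, relative to the data root.
EXPECTED_PATHS = [
    "charset_36.txt",
    "evaluation/CUTE80",
    "evaluation/IC13_857",
    "evaluation/IC15_1811",
    "evaluation/IIIT5k_3000",
    "evaluation/SVT",
    "evaluation/SVTP",
    "training/MJ/MJ_test",
    "training/MJ/MJ_train",
    "training/MJ/MJ_valid",
    "training/ST",
    "WikiText-103.csv",
    "WikiText-103_eval_d1.csv",
]


def missing_data_paths(root="data"):
    """Return the expected entries that do not exist under `root`."""
    root = Path(root)
    return [p for p in EXPECTED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_data_paths("data")
    if missing:
        print("Missing entries:")
        for path in missing:
            print("  " + path)
    else:
        print("Data directory layout looks complete.")
```

The check does not open the LMDB or CSV files, so it catches misplaced or misnamed directories but not corrupted data.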

Get the pretrained models from BaiduNetdisk (passwd: kwck) or GoogleDrive. The performance of the pretrained models is summarized below:

| Model | IC13 | SVT | IIIT | IC15 | SVTP | CUTE | AVG |
|---|---|---|---|---|---|---|---|
| ABINet-SV | 97.1 | 92.7 | 95.2 | 84.0 | 86.7 | 88.5 | 91.4 |
| ABINet-LV | 97.0 | 93.4 | 96.4 | 85.9 | 89.5 | 89.2 | 92.7 |
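The AVG column appears to be a mean weighted by the number of test images per benchmark rather than a plain mean of the six columns. The sketch below reproduces it under that assumption. The sizes for IC13, IC15 and IIIT are read from the directory names `IC13_857`, `IC15_1811` and `IIIT5k_3000`; the sizes for SVT, SVTP and CUTE are the commonly reported ones and are assumptions here.

```python
# Number of test images per benchmark (see the assumptions in the text above).
NUM_SAMPLES = {"IC13": 857, "SVT": 647, "IIIT": 3000,
               "IC15": 1811, "SVTP": 645, "CUTE": 288}

# Per-benchmark accuracies copied from the table.
ACCURACY = {
    "ABINet-SV": {"IC13": 97.1, "SVT": 92.7, "IIIT": 95.2,
                  "IC15": 84.0, "SVTP": 86.7, "CUTE": 88.5},
    "ABINet-LV": {"IC13": 97.0, "SVT": 93.4, "IIIT": 96.4,
                  "IC15": 85.9, "SVTP": 89.5, "CUTE": 89.2},
}


def weighted_average(acc):
    """Average accuracy weighted by the number of samples per benchmark."""
    total = sum(NUM_SAMPLES.values())
    return sum(acc[name] * n for name, n in NUM_SAMPLES.items()) / total


if __name__ == "__main__":
    for model, acc in ACCURACY.items():
        print(model, round(weighted_average(acc), 1))
    # ABINet-SV 91.4
    # ABINet-LV 92.7
```

Because IIIT and IC15 together account for roughly two thirds of the images, gains on those two benchmarks move the average far more than gains on CUTE.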

- Pre-train vision model

      CUDA_VISIBLE_DEVICES=0,1,2,3 python main.py --config=configs/pretrain_vision_model.yaml

- Pre-train language model

      CUDA_VISIBLE_DEVICES=0,1,2,3 python main.py --config=configs/pretrain_language_model.yaml

- Train ABINet

      CUDA_VISIBLE_DEVICES=0,1,2,3 python main.py --config=configs/train_abinet.yaml

Note:

- You can set the `checkpoint` path for the vision and language models separately to load specific pretrained models, or set it to `None` to train from scratch.

Evaluate a trained model with:

    CUDA_VISIBLE_DEVICES=0 python main.py --config=configs/train_abinet.yaml --phase test --image_only

Additional flags:

- `--checkpoint /path/to/checkpoint` sets the path of the model to evaluate
- `--test_root /path/to/dataset` sets the path of the evaluation dataset
- `--model_eval [alignment|vision]` selects which sub-model to evaluate
- `--image_only` disables dumping visualizations of attention masks
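To evaluate one checkpoint on each benchmark in turn, the documented flags can be combined in a small driver script. The sketch below only builds and prints the commands; it assumes that `--test_root` can point at a single benchmark directory under `data/evaluation`, and `build_eval_command` is a hypothetical helper, not part of this repository.

```python
import shlex

# Benchmark directory names from the documented data layout.
EVAL_DATASETS = ["CUTE80", "IC13_857", "IC15_1811",
                 "IIIT5k_3000", "SVT", "SVTP"]


def build_eval_command(dataset, checkpoint,
                       config="configs/train_abinet.yaml",
                       eval_root="data/evaluation"):
    """Build the argument list for evaluating one benchmark."""
    return [
        "python", "main.py",
        f"--config={config}",
        "--phase", "test",
        "--image_only",
        "--checkpoint", checkpoint,
        "--test_root", f"{eval_root}/{dataset}",
    ]


if __name__ == "__main__":
    # Placeholder path; replace it with a real checkpoint file.
    checkpoint = "/path/to/checkpoint"
    for name in EVAL_DATASETS:
        print(shlex.join(build_eval_command(name, checkpoint)))
```

Printing the commands first makes it easy to inspect them; passing each list to `subprocess.run(cmd, check=True)` would then execute them one by one.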

Run the demo on a directory of images with:

    python demo.py --config=configs/train_abinet.yaml --input=figs/test

Additional flags:

- `--config /path/to/config` sets the path of the configuration file
- `--input /path/to/image-directory` sets the path of the image directory or a wildcard path, e.g., `--input='figs/test/*.png'`
- `--checkpoint /path/to/checkpoint` sets the path of the trained model
- `--cuda [-1|0|1|2|3...]` sets the CUDA device id; the default, -1, means CPU
- `--model_eval [alignment|vision]` selects which sub-model to use
- `--image_only` disables dumping visualizations of attention masks
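Since `--input` accepts either a directory or a wildcard path, it may help to see how such an argument could be expanded into a list of image files. This is a sketch of one plausible approach using only the standard library; it is not claimed to be what `demo.py` does, and `resolve_input_paths` is a hypothetical name.

```python
import glob
import os


def resolve_input_paths(input_arg):
    """Expand a directory or a wildcard pattern into a sorted list of files."""
    if os.path.isdir(input_arg):
        # A directory: take every entry directly inside it.
        candidates = [os.path.join(input_arg, name)
                      for name in os.listdir(input_arg)]
    else:
        # Anything else is treated as a glob pattern such as 'figs/test/*.png'.
        candidates = glob.glob(os.path.expanduser(input_arg))
    # Keep regular files only, so sub-directories are skipped.
    return sorted(p for p in candidates if os.path.isfile(p))


if __name__ == "__main__":
    for path in resolve_input_paths("figs/test"):
        print(path)
```

Quoting the wildcard on the command line, as in `--input='figs/test/*.png'`, keeps the shell from expanding it before the script sees it.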

Successful and failure cases on low-quality images:

If you find our method useful for your research, please cite

    @inproceedings{fang2021read,
      title={Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition},
      author={Fang, Shancheng and Xie, Hongtao and Wang, Yuxin and Mao, Zhendong and Zhang, Yongdong},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      year={2021}
    }

This project is free for academic research purposes only and is licensed under the 2-clause BSD License; see the LICENSE file for details.

Feel free to contact fangsc@ustc.edu.cn if you have any questions.