Idunn is the main back-end API of Qwant Maps. It acts as the entry point in front of many other APIs and aggregates data for geocoding, directions, POI details, etc.

- Historically, Idunn was only an API to get point-of-interest (POI) information for Qwant Maps.

- POIs are taken from the mimir Elasticsearch database.

- It also fetches POI data from the Wikipedia API and from a custom Wikidata Elasticsearch source.

- Why Idunn? In Norse mythology, Idunn is the wife of Bragi, which is also the name of mimir's main API.

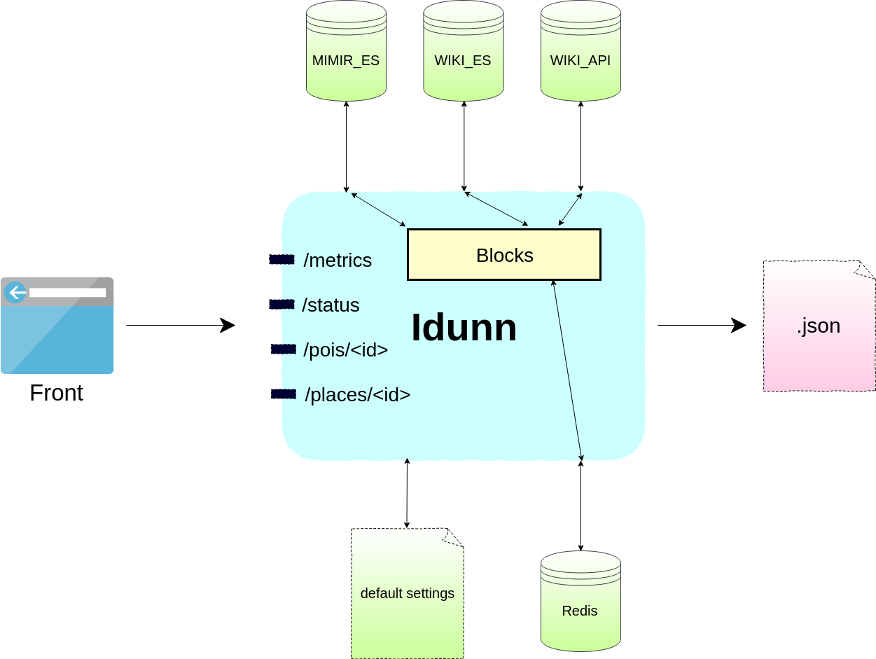

- A simple workflow schema of Idunn is presented below.

Note: this diagram may be outdated:

The API exposes its OpenAPI schema at:

GET /openapi.json
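To get a quick overview of the available routes, the schema can also be read programmatically. The following is a minimal Python sketch: `fetch_schema` and `list_operations` are hypothetical helpers, and the code only relies on the standard OpenAPI `paths` object, where each path maps HTTP methods to operations.

```python
import json
from urllib.request import urlopen

# Keys of an OpenAPI path item that describe operations (others, e.g. 'parameters', do not).
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(schema):
    """Return sorted (METHOD, path) pairs from an OpenAPI document's 'paths' object."""
    return sorted(
        (method.upper(), path)
        for path, item in schema.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS  # skip non-operation keys such as 'parameters'
    )


def fetch_schema(base_url, timeout=5.0):
    """Download and decode GET {base_url}/openapi.json."""
    with urlopen(f"{base_url}/openapi.json", timeout=timeout) as response:
        return json.load(response)
```

With a local instance running, `list_operations(fetch_schema("http://localhost:5000"))` would list every documented route.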

The main endpoints are:

- `/v1/places/{place_id}?lang={lang}&type={type}&verbosity={verbosity}` to get the details of a place (admin, street, address or POI).
  - `type` (optional): one of `admin`, `street`, `address`, `poi`
  - `verbosity`: one of `long`, `short`. The default verbosity is `long`.

- `/v1/places?bbox={bbox}&category=<category-name>&size={size}` to get a list of all points of interest matching the given bbox and categories.
  - `bbox`: `left,bot,right,top`, e.g. `bbox=2.0,48.0,3.0,49.0`
  - `category`: multiple values are accepted (e.g. `category=leisure&category=museum`)
  - `size`: maximum number of places in the response
  - `verbosity`: the default verbosity is `list` (equivalent to `long`, except that the "information" and "wiki" blocks are not returned)
  - `source` (optional): forces a data source instead of the automatic selection based on coverage. Accepted values: `osm`, `pages_jaunes`
  - `q`: full-text query (optional, experimental)

- `/v1/places?bbox={bbox}&raw_filter=class,subclass&size={size}` to get a list of all points of interest matching the given bbox (`left,bot,right,top`, e.g. `bbox=2,48,3,49`) and raw filters (e.g. `raw_filter=*,restaurant&raw_filter=shop,*&raw_filter=bakery,bakery`)

- `/v1/categories` to get the list of all the categories you can filter on

- `/v1/directions`: see directions.md for details

- `/v1/events?bbox={bbox}&category=<category_name>&size={size}` to get a list of all events matching the given bbox and outing category
  - `bbox`: `left,bot,right,top`, e.g. `bbox=2.0,48.0,3.0,49.0`
  - `category`: only one value is accepted, among `concert`, `show`, `exhibition`, `sport`, `entertainment` (e.g. `category=concert`)
  - `size`: maximum number of events in the response

- `/v1/status` to get the status of the API and of the associated ES cluster

- `/v1/metrics` to get metrics on the API, such as the number of requests received and request durations. This endpoint can be scraped by Prometheus.
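The repeated `category` and `raw_filter` parameters and the bbox format are easy to get wrong when building URLs by hand. The Python sketch below assembles such query strings with `urllib.parse.urlencode`. `format_bbox`, `places_query` and `matches_raw_filter` are hypothetical helpers; the bbox corner check is a client-side assumption (it would reject a box crossing the antimeridian), the wildcard semantics in `matches_raw_filter` are inferred from the examples above rather than taken from Idunn's code, and the sketch does not prevent combining `category` and `raw_filter`, which the documentation shows in separate requests.

```python
from urllib.parse import urlencode

SOURCES = {"osm", "pages_jaunes"}


def format_bbox(bbox):
    """Format a (left, bot, right, top) tuple as 'left,bot,right,top'."""
    left, bot, right, top = bbox
    # Assumed client-side sanity check on the corner order.
    if not (left < right and bot < top):
        raise ValueError("bbox must be ordered as left,bot,right,top")
    return ",".join(str(coord) for coord in bbox)


def places_query(bbox, size, categories=(), raw_filters=(), verbosity=None, source=None, q=None):
    """Build a /v1/places query string; each category or raw filter is its own parameter."""
    if source is not None and source not in SOURCES:
        raise ValueError(f"unknown source: {source!r}")
    params = [("bbox", format_bbox(bbox))]
    params += [("category", category) for category in categories]
    params += [("raw_filter", f"{cls},{subclass}") for cls, subclass in raw_filters]
    params.append(("size", size))
    for key, value in (("verbosity", verbosity), ("source", source), ("q", q)):
        if value is not None:  # optional parameters are left out when unset
            params.append((key, value))
    # A list of pairs keeps repeated keys; ',' and the '*' wildcard stay unescaped.
    return urlencode(params, safe=",*")


def matches_raw_filter(poi_class, poi_subclass, raw_filters):
    """Assumed semantics: '*' matches anything, and any matching filter is enough."""
    return any(
        cls in ("*", poi_class) and subclass in ("*", poi_subclass)
        for cls, subclass in raw_filters
    )
```

For example, `places_query((2, 48, 3, 49), 20, raw_filters=[("*", "restaurant")])` yields `bbox=2,48,3,49&raw_filter=*,restaurant&size=20`.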

Requirements:

- Python 3.10

- Pipenv, to manage dependencies and the virtualenv

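To run Idunn locally, create the virtualenv and install the dependencies:

pipenv install

Then start the API, pointing it at your Elasticsearch instances:

IDUNN_MIMIR_ES=<url_to_MIMIR_ES> IDUNN_WIKI_ES=<url_to_WIKI_ES> pipenv run python app.py

You can then query the API on port 5000 (quote the URL so that the shell does not interpret `&`):

curl "localhost:5000/v1/places/toto?lang=fr&type=poi"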
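The same request can be made from Python with only the standard library. This is a minimal sketch: `place_details_url` and `fetch_place` are hypothetical helpers, the value checks simply mirror the `type` and `verbosity` sets documented for `/v1/places/{place_id}`, and `verbosity=long` is sent explicitly since it is the documented default.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen

PLACE_TYPES = {"admin", "street", "address", "poi"}
VERBOSITIES = {"long", "short"}


def place_details_url(base_url, place_id, lang, place_type=None, verbosity="long"):
    """Build a /v1/places/{place_id} URL, rejecting values outside the documented sets."""
    if place_type is not None and place_type not in PLACE_TYPES:
        raise ValueError(f"unknown type: {place_type!r}")
    if verbosity not in VERBOSITIES:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    params = {"lang": lang, "verbosity": verbosity}
    if place_type is not None:
        params["type"] = place_type  # 'type' is optional, so omit it when unset
    # Percent-encode the id (except ':') so it stays within a single path segment.
    return f"{base_url}/v1/places/{quote(place_id, safe=':')}?{urlencode(params)}"


def fetch_place(base_url, place_id, lang, place_type=None, verbosity="long", timeout=5.0):
    """GET the place details and decode the JSON response body."""
    url = place_details_url(base_url, place_id, lang, place_type, verbosity)
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

With the API running, `fetch_place("http://localhost:5000", "toto", "fr", "poi")` sends the equivalent of the curl call.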

The configuration can be provided in several ways:

- default settings are available in `utils/default_settings.yaml`
- a YAML settings file can be given with the `IDUNN_CONFIG_FILE` env var (the default settings are still loaded first, then overridden by this file)
- specific variables can be overridden with env vars, given as `IDUNN_{var_name}={value}`, e.g. `IDUNN_MIMIR_ES=...`

You can create a `.env` file with commonly used env variables; pipenv loads it by default.
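The override order described above can be sketched in a few lines of Python. This is a minimal sketch, not Idunn's actual settings loader: `load_settings` is a hypothetical helper, YAML parsing is left out (the default and file settings are passed as already-parsed dicts), and looking up `IDUNN_*` env vars only for keys that already exist in the settings is an assumption.

```python
import os


def load_settings(defaults, file_settings=None, environ=None, prefix="IDUNN_"):
    """Merge settings with increasing priority: defaults, settings file, env vars."""
    environ = os.environ if environ is None else environ
    settings = dict(defaults)  # copy so the defaults are never mutated
    if file_settings:
        settings.update(file_settings)  # the file overrides the defaults key by key
    for key in settings:
        value = environ.get(prefix + key)
        if value is not None:
            # Env values are raw strings; any type conversion is left out here.
            settings[key] = value
    return settings
```

For instance, with `IDUNN_MIMIR_ES=http://es:9200` in the environment, `load_settings({"MIMIR_ES": "http://localhost:9200"})["MIMIR_ES"]` returns `"http://es:9200"`, whatever the YAML files say.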

Note that you need an API key from OpenWeatherMap to use the Weather block. Set it in the `IDUNN_WEATHER_API_KEY` environment variable, or directly as `WEATHER_API_KEY` in the `utils/default_settings.yaml` file.

To run the tests, first make sure the dev dependencies are installed:

pipenv install --dev

Then run the full test suite with pytest:

pipenv run pytest -vv -x

If you are using a `.env` file, make sure that pipenv does not load it by setting the environment variable `PIPENV_DONT_LOAD_ENV=1`, e.g.:

PIPENV_DONT_LOAD_ENV=1 pipenv run pytest -vv -x

Idunn comes with all the components needed to contribute as easily as possible: in particular, you don't need a running Elasticsearch instance. Idunn uses Docker images to simulate the Elasticsearch sources and Redis, so you need a local Docker install to be able to spawn an ES cluster.

To contribute, the common workflow is:

- install the dev dependencies: `pipenv install --dev`
- add a test in `./tests` for the new feature you propose
- implement your feature
- run pytest: `pipenv run pytest -vv -x`
- check the linter output: `pipenv run lint`
- if everything is fixed, check the format: `pipenv run black --diff --check`
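For the "add a test" step, here is a minimal sketch of what a pytest test file could look like. The file name, `parse_event_category` and its at-most-one rule are hypothetical, loosely modeled on the `category` parameter of `/v1/events`. In the real suite the code under test would be imported from the project rather than defined inline, and `pytest.raises` would be the more idiomatic way to check the error case; plain asserts are used here so the file also runs without pytest.

```python
# tests/test_event_category.py (hypothetical file name)
# pytest collects functions named test_* and reports failing plain asserts.

EVENT_CATEGORIES = {"concert", "show", "exhibition", "sport", "entertainment"}


def parse_event_category(values):
    """Hypothetical feature under test: /v1/events takes at most one category."""
    if len(values) > 1:
        raise ValueError("only one category is accepted")
    if not values:
        return None  # no category filter
    category = values[0]
    if category not in EVENT_CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    return category


def test_single_known_category_is_accepted():
    assert parse_event_category(["concert"]) == "concert"


def test_several_categories_are_rejected():
    try:
        parse_event_category(["concert", "show"])
    except ValueError:
        return
    raise AssertionError("expected ValueError for two categories")
```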


You can run Idunn with both Redis and Elasticsearch using Docker. First, edit the `docker-compose.yml` file to set `IDUNN_MIMIR_ES` to the URL of your Elasticsearch instance (for example: https://somewhere.lost/).

Then you just need to run:

$ docker-compose up --build

If you need to clean the Redis cache, run:

$ docker-compose kill

$ docker image prune --filter "label=idunn_idunn"