Semantic Augmented Contextual-Paraformer (SeACo-Paraformer) is a non-autoregressive end-to-end (E2E) ASR system with flexible and effective hotword customization ability, following the main ideas of CLAS and ColDec. This repo provides (1) detailed experiment results; (2) source code; (3) links to the open models; (4) open hotword customization test sets based on Aishell-1, as discussed in our paper.

SeACo-Paraformer: A Non-Autoregressive ASR System with Flexible and Effective Hotword Customization Ability

The models proposed and compared in the paper are implemented with FunASR:

- Paraformer Model: source code

- Paraformer-CLAS Model: source code

- SeACo-Paraformer Model: source code

Modelscope is an open platform for sharing models, AI spaces and datasets. We release the industrial models discussed in the paper through Modelscope:

- Paraformer Model: model link

- Paraformer-CLAS Model: model link

- SeACo-Paraformer Model (will be released once the paper is accepted): model link

With FunASR

The following code conducts ASR with hotword customization:

    from funasr import AutoModel

    model = AutoModel(
        model="iic/speech_seaco_paraformer_large_asr_nat-zh-cn-16k-common-vocab8404-pytorch",
        model_revision="v2.0.4",
        device="cuda:0",
    )

    # Decode the dev subset with its hotword list
    res = model.generate(
        input="YOUR_PATH/aishell1_hotword_dev.scp",
        hotword="./data/dev/hotword.txt",
        batch_size_s=300,
    )
    # Write one "key<TAB>text" line per utterance
    with open("dev.output", "w", encoding="utf-8") as fout1:
        for resi in res:
            fout1.write("{}\t{}\n".format(resi["key"], resi["text"]))

    # Decode the test subset with its hotword list
    res = model.generate(
        input="YOUR_PATH/aishell1_hotword_test.scp",
        hotword="./data/test/hotword.txt",
        batch_size_s=300,
    )
    with open("test.output", "w", encoding="utf-8") as fout2:
        for resi in res:
            fout2.write("{}\t{}\n".format(resi["key"], resi["text"]))

Previous work on hotword customization has mostly reported performance on internal test sets. We hope to provide a common testbed for evaluating the customization ability of models.

Based on Aishell-1, a well-known Mandarin speech dataset, we filtered two subsets from its test and dev sets and prepared hotword lists for them.

They are in data/test and data/dev. You can download the full Aishell-1 corpus and extract the subsets Test-Aishell1-NE and Dev-Aishell1-NE using data/test/uttid and data/dev/uttid respectively. For each subset we provide the full hotword list hotword.txt and the R1-hotword list r1-hotword.txt (R1-hotwords are hotwords whose recall in the recognition results of a general ASR model is lower than 40%).
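
The subset extraction could be sketched as below. This is only an illustration under stated assumptions: the uttid files are assumed to hold one utterance ID per line, and the full Aishell-1 list is assumed to be a Kaldi-style scp file (`<utt_id> <wav_path>` per line). The function names and the paths in the usage note are hypothetical and not part of this repo.

```python
def parse_utt_ids(lines):
    """Collect utterance IDs, assuming one ID per line (first token is used)."""
    ids = set()
    for line in lines:
        parts = line.split()
        if parts:  # skip empty lines
            ids.add(parts[0])
    return ids


def filter_scp(scp_lines, keep_ids):
    """Keep scp entries whose utterance ID is in keep_ids, preserving order."""
    kept = []
    for line in scp_lines:
        parts = line.split(maxsplit=1)
        if parts and parts[0] in keep_ids:
            kept.append(line.rstrip("\n"))
    return kept


def write_subset_scp(full_scp_path, uttid_path, out_scp_path):
    """File-based wrapper: read the full scp and the uttid list, write the subset."""
    with open(uttid_path, encoding="utf-8") as f:
        keep_ids = parse_utt_ids(f)
    with open(full_scp_path, encoding="utf-8") as f:
        kept = filter_scp(f, keep_ids)
    with open(out_scp_path, "w", encoding="utf-8") as f:
        for line in kept:
            f.write(line + "\n")
    return len(kept)
```

For example, `write_subset_scp("YOUR_PATH/aishell1_test.scp", "data/test/uttid", "YOUR_PATH/aishell1_hotword_test.scp")` would produce the test scp, assuming the first path is a full-test-set scp you prepared yourself.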

| | #utt | #hotwords | #R1-hotwords |
|---|---|---|---|
| Test-Aishell1-NE | 808 | 400 | 226 |
| Dev-Aishell1-NE | 1334 | 600 | 371 |
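
To make the R1-hotword definition concrete, here is a minimal sketch of a per-hotword recall computation and the 40% threshold. It is not the script used to build r1-hotword.txt. It assumes recall is counted per utterance (a hotword is recalled if it appears in the hypothesis of an utterance whose reference contains it), that whitespace is removed before matching, and that references and hypotheses are dictionaries keyed by utterance ID. All function names are hypothetical.

```python
def normalize(text):
    """Remove all whitespace so that spaced and unspaced transcripts match."""
    return "".join(text.split())


def hotword_recall(hotword, refs, hyps):
    """Fraction of utterances containing the hotword in the reference whose
    hypothesis also contains it. Returns None if no reference contains it."""
    total = 0
    hit = 0
    for key, ref in refs.items():
        if hotword in normalize(ref):
            total += 1
            if hotword in normalize(hyps.get(key, "")):
                hit += 1
    return hit / total if total else None


def select_r1_hotwords(hotwords, refs, hyps, threshold=0.4):
    """Hotwords whose recall on the given hypotheses is below the threshold."""
    r1 = []
    for hw in hotwords:
        recall = hotword_recall(hw, refs, hyps)
        if recall is not None and recall < threshold:
            r1.append(hw)
    return r1
```

Here `hyps` would come from a general ASR model decoded without hotwords, for instance by parsing a `key<TAB>text` file into a dictionary.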

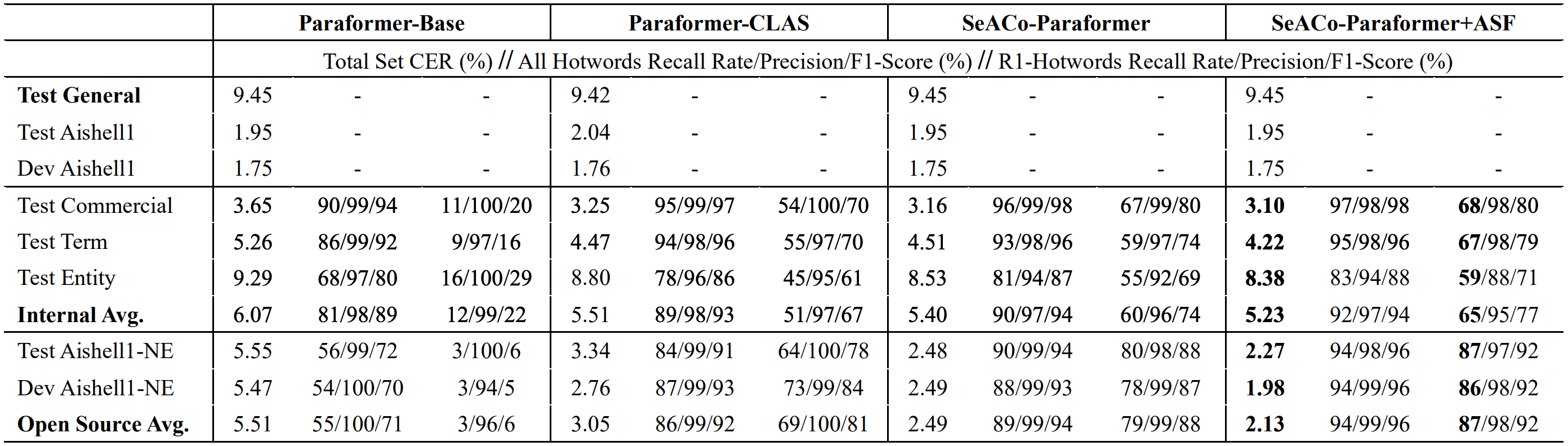

Result1: Comparing the models

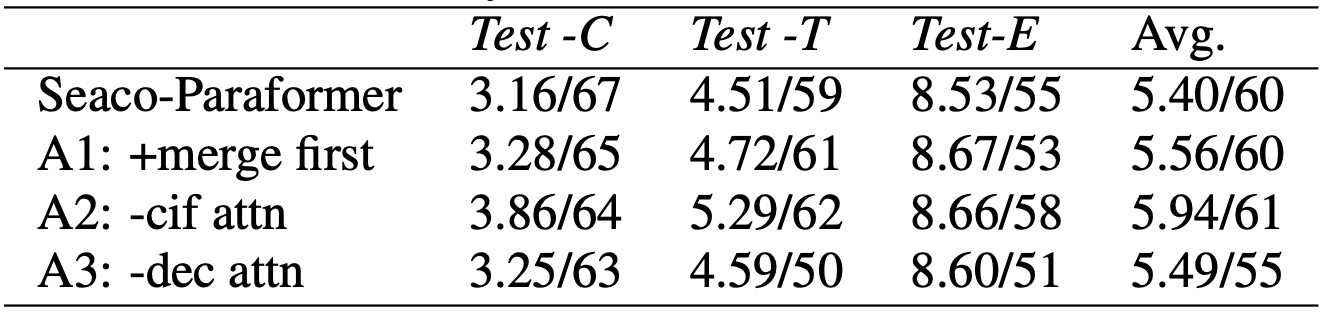

Result2: Ablation study over bias decoder calculation

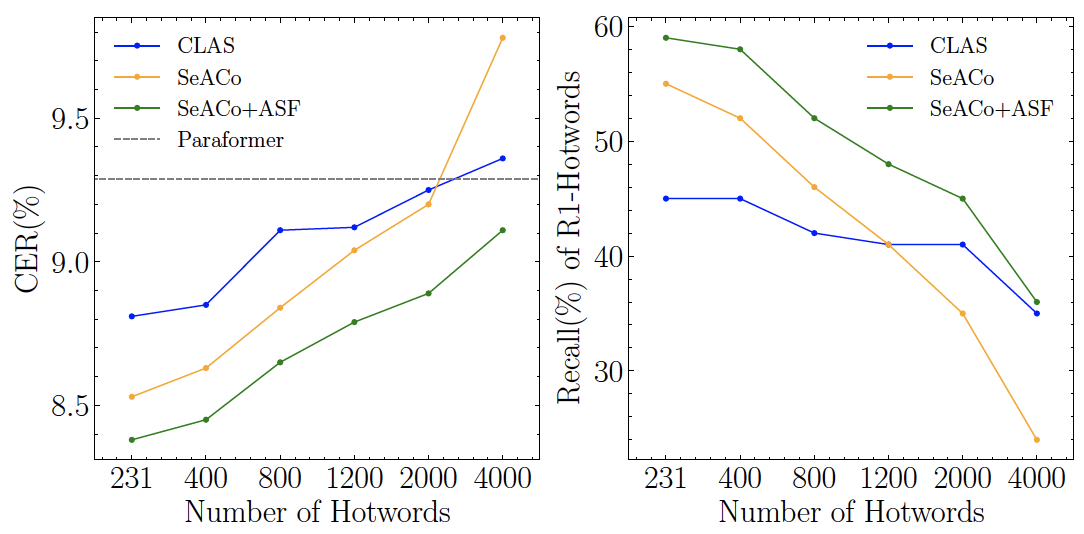

Result3: Performance comparison with a larger incoming hotword list

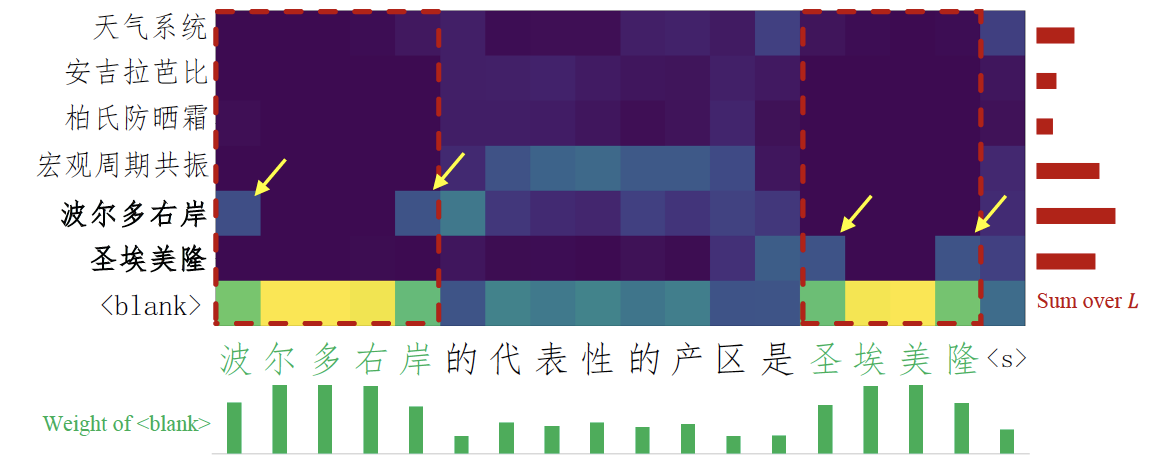

Result4: Attention score matrix analysis