Community • Folder Structure • GettingStart • QuickStart • Result •

🌊 LakeBench is a large-scale benchmark designed to test the mettle of table discovery methods on a much larger scale, providing a more comprehensive and realistic evaluation platform for the field, including finance, retail, manufacturing, energy, media, and more.

Despite their paramount significance, existing benchmarks for evaluating and supporting table discovery processes have been limited in scale and diversity, often constrained by small dataset sizes. They are not sufficient to systematically evaluate the effectiveness, scalability, and efficiency of various solutions.

LakeBench consists of over 16 million real tables –1,600X larger than existing data lakes, from multiple sources, with an overall size larger than 1TB (100X larger). LakeBench contains both synthesized and real queries, in total more than 10 thousand queries –10X more than existing benchmarks, for join and union search respectively.

🙌 With LakeBench, we thoroughly evaluate the state-of-the-art table discovery approaches on our benchmark and present our experimental findings from diverse perspectives, which we believe can push the research of table discovery.

We deeply appreciate the invaluable effort contributed by our dedicated team of developers, supportive users, and esteemed industry partners.

- Massachusetts Institute of Technology

- Beijing Institute of Technology

- Hong Kong University of Science and Technology

- Apache Flink

- Intel

.

├─── imgs # picture of different experiments

├─── join # join algorithms

| ├─── Joise

| ├─── LSH

| ├─── Pexeso

| └─── DeepJoin

|

├─── union # union algorithms

| ├─── TUS

| ├─── D3L

| ├─── Santos

| └─── Starmie

|

├─── join&union # join&union algorithms

| ├─── Joise

| ├─── LSH

| ├─── Pexeso

| └─── DeepJoin

|

├─── README.md

└─── requirements.txt

This is an example of how to set up LakeBench locally. To get a local copy up, running follow these simple example steps.

LakeBench is built on pytorch, with torchvision, torchaudio, and transformers.

To install the required packages, you can create a conda environment:

conda create --name lakebench python=3.then use pip to install -r requirements.txt

pip install -r requirements.txtFrom now on, you can start use LakeBench by typing

python test.pyThe detailed instructions for downloading and processing are shown in table below. Please follow it to download datasets/queries before running or developing algorithms.

LakeBench is easy to use and extend. Going through the bellowing examples will help you familiar with LakeBench for detailed instructions, evaluate an existing join/union algorithm on your own dataset, or developing new join/union algorithms.

Here is an example to run InfoGather. Running other supported algorithms (on other datasets with different queries) can be specified by the table below.

Folder Structure • GettingStart • QuickStart • Result •

🌊 The Web contains a vast corpus of HTML tables, specifically entityattribute tables. We present three core operations, namely entity augmentation by attribute name, entity augmentation by example and attribute discovery, that are useful for "information gathering" tasks (e.g., researching for products or stocks). We propose to use web table corpus to perform them automatically. We require the operations to have high precision and coverage, have fast (ideally interactive) response times and be applicable to any arbitrary domain of entities. The naive approach that attempts to directly match the user input with the web tables suffers from poor precision and coverage. Our key insight is that we can achieve much higher precision and coverage by considering indirectly matching tables in addition to the directly matching ones. The challenge is to be robust to spuriously matched tables: we address it by developing a holistic matching framework based on topic sensitive pagerank and an augmentation framework that aggregates predictions from multiple matched tables. We propose a novel architecture that leverages preprocessing in MapReduce to achieve extremely fast response times at query time. Our experiments on real-life datasets and 573M web tables show that our approach has (i) significantly higher precision and coverage and (ii) four orders of magnitude faster response times compared with the state-of-the-art approach.

.

├─── img # picture of model

├─── CreatIndex.py # cerat KIV 、KIA 、Inverted index、docnum, etc

|

├─── binaryGraphMatch.py # binaryGrapthMatch to achieve union base on join

|─── changeParamiter_PPR_opendata.py # full ppr for opendata set

|─── changeParamiter_PPR_webtable.py # full ppr for webtable set

|─── creat_topk_join.py # get topk for querying about join

|─── creat_topk_union.py # get topk for querying about union

|

├─── join.py # join

|─── join_creatofflineIndex_webtable_opendata.py # creat offline_index for join

|─── join_queryonline_webtable.py # query online for webtable

|─── join_queryonline_opendata.py # query online for opendata

|─── join_creat_topkfile.py # get topkfile for join

|─── join_staticdata_webtable_opendat.py # stati cdata

|

├─── union.py

├─── union.py # union

|─── union_webtable.py # union on webtable

|─── union_opendata.py # union on opendata

|─── union_creat_topkfile.py # get topkfile about union

|─── union_staticdata_opendata.py # static data for opendata

|─── union_staticdata_webtable.py # static data for webtable

|

├─── util.py # common functions

├─── page_ranks.py # pageranks

├─── queryOnline.py # query

├─── querydata.py # process query

├─── staticdata.py # static data

├─── staticdata_union_opendat.py

├─── staticdata_union_webtable.py

├─── myLogger.py # log file

├─── info.md # readme file

Infogather is easy to use and extend. Going through the bellowing examples will help you familiar with infogather for detailed instructions, evaluate an existing join/union algorithm on your own dataset, or developing new join/union algorithms.

Infogather is bulit on pytorch, with torchvision, torchaudio, and transfrmers.

To install the required packages, you can create a conda environment:

conda create --name info_env python=3.then use pip to install the required packages

pip install -r requirements.txt

Step1: Check your environment

You need to properly install nvidia driver first. To use GPU in a docker container You also need to install nvidia-docker2 (Installation Guide). Then, Please check your CUDA version via nvidia-smi

Step2: index

python join_creatofflineIndex_webtable_opendata.py

-- datalist: list, dataset list

-- indexstorepath: string, the path of storing index

-- columnValue_rate: float, the columnValue importance of the column

-- columnName_rate : float, the columnName importance of the column

-- columnWith_rate : float, the columnWith importance of the column

-- dataset_large_or_small: sting , large or small

-- num_walks: int, the superparameter of ppr

-- reset_pro: float,the superparameter of ppr Step3: online

# online:

script: join_queryonline_opendata.py/join_queryonline_webtable.py

run commond: python join_creatofflineIndex_webtable_opendata.py

* parameters

-- queryfilepath:string the querytablefilepath

-- columnname: the query columnStep4: get_topk

# get topk:

topk: join_creat_topkfile.py/join_creat_topkfile.py

script: python join_creat_topkfile.py

run commond: python join_creatofflineIndex_webtable_opendata.py

* parameters:

-- filepath: string,the index of final_res_dic.pkl filepath

-- storepath: string, the result of topk file store path Step1: Check your environment

You need to properly install nvidia driver first. To use GPU in a docker container You also need to install nvidia-docker2 (Installation Guide). Then, Please check your CUDA version via nvidia-smi. Because we often get the results of union search based on the Bipartite Graph Matching on the results of join search, which is stored in storepath (the join result of topk file store path)

Step2: online

# online:

script: union_opendata.py/union_webtable.py

python union_opendata.py/union_webtable.py

Step3: get_topk

# get topk:

topk: union_creat_topkfile.py

script: python union_creat_topkfile.py

run command: python python union_creat_topkfile.pyIf you want to try other algorithms, you can read more details according to the table:

| Algorithms | Task | Train |

|---|---|---|

| Joise | Join | ./join/Joise/joise.md |

| LSH Ensemble | Join | ./join/LSH/lsh.md |

| Pexeso | Join | ./join/Pexeso/pexeso.md |

| DeepJoin | Join | ./join/Deepjoin/deepjoin.md |

| TUS | Union | ./union/TUS/tus.md |

| D3L | Union | ./union/D3L/d3l.md |

| Santos | Union | ./union/Santos/santos.md |

| Starmie | Union | ./union/Starmie/starmie.md |

| Frt12 | Join & Union | ./join&union/Frt12/frt.md |

| InfoGather | Join & Union | ./join&union/InfoGather/info.md |

| Aurum | Join & Union | ./join&union/Aurum/aurum.md |

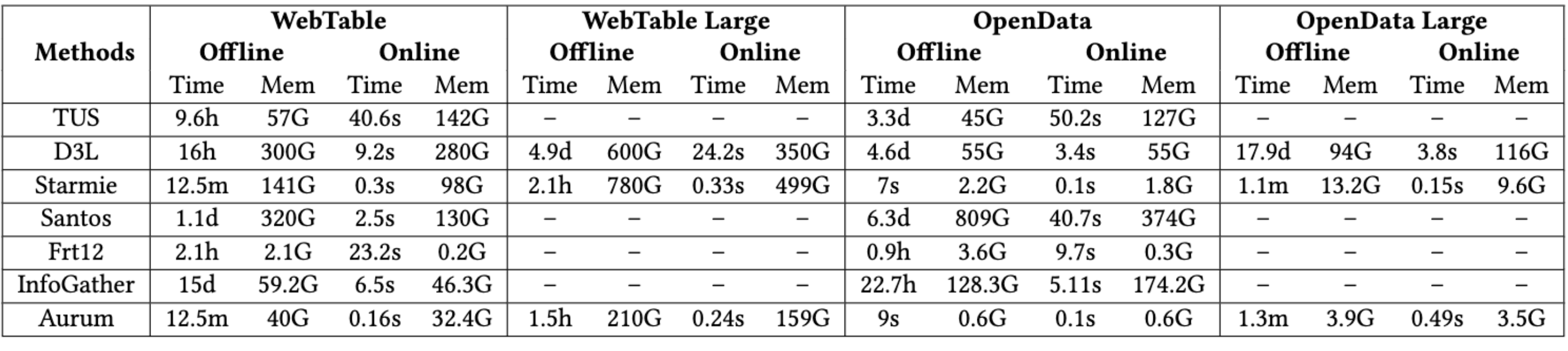

Efficiency and Memory Usage of Table Join Search:

Efficiency and Memory Usage of Table Union Search:

We propose to build an end-to-end prototype system, LakeCompass that encapsulates the above functionalities through flexible Python APIs, where the user can upload data, construct indexes, search tables and build ML models. We also provide a Web interface to serve table search and analysis in a more intuitive way.

We support various types of indexes built over data lakes, enabling efficient table searches across three categories: keyword-based, joinable, and unionable. First, we invoke ‘indexing’ function to build an index over data lake metadata. The arguments for this function include index type, search type, and an instance of a configuration class. Once the index is created, we utilize ‘keyword_search’ function to retrieve tables associated with the keyword ‘education’.

# build index for the datalake

datalake = LakeCompass,DataLake('/datalake demo')

keyword_index = datalake.metadata.indexing(index_type='HNSW', search_type='keyword', config=LakeCompass.HNSWconfig())

# keyword-based search

candidate_table = LakeCompass.keyword_search(keyword_index, 'education')After selecting a table as the query table and training a model based solely on it, the results indicate that the performance of the model tends to be poor.

# train configuration

predict_col ='Educational Category'

model_config = {'model':'SvM','type': 'classification', 'k':4, 'model config': SVMconfig()}

query_table = LakeCompass.read('education_Des_Moines.csv')

query_table_model = DownstreamModel(**model_config)

val_set = pd.read csv('val set.csv')

test_set = pd.read csv('test set.csv')

# trian a model using query table

query_table_model.train(query_table, predict_col, val_set)

query_table_model.eval(test_set, predict_col)Therefore, we proceed to employ the ‘union_search’ function to retrieve unionable tables based on semantics similarities. Before the search, we build another index over the columns within the data lake.

#unionable table search

union_index = datalake.columns.indexing(index_type='HNSW', search_type='union', config=LakeCompass.HNSWconfig())

unionable_tables = LakeCompass.union_search(union_index, query_table)We further provides ‘auto_augment’ function that augments the original query table with the retrieved tables based on their benefits to the downstream model performance. Simultaneously, the model is trained using the augmented table datasets. This augmentation and training process follows a novel iterative strategy. The results indicate that the model trained on the augmented table datasets outperforms the former model that trained solely on the query table.

#train another model using unionable tables

augmented_table_model = DownstreamModel(**model_config)

augmented_table_model = unionable_tables.auto_augment(augmented_table_model, val_set, predict_col)

# evaluate models

augmented_table_model.eval(test_set, predict_col)