A collection of papers and resources about unifying large language models (LLMs) and knowledge graphs (KGs).

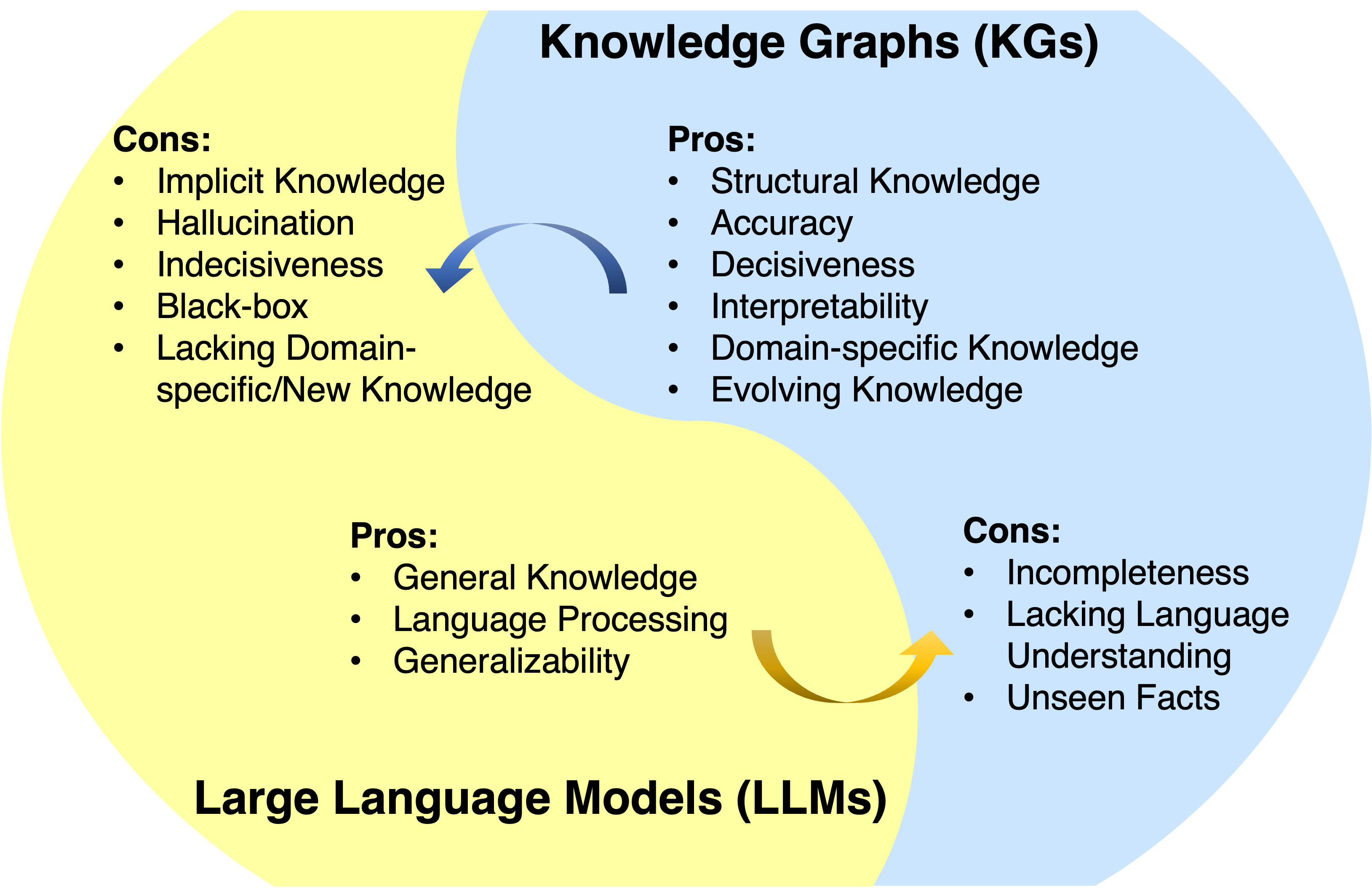

Large language models (LLMs) have achieved remarkable success and generalizability in various applications. However, they often fall short of capturing and accessing factual knowledge. Knowledge graphs (KGs) are structured data models that explicitly store rich factual knowledge. Nevertheless, KGs are hard to construct, and existing KG methods are inadequate for handling the incomplete and dynamically changing nature of real-world KGs. Therefore, it is natural to unify LLMs and KGs and leverage their advantages simultaneously.

🔭 This project is under development. Star and watch the repository to follow updates.

- We are happy to release the first graph foundation model-powered RAG pipeline (GFM-RAG) that combines the power of GNNs with LLMs to enhance reasoning. Paper and Code.

- Our latest work on KG + LLM reasoning is now public: Graph-constrained Reasoning: Faithful Reasoning on Knowledge Graphs with Large Language Models.

- Our LLM for temporal KG reasoning work: Large Language Models-guided Dynamic Adaptation for Temporal Knowledge Graph Reasoning has been accepted by NeurIPS 2024!

- Our KG for analyzing LLM reasoning paper: Direct Evaluation of Chain-of-Thought in Multi-hop Reasoning with Knowledge Graphs has been accepted by ACL 2024.

- Our roadmap paper has been accepted by TKDE.

- Our KG for LLM probing paper: Systematic Assessment of Factual Knowledge in Large Language Models has been accepted by EMNLP 2023.

- Our KG + LLM reasoning paper: Reasoning on Graphs: Faithful and Interpretable Large Language Model Reasoning has been accepted by ICLR 2024.

- Our LLM for KG reasoning paper: ChatRule: Mining Logical Rules with Large Language Models for Knowledge Graph Reasoning is now public.

- Our roadmap paper: Unifying Large Language Models and Knowledge Graphs: A Roadmap is now public.

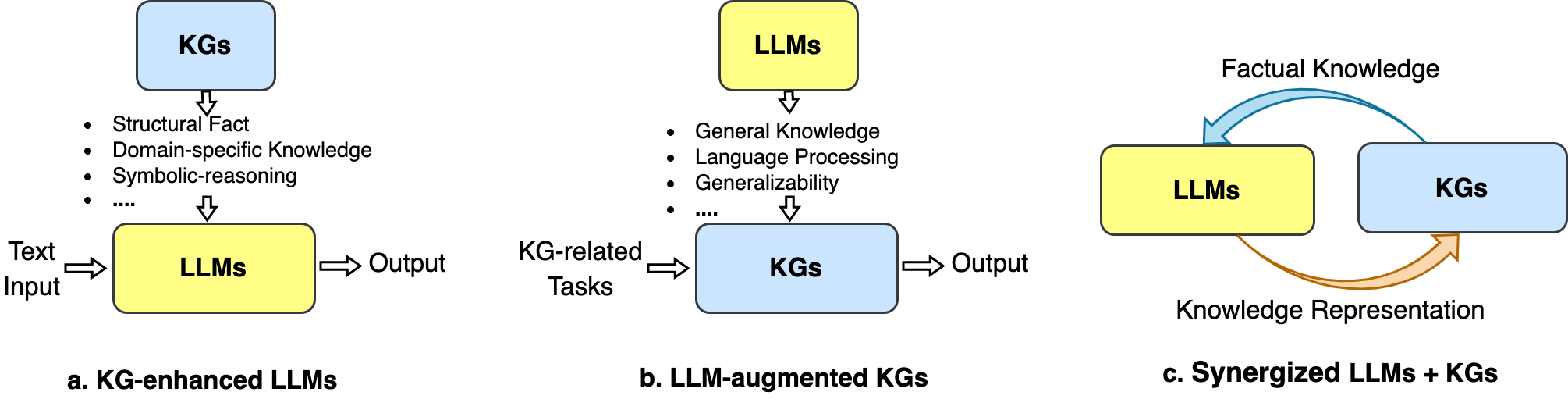

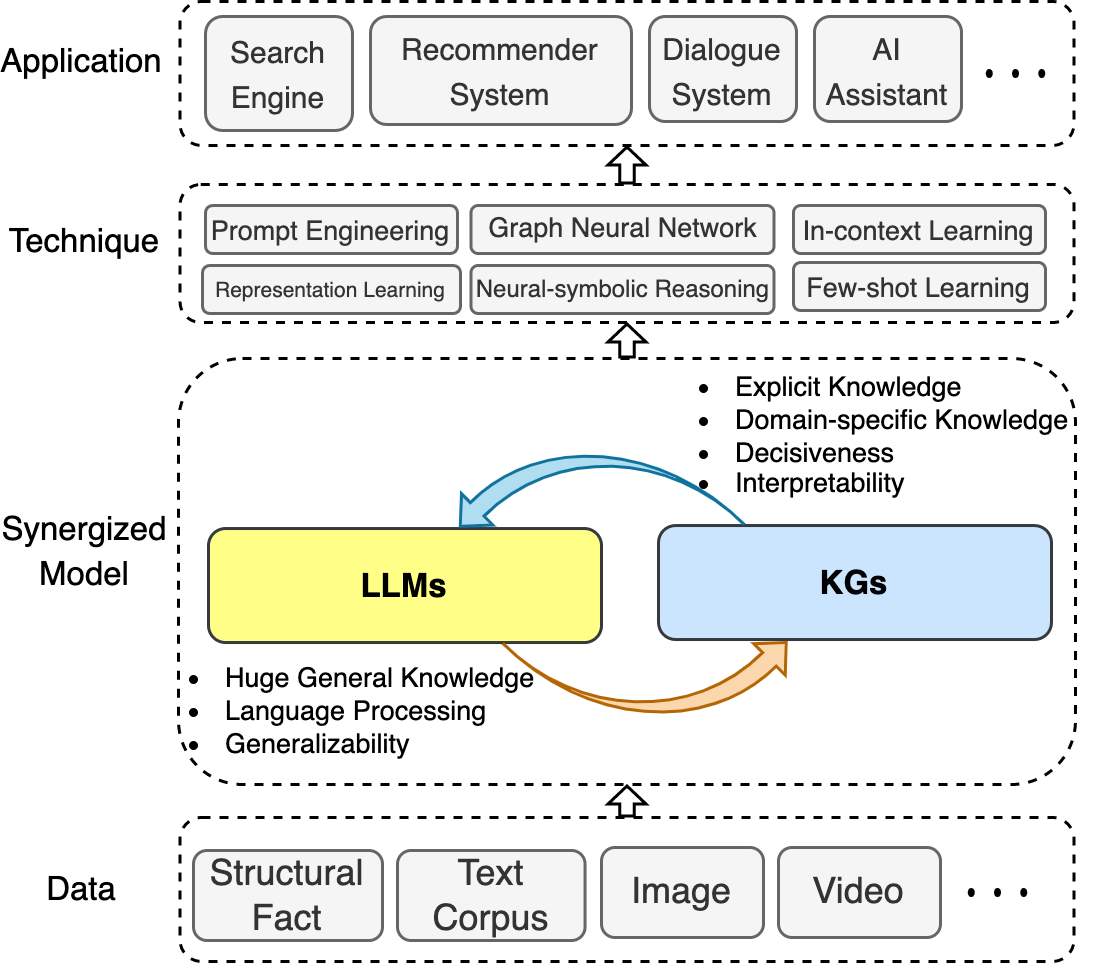

In this repository, we collect recent advances in unifying LLMs and KGs. We present a roadmap that summarizes three general frameworks: 1) KG-enhanced LLMs, 2) LLMs-augmented KGs, and 3) Synergized LLMs + KGs.

We also illustrate the involved techniques and applications.

We hope this repository helps researchers and practitioners better understand this emerging field.

If this repository is helpful to you, please help us by citing this paper:

@article{llm_kg,
  title={Unifying Large Language Models and Knowledge Graphs: A Roadmap},
  author={Pan, Shirui and Luo, Linhao and Wang, Yufei and Chen, Chen and Wang, Jiapu and Wu, Xindong},
  journal={IEEE Transactions on Knowledge and Data Engineering (TKDE)},
  year={2024}
}

- Awesome-LLM-KG

- Unifying Large Language Models and Knowledge Graphs: A Roadmap (TKDE, 2024) [paper]

- A Survey on Knowledge-Enhanced Pre-trained Language Models (Arxiv, 2023) [paper]

- A Survey of Knowledge-Intensive NLP with Pre-Trained Language Models (Arxiv, 2022) [paper]

- A Review on Language Models as Knowledge Bases (Arxiv, 2022) [paper]

- Generative Knowledge Graph Construction: A Review (EMNLP, 2022) [paper]

- Knowledge Enhanced Pretrained Language Models: A Compreshensive Survey (Arxiv, 2021) [paper]

- Reasoning over Different Types of Knowledge Graphs: Static, Temporal and Multi-Modal (Arxiv, 2022) [paper][code]

- ERNIE: Enhanced Language Representation with Informative Entities (ACL, 2019) [paper]

- Exploiting structured knowledge in text via graph-guided representation learning (EMNLP, 2019) [paper]

- SKEP: Sentiment knowledge enhanced pre-training for sentiment analysis (ACL, 2020) [paper]

- E-bert: A phrase and product knowledge enhanced language model for e-commerce (Arxiv, 2020) [paper]

- Pretrained encyclopedia: Weakly supervised knowledge-pretrained language model (ICLR, 2020) [paper]

- BERT-MK: Integrating graph contextualized knowledge into pre-trained language models (EMNLP, 2020) [paper]

- K-BERT: enabling language representation with knowledge graph (AAAI, 2020) [paper]

- CoLAKE: Contextualized language and knowledge embedding (COLING, 2020) [paper]

- Kepler: A unified model for knowledge embedding and pre-trained language representation (TACL, 2021) [paper]

- K-Adapter: Infusing Knowledge into Pre-Trained Models with Adapters (ACL Findings, 2021) [paper]

- Cokebert: Contextual knowledge selection and embedding towards enhanced pre-trained language models (AI Open, 2021) [paper]

- Ernie 3.0: Large-scale knowledge enhanced pre-training for language understanding and generation (Arxiv, 2021) [paper]

- Pre-training language models with deterministic factual knowledge (EMNLP, 2022) [paper]

- Kala: Knowledge-augmented language model adaptation (NAACL, 2022) [paper]

- DKPLM: decomposable knowledge-enhanced pre-trained language model for natural language understanding (AAAI, 2022) [paper]

- Dict-BERT: Enhancing language model pre-training with dictionary (ACL Findings, 2022) [paper]

- JAKET: joint pre-training of knowledge graph and language understanding (AAAI, 2022) [paper]

- Tele-Knowledge Pre-training for Fault Analysis (ICDE, 2023) [paper]

- Barack’s wife Hillary: Using knowledge graphs for fact-aware language modeling (ACL, 2019) [paper]

- Retrieval-augmented generation for knowledge-intensive nlp tasks (NeurIPS, 2020) [paper]

- Realm: Retrieval-augmented language model pre-training (ICML, 2020) [paper]

- QA-GNN: Reasoning with language models and knowledge graphs for question answering (NAACL, 2021) [paper]

- Memory and knowledge augmented language models for inferring salience in long-form stories (EMNLP, 2021) [paper]

- JointLK: Joint reasoning with language models and knowledge graphs for commonsense question answering (NAACL, 2022) [paper]

- Enhanced Story Comprehension for Large Language Models through Dynamic Document-Based Knowledge Graphs (AAAI, 2022) [paper]

- Greaselm: Graph reasoning enhanced language models (ICLR, 2022) [paper]

- An efficient memory-augmented transformer for knowledge-intensive NLP tasks (EMNLP, 2022) [paper]

- Knowledge-Augmented Language Model Prompting for Zero-Shot Knowledge Graph Question Answering (NLRSE@ACL, 2023) [paper]

- LLM-Based Multi-Hop Question Answering with Knowledge Graph Integration in Evolving Environments (EMNLP Findings, 2024) [paper]

- Language models as knowledge bases (EMNLP, 2019) [paper]

- Kagnet: Knowledge-aware graph networks for commonsense reasoning (Arxiv, 2019) [paper]

- Autoprompt: Eliciting knowledge from language models with automatically generated prompts (EMNLP, 2020) [paper]

- How can we know what language models know? (ACL, 2020) [paper]

- Knowledge neurons in pretrained transformers (ACL, 2021) [paper]

- Can Language Models be Biomedical Knowledge Bases? (EMNLP, 2021) [paper]

- Interpreting language models through knowledge graph extraction (Arxiv, 2021) [paper]

- QA-GNN: Reasoning with language models and knowledge graphs for question answering (NAACL, 2021) [paper]

- How to Query Language Models? (Arxiv, 2021) [paper]

- Rewire-then-probe: A contrastive recipe for probing biomedical knowledge of pre-trained language models (Arxiv, 2021) [paper]

- When Not to Trust Language Models: Investigating Effectiveness and Limitations of Parametric and Non-Parametric Memories (Arxiv, 2022) [paper]

- How Pre-trained Language Models Capture Factual Knowledge? A Causal-Inspired Analysis (Arxiv, 2022) [paper]

- Can Knowledge Graphs Simplify Text? (CIKM, 2023) [paper]

- Entity Alignment with Noisy Annotations from Large Language Models (NeurIPS, 2024) [paper]

- LambdaKG: A Library for Pre-trained Language Model-Based Knowledge Graph Embeddings (Arxiv, 2023) [paper]

- Integrating Knowledge Graph embedding and pretrained Language Models in Hypercomplex Spaces (Arxiv, 2022) [paper]

- Endowing Language Models with Multimodal Knowledge Graph Representations (Arxiv, 2022) [paper]

- Language Model Guided Knowledge Graph Embeddings (IEEE Access, 2022) [paper]

- Language Models as Knowledge Embeddings (IJCAI, 2022) [paper]

- Pretrain-KGE: Learning Knowledge Representation from Pretrained Language Models (EMNLP, 2020) [paper]

- KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation (TACL, 2021) [paper]

- Multi-perspective Improvement of Knowledge Graph Completion with Large Language Models (COLING, 2024) [paper][code]

- KG-BERT: BERT for knowledge graph completion (Arxiv, 2019) [paper]

- Multi-task learning for knowledge graph completion with pre-trained language models (COLING, 2020) [paper]

- Do pre-trained models benefit knowledge graph completion? A reliable evaluation and a reasonable approach (ACL, 2022) [paper]

- Joint language semantic and structure embedding for knowledge graph completion (COLING, 2022) [paper]

- MEM-KGC: masked entity model for knowledge graph completion with pre-trained language model (IEEE Access, 2021) [paper]

- Knowledge graph extension with a pre-trained language model via unified learning method (Knowl. Based Syst., 2023) [paper]

- Structure-augmented text representation learning for efficient knowledge graph completion (WWW, 2021) [paper]

- Simkgc: Simple contrastive knowledge graph completion with pre-trained language models (ACL, 2022) [paper]

- Lp-bert: Multi-task pre-training knowledge graph bert for link prediction (Arxiv, 2022) [paper]

- From discrimination to generation: Knowledge graph completion with generative transformer (WWW, 2022) [paper]

- Sequence-to-sequence knowledge graph completion and question answering (ACL, 2022) [paper]

- Knowledge is flat: A seq2seq generative framework for various knowledge graph completion (COLING, 2022) [paper]

- A framework for adapting pre-trained language models to knowledge graph completion (EMNLP, 2022) [paper]

- Dipping plms sauce: Bridging structure and text for effective knowledge graph completion via conditional soft prompting (ACL, 2023) [paper]

- GenWiki: A dataset of 1.3 million content-sharing text and graphs for unsupervised graph-to-text generation (COLING, 2020) [paper]

- KGPT: Knowledge-grounded pre-training for data-to-text generation (EMNLP, 2020) [paper]

- JointGT: Graph-text joint representation learning for text generation from knowledge graphs (ACL Findings, 2021) [paper]

- Investigating pretrained language models for graph-to-text generation (NLP4ConvAI, 2021) [paper]

- Few-shot knowledge graph-to-text generation with pretrained language models (ACL, 2021) [paper]

- EventNarrative: A large-scale Event-centric Dataset for Knowledge Graph-to-Text Generation (NeurIPS, 2021) [paper]

- GAP: A graph-aware language model framework for knowledge graph-to-text generation (COLING, 2022) [paper]

- UniKGQA: Unified Retrieval and Reasoning for Solving Multi-hop Question Answering Over Knowledge Graph (ICLR, 2023) [paper]

- StructGPT: A General Framework for Large Language Model to Reason over Structured Data (Arxiv, 2023) [paper]

- An Empirical Study of GPT-3 for Few-Shot Knowledge-Based VQA (AAAI, 2022) [paper]

- An Empirical Study of Pre-trained Language Models in Simple Knowledge Graph Question Answering (World Wide Web Journal, 2023) [paper]

- Empowering Language Models with Knowledge Graph Reasoning for Open-Domain Question Answering (EMNLP, 2022) [paper]

- DRLK: Dynamic Hierarchical Reasoning with Language Model and Knowledge Graph for Question Answering (EMNLP, 2022) [paper]

- Subgraph Retrieval Enhanced Model for Multi-hop Knowledge Base Question Answering (ACL, 2022) [paper]

- GreaseLM: Graph Reasoning Enhanced Language Models for Question Answering (ICLR, 2022) [paper]

- LaKo: Knowledge-driven Visual Question Answering via Late Knowledge-to-Text Injection (IJCKG, 2022) [paper]

- QA-GNN: Reasoning with Language Models and Knowledge Graphs for Question Answering (NAACL, 2021) [paper]

- Tele-Knowledge Pre-training for Fault Analysis (ICDE, 2023) [paper]

- Pre-training language model incorporating domain-specific heterogeneous knowledge into a unified representation (Expert Systems with Applications, 2023) [paper]

- Deep Bidirectional Language-Knowledge Graph Pretraining (NeurIPS, 2022) [paper]

- KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation (TACL, 2021) [paper]

- JointGT: Graph-Text Joint Representation Learning for Text Generation from Knowledge Graphs (ACL, 2021) [paper]

- A Unified Knowledge Graph Augmentation Service for Boosting Domain-specific NLP Tasks (Arxiv, 2023) [paper]

- Unifying Structure Reasoning and Language Model Pre-training for Complex Reasoning (Arxiv, 2023) [paper]

- Complex Logical Reasoning over Knowledge Graphs using Large Language Models (Arxiv, 2023) [paper]

- RecInDial: A Unified Framework for Conversational Recommendation with Pretrained Language Models (Arxiv, 2023) [paper]

- Tele-Knowledge Pre-training for Fault Analysis (ICDE, 2023) [paper]