The code is based on materials from Udacity Deep Reinforcement Learning Nanodegree Program.

Policy-based methods are a class of RL (Reinforcement Learning) methods that do not estimate a value function. Instead, they directly optimize the weights of a policy network so as to maximize the expected return obtained by interacting with an environment. Policy gradient methods (PGM) are a subclass of policy-based methods: they optimize the weights of the policy network by gradient ascent.

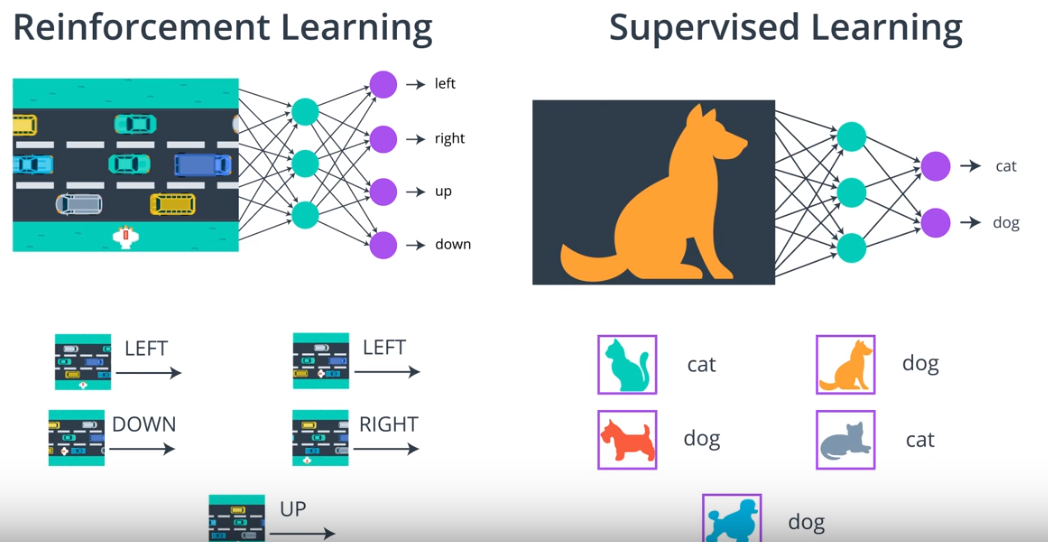

As many have noted, including Andrej Karpathy, RL shares many features with supervised learning, and the connection is fairly straightforward. In the supervised learning setup, we have a set of labeled data that is fed into a neural network (NN). Learning in this context means tweaking the weights of the NN (via back-propagation) so that it identifies a given picture correctly; changing the weights increases the probability of assigning the right label. In the RL setup, we collect many episodes by following some policy, and each episode is labeled at its end by whether the game was won or lost. Each (state, action) pair in the episode then plays the same role as a labeled picture in supervised learning.
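To make the analogy concrete, here is a minimal sketch in plain Python. It assumes a softmax output layer and treats the sampled action as if it were the "label", scaled by the episode outcome (+1 for a win, -1 for a loss). The function names and the example logits are hypothetical and not taken from the course code.

```python
import math

def softmax(logits):
    """Turn raw network outputs into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def supervised_loss(logits, label):
    """Negative log-likelihood of the correct label."""
    return -math.log(softmax(logits)[label])

def policy_gradient_loss(logits, action, outcome):
    """Same negative log-likelihood, but the sampled action plays the
    role of the label and the loss is scaled by the episode outcome
    (assumed here: +1.0 for a win, -1.0 for a loss)."""
    return -outcome * math.log(softmax(logits)[action])

logits = [0.2, -0.1, 0.5]  # illustrative values only
print(supervised_loss(logits, label=2))
print(policy_gradient_loss(logits, action=2, outcome=+1.0))  # same as above
print(policy_gradient_loss(logits, action=2, outcome=-1.0))  # sign flipped
```

Minimizing the second loss after a win pushes the probability of the sampled action up, exactly as a supervised update would for a correct label; after a loss the sign flips and the probability is pushed down.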

The concept behind the policy gradient method is as follows. After collecting many episodes, the agent takes time to reflect on the experience it received. Let us say that the agent won a game by random sampling. The PGM algorithm then goes step by step through all the actions of the episode that led to winning. It slightly increases the probability of every action taken in an episode that was won, and slightly decreases the probability of every action taken in an episode that was lost.

for n times:

- collect an episode
- change the weights of the policy network:
    - If WON, increase the probability of each (state, action) combination.
    - If LOST, decrease the probability of each (state, action) combination.
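The loop above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the "game" is a hypothetical single-state toy in which the agent picks one of two actions `horizon` times and wins if it picked action 1 more often than action 0, and the policy is a softmax over two preference values rather than a neural network. All names and hyperparameters are made up for the example.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def play_episode(prefs, horizon, rng):
    """Sample `horizon` actions from the policy; the toy game is WON
    when action 1 was chosen in more than half of the steps."""
    probs = softmax(prefs)
    actions = [rng.choices(range(len(prefs)), weights=probs)[0]
               for _ in range(horizon)]
    won = actions.count(1) > horizon / 2
    return actions, won

def update(prefs, actions, won, lr):
    """Raise the log-probability of every action if WON, lower it if LOST."""
    sign = 1.0 if won else -1.0
    probs = softmax(prefs)  # policy that generated the episode
    new_prefs = list(prefs)
    for a in actions:
        for k in range(len(prefs)):
            # gradient of log softmax(prefs)[a] with respect to prefs[k]
            grad_log_prob = (1.0 if k == a else 0.0) - probs[k]
            new_prefs[k] += lr * sign * grad_log_prob
    return new_prefs

def train(n_episodes=500, horizon=5, lr=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0, 0.0]  # start from a uniform policy
    for _ in range(n_episodes):
        actions, won = play_episode(prefs, horizon, rng)
        prefs = update(prefs, actions, won, lr)
    return softmax(prefs)

probs = train()
print(probs)  # the probability of action 1 should end up above 0.5
```

Note that every action in a won episode is reinforced, including the ones that did not help; the method relies on averaging over many episodes to sort out which actions actually matter.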

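Here, let us introduce the notion of a trajectory $\tau$: a sequence of states and actions,

$$\tau = (s_0, a_0, ..., s_H, a_H, s_{H+1})$$

$H$ stands for the horizon: the length of the trajectory (for an episodic task, the length of an episode).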

You may be wondering: why are we using trajectories instead of episodes? The answer is that maximizing expected return over trajectories (instead of episodes) lets us search for optimal policies for both episodic and continuing tasks!
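As a rough illustration, a fixed-horizon trajectory can be collected from a task that never terminates. The sketch below assumes a hypothetical continuing task (a random walk on the integers, with a reward that penalizes distance from zero) and a uniformly random policy; `collect_trajectory`, `step`, and `random_policy` are names invented for this example.

```python
import random

def collect_trajectory(policy, step, s0, horizon):
    """Roll out (s_0, a_0, ..., s_H, a_H, s_{H+1}) for a given horizon H.

    `policy(state)` returns an action and `step(state, action)` returns
    (next_state, reward); both are supplied by the caller.
    """
    states, actions, rewards = [s0], [], []
    for _ in range(horizon + 1):  # actions a_0 .. a_H
        action = policy(states[-1])
        next_state, reward = step(states[-1], action)
        actions.append(action)
        rewards.append(reward)
        states.append(next_state)
    return states, actions, rewards

# Hypothetical continuing task: a walk on the integers with no terminal state.
rng = random.Random(0)

def random_policy(state):
    return rng.choice([-1, +1])

def step(state, action):
    next_state = state + action
    return next_state, -abs(next_state)  # reward: stay close to zero

H = 4
states, actions, rewards = collect_trajectory(random_policy, step, s0=0, horizon=H)
trajectory_return = sum(rewards)  # return of the trajectory (assumed undiscounted)
print(len(states), len(actions), trajectory_return)
```

Because the rollout is cut off after a fixed number of steps, the same function works whether or not the underlying task has a natural end.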

REINFORCE is an algorithm that can be used to find the weights $\theta$ of a policy network that maximize the expected return $U(\theta)$.
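For reference, the expected return is commonly written as an average over trajectories. The notation below is an assumption, not taken from the text above: $P(\tau; \theta)$ denotes the probability of trajectory $\tau$ under the policy with weights $\theta$, and $R(\tau)$ denotes the return collected along $\tau$.

$$U(\theta) = \sum_{\tau} P(\tau; \theta) R(\tau)$$

Gradient ascent then repeatedly moves $\theta$ in the direction of $\nabla_{\theta}U(\theta)$.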

- Use the policy $\pi_{\theta}$ to collect $m$ trajectories $\tau^{1}, \tau^{2}, ..., \tau^{m}$ with horizon $H$. We refer to the $i$-th trajectory as

$$\tau^{i} = (s_0^{i}, a_0^{i}, ..., s_H^{i}, a_H^{i}, s_{H+1}^{i})$$

- In the REINFORCE algorithm, the log of the action probability is used to increase or decrease the likelihood of the actions in the trajectory. Use the trajectories to estimate the gradient $\nabla_{\theta}U(\theta)$: