This document will describe an End to End(E2E) scenario demonstrating the integration between Azure Synapse and a SAP S/4HANA on Azure system.

To demonstrate the SAP integration with a well-known, less complex and repeatable data model, the SAP SFlight sample application was selected. Further information about the SAP SFlight sample application can be found here.

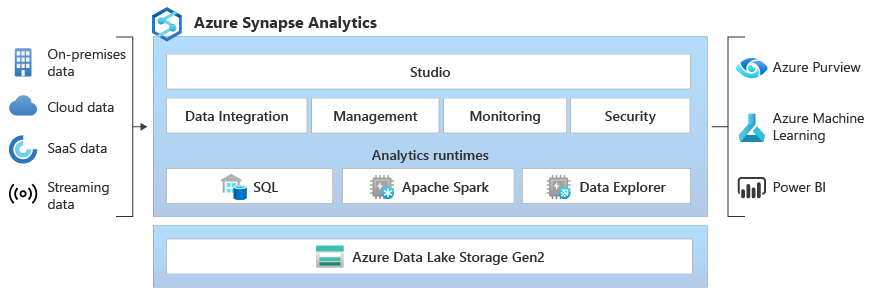

The motivation for creating this E2E scenario is to demonstrate, how SAP ERP data is practically processed using the Data-Warehouse(DWH) management components and services of Azure Synapse. This documentation is work in progress and the current plan is to continuously extend the scenarios and documentation.

The current backlog:

- Automated deployment of the scenarios

- Extended Data-Warehouse scenarios with Change Data Capture(CDC) handling

- Azure Purview integration (Link)

For the creation and implementation of the scenario three types of personas were assumed:

Bianca Basis

- SAP Basis administrator

- Maintains RFC and data authorizations for the Azure Data Factory(ADF) SAP Table Connector

- Monitors the RFC memory consumption and general performance

- Contact for SAP ERP related troubleshooting

Sabrina Sap

- DWH and BI developer

- SAP domain and data model expert

- SQL and data engineering knowledge

- PowerBI expert

- Contact person for LoB user

Daniel Data-Science

- Data-Scientist

- Fluent in Scala and Python

- Builds M/L models on SAP data

- Builds on Sabrina's curated models

Based on the personas and expertise described above, two scenarios or use-cases were defined and implemented.

This use-case is conceptually designed for rapid prototyping and piloting with SAP ERP data using Azure Synapse and Microsoft PowerBI(PBI). These prototypes and pilots would be the agile preparation for further productive DWH implementations.

Before creating the final DWH data models and data ingestion pipelines, certain SAP data model and customer specific data discovery and exploration tasks have typically to be executed:

- Identify custom columns (Z-fields)

- Filled and empty columns in SAP tables

- Identify timestamp columns for Change Data Capture

- Identify partition criteria and filters for efficient data replication

This scenario is fully integrated into Azure Synapse Workspaces using the following Azure data platform and Azure Synapse services and tools:

- Azure Data Lake Gen2

- Azure Synapse serverless SQL pools

- Azure Synapse Pipelines

- Microsoft PowerBI (Integrated into Azure Synapse Workspace)

In this scenario a Data Scientist utilizes Apache Spark and Spark Notebooks to implement machine learning models on SAP ERP data. Additional requirements are the option to use Python libraries for visualizations within the Apache Spark notebooks or to process large data volumes with Apache Spark.

The scenario was designed based on the following Azure Synapse tools and services:

- Apache Spark Pools

- Apache Spark Notebooks

- Python libraries like Pandas, Matplotlib or Seaborn

The architecture and components were selected based on the capabilities provided by the Modern Data Warehousing architecture. Each architecture layer and corresponding high-level implementation steps are described along the numbers marked in the architecture diagram:

- SAP S/4HANA on Azure Virtual Machines (VMs)

The scenario was implemented on an SAP S/4HANA demo system running on Azure. Further technical information about providing SAP S/4HANA with Virtual Machines on Azure as infrastructure can be found here.

Due to the integration via the Self hosted integration runtime, the scenario could as well be implemented for additional deployment options like SAP HANA Enterprise Cloud(HEC) or S/4HANA Private Cloud Edition (PCE) on Azure using networking options like VNet-peering.

- Self hosted Integration Runtime

The Self Hosted Integration Runtime(SHIR) establishes the secure connection between the SAP system and Azure Synapse. Technically the SAP Remote Function Call(RFC) interface and SAP Connector for .NET are used to establish the communication between the SAP system and the SHIR. Detailed information for the required SAP Netweaver configuration can be found here.

Create and configure a self-hosted integration runtime.

- Azure Synapse Pipeline built with Copy Data Tool

For data ingestion from SAP S/4HANA to Azure Data Lake Gen2 an Azure Synapse Pipeline was implemented. The data extraction interface, from the SAP ERP to the Synapse Pipeline, is the Azure Data Factory(ADF) SAP table connector. The SAP table connector enables data engineers to configure SAP ABAP tables for data extraction by the Azure Synapse Pipeline. The Copy Data tool provides the capability to efficiently configure multiple SAP tables for extraction within a single Pipeline. Data engineers have the option to mass select and preview SAP tables in a single dialog as shown in the screenshot below.

By providing a responsive design for browsing over a large number of ABAP-tables, the Copy Data Tool enables an efficient bulk configuration of the required SAP tables:

Additional ADF SAP Table connector related topics like timestamp-based change data capture (CDC) or partitioning options and strategies will be covered in the near future as extension to this initial scenario.

Further resources and additional reading about the ADF SAP Table Connector:

- SAP ABAP tables stored as parquet files on Azure Data Lake Storage (ADLS) Gen 2

After executing the Copy Data Pipeline, the SFLIGHT model related tables are stored in the compressed parquet file format in an Azure Data Lake Storage Gen2(ADLS Gen2) folder and are ready for consumption by different consumers.

Typical consumers would be Azure Synapse Pipelines, for further transformations, or for direct SQL-queries via Azure Synapse Serverless Pools could be used. An additional option is the consumption with Spark DataFrames as demonstrated in this scenario.

Not (yet) covered in the current scenarios and documentation is the potential integration with the Microsoft Common Data Model (CDM).

CDM would allow to maintain the business terms and schema information, for typical SAP model specific tables and columns like BSEG or VBAP, as metadata in addition to the raw data (table and column names) exported into the parquet file. In addition, it would be possible to maintain the relationships between extracted SAP tables. This will allow tools like Azure Data Factory or Microsoft PowerBI to read the CDM manifest file and consume the maintained metadata information as described here.

Further resources and additional reading about the first ideas and concepts about integrating SAP semantics with the Microsoft Common Data Model:

- Azure Synapse serverless SQL pool to create SQL views on parquet files

For enabling the data exploration and discovery use cases on the SAP data stored in the data lake, the parquet files were queried using Synapse serverless SQL pools and T-SQL. Key aspect for efficient and fast data exploration is the option to create SQL views with auto detection (infer) of the schema from the underlying data lake files.

-- Create SQL view from parquet file with schema auto detection.

CREATE VIEW FS1_SFLIGHT_MODEL.SBOOK AS

SELECT * FROM

OPENROWSET(

BULK 'https://adlsv2.dfs.core.windows.net/sap/FS1-TABLE-SFLIGHT/SBOOK',

FORMAT='PARQUET'

) AS [SBOOK];

- PowerBI workspace with Direct Query connection to SQL view

For creating the first report pilots and prototypes based on the SAP ERP data, a semantical data model was created with Microsoft Power BI(PBI). This data model defines the relationship between the SFLIGHT tables and adds additional semantical information like DAX(Calculated) measures, calculated fields, aggregations and hierarchies.

In this scenario the Direct Query interface was used to create the connection between the PBI data model, Azure Synapse SQL views and the underlying parquet files stored on ADLS. As well important to mention is the seamless integration of the PBI workspace into the Synapse Studio development environment.

- Create advanced visualizations leveraging Spark Dataframes and Apache Spark Notebooks

In this example a Data Engineer or Data-Scientist uses Apache Spark Notebooks to create advanced visualizations using Spark Notebooks and Python Libraries like the Advanced Data Analysis Library (Pandas).

Additional demonstrated features are queries on the parquet files using SparkSQL.

For using these services an Apache Spark pool was deployed and made available within the Synapse Studio.

Next to creating visualizations, the notebook also was used to create Spark tables using Python.

spark.sql("CREATE DATABASE IF NOT EXISTS SAPLAB_SFLIGHT")

df_sbook = spark.read.parquet('abfss://sapfs1sflight@ftasaplabadlsv2.dfs.core.windows.net/FS1-TABLE-SFLIGHT/SBOOK')

df_sbook.write.mode("overwrite").saveAsTable("SAPLAB_SFLIGHT.sbook")These Spark tables were created using Apache Spark and Python by a data engineer for easy consumption by a SQL-developer using a single SQL statement and Azure Synapse Serverless Pools:

Summarized this document provided a comprehensive overview about analytical integration scenarios and use-cases between Azure Synapse and data from an SAP ERP. The description covered the following implementation steps:

Ideally the simplified SFLight model is starting point for Microsoft partners to create their own Azure Synapse & SAP demo-systems and scenarios. Microsoft partners are as well always invited to collaborate on this document with their own solutions or extensions. In case of comments, conceptual bugs or additional requirements please feel free to create issues on GitHub or reach out to the author via LinkedIn.

🙏 Many thanks for your time and reading the document until here! 🙏