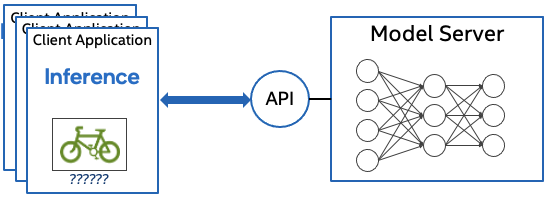

OpenVINO™ Model Server (OVMS) is a high-performance system for serving machine learning models. It is implemented in C++ for scalability and optimized for Intel hardware, so you can use the full capability of Intel® Xeon® processors or Intel’s AI accelerators and expose it over a network interface. OVMS uses the same architecture and API as TensorFlow Serving, while using OpenVINO for inference execution. The inference service is exposed over gRPC or a REST API, which makes it easy to deploy new algorithms and AI experiments.
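Because the REST interface follows the TensorFlow Serving API, a prediction request can be sketched with nothing but the Python standard library. The snippet below is a minimal illustration, not an official client: the host, port `8000`, model name `my_model`, and the input values are all assumptions chosen for the example, and the real input shape depends on the model you serve.

```python
import json
import urllib.request


def build_predict_request(host, rest_port, model_name, instances, version=None):
    """Build a TensorFlow Serving style REST predict request without sending it."""
    path = f"/v1/models/{model_name}"
    if version is not None:
        # Optionally pin a specific model version.
        path += f"/versions/{version}"
    url = f"http://{host}:{rest_port}{path}:predict"
    # Row format: one entry in "instances" per input example.
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(request, timeout=10.0):
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical deployment details, used only for illustration.
    req = build_predict_request("localhost", 8000, "my_model", [[0.0, 1.0, 2.0]])
    print(req.full_url)
```

Calling `predict(req)` against a running server should return a JSON object; in the TensorFlow Serving row format the results are expected under a `predictions` key.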

Model repositories may reside on a locally accessible file system (e.g. NFS), as well as online storage compatible with Google Cloud Storage (GCS), Amazon S3, or Azure Blob Storage.

Read the release notes to find out what’s new.

Review the Architecture concept document for more details.

Key features:

- support for multiple frameworks, such as Caffe, TensorFlow, MXNet, PaddlePaddle and ONNX

- online deployment of new model versions

- configuration updates in runtime

- support for AI accelerators, such as Intel Movidius Myriad VPUs, GPU, and HDDL

- works with bare metal hosts as well as Docker containers

- model reshaping in runtime

- directed acyclic graph (DAG) scheduler - connecting multiple models to deploy complex processing solutions and reduce data transfer overhead

- custom nodes in DAG pipelines - allowing model inference and data transformations to be implemented with a custom node C/C++ dynamic library

- serving stateful models - models that operate on sequences of data and maintain their state between inference requests

- binary format of the input data - data can be sent in JPEG or PNG formats to reduce traffic and offload the client applications

- model caching - cache the models on first load and re-use models from cache on subsequent loads

- metrics - compatible with the Prometheus standard
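As an illustration of the binary input feature listed above, the sketch below wraps encoded image bytes in a JSON request body. It assumes the REST interface accepts the TensorFlow Serving convention of passing binary data as a base64 string under a `b64` key; the helper name `build_binary_payload` is hypothetical, and whether a given model accepts binary input depends on its configuration.

```python
import base64
import json


def build_binary_payload(encoded_images):
    """Wrap JPEG/PNG byte strings in a TensorFlow Serving style JSON body.

    Each image is sent in its compressed form and base64-encoded under the
    "b64" key, so the client does not have to decode or resize it first.
    """
    instances = [
        {"b64": base64.b64encode(image_bytes).decode("ascii")}
        for image_bytes in encoded_images
    ]
    return json.dumps({"instances": instances})
```

In practice the byte strings would come from reading image files in binary mode, for example `open(path, "rb").read()`, and the resulting string would be sent as the body of a `:predict` request.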

Note: OVMS has been tested on Red Hat and Ubuntu. The latest publicly released Docker images are based on Ubuntu and UBI. They are stored in:

A demonstration of how to use OpenVINO Model Server can be found in our quick-start guide. For more information on using Model Server in various scenarios, see the following resources:

- Speed and Scale AI Inference Operations Across Multiple Architectures - webinar recording

- Capital Health Improves Stroke Care with AI - use case example

If you have a question, a feature request, or a bug report, feel free to submit a GitHub issue.

* Other names and brands may be claimed as the property of others.