Embedding method for measuring uncertainty

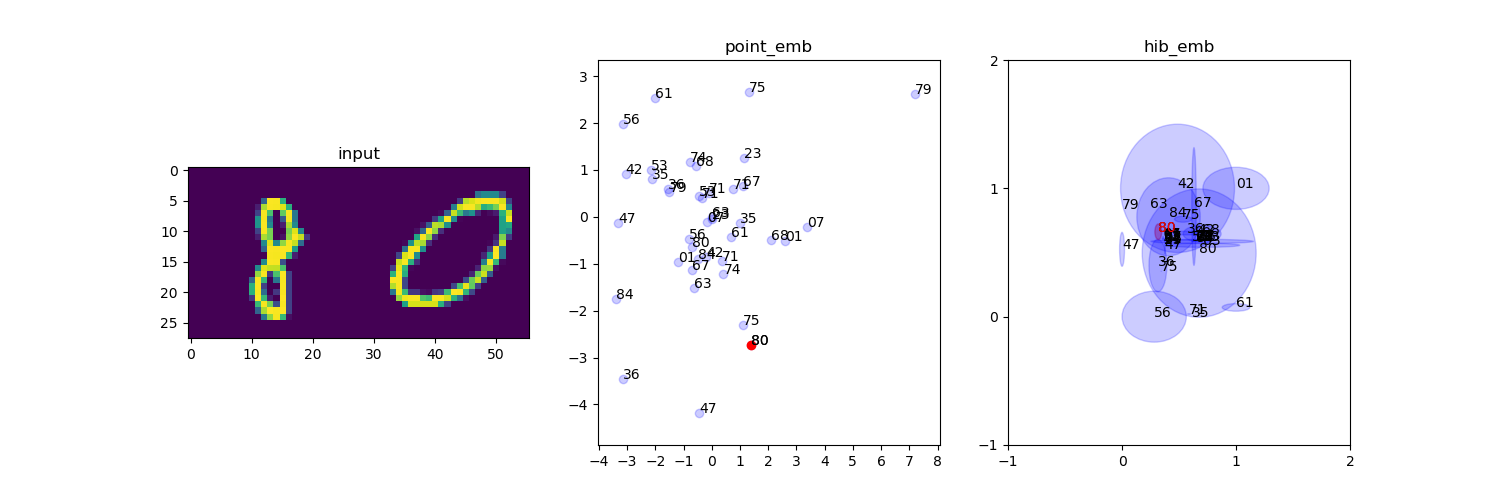

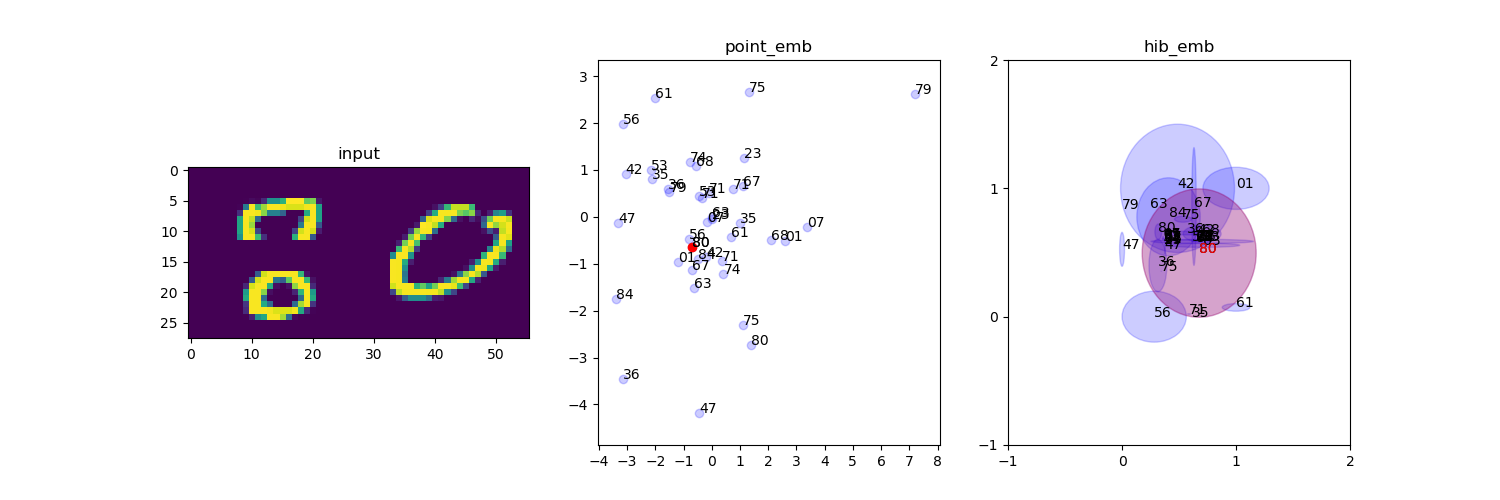

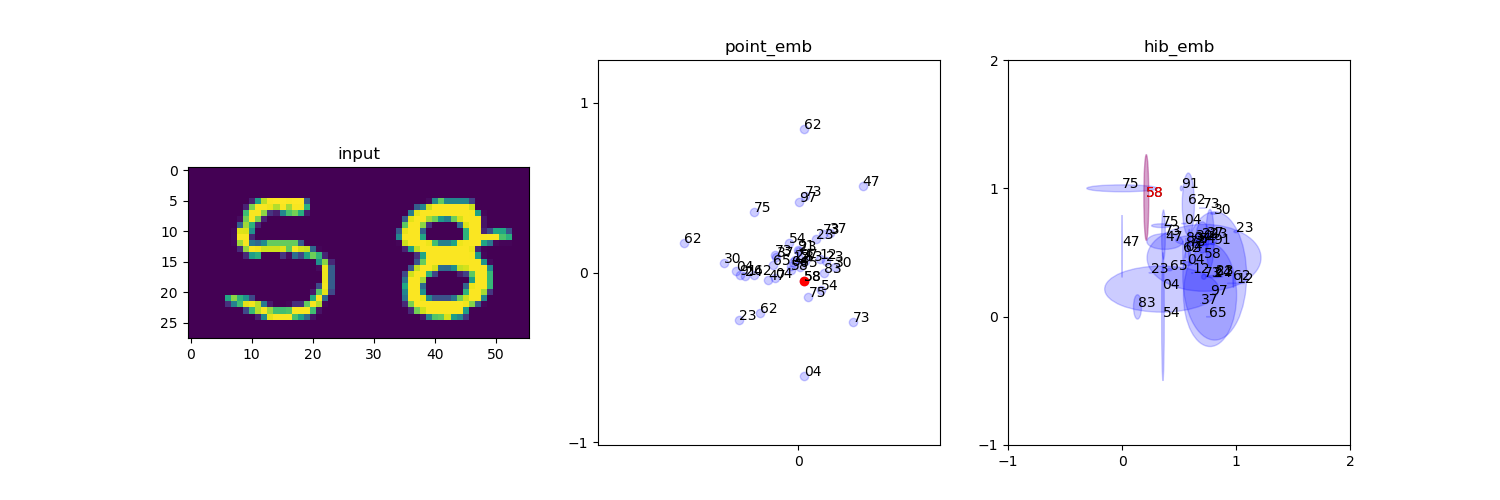

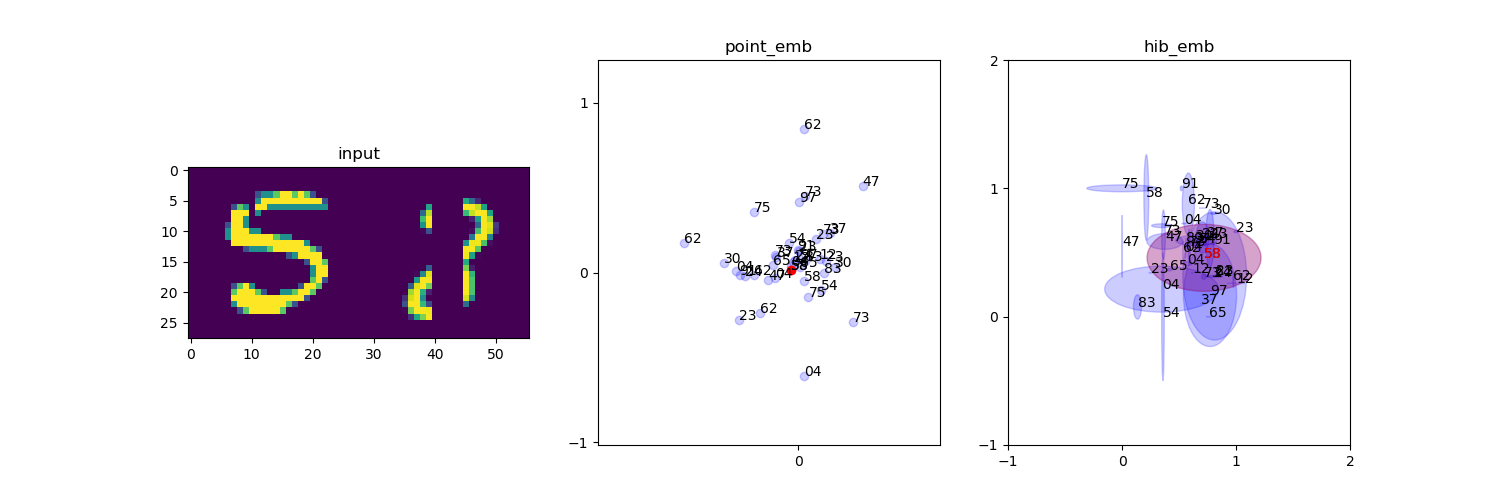

Often the distance between points in the embedding space is used as a proxy for match confidence. However, this can fail to represent the uncertainty that arises when the input is ambiguous, e.g., due to occlusion or blurriness.

This work addresses this issue and explicitly models the uncertainty by hedging the location of each input in the embedding space.
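To make the idea concrete, here is a minimal NumPy sketch, not the code in this repository. It assumes each input is embedded as a diagonal Gaussian (a mean and a standard deviation per dimension) and that the match probability between two sampled points is a sigmoid of their negative scaled distance. The function names and the parameters `a`, `b`, and `num_samples` are hypothetical and chosen only for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sample_embedding(mu, sigma, num_samples, rng):
    # Draw z = mu + sigma * eps with eps ~ N(0, I); result has shape (num_samples, dim).
    eps = rng.standard_normal((num_samples, mu.shape[0]))
    return mu + sigma * eps


def match_probability(mu1, sigma1, mu2, sigma2, a=1.0, b=0.0,
                      num_samples=8, seed=0):
    # Monte Carlo estimate of the probability that two inputs match.
    # a (scale) and b (offset) are assumed values here, not learned ones.
    rng = np.random.RandomState(seed)
    z1 = sample_embedding(mu1, sigma1, num_samples, rng)
    z2 = sample_embedding(mu2, sigma2, num_samples, rng)
    # Distances between every pair of samples, shape (num_samples, num_samples).
    dists = np.linalg.norm(z1[:, None, :] - z2[None, :, :], axis=-1)
    return float(np.mean(sigmoid(-a * dists + b)))


mu = np.zeros(2)
confident = match_probability(mu, np.zeros(2), mu, np.zeros(2))
uncertain = match_probability(mu, np.ones(2), mu, np.ones(2))
print(confident, uncertain)
```

With identical means, the zero-variance pair gets the highest match probability this sigmoid allows, while the high-variance pair scores lower. The embedding spread lowers match confidence even though the distance between the means is unchanged.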

- numpy 1.16.3

- tensorflow 2.0

- tfp-nightly 0.7.0

- matplotlib 3.0.3

- sklearn 0.21.1

- train

python train.py [option]

- visualize

python visualize.py [option]

In the paper, see 2 - 1 - (3)

In the paper, see 2 - 3 - (8)

- Input image 80: clean vs occlusion

- Input image 58: clean vs occlusion