This repository shares the documentation for the RaDelft dataset, as well as the code for reproducing the results of [1].

Example video from our dataset, with the camera on top, the lidar on the right, and the point cloud from [1] on the left.


[Original paper](Coming Soon)

The RaDelft dataset is a large-scale, real-life multi-sensor dataset recorded in various driving scenarios. It provides radar data at different processing levels, synchronised with lidar, camera and odometry data.

The outputs of the following sensors have been recorded:

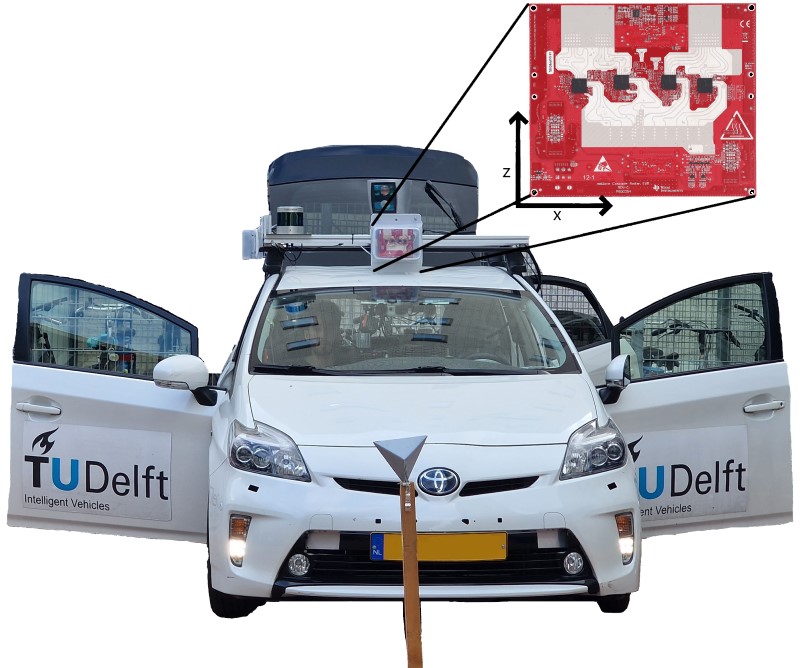

- A Texas Instruments MMWCAS-RF-EVM MIMO radar board mounted on the roof.

- A RoboSense Ruby Plus lidar (128-layer rotating lidar) mounted on the roof.

- A video camera mounted on the windshield (1936 × 1216 px, ~30 Hz).

- The ego vehicle's odometry (filtered combination of RTK GPS, IMU, and wheel odometry, ~100 Hz).
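
The sensors above run at different rates (camera ~30 Hz, odometry ~100 Hz), so using them together requires pairing samples across streams. A common approach is nearest-timestamp association. The sketch below is a generic illustration, assuming each sensor provides a sorted list of timestamps in seconds; it is not necessarily how the RaDelft data were synchronised, and `nearest_index` and `associate` are hypothetical helpers.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Return the index of the entry in the sorted list `timestamps` closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    before, after = timestamps[i - 1], timestamps[i]
    return i - 1 if t - before <= after - t else i


def associate(reference_ts, other_ts, max_gap):
    """Pair each reference timestamp with the nearest sample of another sensor.

    Returns (reference_index, other_index) pairs; reference samples with no
    match within `max_gap` seconds are dropped.
    """
    pairs = []
    for ref_idx, t in enumerate(reference_ts):
        j = nearest_index(other_ts, t)
        if abs(other_ts[j] - t) <= max_gap:
            pairs.append((ref_idx, j))
    return pairs


# Synthetic timestamps at the nominal rates listed above.
camera_ts = [k / 30 for k in range(6)]       # ~30 Hz camera
odometry_ts = [k / 100 for k in range(20)]   # ~100 Hz odometry
pairs = associate(camera_ts, odometry_ts, max_gap=0.006)
```

The `max_gap` threshold keeps frames unpaired rather than matching them to a stale sample when one stream has a dropout.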

All sensors were jointly calibrated. See the sensor-setup figure for a general overview of the sensor configuration.
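
Joint calibration means the rigid transforms (extrinsics) between sensor frames are known, so points measured by one sensor can be expressed in another sensor's frame, for example to overlay lidar points on the radar point cloud. A minimal sketch, assuming an extrinsic is given as a 4×4 homogeneous matrix `[R | t]`; the matrix values below are made-up placeholders, not the RaDelft calibration, and the format of the released calibration files is not assumed here.

```python
def transform_points(T, points):
    """Apply a 4x4 homogeneous transform T (nested lists, row-major) to 3D points."""
    return [
        tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))
        for x, y, z in points
    ]


def invert_rigid(T):
    """Invert a rigid transform [R | t]: the inverse is [R^T | -R^T t]."""
    R_t = [[T[c][r] for c in range(3)] for r in range(3)]  # transpose of R
    t = [T[r][3] for r in range(3)]
    t_inv = [-sum(R_t[r][k] * t[k] for k in range(3)) for r in range(3)]
    return [R_t[r] + [t_inv[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


# Placeholder extrinsic (NOT the real calibration): 90 degree yaw plus an offset.
T_radar_from_lidar = [
    [0.0, -1.0, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, -0.2],
    [0.0, 0.0, 0.0, 1.0],
]
lidar_points = [(1.0, 0.0, 0.0), (0.0, 2.0, 1.0)]
radar_points = transform_points(T_radar_from_lidar, lidar_points)
```

`invert_rigid` uses the closed-form inverse of a rotation plus translation instead of a general matrix inverse, which is sufficient when the calibration is given in the opposite direction (e.g. lidar-from-radar).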

The dataset is made freely available for non-commercial research purposes only. Eligibility to use the dataset is limited to Master's and PhD students, and staff of academic and non-profit research institutions. The dataset is hosted on 4TU.ResearchData.

By requesting access, the researcher agrees to use and handle the data according to the license. See also our privacy statement.

After validating the researcher's affiliation with a research institution, we will send an email containing password-protected download link(s) for the RaDelft dataset. Sharing these links and/or the passwords is strictly forbidden (see the license).

In case of questions or problems, please send an email to i.roldanmontero at tudelft.nl.

Frequently asked questions about the license:

Q: Is it possible for MSc and PhD students to use the dataset if they work/cooperate with a for-profit organization?

A: The current license permits an MSc/PhD student to use the dataset on the computing facilities (storage, processing) of his/her academic institution for research towards his/her degree, even if this MSc/PhD student is (also) employed by a company. The license does not permit the use of the dataset on the computing facilities (storage, processing) of a for-profit organization.

This example shows how to create the dataloaders for the single-frame and multi-frame versions.
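
As a hedged illustration of the difference between the two versions (the example's own loader is not reproduced here), the sketch below assumes that a multi-frame sample consists of the current frame plus the preceding frames of the same scene. The class name `FrameWindowIndex` and the windowing rule are assumptions, not the repository's API. The class follows the map-style dataset protocol (`__len__`/`__getitem__`), so the returned ids could be replaced by loaded data and wrapped in a PyTorch `DataLoader`.

```python
class FrameWindowIndex:
    """Hypothetical index of single- or multi-frame samples.

    `scenes` maps a scene id to its ordered list of frame ids. With
    num_frames == 1 every frame is its own sample (single-frame version);
    with num_frames == N a sample is the current frame plus the N - 1
    preceding frames of the same scene (assumed multi-frame version).
    Frames without enough history are skipped, so windows never cross
    scene boundaries.
    """

    def __init__(self, scenes, num_frames=1):
        if num_frames < 1:
            raise ValueError("num_frames must be >= 1")
        self.samples = []
        for scene_id, frames in scenes.items():
            for end in range(num_frames - 1, len(frames)):
                window = tuple(frames[end - num_frames + 1 : end + 1])
                self.samples.append((scene_id, window))

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, idx):
        # A real loader would read the radar cubes / point clouds of every
        # frame in the window here; this sketch only returns the ids.
        return self.samples[idx]


scenes = {2: [0, 1, 2, 3], 6: [10, 11]}
single = FrameWindowIndex(scenes, num_frames=1)
multi = FrameWindowIndex(scenes, num_frames=3)
```

Skipping frames without full history is one design choice; padding by repeating the first frame is a common alternative that keeps every frame usable.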

This example notebook shows how to load and plot the 3D point clouds of the lidar and of the radar obtained with the NN detector.

Note

Network-generated point clouds are not provided for all scenes. Please read the paragraph below.

Network-generated point clouds are only provided for scenes 2 and 6, since scenes 1, 3, 4, 5 and 7 are used for training. If the network-generated point clouds are needed for the training scenes, they can be generated with the pre-trained models available with the data. To do so, call the generate_point_clouds method of network_time.py with params["test_scenes"] set to [1,2,3,4,5,6,7]. The only other change needed is to point to the path of the pre-trained network checkpoint available in the data repository.
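
A hedged sketch of the parameter change described above, assuming `params` is a plain dictionary as the `params["test_scenes"]` syntax suggests. The `checkpoint_path` key, the helper name `params_for_all_scenes`, and the checkpoint file name are hypothetical placeholders; the way network_time.py actually receives the checkpoint path may differ.

```python
import copy

ALL_SCENES = [1, 2, 3, 4, 5, 6, 7]
TRAIN_SCENES = [1, 3, 4, 5, 7]  # training scenes, as stated in this README
# Scenes whose network-generated point clouds are shipped with the data.
RELEASED_SCENES = [s for s in ALL_SCENES if s not in TRAIN_SCENES]


def params_for_all_scenes(params, checkpoint_path):
    """Return a copy of `params` set up to generate point clouds for every scene.

    "test_scenes" is the key named in this README; "checkpoint_path" is a
    HYPOTHETICAL key name, check network_time.py for the real one.
    """
    new_params = copy.deepcopy(params)  # leave the caller's config untouched
    new_params["test_scenes"] = list(ALL_SCENES)
    new_params["checkpoint_path"] = checkpoint_path  # hypothetical key
    return new_params


base_params = {"test_scenes": [2, 6]}
all_scene_params = params_for_all_scenes(base_params, "pretrained_model.ckpt")
```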

Warning

The velocity saved in the point clouds does not use the unambiguous Doppler extension algorithm. To use the extension, all the Doppler cubes must be generated, which would take up too much space in the data repository. To generate the required matrices, run generateRadarCubeFFTs.m with the saveDopplerFold parameter set to 1, then pass the path to the Doppler folder to the cube_to_pointcloud function in data_preparation.py. Moreover, the current algorithm for unambiguous Doppler extension is only valid when a single target occurs in a range-Doppler cell.
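
The ambiguity this warning refers to follows from the standard FMCW relation v_max = λ / (4 T_c), where λ is the carrier wavelength and T_c the Doppler sampling interval (for a TDM-MIMO radar, the time between chirps of the same transmitter). Any radial velocity outside [-v_max, v_max) is aliased back into that interval, so a measured velocity v_meas is consistent with every v_meas + 2k·v_max for integer k. The sketch below only illustrates this folding with made-up numbers; it is not the extension algorithm implemented in the repository, which selects among these candidates in a way not described in this README.

```python
def max_unambiguous_velocity(wavelength_m, chirp_interval_s):
    """v_max = lambda / (4 * T_c), with T_c the Doppler sampling interval."""
    return wavelength_m / (4.0 * chirp_interval_s)


def wrap_velocity(v, v_max):
    """Fold a true radial velocity into the measurable interval [-v_max, v_max)."""
    return (v + v_max) % (2.0 * v_max) - v_max


def unfold_candidates(v_meas, v_max, k_range=range(-2, 3)):
    """All velocities v_meas + 2*k*v_max that alias to the same measurement."""
    return [v_meas + 2.0 * k * v_max for k in k_range]


# Illustrative numbers only (not the RaDelft radar configuration).
v_max = max_unambiguous_velocity(wavelength_m=0.004, chirp_interval_s=1e-4)
v_meas = wrap_velocity(13.0, 10.0)          # a 13 m/s target seen with v_max = 10 m/s
candidates = unfold_candidates(v_meas, 10.0)
```

Since every candidate produces the same Doppler bin, resolving the ambiguity needs extra information per cell, which is consistent with the single-target limitation mentioned above.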

Note

Labeling of the data is in progress to enable classification and segmentation algorithms. Labels will be released in future updates.

- The development kit is released under the Apache License, Version 2.0.

- The dataset can be used by accepting the Research Use License.

Important

The paper explaining all the contents of the repository in detail is under review at IEEE Transactions on Radar Systems. The pre-print is available at https://arxiv.org/abs/2406.04723