- Sketch-2-Paint

- About The Project

- Getting Started

- Theory and Approach

- Usage

- Results and Demo

- Troubleshooting

- Future Works

- Contributors

- Acknowledgements and Resources

- License

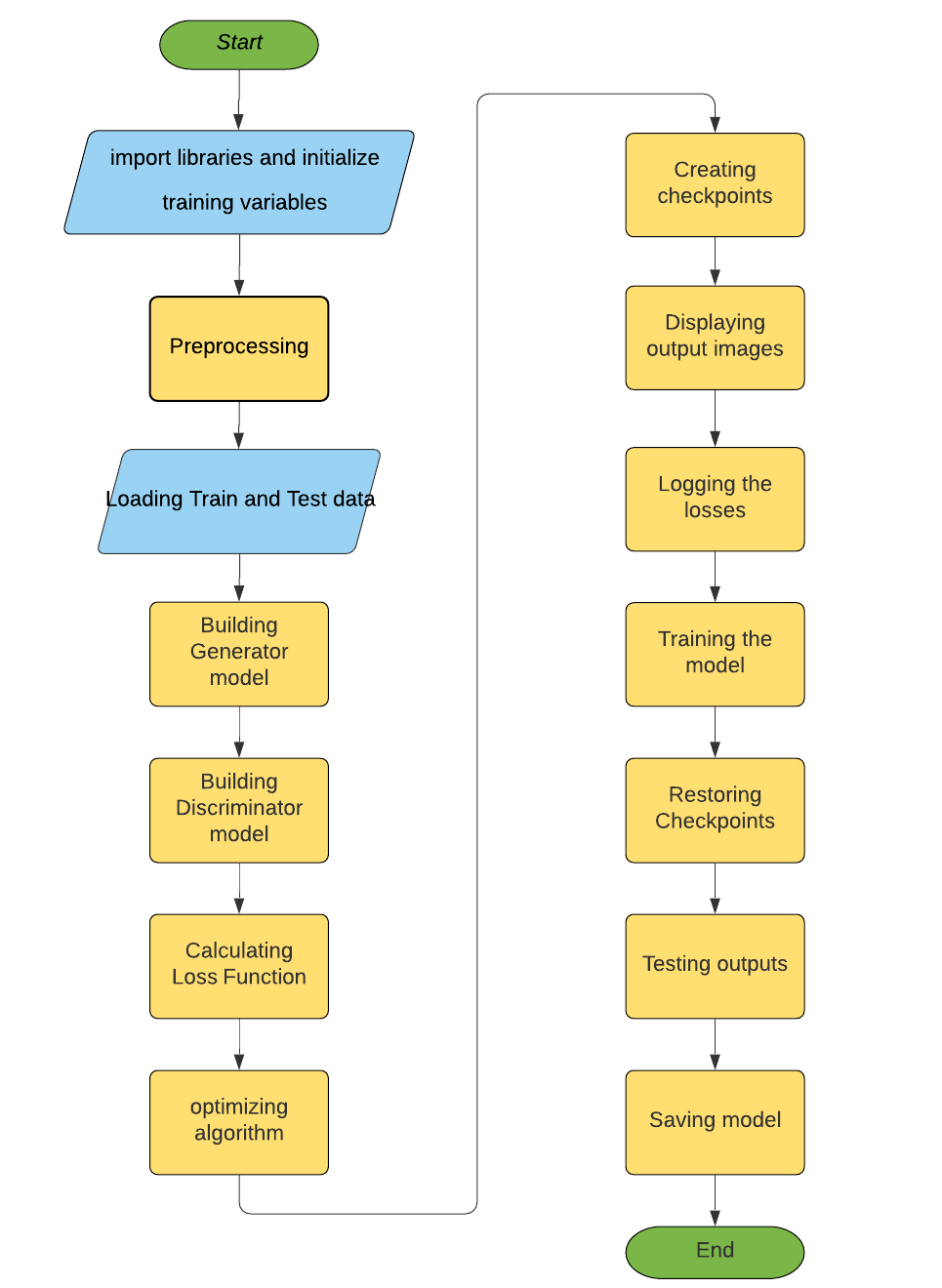

The aim of this project is to build a Conditional Generative Adversarial Network (cGAN) that accepts a 256x256 px black-and-white sketch and predicts a colored version of the image without knowing the ground truth.

Sketch-to-color image generation is an image-to-image translation task, implemented here with a Conditional Generative Adversarial Network as described in *Image-to-Image Translation with Conditional Adversarial Networks*.

Refer to our documentation

This section contains the technologies we used for this project.

- `assets`: folder containing GIFs
- `notes`: project notes
  - `3b1b_notes`
  - `deep_learning_notes`
  - `face_aging`
  - `gans`
  - `linear_algebra_notes`
- `report`: project report
- `resources`: list of all resources
- `src`: source code files
  - `builddiscriminator.py`
  - `buildgenerator.py`
  - `runmodel.py`
- `LICENSE`: MIT license
- `README.md`: this readme
- `sketch_2_paint.ipynb`: Colab notebook
- `sketch_2_paint_v2.ipynb`: Colab notebook with better results

To download and use this code, the minimum requirements are:

- Python 3.6 and above

- pip 19.0 or later

- Windows 7 or later (64-bit)

- Microsoft Visual C++ Redistributable for Visual Studio 2015, 2017 and 2019

- TensorFlow 2.2 and above

- GPU support requires a CUDA®-enabled card

- Clone the repo

`git clone https://github.com/KunalA18/Sketch-2-Paint`

Generative Adversarial Networks, or GANs for short, are an approach to generative modeling using deep learning methods, such as convolutional neural networks.

Generative modeling is an unsupervised learning task in machine learning that involves automatically discovering and learning the regularities or patterns in input data in such a way that the model can be used to generate or output new examples that plausibly could have been drawn from the original dataset.

GANs are a clever way of training a generative model by framing the problem as a supervised learning problem with two sub-models: the generator, which we train to generate new examples, and the discriminator, which tries to classify examples as either real (from the domain) or fake (generated). The two models are trained together in an adversarial, zero-sum game until the discriminator is fooled about half the time, meaning the generator is producing plausible examples.
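The adversarial game described above can be sketched with the standard binary cross-entropy GAN losses. This is a minimal illustration in plain Python, not the project's training code: the function names are hypothetical, and the losses used in `src` may include extra terms (the cited paper, for example, adds an L1 term to the generator loss).

```python
import math

def bce(label, prob, eps=1e-7):
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(prob) + (1.0 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real -> 1 and fake -> 0."""
    return bce(1.0, d_real) + bce(0.0, d_fake)

def generator_loss(d_fake):
    """The generator wants the discriminator to call its output real (1)."""
    return bce(1.0, d_fake)

# A confident discriminator: small loss for D, large loss for G.
print(discriminator_loss(d_real=0.9, d_fake=0.1))
print(generator_loss(d_fake=0.1))

# A discriminator that is "fooled about half the time" outputs 0.5 everywhere.
print(discriminator_loss(d_real=0.5, d_fake=0.5))
```

When the discriminator outputs 0.5 for every input, its loss settles at 2 ln 2 (about 1.386), which is the balance point the paragraph above refers to.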

GANs are an exciting and rapidly changing field, delivering on the promise of generative models in their ability to generate realistic examples across a range of problem domains, most notably in image-to-image translation tasks such as translating photos of summer to winter or day to night, and in generating photorealistic photos of objects, scenes, and people that even humans cannot tell are fake.

Once the requirements are checked, you can easily download this project and use it on your machine.

NOTE:

1 - Change the dataset path on line #12 to match your machine environment. You can download the dataset from Kaggle at https://www.kaggle.com/ktaebum/anime-sketch-colorization-pair.

2 - GANs are resource-intensive models, so if you run into OutOfMemory or similar errors, try adjusting the variables on lines #15 to #19 to suit your hardware.
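As a rough guide to why smaller settings help with OutOfMemory errors, the sketch below estimates the size of one input batch. It assumes that batch size is among the tunable variables, that images are 256x256 with 3 channels, and that values are stored as 32-bit floats; the helper name is hypothetical and not part of the project.

```python
def batch_megabytes(batch_size, height=256, width=256, channels=3, bytes_per_value=4):
    """Approximate size in MiB of one image batch stored as float32."""
    return batch_size * height * width * channels * bytes_per_value / (1024 ** 2)

# Compare a few candidate batch sizes.
for batch_size in (64, 16, 4):
    print(batch_size, batch_megabytes(batch_size))
```

The input batch is only a small part of the total: every layer of the generator and discriminator also stores activations and gradients that scale with batch size, so lowering it reduces memory use throughout the network.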


After execution completes, the generator model is saved in the project's root directory as `AnimeColorizationModelv1.h5`. You can use this model to generate colored images directly from black-and-white images in just a few seconds. Note that the training images are digitally drawn sketches, so use images with a pure white background for the best results.
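One possible way to use the saved generator is sketched below. Several details are assumptions rather than facts about this project: that the model takes a 3-channel 256x256 input, that pixels were scaled to [-1, 1] during training (a common convention for this kind of model), and that the sketch is a PNG file. The function names are hypothetical.

```python
def to_model_range(pixel):
    """Map pixel values from [0, 255] to [-1, 1] (assumed training convention)."""
    return pixel / 127.5 - 1.0

def to_pixel_range(value):
    """Map model outputs from [-1, 1] back to [0, 255]."""
    return (value + 1.0) * 127.5

def colorize(sketch_path, output_path, model_path="AnimeColorizationModelv1.h5"):
    """Load the saved generator and write a colored version of one sketch."""
    import tensorflow as tf  # imported lazily so the helpers above work without it

    generator = tf.keras.models.load_model(model_path, compile=False)

    # Read the sketch (assumed PNG) and resize it to the expected input size.
    image = tf.io.decode_png(tf.io.read_file(sketch_path), channels=3)
    image = tf.image.resize(tf.cast(image, tf.float32), [256, 256])
    batch = to_model_range(image)[tf.newaxis, ...]  # add a batch dimension

    # Run the generator and convert its output back to 8-bit pixels.
    colored = generator(batch, training=False)[0]
    colored = tf.clip_by_value(to_pixel_range(colored), 0.0, 255.0)
    tf.io.write_file(output_path, tf.io.encode_png(tf.cast(colored, tf.uint8)))
```

A call such as `colorize("my_sketch.png", "my_sketch_colored.png")` (placeholder file names) would then write the result next to the input. If the colors look washed out or inverted, the scaling assumption is the first thing to check against the training code.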

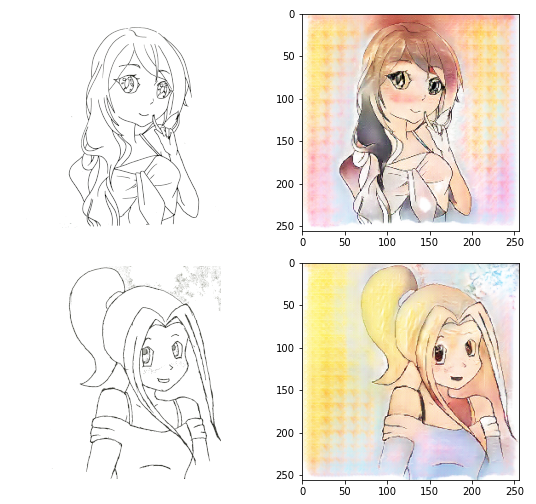

Some results from hand-drawn sketches are shown below:

If you plan to use a cloud environment such as Google Colab, keep in mind that training will take a long time, as GANs are computationally heavy. Google Colab has an absolute timeout of 12 hours, after which the notebook kernel is reset. Mount Google Drive and save checkpoints at regular intervals so that you can resume training from where it left off before the timeout.
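The save-and-resume pattern can be sketched as follows. This is a simplified illustration in plain Python that only records the epoch number; a real run would also save the model weights at the same point, for example with `tf.train.CheckpointManager`. On Colab, `checkpoint_dir` would be a folder under the mounted Drive (after `from google.colab import drive` and `drive.mount("/content/drive")`). The function names, file name, and `stop_after` parameter are hypothetical.

```python
import json
import os
import tempfile

def latest_epoch(checkpoint_dir):
    """Return the last epoch recorded in checkpoint_dir, or 0 if none."""
    state_file = os.path.join(checkpoint_dir, "state.json")
    if not os.path.exists(state_file):
        return 0
    with open(state_file) as f:
        return json.load(f)["epoch"]

def save_state(checkpoint_dir, epoch):
    """Record progress; a real run would also save model weights here."""
    os.makedirs(checkpoint_dir, exist_ok=True)
    with open(os.path.join(checkpoint_dir, "state.json"), "w") as f:
        json.dump({"epoch": epoch}, f)

def train(checkpoint_dir, total_epochs, save_every=5, stop_after=None):
    """Resume from the last saved epoch; return the epochs run this session."""
    start = latest_epoch(checkpoint_dir)
    ran = 0
    for epoch in range(start + 1, total_epochs + 1):
        # ... one epoch of generator/discriminator updates would go here ...
        ran += 1
        if epoch % save_every == 0 or epoch == total_epochs:
            save_state(checkpoint_dir, epoch)
        if stop_after is not None and ran >= stop_after:
            break  # simulate the runtime being reset mid-training
    return ran

with tempfile.TemporaryDirectory() as demo_dir:
    train(demo_dir, total_epochs=20, stop_after=12)  # interrupted session
    print("resuming after epoch", latest_epoch(demo_dir))
    train(demo_dir, total_epochs=20)                 # picks up from the last save
    print("finished at epoch", latest_epoch(demo_dir))
```

Work done after the last save is lost when the session ends, so a shorter `save_every` interval trades some extra Drive writes for less repeated training.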

We enjoyed working on GANs during this project and plan to keep exploring the field for further applications and new projects. Some directions in which we think this project can grow, or serve as a base, are listed below.

- Trying different datasets to learn how to preprocess different types of images and build models specific to those input types.

- This project performs image-to-image translation on individual images. The model could be extended to process black-and-white sketch video frames and generate colored videos.

- SRA VJTI Eklavya 2021

- Referred this for understanding the use of TensorFlow

- Completed these 4 courses to understand Deep Learning concepts like Convolutional Neural Networks and to learn how to build a DL model

- Referred this for understanding code statements

- Referred this to understand the concept of GANs

- Special thanks to our awesome mentors Saurabh Powar and Chaitravi Chalke, who always helped us during our project journey

The License used in this project.